Motion capture has long forced actors to don blue lycra suits in order to give animators their artistic interpretation of a character. But the race is on to make this process easier, faster, cheaper and less awkward for the actor. One such advance is Organic Motion's recent demonstration of markerless motion capture.

Organic Motion recently announced a major advance in motion capture at the Game Developers Conference. The system is remarkable because, according to the company, it is the first markerless full-body motion capture system. Organic Motion's technology improves the motion capture workflow by producing clean, immediately usable data that can be imported into Autodesk MotionBuilder, Autodesk's leading real-time character animation application, as well as into custom software applications. It does this without special suits or markers. As a result, the system is designed to let animators themselves, or performers in costume, be motion captured or interact with digital characters, props and environments.

Andrew Tschesnok, founder and the main force behind the technology, came to motion capture about four and a half years ago. Before that he developed and launched the startup World Wide Publishing, Inc., which was later acquired by publicly traded YouthStream Media Networks, and worked at Discreet Logic on the flame system and its networking and storage development. Tschesnok originally wanted to develop a system that addressed balancing and animating characters. The idea was to study real people and let a computer with artificial learning create autonomous characters that had real balance, so that when they were pushed they would, for example, auto-correct their centres of gravity. To do this he needed some motion capture technology. As it happened, nothing available was intelligent enough for Tschesnok, so he set out to solve motion capture first, and the rest, as they say, is history. Tschesnok has never gone back; his entire research effort is now focused on motion capture. "I got tricked into it. It must be an easy problem to solve, hey, what if we don't use markers to solve this problem," he jokes. Now, "nearly 4 years later we got the prototype working, …introduced the technology and we have a beta in July, with a September release date."

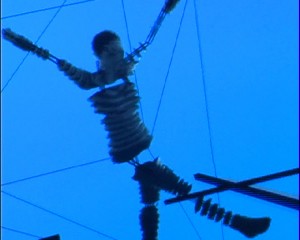

The Organic Motion system works best when the background of the capture stage is a relatively flat, even colour, which suits anyone wanting to shoot greenscreen. While the background may be flat grey or green, the actors in front of it can wear normal clothes or costumes. This is radically different from previous systems, which required odd blue lycra body suits studded with numerous ping-pong balls.
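A flat, even background is what makes it practical to separate the performer from the stage in each camera view. The sketch below shows generic background subtraction on grayscale frames (the article notes the cameras work in black and white): each pixel is compared with an image of the empty stage, and pixels that differ by more than a threshold are marked as belonging to the performer. This is an assumed, textbook technique, not Organic Motion's published method, and the function name `silhouette_mask` and the default `threshold` are hypothetical.

```python
def silhouette_mask(frame, background, threshold=25):
    """Return a binary mask: 1 where the frame differs from the empty-stage background.

    Hypothetical helper for illustration. frame and background are equal-sized
    2D lists of 8-bit grayscale values (0-255).
    """
    if len(frame) != len(background) or any(
        len(f_row) != len(b_row) for f_row, b_row in zip(frame, background)
    ):
        raise ValueError("frame and background must have the same dimensions")
    # A pixel counts as "performer" only if it departs clearly from the flat
    # background; small differences (sensor noise, slight shading) are ignored.
    return [
        [1 if abs(p - b) > threshold else 0 for p, b in zip(f_row, b_row)]
        for f_row, b_row in zip(frame, background)
    ]


# Flat grey stage (value 128) with a darker performer in the middle.
background = [[128] * 4 for _ in range(3)]
frame = [
    [128, 128, 128, 128],
    [128, 40, 45, 128],
    [128, 128, 130, 128],
]
print(silhouette_mask(frame, background))
```

The flatter and more even the background, the lower the threshold can be set without noise leaking into the mask, which is one plausible reason such systems prefer a uniform grey or green backdrop.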

The innovation lies not only in the system being markerless but also in the clean data stream it generates. Existing motion capture technologies have inherent shortcomings and high operating costs that limit their usefulness for many projects. Many systems require a trained technician to operate them and subjects to wear cumbersome body suits, and set-up and calibration may take hours. The output from some current systems then requires many more hours of cleaning to correct occlusions and inconsistencies in the data before it can be used to create professional animation. The Organic Motion system addresses calibration time, markers and data clean-up.

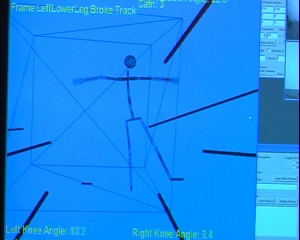

The system uses multiple cameras and a simple calibration procedure that takes minutes, not hours. It works out each person's body shape and size as they walk onto the stage, and even without scanning the person beforehand or using any markers, the system is accurate to 1mm over a 4m x 4m stage.

In traditional systems, markers are constantly being covered by other limbs or body parts. The clean-up breakthrough is avoiding marker occlusion, which is the main problem with marker systems: losing and re-acquiring dots is a huge source of data inaccuracies. By not using markers, the company avoids this problem and tends to produce much better, vastly 'cleaner' results. Organic Motion assumes a constrained biped (or quadruped) motion model. In other words, it has some 'laws and logic' about where an arm can go, which predictively reduces the possible limb movements based on real-world freedom of movement. But as Tschesnok points out, "this is only part of our solution." The company identifies and tracks the limbs in the first place using a completely different approach from point tracking. Not unlike optical flow analysis, the software performs texture tracking and contour point tracking along with other computer vision algorithms, and literally looks at every pixel in the images from the set of cameras. This combination of cognitive predictive tracking and computer vision allows the actor to do almost anything on stage and still get a clean result. The system benefits from outfits with more texture rather than less, and very loose clothing can cause issues, but as a rule of thumb "normal street clothing works really well," says Tschesnok.
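The 'laws and logic' idea, using anatomical limits to narrow where a limb can be, can be illustrated with a minimal sketch. It assumes a single joint angle, a constant-velocity prediction from the previous two frames, and a hypothetical elbow range of 0 to 150 degrees; the article does not describe the real system's body model, limits or prediction scheme, so every name and number here is illustrative. The predicted angle is clamped to the joint's range, and only a narrow window of angles around it is handed to the image-matching step.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JointLimit:
    """Allowed range of one joint angle, in degrees (hypothetical values)."""
    name: str
    min_deg: float
    max_deg: float


# Illustrative limit only; not taken from Organic Motion's model.
ELBOW = JointLimit("elbow_flexion", 0.0, 150.0)


def predict_angle(prev_deg, curr_deg, limit):
    """Constant-velocity guess for the next frame, clamped to the joint's range."""
    predicted = curr_deg + (curr_deg - prev_deg)
    return max(limit.min_deg, min(limit.max_deg, predicted))


def search_window(prev_deg, curr_deg, limit, tolerance_deg=10.0):
    """Angles the image-matching step would test: a band around the prediction,
    never extending outside what the joint can physically do."""
    centre = predict_angle(prev_deg, curr_deg, limit)
    low = max(limit.min_deg, centre - tolerance_deg)
    high = min(limit.max_deg, centre + tolerance_deg)
    return low, high


# An elbow bending quickly towards full flexion: the naive prediction of
# 160 degrees is impossible, so it is clamped to the 150-degree limit.
print(predict_angle(130.0, 145.0, ELBOW))
print(search_window(130.0, 145.0, ELBOW))
```

Shrinking the search in this way is what lets a predictive model complement, rather than replace, the per-pixel image analysis: the vision step still decides where the limb is, but it only has to consider physically plausible poses.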

"People are so excited about it, and in so many industries, with so many ideas on how to use this technology we had not even thought of."

The company offers a turnkey solution, and Organic Motion is a development partner of Autodesk. The system has been integrated first with Autodesk MotionBuilder, with more integrations to follow. It is estimated to sell for $80,000 as a complete turnkey system, including ten cameras and the processing unit. The ten cameras are special FireWire cameras that work in black and white, but no individual camera is especially expensive. A flat background is also provided.

Because the system provides not only per-frame motion capture data but also 3D mesh and texture scanning data, a colour version is in development for the next release.

Ease of use has always been a key factor for the company. Tschesnok is keen to see studio animators jump up from their workstations and block out their own motion capture, then sit down to examine and work with it without clean-up or complex calibration. And if the capture is not right, it is easy and fast to just go again!