Day two at FMX 2011 brought a major announcement from Weta Digital and The Foundry, along with some great presentations on virtual characters. We also speak to Molinare’s Dayne Cowan about The King’s Speech.

Today was another busy day. John and I spent the morning watching some virtual character talks by DD, Dneg and Framestore. ILM also dazzled the assembled crowd with its Rango presentation by VFX supe Tim Alexander and CG supe Patrick Cohen. Cinesite’s Ben Shepherd showed off his work for Battle: Los Angeles and a bunch of effects houses (The Mill, MPC and unexpected) ran through some recent TVCs. The Visual Effects Society’s Jeff Okun discussed the politics of VFX, animation director Bill Kroyer took a nostalgic look at 1982’s TRON and a whole bunch of open source software announcements were made.

Here’s a look at a couple of the presentations from today and a quick rundown of the announcements made by Walt Disney Animation Studios and Weta Digital.

Open source sessions

A large part of the day was spent in multiple sessions discussing open source software. Speakers such as Marc Petit (Autodesk), Dan Candela (Disney), Sebastian Sylwan (Weta), and Rob Bredow (SPI) all gave their perspectives on open source. The common thread was that open source software was a very necessary part of the post production process, but not necessarily suitable for every application. It is most useful from an infrastructure (facility plumbing) standpoint in order to make workflow much more efficient — and then facilities can concentrate on the creative tools in order to differentiate themselves.

One key aspect is that the best and most used open source software comes from tools developed in the process of production…in other words: battle tested. Facility infrastructures all have the same basic requirements; there are nuances and differences between them of course, but their demands and needs are the same. ILM’s OpenEXR was universally applauded as an example, as it is a standard born from production and thus has been universally adopted by manufacturers and facilities. All of the panel members felt that it was highly unlikely they would adopt a piece of open source software that was not developed by another facility…for instance, if someone external wrote an application and offered it up as open source. Another important aspect is the flexibility of the software license…basically the rules which stipulate how end users may use and modify the software, and what liability the original software creator has. All participants effectively require the BSD License…which limits the creator’s liability and is permissive enough to allow great flexibility in using the software.

Disney’s Dan Candela announced several new open source initiatives which will be released in 2011. They are creating “Dee”, a ready-to-animate character that will be offered to the public. It’s a pre-rigged Maya model which they have spent a lot of time developing to make sure it’s of high quality (Candela quipped that they’ve maybe spent more time on it than some of their production characters). They are also offering “SeExpr”, a universal expression language that can be used in a variety of packages. It is used in house at Disney in various applications such as shading, painting, procedural geometry, and dynamic simulations. Candela mentioned that we could expect to see the language integrated into The Foundry’s Mari. He also announced “Reposado”, a remote OSX installer/updater that runs on non-Mac workstations.

The big news of the day came from Sylwan, who announced that Weta was open sourcing its deep opacity technology as part of the upcoming OpenEXR 2.0 specification. This would be an important step in and of itself, but a key component is that Weta will also be licensing its deep compositing tools to The Foundry. So not only will there be a standard format for this technology, artists will have tools immediately available to them to actually use the format with the release of Nuke 6.3 in the middle of the year. This is some exciting tech being made available to a wider market…if you’re interested in more information about it, check out our fxguidetv episode #95, which covers deep compositing.

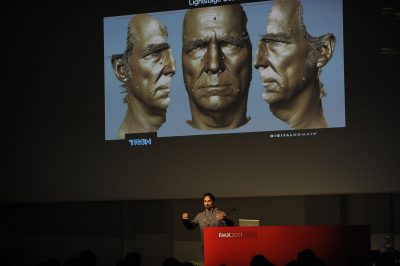

Digital Domain and TRON: Legacy

There’s been some pretty thorough coverage of DD’s work for TRON: Legacy, but here animation supervisor Matthias Wittmann delved into the creation of the young Jeff Bridges for the film with a ton of background material to show. He detailed the multiple steps in their emotion capture process, which started with a life cast of the actor (a surprisingly useful resource despite the difference in years – the position of the ear holes, for example, doesn’t change). A Light Stage and MOVA scan also formed part of the reference and led to early modeling in Mudbox to set up a FACS rig. I particularly liked the tests Wittmann showed using old TV footage of Bridges with the model animated to match. Then of course the additional reference included head cam footage from the shoot and the body double on set.

Perhaps the most interesting aspect was that DD would adhere to Bridges’ most nuanced movements that might not have been initially obvious – such as a small head shake when he gets angry – but then could also change his performance where necessary if he was smiling or smirking in a way that didn’t quite suit the scene. There was a shot of the digital young Jeff Bridges turning to look at his son in his bedroom, and initially he looked too ‘arrogant’, but they tilted his head slightly and this suddenly made him feel more welcoming and warm.

Double Negative and Paul

Animation supervisor Anders Beer from Dneg showed some great clips and befores and afters from Paul, including some test shots that showed a slightly scarier and more ‘teethy’ alien. He said Dneg’s greatest challenge was overcoming any disconnect between the small and bony alien and the larger-set, gravelly-voiced Seth Rogen. With the actor not available for much of the production, Dneg’s animators relied heavily on reference. That came from video of Rogen recording lines, the use of an Xsens MVN suit and Flip cam reference taken of the animators themselves. Beer also discussed Paul’s skin shading, which had the initial effect of taking away detail and muscle definition on the face.

Framestore and Dobby/Kreacher

Framestore’s head of modelling Ben Lambert gave an incredibly thoughtful and detailed presentation on his studio’s Dobby and Kreacher set-ups for Harry Potter and the Deathly Hallows – Part 1. There was a lot of detail here on the modeling and reference process, which started with a look at the Dobby animation for the previous films and scans of the Dobby maquette. One cool piece of footage showed Kreacher’s voice actor’s eyes being roto’d out and tracked onto the digital character just to check reference. It resulted in the artists realizing they needed to make a greater distinction between the brow and the eye and needed a new eye fold.

Other issues they ran into for Dobby were occasionally too much volume around the mouth, which could be dialed back, and extra detail being required on the render model maps when subsurface scattering was applied, especially in a flatly lit environment. The behind the scenes footage of Dobby’s death on the beach was impressive – showing the match moving and roto work necessary. Also the maquette was used as a prop for those plates, so Framestore had to use its original scan data model, which essentially matched exactly. We also saw the use of nCloth in Maya to make contact wrinkles and folds where Dobby is being held around the neck.

Molinare and The King’s Speech

Creative Director Dayne Cowan presented work from The King’s Speech by London company Molinare and by sister company Pixion in India. Starting with an incredibly low budget that was reduced even further, the effects studios worked hard to complete around 140 shots in a four month period with a crew of only seven people. Cowan detailed a number of the invisible effects shots – ranging from views of London locations to the removal of modern buildings, cars and artifacts.

We were able to have a quick chat to Cowan after his presentation, and talked about the use of certain tricks to accomplish the work with budget and time pressures.

fxg: You’ve managed to complete so many great invisible effects for this film without having the usual budget for a Hollywood film. How did you approach that from day one?

Cowan: It’s come down sometimes to, ‘Well, this is what we’ve got to work with’. There will be some projects where the bid goes in quite high but then gets eaten down over the course of the project. In large films, you can sometimes start with a V shape for the budget – you start where you think it should be, it drops down to where they want it to be, but over the course of production things change and you can end up where you started. But this doesn’t happen with smaller projects. We’d always like it to be a certain amount, but they only have so much money. For The King’s Speech we really had to go through the film shot by shot and work out if each VFX shot added to the story.

fxg: It sounds like you could also leverage the London/India operations of the companies.

Cowan: We absolutely leverage the benefits of having a lower cost base in India. To give you a rough idea, London can be three times more expensive than India and LA sometimes six times. But there are other aspects in play like tax credits.

fxg: In terms of the actual tricks, what were some of the techniques and approach to the shots you used to keep the costs down but quality of work high?

Cowan: It starts right at the beginning. We had to think in very lateral terms and not always go for the 3D/CG solution. You have to come up with a clever solution, which is not always the most elaborate.

fxg: One of them was, wasn’t it, a shot of a hotel with Cannes in the background, where you didn’t have an element for that and you used a royalty free image from the internet?

Cowan: Exactly – that’s a classic situation where normally we might go on location for a recce to Cannes and would shoot extensive photography for the backgrounds. But here there just wasn’t the time or the budget. So we sourced a series of images and re-painted them. Another shot like that was the view of Buckingham Palace looking from the balcony. Because we couldn’t shoot that from that actual angle, it was just set up around the other side in Green Park, which has a similar view. Then we replicated the crowds and added in digital people using XSI. There were also a lot of two-and-a-half-D projections done in Nuke, as opposed to whole 3D geometry.

fxg: And generally there were a lot of creative solutions weren’t there – I’m thinking of a shot of a car heading out of the Palace gates and in the plate it stops at an outdoor screening because it can’t go any further, so you added a digital car there for some traffic.

Cowan: Yes, I really liked shots like that. My favorite is actually a view of the priest leaning over in front of a movie projector and the film is seen on his head and robe. When I saw that I assumed the artists had modeled him in 3D and then mapped the film onto him, but it turns out they used Nuke to warp the footage so that it looked as if it was being projected. It helped that the angle was fairly static and that he didn’t move too much.

Similarly, the stadium replacement shots – we could get away with re-projection more here because they were fairly static shots. I thought the wires in the microphone, which were slightly transparent, came off really well even though we had to replace the backgrounds.

______________________

For us the day ended with a great fxphd meet-up in the heart of Stuttgart. Tomorrow we’ll be looking to attend some of the global VFX production seminars and ones on stereo. And we’ll be talking to some artists from Pixar and ILM. You can also look tomorrow for an interview with visual effects supervisor Kevin Tod Haug on his thoughts about stereo/3D acquisition.

Thanks to FMX and Reiner Pfisterer for most of the photographs in this post.