This week we talk to Dr Paul Debevec, a long-time friend of fxguide, now at Google and still publishing as part of the team at USC ICT. This year at SIGGRAPH the team is presenting Practical Multispectral Lighting Reproduction. We discuss the work extensively in the podcast, and below are the key images we refer to in that discussion.

Click to download the paper: Multispectral-Lighting-Reproduction-SIGGRAPH2016

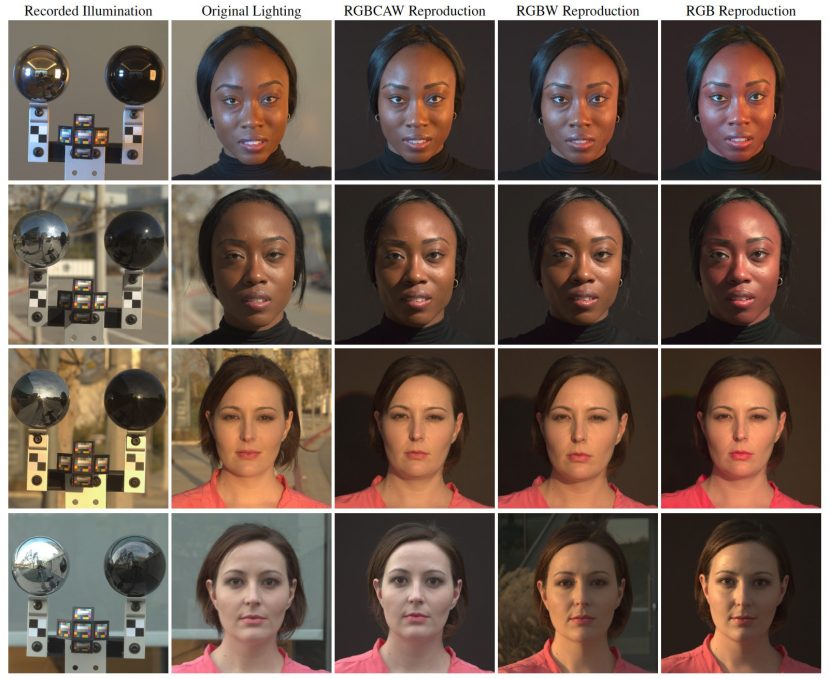

At the heart of the paper is capturing and reproducing highly accurate lighting. The paper goes beyond RGB to capture and reproduce a much more complex spectrum of lights, with far greater accuracy. The team solves the problem of inaccurate colour in a traditional HDR pipeline (especially on human skin).

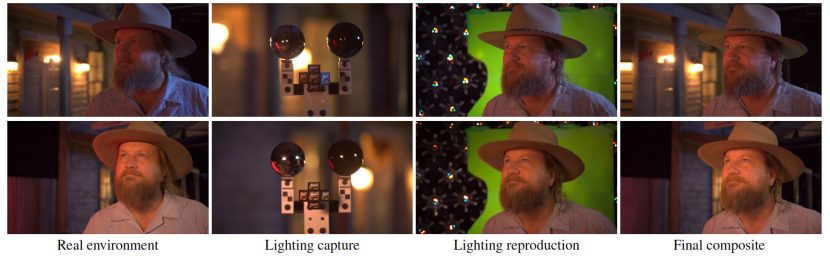

The image above compares a real person photographed in the scene (left) with the same person shot in a Light Stage and then composited over a blank shot of the background. The match is remarkable.

One reason is that, beyond capturing an HDR image (which really focuses on dynamic range), the new system also captures the spectral makeup of the light in every direction. To reproduce this, the team has upgraded their Light Stage to have red, green, blue, cyan, amber, and white LEDs. (There is also a special version using just RGB and white LEDs.)
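To make the idea of driving several LED colours concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the team's implementation. It assumes you have a list of photographed measurements (for example, colour chart patch values) for each LED channel at unit intensity and for the target lighting, and it looks for non-negative channel intensities whose mix best matches the target. The function name `solve_led_weights`, the toy numbers, and the simple projected gradient solver are all hypothetical choices made for this example; see the paper for the procedure the team actually uses.

```python
def solve_led_weights(led_responses, target, iterations=5000):
    """Find non-negative LED intensities whose mix best matches a target.

    led_responses[k] holds the measurements seen with only LED channel k
    switched on at unit intensity (an assumed input for this sketch).
    target holds the same measurements under the lighting to be matched.
    Uses projected gradient descent on the least-squares error, clamping
    intensities at zero because an LED cannot emit negative light.
    """
    n_leds = len(led_responses)
    n_obs = len(target)

    # Gram matrix (A^T A) and right-hand side (A^T t) of the normal equations.
    gram = [
        [sum(led_responses[i][m] * led_responses[j][m] for m in range(n_obs))
         for j in range(n_leds)]
        for i in range(n_leds)
    ]
    rhs = [
        sum(led_responses[i][m] * target[m] for m in range(n_obs))
        for i in range(n_leds)
    ]

    # The trace bounds the largest eigenvalue, so this step size is safe.
    step = 1.0 / sum(gram[i][i] for i in range(n_leds))

    weights = [0.0] * n_leds
    for _ in range(iterations):
        grad = [
            sum(gram[i][j] * weights[j] for j in range(n_leds)) - rhs[i]
            for i in range(n_leds)
        ]
        # Gradient step, then project back onto the non-negative region.
        weights = [max(0.0, w - step * g) for w, g in zip(weights, grad)]
    return weights


if __name__ == "__main__":
    # Toy, made-up measurements for three channels and four observations.
    responses = [
        [1.0, 0.1, 0.0, 0.5],  # channel 0 alone
        [0.1, 1.0, 0.1, 0.5],  # channel 1 alone
        [0.0, 0.1, 1.0, 0.5],  # channel 2 alone
    ]
    target = [0.5, 0.25, 2.0, 1.25]
    print(solve_led_weights(responses, target))
```

Adding channels such as cyan and amber simply adds more entries to `led_responses`. In a least-squares fit like this one, extra channels can only keep the best achievable error the same or lower it.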

The paper, from the team at USC Institute for Creative Technologies, is authored by Chloe LeGendre, Xueming Yu, Dai Liu, Jay Busch, Andrew Jones, Sumanta Pattanaik (University of Central Florida) and Paul Debevec.

At first glance, this problem might seem to require expensive equipment such as an optical spectrometer, but that would have made the approach impractical for real-world film sets. Chloe LeGendre, who leads the paper team, commented, “when I first started thinking about accurate color rendition in lighting reproduction, my initial intuition told me that the technical approach would surely involve measuring and matching the spectra of real-world lighting environments. But ultimately, we were pleased with the visual matches we achieved, comparing a subject’s appearance in the real-world and in the Light Stage, while using only photography for the environmental lighting capture”.

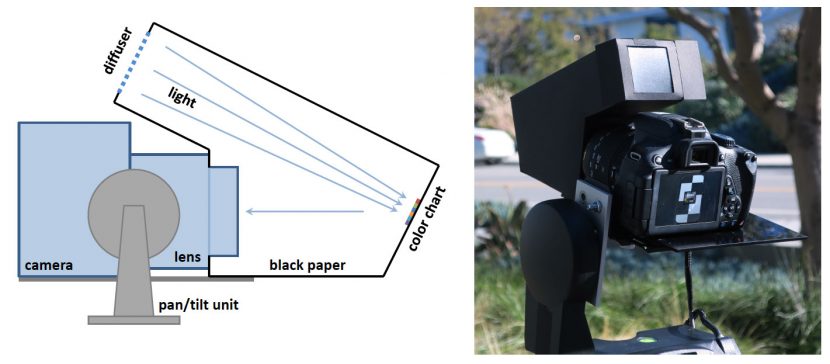

As you can hear in the podcast, there are two options for capturing the light on set. The first is a complex rig seen immediately below. This is highly accurate but time-consuming. Below that image is the faster ‘run and gun’ method, which is surprisingly close in results but takes a fraction of the time to shoot.

And the simpler version:

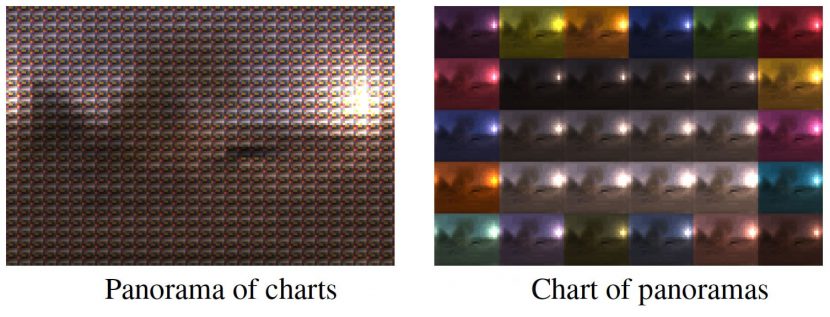

We also discuss how the world would look from the top version (for the complete details, see the SIGGRAPH paper).

RGBCAW vs. RGBW

Below is a comparison of multiple setups and their results. Far left is the recorded illumination using the fast method. Next is a ‘ground truth’ version of the non-composited actor in the ‘target’ environment (no VFX). The next three shots are the full LED (RGBCAW) version, the RGBW-only LED version, and the old Light Stage (RGB LEDs only). Note that on the right the faces seem saturated and pink.

At the end of the discussion, Mike and Paul turn to video. Here is that video, along with some frames from the video test (with long-time researcher and VR specialist Greg Downing standing in as the cowboy actor).

The comps were done in NUKE, and special attention was paid to not recolouring the foreground (spill compensation etc.) as part of the compositing process.

As Paul Debevec explains in the podcast, the USC Institute for Creative Technologies remains a strong interest for him and continues as a research centre for advanced imaging and Light Stage-type research.

The team is presenting as part of the Computational Photography session: Monday, 25 July, 9:00 am – 10:30 am, Ballroom C, Anaheim Convention Center. Chloe LeGendre comments, “SIGGRAPH 2016 will be the fourth SIGGRAPH conference that I’ve been fortunate enough to attend. Although I always look forward to attending, this year there is some added anticipation, as I will have the unique opportunity to share this work with colleagues from a variety of intersecting disciplines and exchange ideas.”

As mentioned at the end of the podcast, Paul Debevec will be the keynote speaker at the 9th SIGGRAPH Asia. This year, the SIGGRAPH Asia conference is in Macao, 5-8 December 2016.