The world of virtual reality (VR) encompasses immersive headgear experiences such as the Oculus Rift, and also extends to augmented reality (AR), or as industry experts such as Felix & Paul’s Sebastian Sylwan prefer to say, ‘Mixed Reality’ (MR), which is most closely identified with Magic Leap and Microsoft’s HoloLens. The difference is best illustrated by comparing Google Glass with Magic Leap’s as-yet-unreleased devices. The ‘screen’ of data on the now-discontinued Google Glass moves with your head: it is fixed in relation to your eye. The overlay of information in the Magic Leap headset, by contrast, tracks with the world. This second approach allows a digital chessboard to ‘sit’ on a table in front of you and stay fixed relative to the table as your head moves, very much unlike the Google Glass display.
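To make the head-locked versus world-locked distinction concrete, here is a minimal 2D sketch, assuming yaw-only head rotation; the function names are hypothetical, and real headsets track full six-degree-of-freedom pose. A head-locked overlay keeps the same coordinates relative to the eye, while a world-locked one has to be re-expressed in head coordinates every frame from the tracked head pose.

```python
import math

def world_to_head(point, head_pos, head_yaw):
    """Express a world point (x, z) in head coordinates, given head position and yaw (radians)."""
    dx = point[0] - head_pos[0]
    dz = point[1] - head_pos[1]
    # Apply the inverse of the head's rotation (rotate by -yaw).
    c, s = math.cos(-head_yaw), math.sin(-head_yaw)
    return (c * dx - s * dz, s * dx + c * dz)

def head_locked(offset_in_head, head_pos, head_yaw):
    """Glass-style overlay: same place relative to the eye, whatever the head does.
    head_pos and head_yaw are ignored; they are kept only so both functions share a signature."""
    return offset_in_head

def world_locked(point_in_world, head_pos, head_yaw):
    """Magic Leap-style overlay: fixed to the table, so its head-relative position changes."""
    return world_to_head(point_in_world, head_pos, head_yaw)

chessboard = (0.0, 1.0)  # hypothetical: one metre in front of the starting head position
for yaw_deg in (0, 90):
    yaw = math.radians(yaw_deg)
    print(yaw_deg, head_locked(chessboard, (0.0, 0.0), yaw), world_locked(chessboard, (0.0, 0.0), yaw))
```

After a 90-degree head turn the head-locked overlay has not moved in the eye's frame, while the world-locked chessboard has swung off to the side, which is what keeps it sitting on the table.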

John Root at Magic Leap also discussed his personal view of the future of virtual reality, and chief amongst the near-future technologies he named was light field display technology. As VR and AR (MR) merge, Root said, sound field audio and light field display technology would be key.

When you understand what the team at Magic Leap are trying to do, compared to traditional binocular stereo vision, the need for light field technology becomes clear.

When you watch a stereo movie with normal passive cinema glasses, your eyes converge at a point in space that indicates depth, but that perceived depth of an actor is at odds with where your eyes are focused. In other words, you focus on the screen 30 feet in front of you, no matter how far in front of or behind the screen the stereo pair leads your brain to place the action. This mismatch is known as the vergence-accommodation conflict.
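The size of that mismatch is easy to put numbers on. This sketch assumes a 63 mm interpupillary distance (a typical adult value) and places a hypothetical actor 2 m from the viewer while the eyes stay focused on a screen 30 feet away. Vergence is the angle between the two eyes' lines of sight; accommodation is the focus demand on the eye's lens, in diopters (1 / metres).

```python
import math

IPD_M = 0.063        # assumption: typical adult interpupillary distance, ~63 mm
FEET_TO_M = 0.3048

def vergence_deg(distance_m, ipd_m=IPD_M):
    """Angle between the two lines of sight when both eyes fixate a point at distance_m."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))

def accommodation_diopters(distance_m):
    """Focus demand for an object at distance_m, in diopters."""
    return 1.0 / distance_m

screen_m = 30 * FEET_TO_M   # the cinema screen the eyes actually focus on
actor_m = 2.0               # hypothetical depth at which the stereo pair places an actor

print(f"eyes converge for {actor_m} m: {vergence_deg(actor_m):.2f} deg")
print(f"eyes focus on the screen: {accommodation_diopters(screen_m):.3f} D")
print(f"a real object at the actor's depth would need: {accommodation_diopters(actor_m):.3f} D")
```

The eyes converge as if the actor were 2 m away (a 0.5 D focus demand for a real object there), yet stay focused at roughly 0.11 D on the screen. A light field display aims to close that gap.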

Key to MR (AR) is placing the ‘chessboard’, to use the example from above, at the same depth as the actual table in front of you. Since the chessboard needs to appear as if it is on the table, and you are focusing on the table, you need to see and focus on both the virtual chessboard and the table at the same time. The only way this can work is if the MR (AR) gear not only does the normal stereo trick of correct convergence but ALSO changes the focus to match that of your view of the table. Of course, if the headgear projected the chessboard onto the table with a tiny video projector this would not be a problem, but it doesn’t: it projects the chessboard directly into your eyes, so matching focus as well as convergence is what completes the MR (AR) trick.

This MR (AR) trick is pretty impressive, which is perhaps why Google has invested an estimated $643,000,000 in Magic Leap. The technology that allows a device to vary the focus to the correct depth without completely re-rendering the scene is the light field.

Light fields are a relatively simple concept and are not that new, but their actual implementation is extremely hard to pull off.

Until recently, only pre-rendered light fields were possible in VR. In those pre-rendered worlds the computational camera maths is much easier, as everything is virtual, so working out how to view the light field from any angle is all that is required. In effect it is a two-part process: render a light field offline (not in real time), and then look at it from the angle you want, in real time.
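The two-part process can be sketched with the two-plane parameterization used in Levoy and Hanrahan's 1996 light field rendering work, where a ray is indexed by where it crosses a camera plane (u, v) and a focal plane (s, t). This is a minimal sketch, assuming a toy shading function and nearest-neighbor lookup (real players interpolate between samples and compress the data); both function names are hypothetical. All the expensive work happens in `render_offline`, and the real-time step is only a table lookup.

```python
import itertools

def render_offline(shade_ray, n):
    """Offline step: shade every ray between an n x n camera plane (u, v)
    and an n x n focal plane (s, t). shade_ray may be arbitrarily slow."""
    step = 1.0 / (n - 1)
    table = {}
    for u, v, s, t in itertools.product(range(n), repeat=4):
        table[(u, v, s, t)] = shade_ray(u * step, v * step, s * step, t * step)
    return table

def lookup(table, n, u, v, s, t):
    """Real-time step: no shading at all, just fetch the nearest stored ray.
    Coordinates are normalised to [0, 1] on each plane and clamped to the grid."""
    key = tuple(min(n - 1, max(0, round(c * (n - 1)))) for c in (u, v, s, t))
    return table[key]

# Toy 'scene': brightness depends only on where the ray hits the focal plane.
lf = render_offline(lambda u, v, s, t: (s + t) / 2, n=5)
print(len(lf), lookup(lf, 5, 0.5, 0.5, 1.0, 1.0))
```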

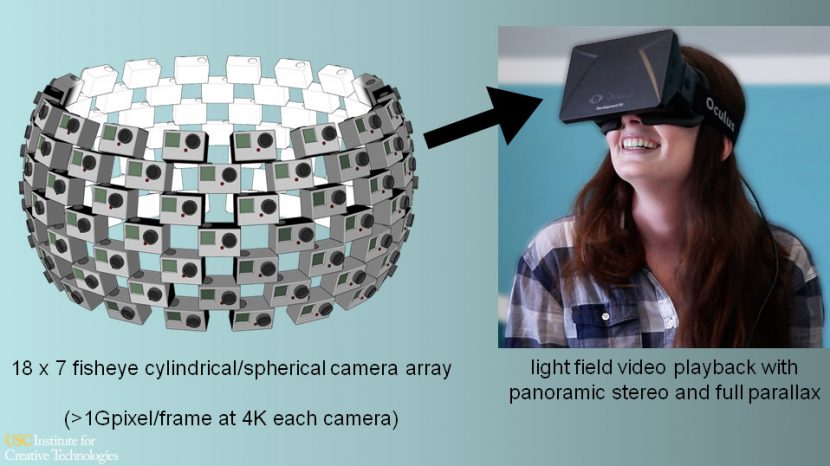

Paul Debevec of USC ICT recently presented a short history of light field research, which spans decades of work, with key papers dating from the mid 1990s. Debevec explains light fields by starting from a nodal pan as a perfect 360 capture. If one imagines a stereo pair turning 360 degrees on the nodal pan, there would be almost no parallax. (A single lens panning on its nodal point has no parallax, and that is what people such as Debevec have used for years to make 360 light probes and 360 panoramas.) Now conceptually move the ‘cameras or eyes’ ‘a few feet’ off the nodal center. As they spin a horizontal 360 degrees, they trace a large circle with a radius of ‘a few feet’. There is now vast parallax compared to the nodal case, but if you extended a line from the middle of each pair of cameras or eyes back towards the center, they would still be pivoting around the center.

Plenoptic: “Of or relating to all the light, traveling in every direction in a given space.” Plenoptic or light field cameras can capture a space or light field and then render it to pixels as needed.
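For reference, the formal version: Adelson and Bergen (1991) defined the plenoptic function as the radiance seen from every viewing position $(V_x, V_y, V_z)$, in every direction $(\theta, \phi)$, at every wavelength $\lambda$ and time. Time is written as $\tau$ here to avoid a clash with the light field coordinate $t$ below:

$$
P = P(\theta, \phi, \lambda, \tau, V_x, V_y, V_z)
$$

For a static scene ($\tau$ fixed), with colour handled as RGB channels ($\lambda$ dropped) and radiance assumed constant along a ray through empty space, one more dimension falls away. That leaves the 4D light field of the mid-1990s papers, in which a ray is indexed by its intersections $(u, v)$ and $(s, t)$ with two parallel planes:

$$
L = L(u, v, s, t)
$$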

The idea of light fields is that, with computational photography, one could take those off-nodal camera pairs or eyes sweeping around and reconstruct the view from any point inside that circle. There is really no position inside the circle that the cameras/eyes do not cover from one camera or another. Using computational photography you could extrapolate the view back from any pixels or points in ALL the views; in theory, you can compute any point from some combination of all views inside the circle. If one thinks of it as ray tracing, with the cameras/lenses standing in for the MR (AR) or VR screens, then you could fire a ray from any point in the circle and one of the views will contain that ray path. But one 360 rotation gives you just a horizontal slice. If you want to look up or down you will need more rings above or below the first ring, each overlapping slightly with its neighbors.
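The claim that some view always contains a given ray can be checked with a little geometry. This sketch covers only the single horizontal ring described above and assumes evenly spaced cameras facing straight outward; it finds where a ray fired from a point inside the ring crosses it, picks the nearest camera, and reports how far off that camera's optical axis the ray lies. The function name is hypothetical, and a real light field solver would blend several neighboring views rather than pick one.

```python
import math

def camera_for_ray(px, py, dx, dy, radius, n_cameras):
    """Return (camera index, angle of the ray off that camera's optical axis in degrees)
    for a ray starting at (px, py) inside a ring of outward-facing cameras."""
    norm = math.hypot(dx, dy)
    dx, dy = dx / norm, dy / norm
    # Solve |p + t*d| = radius for the positive root t (p is inside the ring, so c < 0).
    b = px * dx + py * dy
    c = px * px + py * py - radius * radius
    t = -b + math.sqrt(b * b - c)
    qx, qy = px + t * dx, py + t * dy            # where the ray crosses the ring
    ring_angle = math.atan2(qy, qx) % (2 * math.pi)
    step = 2 * math.pi / n_cameras
    idx = round(ring_angle / step) % n_cameras   # nearest camera on the ring
    cam_axis = idx * step                        # that camera looks straight outward
    off_axis = math.atan2(dy, dx) - cam_axis
    off_axis = (off_axis + math.pi) % (2 * math.pi) - math.pi  # wrap to [-pi, pi)
    return idx, math.degrees(off_axis)

# Eye half-way from center to ring, looking 'up' the page; 18 cameras as in the GoPro example.
print(camera_for_ray(0.5, 0.0, 0.0, 1.0, radius=1.0, n_cameras=18))
```

In that example the ray leaves the ring at 60 degrees, so camera 3 of 18 sees it about 30 degrees off axis, well within the half field of view of a typical wide-angle lens.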

So to provide all the data for a fairly wide light field capture in the real world, in every direction, you would need a lot of cameras. Using GoPros, for example, you would need about 18 cameras around each ring and seven rings stacked up. This 126-camera array, if all sync’d and lined up with matching exposure etc, could produce a gigapixel image per frame. While possible in theory, in practice GoPros can’t be sync’d, and their exposure and lens alignment are not as controllable as those of, say, a DSLR. That is why Paul Debevec and his team realized that the same effect could be achieved for a static scene by moving a DSLR on a motion control arm through seven circles at different heights and combining the resulting stills into a computational light field model. The absolute key to remember is that the team are not aiming to just stitch an entire spherical bubble. The output is not the stitch of all of these camera positions. The camera data is the input to the light field computations that allow the system to show a correct stereo pair from anywhere inside that bubble.
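The camera count and the ‘gigapixel per frame’ figure can be checked with simple arithmetic. The 18-by-7 layout comes from the text; the per-camera resolution (4K UHD video frames), the frame rate and the uncompressed RGB storage are assumptions chosen only to show the scale of the data.

```python
CAMERAS_PER_RING = 18          # from the text
RINGS = 7                      # from the text
WIDTH, HEIGHT = 3840, 2160     # assumption: each GoPro recording 4K UHD video
FPS = 30                       # assumption
BYTES_PER_PIXEL = 3            # assumption: uncompressed 8-bit RGB

cameras = CAMERAS_PER_RING * RINGS
pixels_per_frame = cameras * WIDTH * HEIGHT
raw_bytes_per_second = pixels_per_frame * BYTES_PER_PIXEL * FPS

print(cameras)  # 126
print(pixels_per_frame / 1e9, "gigapixels per frame")
print(raw_bytes_per_second / 1e9, "GB per second, uncompressed")
```

Under these assumptions the array produces just over a gigapixel per frame and roughly 94 GB of raw pixel data per second, which helps explain why a single DSLR on a motion control arm is an attractive alternative for static scenes.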

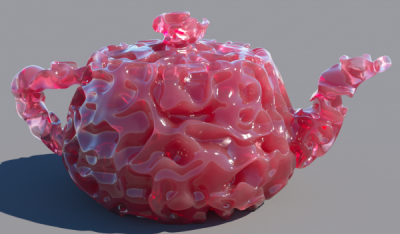

To process and display this world-first ‘captured’ or ‘filmed’ light field, the team worked with OTOY, the makers of Octane. As we have already covered here at fxguide, OTOY leads the industry in generated VR light fields. At SIGGRAPH last year they showed a real-time VR light field playback system which uses fully rendered data to provide a very realistic and impressive light field. In the original OTOY demo the ‘world’ can be solved by a virtual rig and a scene rendered in a light field format that allows for real-time playback. Each frame of the light field may take many seconds to create, but it really does not matter how long the render is, since the playback, or final rendering of that scene into the VR headgear, is in real time. The net effect is that one can move one’s head inside an OTOY Octane-rendered light field, see around objects and get parallax, with all the polish of a pre-rendered experience. Normally pre-rendered experiences have no positional head tracking. The OTOY digital virtual camera/light field solution already works at 75fps and higher with a regular Oculus Rift DK2.
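The decoupling described here is easiest to see as two separate budgets. In this sketch the 75 fps figure comes from the text, while the 10 seconds of offline render time per frame and the one-minute clip (rendered at the playback rate) are assumptions; the point is that only the playback cost has to fit inside the per-frame budget.

```python
PLAYBACK_FPS = 75           # DK2 figure from the text
OFFLINE_SEC_PER_FRAME = 10  # assumption standing in for "many seconds" per frame
CLIP_SECONDS = 60           # assumption: a one-minute clip, rendered at the playback rate

def playback_budget_ms(fps):
    """Time the player has to draw each frame in the headset."""
    return 1000.0 / fps

def offline_render_hours(clip_seconds, fps, sec_per_frame):
    """One-off render cost, paid long before anyone puts the headset on."""
    return clip_seconds * fps * sec_per_frame / 3600.0

print(f"playback budget: {playback_budget_ms(PLAYBACK_FPS):.1f} ms per frame")
print(f"offline render: {offline_render_hours(CLIP_SECONDS, PLAYBACK_FPS, OFFLINE_SEC_PER_FRAME):.1f} hours")
```

At these assumed rates a minute of light field content costs 12.5 hours of rendering once, while the headset only ever has about 13.3 ms per frame for playback.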

With this VR light field player, compression and Oculus Rift interface already working, Paul Debevec’s team inserted not a virtual render but a live-action capture of a ‘still’ scene. Once this ‘light field capture’ is loaded into the OTOY playback engine, one can look around, say, a real apartment and office and get full parallax: reflections move, and specular highlights ping and illuminate surfaces as if it were a moving image, in stereo with full head tracking.

OTOY: Octane

OTOY is, of course, continuing its own light field Octane rendering path, dramatically improving its render environments and the ease of generating a light field.

At the time of writing, the current release of Octane is version 2.22, which allows a user to render normal images or a VR environment for, say, a PC and Oculus Rift. For example, there is a plugin that lets C4D render with Octane. There is also a special build of version 2.22 that allows rendering to OTOY’s light field codec. That allows the team at OTOY to render out test light fields, which fxguide has seen and experienced. OTOY plans to release a special Octane VR version, which will be free for 90 days. This version will work with Blender (but not Max or Maya).

Come the release of Octane version 3.0 (which has been announced), this light field capability will be integrated, and any normal customer using version 3 will be able to render light fields, VR and of course normal high-quality images for any purpose. Octane v3.0 also includes a host of new features, such as pausing and resuming renders.

At the moment most of the Octane-rendered work has been immersive still light field environments, but with the OTOY codec and system design you will be able to render full light field animations (the files run at around 25 Mbps). This means both real-time head movement and a moving, animated world to look at: spatial and temporal VR movement.
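To put the quoted data rate in perspective, here is the storage arithmetic, assuming ‘25Mbs’ means roughly 25 megabits per second and using decimal megabytes.

```python
LIGHT_FIELD_MBPS = 25  # approximate figure from the text, read as megabits per second

def size_megabytes(seconds, mbps=LIGHT_FIELD_MBPS):
    """Storage for a clip of the given length: megabits per second -> megabytes."""
    return mbps * seconds / 8

print(size_megabytes(60), "MB per minute")         # 187.5 MB per minute
print(size_megabytes(3600) / 1000, "GB per hour")  # 11.25 GB per hour
```

That is in the range of high-quality conventional video streams, which is what makes animated light fields practical to distribute.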

The Octane renderer has plugins for a range of products, from 3ds Max to Maya and even Nuke.

OTOY has also been involved in various live-action tests with sporting bodies such as the NHL, but as yet these do not use light field capture.

Collaborating with New Deal Studios and Immersive Media, OTOY tested VR coverage of the NHL in February in California, previewing an NHL Stadium Series game between the LA Kings and San Jose Sharks at Levi’s Stadium. Along with the 360-degree HD live stream, OTOY broke new ground recording 6K high frame rate, high dynamic range (HDR) 360-degree streams intended for non-live content for fans. As a next step, OTOY stated that they will “record future events using an experimental light field camera which is designed to provide even greater quality to the experience and over time will benefit live streamed VR experiences”.

HoloLens

At the same FMX conference where Paul Debevec presented, Alex Laurant from the HoloLens/Microsoft team also spoke. Laurant is the art director on the project, having previously been at ILM, Crytek and EA. Microsoft is not only building its own MR (AR) headgear but also building hooks and links into Windows 10 at the most fundamental level to support and work with the HoloLens. MR (AR) is typically expected to have a smaller field of view than current VR, and it faces a huge range of issues. Amongst them: the MR (AR) pivot point is your eyes, and to be truly effective the headset needs some kind of opacity map that blocks or solidifies the overlay images; otherwise the overlay graphics will always seem faint and ghost-like.
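Why the overlay looks ghost-like without an opacity map can be shown with a one-pixel model of an optical see-through display, in which the headset can only add light to what already comes through the lens. Values are normalised brightness (0 to 1), and the opacity mask is a hypothetical per-pixel attenuator; this is a simplified illustration, not a description of how HoloLens is built.

```python
def additive_display(world, overlay):
    """See-through display with no occlusion: displayed light is simply added to the world."""
    return min(1.0, world + overlay)

def with_opacity_mask(world, overlay, alpha):
    """Hypothetical opacity mask blocks a fraction alpha of the world light, then the overlay is added."""
    return min(1.0, world * (1.0 - alpha) + overlay)

table = 0.8        # bright real table, as seen through the lens
black_piece = 0.0  # a black virtual chess piece to draw on it

print(additive_display(table, black_piece))        # 0.8: the piece is invisible
print(with_opacity_mask(table, black_piece, 1.0))  # 0.0: the piece reads as solid black
```

Additively, a virtual object can never appear darker than the real scene behind it, which is exactly the faint, ghost-like look described above.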

Nozon & Presenz

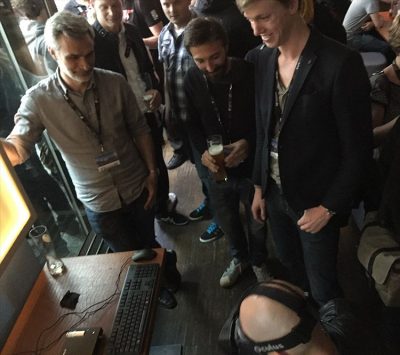

One of the most startling and interesting pieces of technology shown at FMX was not in a lecture or on the show floor, but in a demo given at the Arnold (Solid Angle) party. fxguide got to experience the demo and interview the team from Nozon about their Presenz system (part of that interview is in our FMX fxguidetv episode).

What makes Presenz so exceptional is that it showed a non-light field animated scene in which head movement was tracked. In other words, it featured most, if not all, of the properties of a rendered animated light field without using plenoptic light field approaches.

With the VR rig on, the user can lean forward, look around and move inside a limited space while watching moving rendered footage. The limited space is a box about a meter by a meter around you, which can be ‘illuminated’ live while you are watching the VR. The notion is that most people watch VR sitting or standing; for obvious safety reasons, as you are effectively blind to the real world, you rarely walk around.

What makes this even more incredible is that the pipeline is based on a standard Maya-Arnold render. The team have developed a plugin or ‘version’ of the Arnold renderer that takes normal assets, with normal shaders, and renders them so the completed file can be played on an Oculus Rift with full head tracking (when connected to a PC). This is very significant. Imagine working at a post-house when the client wants a VR experience based on the very expensive car TVC your team has just made. If you are licensed and using Presenz, the same car assets and 3D environment you have already built and rendered can be turned around quickly into a truly immersive VR experience. The system currently runs on the Rift, but in theory it could run on any VR gear. The files we tested with were production grade, but the rendered files were still very large, too large for a common download; then again, it is still very early days for the Nozon team.

We have no NDA or special secret insight into how they are doing this, but an educated guess is that they are rendering with Arnold’s deep data and using that to produce the real-time, head-tracked VR version of the scene. The whole scene, at the complexity level we saw first-hand, could not be fully rendered in real time from scratch, so clearly the clever team at Nozon has an intermediate format from which the final render is done.

NextVR

In fxguide’s earlier VR story from GDC we discussed that NextVR were using light field technology. Since that story was written we have managed to talk further with the team there and piece together more of the NextVR solution.

We spoke to Dave Cole, co-founder of NextVR, who explained how they have built on top of existing live broadcast technology that was originally designed for 3D television. This technology extracted depth information from stereo pairs for the “purpose of maintaining the strength of edge information as the image is moved through various stages of compression as it reaches the broadcast channel,” says Cole.

NextVR uses this to extract depth information “and keep it out of band so it is not subject to depth decimation and then it is rendered as part of the final presentation,” Cole notes. This supports occlusion and keeps edges sharp, avoiding the ‘rubber matting’ seen in early stereo conversions.

That technology has meant that all the rigs at NextVR have had feature detection and depth information generated as part of NextVR’s pipeline. The depth map is used to create a simple mesh of the environment “and we use that mesh for a number of things, and one of them is that we don’t seam in the traditional sense – we basically build a representation of the world, inside the player, and then we map the textures to that world – so when you are experiencing content from us you are actually experiencing a CG virtual world or an approximation mapped to a mesh,” explained Cole, “and that’s really the secret sauce from us.”
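NextVR has not described its meshing in detail, but the general idea of turning a depth map into textured geometry can be sketched. This is a generic depth-image-to-mesh sketch, assuming a pinhole camera with a known horizontal field of view; the function name is hypothetical. Each depth pixel becomes a vertex, its position in the video frame becomes its texture coordinate, and neighboring pixels are joined into triangles.

```python
import math

def depth_map_to_mesh(depth, fov_x_deg=90.0):
    """Turn an H x W grid of depths (metres) into vertices, texture UVs and triangles.
    Assumes a pinhole camera with horizontal field of view fov_x_deg; needs H, W >= 2."""
    h, w = len(depth), len(depth[0])
    f = (w / 2) / math.tan(math.radians(fov_x_deg) / 2)  # focal length in pixels
    cx, cy = (w - 1) / 2, (h - 1) / 2                     # principal point at the image center
    vertices, uvs, triangles = [], [], []
    for y in range(h):
        for x in range(w):
            z = depth[y][x]
            # Back-project pixel (x, y) at depth z into camera space.
            vertices.append(((x - cx) * z / f, (y - cy) * z / f, z))
            # The same pixel's position in the video frame becomes its texture coordinate.
            uvs.append((x / (w - 1), y / (h - 1)))
    for y in range(h - 1):
        for x in range(w - 1):
            i = y * w + x
            # Two triangles per grid cell.
            triangles.append((i, i + 1, i + w))
            triangles.append((i + 1, i + w + 1, i + w))
    return vertices, uvs, triangles

verts, uvs, tris = depth_map_to_mesh([[2.0, 2.0, 2.0], [2.0, 2.0, 2.0], [2.0, 2.0, 2.0]])
print(len(verts), len(tris), verts[4], uvs[8])
```

A production version would at least split triangles that straddle large depth jumps, so foreground and background are not joined by stretched geometry.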

NextVR is focused on a few key VR areas, but the main one is live VR coverage of, say, a sporting event. It is in this context that NextVR uses technology including light fields to produce a more interactive experience. NextVR was very open in pointing out that their use of light fields is not as technologically involved as, say, OTOY’s. NextVR seems to be able to create a more immersive experience by building a depth map of, say, a basketball court from the position of the intended live coverage. Onto this 3D stage they effectively project the real-time captured event, thus providing more parallax. It is in making this stage that a light field could be used, but the team also uses LIDAR and other non-real-time tools.
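Projecting live video onto a pre-built stage is, in general terms, projective texture mapping: each point on the stage mesh is projected into the broadcast camera to find which video pixel should color it. This minimal sketch assumes an ideal pinhole camera looking straight down its +z axis with no rotation or lens distortion, and the function name is hypothetical; NextVR has not described its implementation at this level.

```python
def project_to_camera(point, cam_pos, f_px, width, height):
    """Project a world-space stage point into a pinhole camera facing +z.
    Returns the pixel (u, v) whose color should texture that point, or None."""
    x = point[0] - cam_pos[0]
    y = point[1] - cam_pos[1]
    z = point[2] - cam_pos[2]
    if z <= 0:
        return None  # behind the camera: this camera cannot color the point
    u = f_px * x / z + width / 2
    v = f_px * y / z + height / 2
    if 0 <= u < width and 0 <= v < height:
        return (u, v)
    return None  # outside the frame

print(project_to_camera((0.0, 0.0, 10.0), (0.0, 0.0, 0.0), 1000.0, 1920, 1080))  # (960.0, 540.0)
print(project_to_camera((1.0, 0.0, 10.0), (0.0, 0.0, 0.0), 1000.0, 1920, 1080))  # (1060.0, 540.0)
```

Because the stage geometry is solved ahead of time, only this cheap per-point projection and texture fetch has to happen live.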

For the moving players on the court, fxguide believes other valid tricks are used to create their depth, since in the time available for VR rendering NextVR clearly can’t scan the players, make a 3D model per frame, and project the player VR footage back over them. This would be well beyond any 3D or VR system’s performance. But it would be possible to solve for the stage and place the moving actors on it (in this case basketball players) with less 3D precision, yet still with enough fidelity that the illusion is complete and the experience immersive.

After successfully working with numerous sports, news and entertainment groups including the NBA, the NHL, Fox Sports, NASCAR, the band Coldplay and more, NextVR is preparing for their next stage of development. Key industry luminaries such as Peter Guber (founder and CEO of Mandalay Entertainment) have joined an advisory board to provide strategic advice through NextVR’s expansion as virtual reality becomes more mainstream.

“For those who haven’t experienced it yet, it’s hard to imagine what NextVR is capable of delivering with their live virtual reality technology. They have engineered the closest thing to putting you in the best seat in the house virtually that is possible,” said Peter Guber, Mandalay Entertainment CEO, in a release. “It’s a game-changer for live sports, festivals, concerts, shopping, and travel.”

NextVR is therefore providing a powerful immersive experience without rendering into light fields, although they have used light fields to help at the start of the process in some cases. The team has also tried “LIDAR, camera and using its own position in a mapped space, we’ve used ‘time of flight’, we’ve used ‘structured light’, which is really just LIDAR and SLAM, we have used dynamic light, but out of all of them light fields has been the most effective for quickly acquiring an accurate depth map and then being able to include that depth map in the processing that we already do,” says Cole.

NextVR’s pipeline is significant and has already resulted in several patents. The company aims for maximum image quality, mastering at very high resolution, often shooting with multiple RED cameras capturing at 6K and well above the required frame rate, for both higher image fidelity and lower-noise images.

Cole points to the absolute need for low noise and high dynamic range without crushing the blacks or clipping the highlights. “There is infinitely less that the viewer can tolerate (in VR) than when they say in a traditional 3D display like a 3D movie or on 3D television,” he says. “As you ratchet up the immersion level – the tolerance for artifacts becomes lower and lower.” Quality is clearly central to the process: on paper the multiple RED rigs are overkill in resolution and quality, yet Cole believes capture quality is key to producing good VR.

The popular press has taken NextVR’s use of light fields to mean they have a real-time full light field solution and the company stresses this is not the case. Of course, how they make immersive live events does not matter to the public – what matters is that the experience is compelling and enjoyable.

The NextVR solution is effective and no more processor-intensive than, say, a PC game engine VR experience. In the mobile version of their application they are “killing the decoder,” jokes Cole: “the thermal load we put on the phone is about [as] heavy as playing a pretty hardcore game or VR game on your GearVR” (2880 x 1440 @ 60 Hz). NextVR content can be streamed at between 4 and 8 megabits per second “with very, very precise compression,” says Cole.
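The quoted figures imply a very high compression ratio. This sketch assumes the stream is decoded to the full 2880 x 1440 display at 60 frames per second and compares it with uncompressed 24-bit RGB; NextVR's actual encoded resolution and frame layout are not stated, so treat the result as an order-of-magnitude illustration.

```python
WIDTH, HEIGHT, FPS = 2880, 1440, 60   # display figures quoted in the text
RAW_BITS_PER_PIXEL = 24               # assumption: uncompressed 8-bit RGB

def bits_per_pixel(bitrate_bps, width=WIDTH, height=HEIGHT, fps=FPS):
    """Average number of bits the stream spends on each displayed pixel."""
    return bitrate_bps / (width * height * fps)

def compression_ratio(bitrate_bps):
    """How many times smaller the stream is than raw 24-bit video at the same size and rate."""
    return RAW_BITS_PER_PIXEL / bits_per_pixel(bitrate_bps)

for mbps in (4, 8):
    bps = mbps * 1_000_000
    print(f"{mbps} Mbps: {bits_per_pixel(bps):.4f} bits/pixel, about {compression_ratio(bps):.0f}:1")
```

At these rates the stream spends only a few hundredths of a bit on each displayed pixel, roughly 750:1 to 1500:1 compression, which gives some sense of what Cole means by “very, very precise compression”.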

Like light field solutions, NextVR has a solution that allows six degrees of freedom (positional tracking), but it also allows for applications “which are not binocular stereo,” comments Cole, referring to things such as Magic Leap. Cole also points out that, ideally, such MR solutions want an entire volumetric solution, which is not really feasible from the standpoint of acquisition and rendering. But a hybrid of real-time stereo resolution and a pre-calculated map does offer real potential for such future volumetric applications, Cole believes. “We can render our video experience into an accurate enough volume to make it work inside one of these non-stereo, rendered technologies.”