fxguide is here at FMX in Stuttgart. The conference this year is once again diverse in its offerings. Later in the week VR will play a large part in the program, along with big VFX films like Age of Ultron and also virtual humans. Day One at FMX was heavily focused on rendering – with presentations from DNeg, Weta Digital, Walt Disney Animation Studios, Animal Logic and Pixar. In addition, a huge crowd saw Weta Digital senior visual effects supervisor Joe Letteri run through a history of The Hobbit and 20 years of the visual effects studio. fxguide was also able to speak to Letteri on camera – you’ll find that in an upcoming fxguidetv episode.

FMX is really an incredible event – each day there are at least 4 main tracks of talks taking place (often presenting the problem of which talk to go to!). Plus there are a number of other smaller, more focused sessions on tech from companies like Chaos Group or Side Effects. Then there are the recruitment booths, educational institutions, screenings, workshops and masterclasses. And of course FMX is a great place to meet and discuss tech with professionals, students, companies and pretty much anyone interested in the field. We can’t recommend the conference highly enough.

Into the Wormhole – DNeg

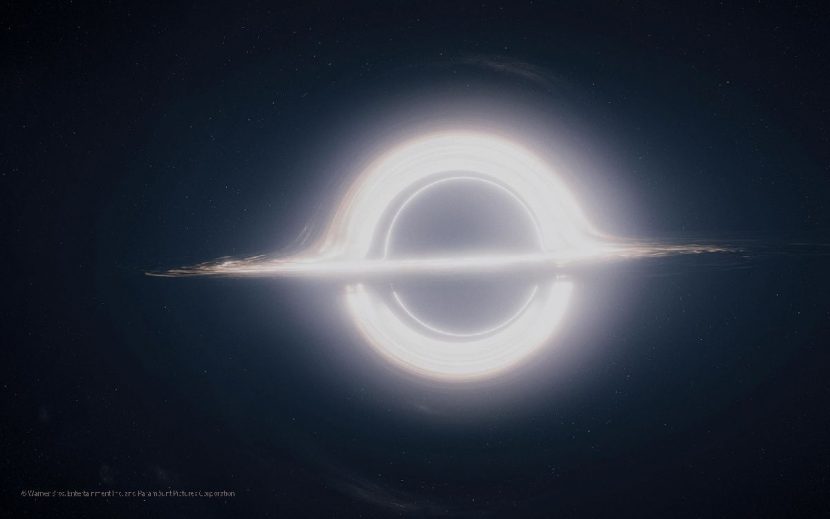

DNeg’s chief scientist Oliver James recounted his experiences in helping to realize the wormhole and blackhole imagery for Interstellar. James said he essentially had to learn general relativity, apply it to blackholes and wormholes and then write a renderer. He credits scientific advisor Kip Thorne with making the shots possible – Thorne shared with the team at DNeg 1000, 12 papers, 9 Mathematica notebooks and 2 peer reviewed articles – the result was that the imagery started from a real and scientific base.

Thorne actually designed a special ‘DNeg wormhole’ just for the film, and then James led the development of what was called the Double Negative Gravitational Renderer – taking the science on how the path of light is affected as it goes past a blackhole – to render the shots with the appropriate gravity effects.
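
As a rough illustration of how gravity bends a light path, the textbook weak-field result gives a deflection angle of 4GM/(c²b) for a ray passing a mass M at closest-approach distance b. The sketch below only evaluates that approximation, which holds far from the event horizon. It is not DNeg's renderer, which the talk describes as built on the full science of light paths near a blackhole. The function names are hypothetical.

```python
import math

G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0        # speed of light, m/s
SOLAR_MASS = 1.98847e30  # kg

def schwarzschild_radius(mass_kg):
    """Event horizon radius of a non-spinning black hole, in metres."""
    return 2.0 * G * mass_kg / C**2

def weak_field_deflection(mass_kg, impact_parameter_m):
    """Deflection angle in radians, 4GM/(c^2 b).

    Only valid when the impact parameter is much larger than the
    Schwarzschild radius; close to the hole the full geodesic
    equations are needed instead.
    """
    return 4.0 * G * mass_kg / (C**2 * impact_parameter_m)

# Example: a ray grazing the Sun (radius about 6.957e8 m)
angle_rad = weak_field_deflection(SOLAR_MASS, 6.957e8)
angle_arcsec = math.degrees(angle_rad) * 3600.0
```

For a ray grazing the Sun this gives a deflection of roughly 1.75 arcseconds, the classic light-bending value. Near a blackhole the bending is far stronger and this formula no longer applies.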

When Thorne wrote an early paper on the subject, DNeg had completed a test within a week that showed a spinning blackhole. “It was a horrible image with lots of artefacts!” noted James. But, he says, “Things quickly got better.”

One of the challenges was to represent black hole accretion disks. After seeing an early test, director Chris Nolan asked if it could look more volumetric. DNeg used a Stanford bunny-like CG object to show how the imagery was warped as it spun around a black hole – achieved by designing this in Houdini, then putting it through the Gravitational Renderer, where it was warped by real gravitational lensing.

To show stars being properly warped, too, DNeg downloaded NASA’s Tycho-2 catalogue of 2.5 million of the brightest stars. With that they could calculate where each star appears in the viewport and then draw them – it meant that as you zoomed in the stars would hold up. The stars had to be ray-traced and treated with analytic motion blur to look most effective. Even then, a final shot of The Endurance passing through the wormhole was slightly art directed to add parallax and volumetrics. Lens flares on stars were convolved HDR images, captured from real production lenses by James in a dark cellar box.
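
The step of calculating where each star appears in the viewport can be sketched for the simple, unlensed case. The code assumes a pinhole camera looking at right ascension 0, declination 0, with screen-up towards the celestial north pole. The camera convention, the function names and the parameters are all assumptions for illustration. The gravitational warping, ray tracing and motion blur described in the talk are not modelled.

```python
import math

def radec_to_unit_vector(ra_deg, dec_deg):
    """Convert catalogue coordinates (degrees) to a unit direction vector."""
    ra, dec = math.radians(ra_deg), math.radians(dec_deg)
    return (math.cos(dec) * math.cos(ra),
            math.cos(dec) * math.sin(ra),
            math.sin(dec))

def project_star(ra_deg, dec_deg, width, height, fov_deg):
    """Return the (x, y) pixel position of a star, or None if not visible.

    The camera looks along +X, with +Y mapped to screen-right and +Z to
    screen-up; fov_deg is the horizontal field of view.
    """
    x, y, z = radec_to_unit_vector(ra_deg, dec_deg)
    if x <= 0.0:
        return None  # star is behind the camera
    focal = (width / 2.0) / math.tan(math.radians(fov_deg) / 2.0)
    px = width / 2.0 + focal * y / x
    py = height / 2.0 - focal * z / x
    if 0.0 <= px < width and 0.0 <= py < height:
        return (px, py)
    return None  # outside the viewport

# A star straight ahead lands in the centre of a 1920x1080 frame.
centre = project_star(0.0, 0.0, 1920, 1080, 90.0)
```

Because each position is computed from the catalogue coordinates rather than read from a pre-rendered star map, narrowing `fov_deg` to zoom in keeps the stars as sharp points.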

James acknowledged the incredible complexity of the task, including the fact that they were somewhat locked in to the approach since early renders were used on set for projection into the windows of the live action space vehicles. “If it didn’t work,” said James, “the Plan B was to take the reference imagery, take our renders and then ‘do something’ in Houdini.”

But, he added, the results felt real because they were real, and resulted in beautiful shots. “If you used the real science quite often you get something you didn’t expect to find.”

You can read our previous coverage on Interstellar starting here.

Hyperion – Walt Disney Animation Studios

WDAS Principal Software Engineer Brent Burley took audiences through the history of Disney’s new path-traced renderer, Hyperion, used on Big Hero 6.

Burley suggested the impetus for building a new renderer (Disney had been working with Pixar’s RenderMan) was the need to do more complex imagery. But their approach had also always been to ‘fight complexity with simplicity’. This is something they had done with Ptex and the Disney BRDF. Burley says his Aha moment for a new path tracer that involved large, sorted ray batches along with deferred shading came in November 2011. He still has the email he wrote to CTO Andy Hendrickson about it. Hendrickson’s reply was “Wow, please come see me this is a pretty big development.”
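
The idea of large, sorted ray batches with deferred shading can be illustrated with a toy sketch. It is not Hyperion's implementation. The names `direction_bucket`, `trace_batch`, `intersect` and `shade_object` are hypothetical, and the sort keys are deliberately crude. The point is only the ordering: rays are sorted before intersection, hits are recorded without shading, and the hits are then sorted by object so that each object's shading data is visited in one run.

```python
from collections import namedtuple
from itertools import groupby

Ray = namedtuple("Ray", "pixel origin direction")
Hit = namedtuple("Hit", "pixel object_id")

def direction_bucket(ray):
    """Coarse sort key: the sign octant of the ray direction."""
    dx, dy, dz = ray.direction
    return (dx < 0, dy < 0, dz < 0)

def trace_batch(rays, intersect, shade_object):
    """Trace one batch of rays with sorted, deferred shading.

    intersect(ray) returns an object id or None (caller-supplied);
    shade_object(object_id, hit) returns a shaded value (caller-supplied).
    """
    # 1. Sort rays so that similar directions are traced together.
    rays = sorted(rays, key=direction_bucket)
    # 2. Intersect only; shading is deferred until all hits are known.
    hits = [Hit(ray.pixel, intersect(ray)) for ray in rays]
    hits = [hit for hit in hits if hit.object_id is not None]
    # 3. Sort hits by object so each object is shaded in one contiguous run.
    hits.sort(key=lambda hit: hit.object_id)
    results, shading_order = {}, []
    for object_id, group in groupby(hits, key=lambda hit: hit.object_id):
        shading_order.append(object_id)
        for hit in group:
            results[hit.pixel] = shade_object(object_id, hit)
    return results, shading_order
```

In a production setting the pay-off of this ordering would come from coherent memory and texture access across very large batches. The sketch shows only the control flow.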

Disney embarked on some experiments, including using a tower scene from their film Tangled, and were incredibly surprised by the results. They published ‘Sorted deferred shading for production path tracing’ at EGSR13 and then went head first into building the production renderer. The challenge was that Hyperion’s development was to coincide with production on Big Hero 6.

One of the first things Disney did was create a lighting lab to validate the renderer – this is where real world objects are photographed and the renderer is then used to match them. The hardest match, notes Burley, was a ping pong ball since it had a lot of light bouncing around inside and coming through. They ultimately nailed the look for the ball and this turned out to be particularly useful and relevant for Baymax – a white, plastic shape that looked fantastic using 10+ bounces of indirect lighting.

Halfway through the first year of development, the team passed the ‘No plan B’ and ‘point of no return’ marks. Hyperion was still missing motion blur, volumes and the ability to make final quality images. But, says Burley, “It had already invaded our culture and we were so committed to the quality. It was determined that continuing with Hyperion was the only way we could finish the movie.” Some fallbacks were developed – Disney built a post-process motion blur system and a depth of field pipeline, and was prepared to render volumes in another renderer (ultimately they never needed to do these things).

One issue Disney had to deal with was noise – the path tracer could reproduce any lighting effect (quickly) but it also generated noise, and lots of it. Their solution was sampling via a decision tree. “You sample things with probability, and eventually we’d sample enough,” says Burley.

When the team realized that there could be 500,000 lights required in some scenes, including lights with emission profiles, they then needed to develop a light database where for various cached points in the scene the renderer determined which lights contribute significantly.
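
The two ideas above, sampling with probability and pre-selecting the lights that matter at a point, can be sketched together. This is an illustrative toy, not Disney's light database. The inverse-square contribution estimate, the 1 per cent cut-off and all function names are assumptions.

```python
import random

def estimate_contribution(light, point):
    """Crude estimate: intensity over squared distance (ignores occlusion)."""
    (lx, ly, lz), intensity = light
    px, py, pz = point
    d2 = (lx - px) ** 2 + (ly - py) ** 2 + (lz - pz) ** 2
    return intensity / max(d2, 1e-9)

def significant_lights(lights, point, fraction=0.01):
    """Return (index, estimate) pairs for lights that matter at this point.

    A light is kept when its estimate is at least `fraction` of the total.
    The result could be cached per scene point.
    """
    estimates = [estimate_contribution(light, point) for light in lights]
    total = sum(estimates)
    return [(i, e) for i, e in enumerate(estimates) if e >= fraction * total]

def sample_light(candidates, rng=random):
    """Pick one light with probability proportional to its estimate.

    Returns (index, probability). Dividing the sampled light's true
    contribution by the probability gives an estimate of the summed
    contribution of all candidates that is correct on average.
    """
    total = sum(e for _, e in candidates)
    u = rng.random() * total
    running = 0.0
    for index, estimate in candidates:
        running += estimate
        if u < running:
            return index, estimate / total
    index, estimate = candidates[-1]  # guard against rounding at the end
    return index, estimate / total
```

Dropping the weakest lights outright loses a little energy in exchange for far fewer candidates per point. A production renderer would need a more careful scheme than this fixed cut-off.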

The next step was filtering to further reduce noise – a post process after rendering. For subsurface scattering such as on a character’s skin, Disney incorporated a particular diffusion profile in the filtering. They advanced this for the short Frozen Fever where subsurface on the ice creature was important. Work is continuing on the next feature, Zootopia, which requires a hybrid hair / fur approach in the path tracer (more will be discussed at SIGGRAPH).

Ultimately, Disney’s use of Hyperion on Big Hero 6 was a successful one. The final images were rendered just 1.15 times (that means only 15 per cent of the frames had to be re-rendered). All of the shots were approved noisy with only a final filtering required once. Disney lit and rendered 90 per cent of the frames of the film in less than four months – providing more time for creative changes. Still, the studio was able to create 340 million unique items, render with 500,000 lights and generate 10.5 billion rays per frame.

“Should a company do two ray tracers?” concluded Burley, in response to a question about why Disney was not using RenderMan. He says developing Hyperion has meant more cross-talk between the studios and in fact Christophe Hery from Pixar added that his studio and Disney are sharing tech and that Pixar are using Disney’s de-noising filter for Finding Dory.

Here’s our earlier coverage on Hyperion.

A New Path Trace Approach for Finding Dory – Pixar

Our thanks to Alex Kucera for contributing this write-up.

Pixar senior scientist Christophe Hery took us on a journey through the history of RenderMan, from the basics of RSL (RenderMan Shading Language) with its empirical shading, to RSL 2.0 and the first steps in physically plausible shading, to the new RIS path tracing engine that allows physically accurate image creation.

The talk started with RSL 1.2, which basically was state of the art in big budget productions up to 2005. It had the hallmarks of traditional computer graphics: light sources without any size or falloff (in fact, ILM usually didn’t use falloff on lights at all even though an option for realistic light falloff existed) and of course idealized materials that had lots of dials for creative control without any regard to physical accuracy or energy conservation. One could easily create a surface that was brighter than any of the lights hitting it, for example.
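
Energy conservation can be illustrated with a minimal check. The sketch assumes a material described by per-channel diffuse and specular reflectances, which is a simplification chosen for illustration and not a RenderMan data structure. A surface reflects more light than it receives as soon as the two lobes sum to more than 1 in any channel.

```python
def is_energy_conserving(diffuse, specular):
    """True if no channel reflects more light than it receives."""
    return all(d >= 0.0 and s >= 0.0 and d + s <= 1.0
               for d, s in zip(diffuse, specular))

def conserve_energy(diffuse, specular):
    """Scale both lobes down, per channel, so they sum to at most 1."""
    out_diffuse, out_specular = [], []
    for d, s in zip(diffuse, specular):
        total = d + s
        scale = 1.0 / total if total > 1.0 else 1.0
        out_diffuse.append(d * scale)
        out_specular.append(s * scale)
    return tuple(out_diffuse), tuple(out_specular)

# A freely dialled material that reflects 130 per cent of incoming light
too_bright = ((0.8, 0.8, 0.8), (0.5, 0.5, 0.5))
fixed_diffuse, fixed_specular = conserve_energy(*too_bright)
```

A physically plausible shading model builds this constraint in, so the artist never has to police it by hand.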

That creative freedom comes with a lot of complexity, though, when the aim is something that can be considered “correct” in terms of realism, which is a requirement when you want to integrate your renderings into filmed footage.

To get to an acceptable result quicker and with fewer complications, Pixar started to implement physically plausible rendering which was first used in Iron Man and later in Monsters University to great effect. This proved that both realistic and stylized results were possible with this physically more accurate model despite it having fewer dials.

While RSL 2.0 was quite successful it still had a few problems. Mainly, it slowed down in complex raytracing setups due to Ray Hit Shading taking a lot of time, and memory usage exploded with complex scenes. This led Pixar to develop a path tracing approach and a new render engine called RIS.

RIS allows for even simpler controls, while rendering more accurately, faster and with less memory than the RSL approach. Shaders are now fully integrated but very modular, which allows for easy debugging or switching out whole blocks to quickly get different results.

Finding Dory, which is currently in production at Pixar, is the first movie that is using RIS. In fact, Dory is the first use of what Christophe calls the RUKUS pipeline: RIS, USD, a new interchange format developed at Pixar similar to Alembic, and Katana. Christophe still hasn’t figured out what U and S stand for…

Since RIS has the ability to do progressive rendering, look development and final rendering have accelerated quite a bit. An artist can set up a complex shot, put it on the farm and then “check in” after say 20 minutes and get a good idea of what the final images are going to look like, as the whole image is already visible, albeit very noisy. Then they can just let it “cook” on the farm until an acceptable noise level is reached. The remaining noise is filtered away as a last step, using a technology that Pixar was able to adopt from Hyperion, Disney’s new path tracing engine; it will be part of the public RenderMan release in the future.
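
The “cook until an acceptable noise level is reached” workflow can be sketched for a single pixel. This is a toy model and not RIS. It assumes noise is measured as the standard error of the running mean, and `sample_fn` stands in for tracing one path. The class and function names are hypothetical, and the final denoising filter is not included.

```python
import math
import random

class ProgressivePixel:
    """Running mean and variance of a pixel's samples (Welford's method)."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # sum of squared deviations from the mean

    def add(self, sample):
        self.count += 1
        delta = sample - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (sample - self.mean)

    def standard_error(self):
        """Noise estimate for the current mean; infinite until 2 samples."""
        if self.count < 2:
            return float("inf")
        variance = self._m2 / (self.count - 1)
        return math.sqrt(variance / self.count)

def render_until_clean(sample_fn, noise_target, max_samples=100_000):
    """Keep adding samples until the noise estimate drops below the target."""
    pixel = ProgressivePixel()
    while pixel.count < max_samples and pixel.standard_error() > noise_target:
        pixel.add(sample_fn())
    return pixel

# The mean is usable at any time; more samples only refine it.
rng = random.Random(0)
pixel = render_until_clean(rng.random, noise_target=0.01)
```

Because the error of the mean shrinks with the square root of the sample count, halving the noise costs about four times as many samples. That is why a final filtering pass is attractive compared with simply rendering for longer.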

This means there is less human (expensive) time needed to set up a shot as there is much less trial and error. Artists always have a really good idea of what the final result is going to look like.

Sadly, Christophe wasn’t able to show us final imagery of Dory, as production isn’t that far along yet, but we did see a few shots of Dory and Nemo in their new look and they do look much improved from their old selves. We saw them being shaded, lit and look dev’ed in realtime in Katana and the experience looked very smooth and seamless.

See fxguide’s previous coverage of RIS here.

The Hobbit and 20 years of Weta Digital – Joe Letteri

This was perhaps the first absolutely full session of FMX – a packed crowd turned out to hear Weta Digital senior visual effects supervisor Joe Letteri recount the history of the studio and also deliver some insightful comparisons between VFX on the original Lord of the Rings trilogy and The Hobbit films. Letteri even joked that Weta is still working on the Extended Cut for the last film…

The talk began with nuanced breakdowns showing the forced perspective approach done for Fellowship – enabling Gandalf and Frodo to converse. The production had to take an alternative approach for The Hobbit because of shooting in stereo – here a replica greenscreen set of Bag End was built for Gandalf to act in, with two moco cameras slaved together to film the scene, and a live stereo comp delivered to Peter Jackson to check on line-ups. Weta Digital then spent months doing comp clean-ups, adding in sections of the set and massaging the plates. “As often is the case,” said Letteri, “it was one of the first scenes to shoot, and one of the last to deliver.”

We next saw the miniatures work for Rivendell in LOTR and the completely CG locations made for The Hobbit. Similarly, Letteri ran through the CG troll creations for the new films and how they were captured live and mixed live. One of the most fun breakdowns next was how Legolas’ legs were replaced in both trilogies.

Letteri then moved onto Gollum – perhaps one of the two trilogies’ finest creations. He praised Andy Serkis for bringing so much life to that character – the actor was originally only brought on as a voice performer “but pushed Peter Jackson to allow him to get into the scenes with the other actors.”

Back on LOTR, Serkis would wear a skin tight leotard and interact with the actors, but then replicate the performance on a mocap stage. Letteri says one of the simple breakthroughs was actually treating that extra motion capture like a real shoot, on a proper stage and with professional takes. Weta Digital then incorporated the performance into the digital character, with a ton of keyframing. A major factor, too, was the advent of subsurface scattering on Gollum’s skin – greatly improving the realism.

On The Hobbit, so much performance capture development had been completed by Weta Digital by then that Serkis could be captured live on set (his face too). Plus the studio had Tissue – an approach to creature building that relied on full anatomically correct under-structures to get the right muscle and skin dynamics.

Letteri was later asked about the common debate around digital humans/real actors and motion capture and the role of animators. He was up front in his response, saying, “Andy is what gets the headline. We’re not the ones the press wants to talk to. I’ve been in interviews with Andy where they’ve framed me out.” Letteri also mentioned the possibility of digital actors receiving awards, noting that “the Academy has resisted awarding this to an actor because they know how much we do to the performance. I don’t see the Academy going there anytime soon.”

However, Letteri clearly acknowledges the role of Serkis in creating Gollum (and of course many other characters Weta Digital has worked on). “Actors still give you a great performance,” he said. “If you did Gollum without Andy, you’d have a different character. You may get that by doing straight animation but I find you get a great performance with actors. Actors work in the moment – they are very physical and visceral – they bring drama to the scene. We’re not replacing actors but bringing actors into the process.”

Other great breakdowns Letteri shared included the Goblins and Goblin-town, Smaug and Lake-town, the barrels sequence (including tests done at a waterpark), Azog and Bolg and the enormous battle at the end of Five Armies. Weta Digital provided Peter Jackson with virtual production methods for staging the battle – using a jury-rigged Sony HDV camera (it had to match a camera Jackson was familiar with and even when it was trashed a similar body was molded to match). In fact, Letteri finished his talk answering a question about what’s in the next 20 years for Weta Digital – he said: “The track we’re on with virtual production will define a new movement in filmmaking.”

And it was clear the audience couldn’t wait to see what Weta delivers next.