The GPU Technology Conference (GTC) has grown considerably over the years, a reflection of the increasing use of GPU processing. For the vfx and production community, this tech is has become an incredibly important part of the process — one could argue critical part — and its why fxguide is covering the event.

This holds especially true for NVIDIA GPUs, being widely adopted across numerous industries. In 2008, there were 100 million installed CUDA GPUs and 150,000 CUDA programming kit downloads. Today, there are over 430 million installed CUDA GPUs and 1.6 million downloads of CUDA

The conference itself has also grown in size and scope, with tracks in industries from design to medical, entertainment & media to finance. This is a high level professional conference for anything NVIDIA GPU. The second day of the conference opened today in San Jose with a keynote by Jen-Hsun Huang, Co-Founder, President and CEO of NVIDIA. There were several items of interest to the vfx and production community, including key demos and major product announcements.

GPU Roadmap

Huang laid out the GPU roadmap, which continues the trend of doubling the GFLOP performance per watt every two years. This is an important point to NVIDIA — they are very much focused on making the processing much more energy efficient over time. It’s a big deal and an important distinction for the future. This is something frankly you don’t see much in CPU development from Intel, especially on the desktop. As installations such as large render farms scale in size, this differentiation will become critically important (it already is). Especially if some of the shortcomings dealing with memory access can be addressed in the GPU in the future. And it looks like it will be dealt with.

The next iteration, Maxwell, is due in 2014 and aims to have “unified virtual memory”. This makes it possible for the GPU operations to read and see the CPU memory and the CPU to see the GPU memory.

What does this tech mean for you?

Effectively, the bottom line is that it should make it easier to program for the GPU, which means better and more efficient tools for artists.

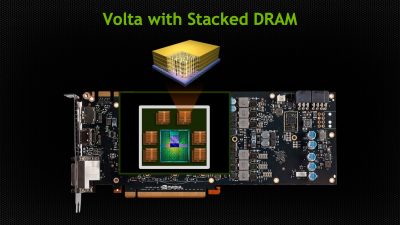

The next step, Volta (due date was not given), is a nod to Alessandro Volta. Volta is credited with inventing the battery, and Huang says the name conveys the fact that they aim to make the architecture even more energy efficient. But the big news in Volta is the memory.

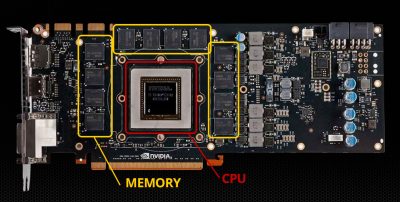

In the current Kepler architecture, the GPU chip is surrounded by the memory. And while the bandwidth of the memory on a GPU is greater than that on the CPU, there simply isn’t enough memory on the GPU for many of the tasks needed in vfx and post. Moving data from the GPU to the memory chip on the card is inefficient as the card itself has speed limitations due to its size and it actually takes (relatively) considerable power consumption to do the move.

In Volta, the new generation will come with something new called “stacked DRAM”. This will solve having to get memory off the actual GPU chip and onto the surrounding board. The solution is to actually move the memory and stack multiple memory chips it on top of the GPU on a silicon substrate (a slice of silicon). They then cut through the memory, directly connecting the memory to the GPU.

What does that tech mean to you?

How does 1TB a second bandwidth through the GPU sound? Pretty damned fast ! However, getting the data to the GPU is another matter, – but at least it appears as though the GPU itself will no longer be a bottleneck.

FaceWorks

There was a bit of eye candy, with a demonstration of real-time GPU rendering of a talking human, utilizing a bit of tech NVIDIA calls “FaceWorks”.

In partnership with Paul Debevec and the crew at ICT, the initial data was acquired on the LightStage. However, that data ends up being around 32GB in size, which is far too much to be dealt with by a GPU’s limited memory bandwidth (though stay tuned for info about upcoming tech).

The data is reduced in size by various methods, one way being to pare down the textures by breaking it into tiles and then discarding tiles which are nearly identical to one another. In the end, through texture and mesh reduction the data can be reduced to around 300MB. While it wasn’t explicitly mentioned, the final HD resolution of the renders also impacts the needed resolution of the data.

The actual rendering is done through an 8000 instruction program which handles articulating geometry to transition between poses, as well as pixel processing of the renders and comping over the various HDR backgrounds. All in all, it takes close to two teraflops of performance, which is actually only about half of the capacity of the recently introduced GTX Titan. The end result is impressive. Admittedly, it wouldn’t be considered final render for motion picture work, but this is a real time talking head that looks pretty damned good. It’s complete with special rendering tweaks for the eyes as well as the obligatory sub surface scattering.

GRID VCA

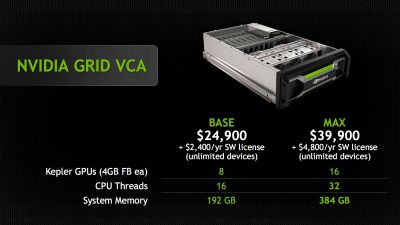

Huang also introduced a new bit of hardware called the GRID Virtual Computing Appliance, or GRID VCA . This is a four rack unit high system that comes in two varieties:

- 8 GPU system with 16 threads of Xeon CPU and 192GB of system RAM ($24,900 + $2,400/year for software license).

- 16 GPU system with 32 threads of Xeon CPU and 384GB of system RAM ($39,900 + $4,800/year for software license)

NVIDIA already has enterprise level GRID hardware intended for very large installations with major IT support. The new GRID VCA is intended for small to medium size installations, allowing 8 concurrent connections for the base level system and 16 concurrent for the larger system.

So what’s the idea behind it?

Basically the unit has a virtualized environment on the VCA hardware set up by NVIDA at shipping to run a software application of some kind, ideally a GPU-intensive application. The end user then uses a lightweight client app to connect over the network to the VCA from their Windows, Linux, or OSX-based system. With the client app, it appears to the end user as though they’re running the application on their local machine….but in reality it is running on the GRID VCA in the datacenter. What is happening is that the graphics card “output” in the VCA is being sent over the network to the client as data instead of driving a graphics card.

If you’re familiar with VNC (or screen sharing), this is quite similar to that. The benefit is that as an artist you could be using an underpowered computer such as a Mac Book Air, yet effectively running a full CUDA enabled version of After Effects and be able to take advantage of ray traced rendering. They demoed at the keynote, “running” 3dsMax on your MacBook Pro…an app which doesn’t run on OS X.

This kind of tech is often used at vfx facilities to more easily manage installs, version changes, and even remote collaboration. Servers in the machine room run production software in virtualized systems and the artists computers are simply viewing a display of the virtualized desktop and app. This also helps with a major concern at facilities: media security. Because artists don’t actually have direct access to clips on their system, facilities can more easily meet the stringent security requirements studios place on their footage.

In the max system, there are eight graphics cards, each with two GPUs. In the initial shipping version only one GPU may be assigned per session, so a user can’t take advantage of utilizing multiple GPUs in a single session. Storage is via a NAS, as there is no built in storage and connectivity to storage is through 10 GigE.

The appliance is specifically designed for GPU-heavy applications. The specs list up to 16 concurrent users, but that many concurrent users could be problematic for many applications in the vfx industry due to the limited available CPU cycles (16 or 32 threads). So even though Adobe Premiere was demoed on stage for the keynote, the VCA isn’t ideal for an app like Premiere because it uses a lot of CPU and also a lot of storage bandwidth. 3D applications are a more appropriate use case, as would setting up several render nodes for After Effects’ raytracing engine. NUKE also wouldn’t be appropriate due to it’s use of CPU.

In the enterprise version of GRID, several virtualization solutions can be used, such as Citrix, VMWare, and Microsoft. For the new appliance version, NVIDIA created their own custom connectivity which is designed to be used over a LAN. The system comes totally pre-configured for user sessions. The goal was to make it easier to install in a facility that didn’t have a large IT staff.

OTOY Octane Renderer

Jules Urbach, OTOY chief executive officer, took to the stage today at the GTC keynote to show off their cloud-based Octane rendering solution. He demoed using their new service, utilizing 122 GPUs to render a fully ray traced scene from a Hasbro Transformers commercial in a 3dsMax viewport. As he moved items in the scene, it would only take about a second to fully render the frame.

fxguide covered the Octane renderer in an article earlier this year, but in brief it is the first unbiased 3D ray traced renderer to work exclusively on the GPU and more specifically only on NVIDIA CUDA GPUs. It achieves phenomenal results, with ten to fifty time speed increase over CPU based unbiased systems….all without sacrificing quality.

The “new” news related to the presentation is that pricing for the cloud-based Octane renderer was announced today. Their initial data center will have room for ten racks and they’ll be able to fit 160 GPUs per rack, connected entirely by infiniband. When the cloud rendering service enters beta in a couple of weeks, the pricing model they’ll be starting with pegs a maximum cost of $1/hr for 1 GPU.

They admit this is all new and they’re very open to looking at other pricing methods. For instance, they might also look to a spot model like Amazon AWS. This means that GPUs which weren’t currently being used could be offered at a lower price point to the end user, but the user would get bumped back in a holding pattern if standard jobs came through. Looking towards the future, Urbach says he expects the price to drop and the performance to increase, saying that one could render a :30 HD scene for a few dollars by as soon as next year. In a session later in the day, he also showed their realtime research game renderer, Brigade, and it was quite impressive. It showed fast realtime ray-traced renders while a car was being driven through the scene, complete with game engine physics.

We’ll have more coverage this week from GTC, including notes from Douglas Trumbull’s HFR presentation, some non-vfx tech covering live graphics for The America’s cup, and GPU tech being used at Pixar.

Nice one John! Thanks.

cant wait love nvidia

And here’s their whole keynote:

http://www.youtube.com/watch?v=A84v7lbdcYg&list=SPZHnYvH1qtOY0ZrWQgnQlj4dwGZ1pVgoj

Wonderful advances! Slightly marred by the oversimplification of the VFX process in the octane render video. “You’re not going to understand it when they say it takes two weeks” very much depends on the changes you’re asking for. If you want a simple time of day lighting change, sure, do that in realtime. Rendering’s not the only bottleneck in the pipeline.

Pingback: NVIDIA GPU Tech Conference Report | CGNCollect