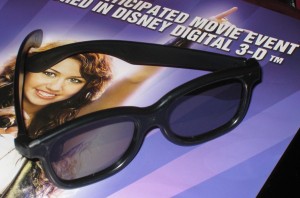

From U2 to Hannah Montana. From Beowulf to Journey to the Center of the Earth. 3D is hot once again. One of the strongest trends in visual effects films today is stereoscopic capture, post-production and projection. Technology is catching up just as theatre owners are looking for ways to attract and hold a media-saturated audience. We explore the theory and practice of digital 3D stereo imaging.

Introduction

Stereoscopic cinema failed in the 1950s and 1980s, when the mechanical and photochemical variables were too great and maintaining alignment was impossible. Digital acquisition, post-production and projection, however, allow images to be crafted with far greater precision, helping to avoid the eyestrain and headaches that plagued earlier attempts. Earlier systems could work well if they were very precisely aligned, but films were often exhibited with alignment flaws that left audiences literally feeling sick.

For a while, IMAX and theme park theaters were among the only places to see 3D films. Exhibitions such as James Cameron’s Terminator 2 3D, shot in 65mm stereo, proved popular with audiences. The box office of IMAX films was healthy, with Into the Deep grossing $71.3M, Space Station $95.1M and T-Rex 3D $98.1M. On the whole, however, regular cinemas did not embrace 3D, due in part to the cost of specialized projectors and the lack of good films to justify the expense. There were exceptions such as Spy Kids 3-D, which grossed $190M, but such films often presented only some of their scenes in 3D. Spy Kids 3-D used the older “anaglyph” technique, in which the 3D effect comes simply from differently coloured gels for the left and right eye.

3D is regaining popularity thanks to new digital control. “The main reason why stereo cinema is likely to work this time around is because we have unprecedented control over the precise alignment of the left and right images,” explains VFX supervisor and 3D workflow expert Tim Baier. This is coupled with dramatically improved digital projectors that can now run at frame rates high enough to ‘flash’ both stereo images from a single projector, using a fast-switching polarizer or shutter glasses. Combined with newer glasses technology, these advances produce much better 3D.

This article is concerned only with the latest wave of Digital Cinema 3D stereo production and post-production, since it is this wave that seems to have reached the level of quality and technical control needed to produce technically correct films. Audiences appear to agree, following on the heels of this year’s U2 3D, which swept Sundance, and Hannah Montana 3D, which opened on Super Bowl weekend and grossed $31.1M in 683 theaters. That is a record for any movie on Super Bowl weekend, and its $62M gross to date is a record for any 3D movie and for any concert movie. By comparison, Beowulf opened to $27.5M on 3,153 2D and 3D screens. U2 3D was produced by 3ality Digital using a very impressive live-switching setup in a 3D stereo OB (outside broadcast) van. The film’s stereography is outstanding, but interestingly U2 3D performed very poorly at the box office, indicating that 3D in and of itself is by no means a guarantee of success.

Several major feature films are in production, including James Cameron’s newest epic, Avatar, and Journey to the Center of the Earth, which is to be released on July 11th. In animation the market is moving even faster. On the heels of the 3D Meet the Robinsons, Pixar announced several new 3D releases over the next few years. In addition to next year’s Up (co-directed by Pete Docter and Bob Peterson), Toy Story 1-3D, Toy Story 2-3D and Toy Story 3-3D will be released in 2009/2010 on staggered dates. These will use REAL D projection technology, which one Pixar insider described as “technology that appears to [be] becoming the standard for digital stereoscopic projection”. Interestingly, Disney is also planning a 3D sequel to Tron for 2011, and Joseph Kosinski is in talks to direct.

This week’s fxpodcast: Tim Baier

In this week’s fxpodcast we talk to Tim Baier, a VFX supervisor and 3D researcher. Baier started his career as a broadcast camera operator, then moved into TV post-production, working in linear tape and Avid before becoming a senior Henry and senior Inferno artist. In 2000 he moved into features, working at places such as Mill Film, MPC, Weta, Animal Logic, Fuel International, Rising Sun Pictures, Photon, Meteor and others, before switching to full-time 3D stereo production.

To identify the critical stereo issues and explore the creative opportunities, Baier spent almost three years on an R&D project that culminated in a presentation about the fascinating 1.7-billion-year-old geology of the Arkaroola Wilderness Sanctuary and the Flinders Ranges. “From records of ice ages, meteor impacts and orbital speed of the moon to the very first animal life on Earth, South Australia has a window into deep time and a treasure of global significance,” explains Baier. Using DSLRs to shoot time-lapse, stop-motion and stills, Baier acquired imagery in 4.3K RAW format and then post-produced it for a 2K projection master. “My project about the Flinders Ranges geology received an exceptionally enthusiastic response,” he explains in the podcast.

To acquire some of the footage, Baier piloted an ultralight aircraft and photographed with a stills camera. By altering the distance between the cameras, the viewer’s sense of scale can be manipulated to enlarge or miniaturize a scene (discussed further below). Using the ultralight and hyper-stereo techniques, Baier depicted aerial landscapes with more depth than would have been possible with cameras mounted together on a single plane: he found that he could fly in a straight line and photograph at 90 degrees to the direction of flight.

By using just two stills taken some distance apart along the flight path, he effectively obtained a stereo pair separated by a vast interocular distance. These were then re-aligned in post-production and could be animated into breathtaking “hyper-stereo” shots. Baier also went the other way, reducing the distance between the lenses to less than that of human vision and using this “hypo-stereo” technique to ‘gigantify’ subjects such as lizards, flowers and crystals. If hyper-stereo makes the large seem like a miniature, hypo-stereo does the reverse: with the lenses close together, small objects seem enormous when projected.
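
Because the two stills come from one aircraft flying a straight line, the stereo baseline is simply the distance flown between exposures. A minimal sketch, assuming a constant ground speed and a perfectly straight track (wind, altitude changes and aircraft yaw are ignored, and the function names are hypothetical):

```python
def baseline_m(ground_speed_kmh: float, interval_s: float) -> float:
    """Distance flown between two exposures, i.e. the stereo baseline in metres."""
    metres_per_second = ground_speed_kmh * 1000.0 / 3600.0
    return metres_per_second * interval_s


def interval_for_baseline(ground_speed_kmh: float, target_baseline_m: float) -> float:
    """Seconds to wait between exposures to achieve a target baseline."""
    metres_per_second = ground_speed_kmh * 1000.0 / 3600.0
    return target_baseline_m / metres_per_second


if __name__ == "__main__":
    # At 90 km/h the aircraft covers 25 m every second.
    print(baseline_m(90.0, 1.0))                # 25.0
    print(interval_for_baseline(90.0, 100.0))   # 4.0
```

In practice any pair of frames from a sequence can be chosen later, so the baseline (and with it the miniaturizing effect) can be picked in post rather than fixed at capture.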

3D Theory

When we view the world, each eye receives a slightly different image because the two eyes sit in different positions. The distance between them is typically 65mm for men and 63mm for women, and clearly less for children, who average about 51mm. Averaging the adult figures gives 64mm, which is generally taken as the standard. This distance between the eyes is called the interocular distance, and without it there is no stereo 3D effect.

To make the world look correct digitally, we therefore need to film a scene with our lenses about 64mm apart. If the cameras are placed further apart, the resulting 3D effect, while still just as vivid, can make the viewer perceive the scene as smaller than normal scale. In extreme cases mountain ranges can look like miniature sets, because each eye gets a much more different point of view than it normally would: the ‘eyes’ are simply much further apart. Our brain, not used to seeing much stereo difference in wide, distant vistas but still fusing the images correctly, assumes the whole scene must be much closer, and so we believe we are looking at a set or a miniature.
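
The miniaturizing effect can be made concrete with a first-order geometric model (a simplification, not a formula from the article). For two parallel viewpoints a baseline b apart, the angular disparity between a near point at distance Z1 and a far point at Z2 is approximately b(1/Z1 − 1/Z2) radians. A scene filmed with baseline b therefore produces the same disparities as the scene shrunk by a factor of 64mm / b and seen with normal eye spacing. This sketch ignores screen size, focal length and convergence; names and numbers are illustrative.

```python
EYE_SEPARATION_M = 0.064  # the article's 64mm average interocular distance


def relative_disparity_rad(baseline_m: float, near_m: float, far_m: float) -> float:
    """Small-angle disparity between a near and a far point seen from two parallel viewpoints."""
    return baseline_m * (1.0 / near_m - 1.0 / far_m)


def apparent_scale(baseline_m: float, eye_separation_m: float = EYE_SEPARATION_M) -> float:
    """Factor by which the scene appears scaled relative to life size (first-order model)."""
    return eye_separation_m / baseline_m


if __name__ == "__main__":
    # A mountain range with ridges at 2 km and 5 km.
    human = relative_disparity_rad(EYE_SEPARATION_M, 2000.0, 5000.0)
    hyper = relative_disparity_rad(64.0, 2000.0, 5000.0)  # 64 m baseline
    print(f"{human:.2e} rad vs {hyper:.2e} rad")
    # The 64 m pair gives 1000x the disparity, the same as a model 1000x smaller
    # viewed with normal eyes, so the range reads as a miniature.
    print(apparent_scale(64.0))
```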

It is this need to get the lenses a head’s width apart that results in complex camera rigs involving mirrors and odd 90-degree mounts. For example, the closest you can normally place two RED One cameras is about twice that distance (125mm), because of the housings, lens covers and cabling. Without additional distance between the cameras (“hyper-stereo”, as setups with very wide interaxials are sometimes known), most people lose much of the perceived stereo relief when the subject is more than 12 to 15 feet from the cameras.

While it is possible to convert 2D images shot with a single normal lens into a pair of left- and right-eye images, this is an extremely labour-intensive process that has only been successfully attempted a few times, normally on specialist films such as The Nightmare Before Christmas. George Lucas is reported to be experimenting with a Star Wars 3D conversion. One approach is to take each discrete object in the shot and map its imagery onto a 3D shape, creating a new 3D scene that can then be ‘filmed’ with a second camera. This becomes much harder with live action, so much so that remastering live-action films in stereo is unlikely ever to be a major source of 3D movies.
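
A simple way to picture the “map onto a 3D shape and re-film” idea is depth-image-based rendering: given a depth for every pixel, each pixel is reprojected into a second camera offset sideways. The toy sketch below does this for one scanline, assuming parallel cameras, a known per-pixel depth and a focal length in pixels (all hypothetical inputs; this is a generic technique, not the method used on any film named above). It also shows why conversion is so labour intensive: areas the original camera never saw come out as holes (None) that an artist must fill.

```python
from typing import List, Optional


def synthesize_second_eye(
    row: List[int], depth: List[float], focal_px: float, baseline_m: float
) -> List[Optional[int]]:
    """Forward-warp one scanline into a camera offset to the right by baseline_m.

    Nearer pixels (smaller depth) win when two pixels land on the same spot,
    and positions nothing lands on are returned as None (disocclusion holes).
    """
    width = len(row)
    out: List[Optional[int]] = [None] * width
    zbuf = [float("inf")] * width
    for x, (value, z) in enumerate(zip(row, depth)):
        disparity = focal_px * baseline_m / z   # pixels; larger for near objects
        new_x = round(x - disparity)            # the right camera sees things shifted left
        if 0 <= new_x < width and z < zbuf[new_x]:
            zbuf[new_x] = z
            out[new_x] = value
    return out


if __name__ == "__main__":
    row = [1, 1, 1, 9, 9, 1, 1, 1]                 # a near object (9) on a far background (1)
    depth = [100.0] * 3 + [2.0, 2.0] + [100.0] * 3
    print(synthesize_second_eye(row, depth, 1000.0, 0.004))
```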

It is much easier to create stereo from fully animated CG footage, as a second camera can be placed in virtual space at a nominal constant distance from the original camera. In fact, renderers such as RenderMan have this built in: if the second camera is rendered at the same time as the primary camera, its render does not take as long as the first. According to Peter Moxom of Pixar, the second eye adds on average only a 10 to 15% overhead compared with rendering a single camera.
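
In a CG pipeline the second eye is just another camera. A minimal sketch of placing a parallel stereo pair around a centre camera, assuming the camera’s right vector is known and the interaxial is in scene units; this is a generic illustration, not RenderMan’s own stereo setup, and the names are hypothetical. Here both eyes are offset by half the interaxial; keeping the original camera as one eye and offsetting only the other is an equally valid pipeline choice.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def stereo_pair(center: Vec3, right: Vec3, interaxial: float) -> Tuple[Vec3, Vec3]:
    """Return (left, right) eye positions offset half the interaxial along the right vector."""
    length = sum(c * c for c in right) ** 0.5
    unit = tuple(c / length for c in right)  # normalise in case 'right' is not unit length
    half = interaxial / 2.0
    left_eye = tuple(p - half * u for p, u in zip(center, unit))
    right_eye = tuple(p + half * u for p, u in zip(center, unit))
    return left_eye, right_eye


if __name__ == "__main__":
    # Camera at head height with +X as its right vector; scene units are metres.
    print(stereo_pair((0.0, 1.7, 5.0), (1.0, 0.0, 0.0), 0.064))
```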

Our eyes do a lot more than act as static cameras: they focus, they zoom, and they independently converge on a single point to help estimate distance. Interestingly, according to Baier, “your eyes take very little notice of the angle that your eyes are pointing as this is an automatic process, just as focus is also an automatic process. So convergence does not in [and] of itself give you distance,” although you are aware when you need to make a major convergence adjustment from close to far or vice versa.

Baier is correct for the cinema experience, but at closer distances the mismatch between focus and convergence can be a big issue for the brain, and it is a major factor for head-worn stereo viewing helmets. At normal screen distances, it is this virtual independence of focus and convergence that allows us to focus on a movie screen 40 feet in front of us while converging our eyes to fuse two offset images into one 3D image.
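
The underlying geometry is simple: eyes a distance e apart that fixate a point at distance d rotate inward by a total vergence angle of 2·atan(e / 2d). A short sketch using the article’s 64mm figure shows how small the convergence angle is at a cinema screen compared with the close range of a head-worn display (the 0.5m headset distance is an illustrative assumption):

```python
import math

EYE_SEPARATION_M = 0.064


def vergence_deg(distance_m: float, eye_separation_m: float = EYE_SEPARATION_M) -> float:
    """Total inward rotation of the two eyes when fixating a point at distance_m."""
    return math.degrees(2.0 * math.atan(eye_separation_m / (2.0 * distance_m)))


if __name__ == "__main__":
    for label, d in [("headset-like 0.5 m", 0.5), ("screen at 40 ft", 40 * 0.3048), ("infinity", 1e9)]:
        print(f"{label:>20}: {vergence_deg(d):.2f} deg")
```

At 0.5m the eyes converge by roughly 7.3 degrees, while at a screen 40 feet away the figure is only about 0.3 degrees, so the convergence changes used to place objects in a cinema stay small.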

Solving the problem of matching focus and convergence has led to cutting-edge research into wavefront display technology, in which lens pixels are adjusted individually using electromagnetic force, a technology we may see in several years’ time. A recent patent application from Apple, for example, details how to implement an “auto-stereoscopic” 3D display that does not require the viewer to wear special glasses or goggles. (source: macrumours.com/freepatentsonline.com)

To make 3D work in a cinema today, we need a way to project two images and ensure that each eye sees only its own image. Done correctly, this gives the viewer a very convincing illusion of depth, both in front of and behind the plane of the theatre screen. In the cinema this universally requires the audience to wear some type of glasses.

Imagine aiming two laser dots at the centre of the screen and measuring their horizontal offset as a percentage of the screen width. Let’s assume a polarized laser system, so that one eye sees only one laser and the other eye sees only the other. When the dots sit on top of each other in the middle of the screen, the offset is 0% (zero parallax), your eyes converge at the distance of the screen, and the dot appears to be where the screen is in perceived 3D space. To make something appear beyond the screen, we give it positive parallax: the left image moves to the left and the right image moves to the right. The extreme case is the stars in the sky: when you look at an object effectively infinitely far away, your eyes are parallel. In 3D cinema we do not want to go much beyond that, since it does not happen in nature; most people can only be pushed about three degrees beyond parallel.

If we move the images the other way, the left image to the right and the right image to the left, we get negative parallax and become ‘cross-eyed’. We may ‘think’ we are focusing on something standing in front of the screen, but our eyes are still focusing on the real cinema screen, which of course has not moved. This phenomenon of focusing at the distance of the screen while converging our eyes on something ‘in front’ of it does not seem to bother anyone: in effect we are tricking ourselves, with the apparent depth defined by convergence, or ‘cross-eyed-ness’. Going the other way, objects an infinite distance away should have our eyes pointing parallel to each other, yet we can actually ‘read’ images that require divergence. Most experts agree that up to 3 to 4% divergent imagery still works, but as nothing in the real world ever requires us to diverge our eyes, excessive divergence can give the audience headaches. So we need to manage convergence and divergence carefully or risk making the audience sick.

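A rule of thumb: move the left-eye image to the right (relative to the right-eye image) and the object appears closer; move it the other way, so the right-eye image sits to the right of the left-eye image, and the object appears further away.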
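
The laser-dot description maps onto a widely used viewing-geometry model (a textbook simplification, not a formula quoted in this article). For a viewer at distance V from the screen with eye separation e, a point with on-screen parallax p (positive when the right-eye image sits to the right of the left-eye image) is perceived at distance V·e / (e − p). Zero parallax lands on the screen, p = e sends the point to infinity with the eyes parallel, and p > e forces divergence. Converting parallax to a percentage of screen width shows why the same percentage means different things on different screens. The screen width and seat distance below are hypothetical.

```python
import math
from typing import Optional

EYE_SEPARATION_M = 0.064


def perceived_distance_m(parallax_m: float, viewing_distance_m: float,
                         eye_separation_m: float = EYE_SEPARATION_M) -> Optional[float]:
    """Distance at which a point with the given screen parallax appears.

    Returns None when parallax >= eye separation (at or beyond infinity,
    i.e. parallel or diverging eyes).
    """
    if parallax_m >= eye_separation_m:
        return None
    return viewing_distance_m * eye_separation_m / (eye_separation_m - parallax_m)


def parallax_percent(parallax_m: float, screen_width_m: float) -> float:
    """Express screen parallax as a percentage of screen width."""
    return 100.0 * parallax_m / screen_width_m


def divergence_deg(parallax_m: float, viewing_distance_m: float,
                   eye_separation_m: float = EYE_SEPARATION_M) -> float:
    """Total outward rotation of the eyes beyond parallel (0 if not diverging)."""
    excess = max(0.0, parallax_m - eye_separation_m)
    return math.degrees(2.0 * math.atan(excess / (2.0 * viewing_distance_m)))


if __name__ == "__main__":
    seat, screen = 15.0, 12.0  # metres from the screen, screen width in metres
    print(perceived_distance_m(-0.064, seat))   # crossed parallax: halfway to the screen
    print(parallax_percent(0.064, screen))      # 'infinity' parallax as % of width
    print(divergence_deg(0.03 * screen, seat))  # 3% of the width, in degrees of divergence
```

The sign convention matches the rule of thumb above: moving the left-eye image right makes p more negative, which brings the point closer.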

Modern digital systems go to great lengths to avoid the poor stereo effects that, in earlier analogue systems, left audiences literally feeling sick. One example is vertical disparity, which never happens in nature; the left and right images need to be vertically balanced and aligned. Other problems include rapid and constantly shifting convergence points for the eye to adjust to, poorly colour-matched left and right images, weave and float, image misalignment and many others. The industry’s hope is that because these new films can be captured, post-produced and projected entirely digitally, such effects can be minimized, controlled or removed.

Parallel vs Convergent

While most of the industry agrees on most of the science involved in 3D imaging, one remarkably important point divides the community: should the two primary cameras, set correctly at 64mm apart, look straight ahead or point slightly towards each other? This may seem like a simple question, and to some the answer at first appears obvious. Our eyes converge, so logically shouldn’t the cameras?

It turns out both approaches work. If both cameras look straight ahead (in parallel), the resulting images can easily be adjusted in post: a simple left or right shift of one camera’s image will overlap the images and set the convergence. Before DI and fully digital manipulation of film, the point was moot, as one had to align the images as best one could in camera. Still, this central point divides the industry. Leading projects such as James Cameron’s use converging rigs, while others adamantly point to technical issues that, in theory, adversely affect convergent cameras.
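
That post-production shift is usually called horizontal image translation (HIT). A minimal sketch on frames stored as lists of pixel rows (a hypothetical representation; real tools operate on full image buffers): shifting the left eye right and the right eye left by the same amount reduces every parallax in the shot by twice that amount, so the whole scene moves toward the audience and objects that were behind the screen can be brought onto the screen plane. Shifting only one eye by the full amount works too; splitting it keeps the framing centred. Exposed edges are filled with a placeholder value.

```python
from typing import List, Tuple

Frame = List[List[int]]


def shift_horizontal(frame: Frame, dx: int, fill: int = 0) -> Frame:
    """Shift every row dx pixels (positive = right), padding exposed pixels with fill."""
    out = []
    for row in frame:
        width = len(row)
        if dx >= 0:
            new_row = [fill] * min(dx, width) + row[: max(width - dx, 0)]
        else:
            new_row = row[-dx:] + [fill] * min(-dx, width)
        out.append(new_row)
    return out


def reconverge(left: Frame, right: Frame, shift_px: int, fill: int = 0) -> Tuple[Frame, Frame]:
    """Symmetric HIT: the left eye moves right and the right eye moves left by shift_px.

    Parallax (right x minus left x) drops by 2 * shift_px, so objects move toward
    the viewer; a negative shift_px pushes them away.
    """
    return shift_horizontal(left, shift_px, fill), shift_horizontal(right, -shift_px, fill)


if __name__ == "__main__":
    left = [[0, 5, 0, 0, 0, 0]]   # object at x=1 in the left eye
    right = [[0, 0, 0, 5, 0, 0]]  # object at x=3 in the right eye: parallax +2, behind the screen
    print(reconverge(left, right, 1))  # both at x=2: the object now sits on the screen plane
```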

The two arguments are as follows:

For Convergence

This is how 3D was developed and, perhaps most importantly, how human eyes work. Convergent cameras clearly work: Titanic Secrets of the Deep and many other films have produced beautiful and highly effective material using cameras that converge on set. Most feature films in production or recently finished have been shot with convergent rigs. But, as we discuss later, calibrating such rigs is not trivial.

Against Convergence (i.e. Use Parallel Cameras)

The counter-argument is that with digital post, converging on set is not necessary. Furthermore, if one points two cameras towards a point on their centre line, each image is correct at the centre, but the two images keystone relative to each other towards the sides. Consider filming a flat plane at the convergence distance: ideally every point would match between the left and right eyes, giving no perception of depth. With keystoned images the centre matches, but the left- and right-most points at the edges do not land in the same place, so the edges read as being in front of or behind the screen even though they should sit on the screen plane. Hence the argument for filming with parallel cameras and simply shifting the images left and right in post.
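
The keystone argument can be checked with an ideal pinhole-camera model. The sketch below projects the same 3D point into two cameras 64mm apart, first parallel and then toed in to converge at 2m (hypothetical numbers, distortion-free lenses, focal length in pixels). Points on the centre column match vertically in both cases, but towards the sides of the frame the toed-in pair produces vertical disparity, which is how the keystone mismatch shows up; the parallel pair produces none.

```python
import math
from typing import Optional, Tuple

Point = Tuple[float, float, float]


def project(point: Point, cam_x: float, yaw_rad: float, focal_px: float) -> Tuple[float, float]:
    """Pinhole projection into a camera at (cam_x, 0, 0) looking along +Z,
    turned about the vertical axis by yaw_rad (positive turns toward +X)."""
    x, y, z = point[0] - cam_x, point[1], point[2]
    # Express the point in camera coordinates (undo the camera's yaw).
    xc = x * math.cos(yaw_rad) - z * math.sin(yaw_rad)
    zc = x * math.sin(yaw_rad) + z * math.cos(yaw_rad)
    return focal_px * xc / zc, focal_px * y / zc


def vertical_disparity(point: Point, interaxial_m: float, converge_m: Optional[float],
                       focal_px: float) -> float:
    """Difference in image height (pixels) between left and right eyes.

    converge_m=None gives parallel cameras; otherwise each camera toes in so
    the optical axes cross on the centre line at converge_m.
    """
    half = interaxial_m / 2.0
    toe = 0.0 if converge_m is None else math.atan(half / converge_m)
    _, v_left = project(point, -half, +toe, focal_px)
    _, v_right = project(point, +half, -toe, focal_px)
    return v_left - v_right


if __name__ == "__main__":
    corner = (1.0, 0.6, 2.0)  # upper right of frame, at the convergence distance
    print(vertical_disparity(corner, 0.064, None, 1500.0))  # parallel: 0
    print(vertical_disparity(corner, 0.064, 2.0, 1500.0))   # toed in: several pixels
```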

The debate is more than academic, as the decision must be made on set and cannot easily be fully corrected in post-production.

Filming

The complexity of filming 3D centres on two key aspects. The first and most obvious is that there is a two-camera rig, and depending on the cameras, they must be brought close enough together for the lenses to be 64mm apart. Two cameras side by side would be bulky enough, but once a complex system of angled mirrors or prisms is added, the rigs become even more cumbersome. Adding to the complexity, focus, zoom and f-stop on both cameras must be calibrated to match each other. The system is no longer easily moved on set, and the grips’ job increases dramatically. The second issue is controlling the convergence of the cameras. This requires a special rig and, much like pulling focus, a dedicated operator who controls convergence during takes that contain complex action.

Perhaps the best-known maker of 3D filmmaking rigs is Vince Pace, President and CEO of Pace 3D Technologies. Pace, currently providing rigs and expertise on a number of projects including Final Destination 4, is best known for his work with filmmaker James Cameron. Cameron’s rigs are called Fusion and use a beam splitter, but Pace and Lightstorm (Cameron’s company) have worked with a huge variety of configurations. Pace currently has 20 complete 3D rigs set up for a range of cameras, from Sony CineAlta 950s to RED and Silicon Imaging cameras. Pace was an executive producer on Hannah Montana 3D and is now working on Cameron’s next big-budget feature, Avatar, which is being made in 3D with extensive motion capture.

Pace and his team have perfected the exacting requirements of building a rig that can not only control convergence accurately but also be fully calibrated. Calibrating a rig takes so long (up to 5 hours per lens setup) that zoom lenses are mainly used. To operate the cameras successfully, the zooms need to be calibrated, aligned and then locked for combined motorized operation. Each rig must adjust focus and convergence in sync, and most are also adjustable in interocular shooting distance. The entire rig must work seamlessly so that convergence can be adjusted during a shot, in much the same way as one normally pulls focus.

On set, the crew expands to include a configuration team calibrating secondary rigs, ready to swap them in as the director changes lenses. The primary camera crew also grows to include a convergence puller, making it a three-person team: camera operator, focus puller and convergence puller.

In most rigs, one eye is deemed the primary eye. For instance, the right eye might be primary and the left eye secondary, with the secondary eye filmed at the correct interocular distance by means of mirrors or a beam splitter. The same rig used in the live concert setting of U2 3D was also used on Journey to the Center of the Earth, a drama feature film. In both cases the cameras ‘pulled’ convergence.

Iconix’s camera can work without a beam splitter, as its lenses can sit close enough together naturally, but some filmmakers prefer to work with larger cameras and more traditional lenses. Interestingly, most productions are using B4 lens mounts rather than PL mounts, which is actually a positive, as many filmmakers like a greater depth of field than traditional 35mm offers when filming stereoscopically.

Sports filmmakers and broadcasters are also interested in 3D. Fox Sports Network Southwest and the Dallas Mavericks offered a look into the future of sports television on March 25, when they teamed with PACE to produce the first-ever NBA regular-season game broadcast live in 3D HD. Produced using PACE Fusion 3D, it was only the third live sporting event ever presented in the format. The game was beamed across Dallas via satellite into Mavericks owner Mark Cuban’s Magnolia Theatre, where an invitation-only audience watched through special 3D glasses using Sony’s SXRD 3D Projection System on an 18×42-foot screen.

Post-production

There is both an art and a science to the world of 3D digital post, as stereography expert Lenny Lipton commented on his blog: “All of the major animation studios that produce computer generated images have physicists and imaging specialists who are attempting to produce a computer world that can be rendered with remarkable real-world fidelity or with controlled departures from the real world, to produce a beautiful visual effect. The people who create this content – the animators, background artists and other specialists – for the most part deal with content creation on an intuitive level. They aren’t doing calculations, but they are using computers. They need to be able to do what they do as any creative artist does, [relying] on intuition to work the medium.” Lipton literally wrote the book on stereography and is now a leading player in the world of digital 3D projection (see below).

A simple way to understand 3D is to consider a single image on the screen. If an object in the scene is to appear exactly where the physical screen is in the theatre, there must be no difference between the left- and right-eye images, and hence no horizontal offset. In real life, of course, you would have to have filmed a flat wall or billboard poster, so that the content of the projected scene is itself flat and has no depth. In a real scene shot with two cameras you can never get all the points to sit on top of each other, because everything is offset by a different amount: a car in the distance differs only slightly between the eyes, while a person close to camera in the same shot shows a large left/right difference. But if you filmed a poster on a wall, the whole image could be made to overlap and align, and the audience would then see the poster in the same place in 3D space as the projection screen.

Horizontal displacement is proportional to parallax, and hence to the 3D effect. Thus, by moving one eye’s image left or right, we can make the image move in front of or behind the screen. Of course, moving the image left or right leaves a gap at one edge. Think of the 3D world in the cinema as two viewing pyramids, one from each eye: the left and right frustums.

When you film a scene with parallel cameras, or move the left eye to the side to increase the 3D effect, there is a strip on one side that is not covered by both frustums. In 2D, if you moved a background plate you’d end up with a black strip along one edge. In 3D there is no black edge, just a section of the screen without information for both eyes: the left eye may have some image there but the right eye does not. This gap can be cropped out in post, but it turns out to go virtually unnoticed in 3D stereo. A large missing slice at the edge of frame in one eye, clearly visible when viewed without glasses, is not noticeable when watching with glasses.
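
For parallel cameras, the size of that one-eye-only strip follows from the frustum geometry. At distance Z each camera’s frustum is 2·Z·tan(hfov/2) wide, and the two frustums are offset sideways by the interaxial b, so the strip covers b / (2·Z·tan(hfov/2)) of the frame width at that depth. The sketch below assumes ideal pinhole cameras with no horizontal image shift applied; the 40 degree field of view and the distances are illustrative.

```python
import math


def edge_strip_fraction(interaxial_m: float, distance_m: float, hfov_deg: float) -> float:
    """Fraction of frame width, at depth distance_m, seen by only one of two parallel cameras."""
    frustum_width = 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)
    return min(1.0, interaxial_m / frustum_width)


if __name__ == "__main__":
    for z in (1.0, 5.0, 50.0):
        print(f"{z:>5} m: {100 * edge_strip_fraction(0.064, z, 40.0):.2f}% of frame width")
```

The strip is widest for objects close to camera and shrinks quickly with distance.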

Post production falls into several categories:

Colour Adjustment

As a starting point, the left eye needs to match the right eye in basic grade. Most often a beam splitter or mirror will colour the image slightly, typically in the shadows. Both eyes need to be balanced exactly, and automated tools for perfect left/right eye matching are an area of active research.
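
As a simple illustration of automated eye matching, the sketch below remaps each colour channel of the secondary eye so its mean and standard deviation match the primary eye. This is a basic linear statistics transfer swapped in for illustration; it is not how any particular grading system works, and real tools would typically compare matched regions and treat the shadows separately. Images are hypothetical flat lists of (r, g, b) floats in the 0 to 1 range.

```python
from statistics import mean, pstdev
from typing import List, Tuple

Pixel = Tuple[float, float, float]


def match_channels(secondary: List[Pixel], primary: List[Pixel]) -> List[Pixel]:
    """Linearly remap each channel of `secondary` so its mean and spread match `primary`."""
    gains, offsets = [], []
    for c in range(3):
        s = [p[c] for p in secondary]
        t = [p[c] for p in primary]
        s_sd = pstdev(s)
        gain = pstdev(t) / s_sd if s_sd > 0 else 1.0
        gains.append(gain)
        offsets.append(mean(t) - gain * mean(s))
    # Apply gain and offset per channel, clamping to the legal 0..1 range.
    return [
        tuple(min(1.0, max(0.0, gains[c] * p[c] + offsets[c])) for c in range(3))
        for p in secondary
    ]
```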

Colour Grading

Once the two eyes are balanced, the pair can be graded. Either the system automatically and instantly applies a matching grade to both eyes, or the project cannot be graded in stereo and the colourist risks getting headaches. Often only one eye is graded at a time, and the grade is then applied to the other eye on top of the balancing offset grade mentioned above. For example, Scratch has a hot key to transfer a grade from one eye to the other, but you cannot grade in stereo.

Stereo Adjustments

The first adjustment is matching the left and right eyes vertically. Even if you tilt your head, your eyes remain fixed relative to each other, so all shots need to be checked for vertical alignment.
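
Checking vertical alignment can be partly automated. A brute-force sketch, assuming greyscale frames stored as lists of rows (a hypothetical format): try each vertical offset in a small range and keep the one with the smallest mean absolute difference between the eyes. Because horizontal parallax legitimately differs across the frame, real tools usually match features rather than whole rows; this only illustrates the idea.

```python
from typing import List

Frame = List[List[float]]


def estimate_vertical_offset(left: Frame, right: Frame, max_shift: int = 5) -> int:
    """Return dy such that right[y + dy] best matches left[y] (positive = right eye sits lower)."""
    height = len(left)
    best_dy, best_err = 0, float("inf")
    for dy in range(-max_shift, max_shift + 1):
        total, count = 0.0, 0
        for y in range(height):
            ry = y + dy
            if 0 <= ry < height:
                total += sum(abs(a - b) for a, b in zip(left[y], right[ry]))
                count += len(left[y])
        # Keep the offset with the lowest average difference over the overlapping rows.
        if count and total / count < best_err:
            best_dy, best_err = dy, total / count
    return best_dy
```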

Even if the on-set team pulled convergence very accurately, editing can change the order of shots, and sometimes scenes need to be re-converged, which is primarily a horizontal adjustment. Also, a shot that is technically perfect on its own may not cut well, in terms of convergence, with the shots before or after it. Convergence can be tapered into an edit to avoid jarring convergence jumps.

Since one does not want the audience’s eyes to have to move rapidly between near and far objects, Tim Baier uses a Python script, driven by a database of his shots, to graph them during the edit. The graph shows the near and far objects in each scene, and if they jump too much across a cut, he can ease eye strain by feathering the disparity, bringing the convergence and divergence closer together either side of the cut. This also takes into account the content of the scene and the subject the audience is most likely to be looking at.
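
The article does not show Baier’s script, so the following is only a hypothetical sketch of the same idea: store each shot’s nearest and farthest parallax (as a percentage of screen width, negative in front of the screen), flag cuts where either value jumps by more than a chosen threshold, and compute a linear ramp that can be used to taper the convergence offset across a cut. The shot names, threshold and ramp length are all assumptions.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Shot:
    name: str
    near_pct: float   # nearest parallax, % of screen width (negative = in front of screen)
    far_pct: float    # farthest parallax, % of screen width


def flag_depth_jumps(shots: List[Shot], threshold_pct: float = 1.0) -> List[Tuple[str, str, float]]:
    """Return (outgoing, incoming, jump) for cuts where near or far parallax jumps too far."""
    flagged = []
    for a, b in zip(shots, shots[1:]):
        jump = max(abs(a.near_pct - b.near_pct), abs(a.far_pct - b.far_pct))
        if jump > threshold_pct:
            flagged.append((a.name, b.name, jump))
    return flagged


def taper(start_pct: float, end_pct: float, frames: int) -> List[float]:
    """Linear ramp of convergence offset over `frames` frames, ending exactly on end_pct."""
    if frames < 2:
        return [end_pct]
    step = (end_pct - start_pct) / (frames - 1)
    return [start_pct + i * step for i in range(frames)]


if __name__ == "__main__":
    edit = [Shot("A", -0.5, 1.0), Shot("B", -0.4, 1.2), Shot("C", -3.0, 0.5)]
    print(flag_depth_jumps(edit))   # only the B to C cut exceeds the threshold
    print(taper(0.0, 1.0, 5))       # [0.0, 0.25, 0.5, 0.75, 1.0]
```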

Creative Stereo Effects

Once the technical problems are solved, the director may still grade the convergence per shot for dramatic or comic effect.

Flares and Light Plays

Lens flares and light plays do not film well in stereo. Because a lens flare depends so much on position, even the modest 64mm interocular difference can make flares fail to match from eye to eye.

Effects in Stereo

Simple tasks such as roto and paint are much more complex in 3D. Left- and right-eye roto must match very accurately, yet it is not possible simply to copy and paste between eyes because of the change in perspective, so fine-detail roto work needs painstaking attention.

Special passes

While REAL D is very popular with audiences and gaining acceptance, the silver screen used in the process can cause bright highlights to ghost from one eye into the other, reading as a second, offset image. To solve this, a “ghost-busting” pass is done for the REAL D version, which mathematically subtracts the bright highlights of the primary eye from the secondary eye. This cancels out the ghosting but leaves the left eye with strangely dark hot spots. For this reason, and because of stereoscopic adjustments and minor softening from beam splitters and mirrors, the untreated primary eye is used for the standard DVD or TV version.
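
In its simplest form, crosstalk compensation assumes that a fixed fraction of one eye’s image leaks into the other and pre-subtracts that amount. The sketch below is a simplified illustration under that assumption; the actual REAL D process is not described in this article, and the leak value of 0.05 and the highlight threshold are made-up parameters. Because a projector cannot emit negative light, results are clamped at black, which is also why the treated eye shows dark spots where the other eye is bright.

```python
from typing import List


def ghost_bust(secondary: List[float], primary: List[float], leak: float = 0.05,
               threshold: float = 0.0) -> List[float]:
    """Subtract the expected leakage of `primary` from `secondary` (linear light, 0..1).

    Only primary values above `threshold` are treated, mirroring a highlight-only pass;
    results are clamped at 0 because a projector cannot emit negative light.
    """
    return [
        max(0.0, s - leak * p) if p > threshold else s
        for s, p in zip(secondary, primary)
    ]
```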

Compositing in 3D Space

While nothing stops 2D techniques from being applied in 3D, special consideration must be given to compositing at the right depth. It is easy to create CG objects with incorrect stereo placement when compositing them with 3D live action. For example, a CG car could be composited so that it reads as being in front of the talent, while its perspective and size place it behind the talent in 3D space. The same thing happens with green screen layering. Special attention needs to be given to both layer priority and 3D stereo priority.
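
One way to keep layer order and stereo order consistent is to compute the parallax an element should have at its intended depth and compare it with the plate. A minimal sketch for a parallel rig reconverged in post with horizontal image translation, where parallax in pixels is f·b·(1/C − 1/Z) for focal length f in pixels, interaxial b, convergence distance C and depth Z (all example numbers are hypothetical). Parallax is zero at the convergence distance, positive behind it and negative in front of it.

```python
def screen_parallax_px(depth_m: float, focal_px: float, interaxial_m: float,
                       convergence_m: float) -> float:
    """Parallax (right x minus left x, pixels) for a parallel rig reconverged at convergence_m."""
    return focal_px * interaxial_m * (1.0 / convergence_m - 1.0 / depth_m)


def check_layer_order(cg_depth_m: float, talent_depth_m: float, cg_in_front: bool,
                      focal_px: float, interaxial_m: float, convergence_m: float) -> bool:
    """True if the CG element's stereo depth agrees with its compositing layer order."""
    cg = screen_parallax_px(cg_depth_m, focal_px, interaxial_m, convergence_m)
    talent = screen_parallax_px(talent_depth_m, focal_px, interaxial_m, convergence_m)
    # Smaller (more negative) parallax means nearer to the audience.
    return (cg < talent) == cg_in_front


if __name__ == "__main__":
    # Talent at 4 m on the screen plane; a CG car layered in front of them.
    print(check_layer_order(10.0, 4.0, True, 2000.0, 0.064, 4.0))  # False: car's stereo says behind
    print(check_layer_order(2.0, 4.0, True, 2000.0, 0.064, 4.0))   # True: consistent
```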

When tracking shots from cameras with a fixed relationship, it can be advisable to track the primary eye and then derive the second camera by offsetting it by the known interocular distance and convergence. If the convergence changes during the shot or is not known, separate stereo tracks will be required for both eyes.
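
For a rig with a fixed, known relationship, the second camera can be derived from the primary-eye solve. A top-down (2D) sketch, assuming the right eye is primary, the secondary sits one interaxial to its left, and the rig toes both cameras in symmetrically by a known, constant angle. Real pipelines would do this with full 3D transforms in the tracking or compositing package, and all names here are hypothetical.

```python
import math
from typing import List, Tuple

Pose = Tuple[float, float, float]  # (x, z, yaw_rad) seen from above; yaw 0 looks along +Z


def secondary_eye(primary: Pose, interaxial_m: float, toe_in_rad: float = 0.0) -> Pose:
    """Derive the left (secondary) eye pose from the right (primary) eye pose."""
    x, z, yaw = primary
    heading = yaw + toe_in_rad                  # rig centre heading (primary is toed toward -X)
    right_x, right_z = math.cos(heading), -math.sin(heading)
    return (x - interaxial_m * right_x,         # step left along the rig's right axis
            z - interaxial_m * right_z,
            heading + toe_in_rad)               # secondary toes in toward +X


def secondary_track(primary_track: List[Pose], interaxial_m: float,
                    toe_in_rad: float = 0.0) -> List[Pose]:
    """Apply a fixed interaxial and convergence to every frame of the primary-eye track."""
    return [secondary_eye(p, interaxial_m, toe_in_rad) for p in primary_track]
```

A single, constant toe-in is deliberate: as noted above, if convergence is pulled during the shot or is unknown, both eyes should be tracked separately instead.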

Cost

Even for animated films, the cheapest to make in stereo, a stereo version carries real costs that can be sizable. DreamWorks Animation SKG Chief Executive Jeffrey Katzenberg said at ShoWest last month that making Monsters vs. Aliens (releasing March 27, 2009) in 3-D was estimated to have cost an additional $15 million. He was confident that enough theaters would be ready to show the film for the studio to recoup the additional cost.

Spotlight: Jeff Olm, Stereo Colourist

We spoke to Jeff Olm, stereo colourist on Journey to the Center of the Earth.

fxg: When you are grading in 3D, what systems do you use?

Olm: Scratch, for over two years now, on material from Sony F950s on the Fusion rig, and on RED footage from a Paradise FX rig on another project.

fxg: Do you have an opinion of shooting stereo with parallel cameras or converged?

Olm: Converged allows you to make more creative choices on set with the director. It does require an additional operator, but it allows you to direct the viewer’s attention in a more dramatic fashion. Parallel is a very safe and faster way to shoot, but all your decisions then need to be made in post. Most of today’s concert and dramatic projects are shooting with converged rigs.

fxg: What are the main mistakes that cause eye strain, and how well do digital tools allow you to remove them?

Olm: Anything that causes a headache is the result of vertical misalignment and/or excessive convergence… anything that hurts the viewer is just wrong. In a DI situation I use Scratch; the ability to view and make real-time pan and scan adjustments is a great tool for resolving these issues.

fxg: How much does balancing the colour of the left and right eyes exactly to each other matter? There must always be some differences, no matter how careful one is on set?

Olm: If you are wearing glasses it is very hard to know what is wrong, but you can tell something is wrong. Normally it is the left eye that has been affected by the beam splitter, but that is very hard to know just by looking at the screen; often it is the black levels on the left eye. The other issue is lens flares and rotation of the flares in extreme highlights: differences between the eye views can cause eye strain and confuse the viewer about where they should be looking.

With the Scratch system I felt we were really able to minimize viewer fatigue and really enhance the experience for the audience.

fxg: When you grade, do you grade one eye and just apply a LUT or grade to the other, or do you grade in stereo?

Olm: In the current version of Scratch you can only grade one eye at a time, and they gave me a hot key to apply the right-eye grade to the left eye and immediately preview the shot in real time.

fxg: Which projection systems do you think are most effective today, apart from IMAX?

Olm: In my opinion Dolby gives a great image in stereo, but the glasses can be too expensive. REAL D seems to be really gaining in popularity, and this is key. I think REAL D will continue to grow because its glasses are cheaper, which makes it easier for theaters to provide glasses to the audience. The REAL D system provides a great, rich experience for the audience. They have a new REAL D XL system, unveiled to exhibitors at a ShoWest screening, that can project a spectacular 3D image on a 60 ft. screen, and based on exhibitor feedback from the ShoWest screening of Journey To The Center of the Earth 3D, everyone was very impressed with the color and clarity of the images.

fxg: In general terms, do you believe the DOP should aim for a shallow depth of field as in normal mono, or, given the 3D nature of the experience, should the focus be broader, allowing audiences to ‘look around’ the screen more?

Olm: Personally, I like an infinite depth of field. I like the image of the F950 and F23 and their appearance of infinite depth of field; it is a more hyper-real feeling, but it is completely different from the classic look of a 35mm camera.
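The ‘infinite’ depth of field Olm likes is partly a consequence of sensor size: the Sony F950 and F23 use 2/3-inch sensors, so for the same framing they need shorter lenses than a 35mm camera, which brings the hyperfocal distance closer. The sketch below uses the standard thin-lens approximations (hyperfocal distance H = f²/(N·c) + f). The circles of confusion (0.011 mm for 2/3-inch, 0.025 mm for Super 35) and the 10 mm/26 mm lens pairing are assumptions chosen for illustration.

```python
import math


def hyperfocal_mm(focal_mm: float, f_number: float, coc_mm: float) -> float:
    """Hyperfocal distance H = f^2 / (N * c) + f, in millimetres."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm


def dof_limits_mm(focal_mm: float, f_number: float, coc_mm: float,
                  subject_mm: float) -> tuple[float, float]:
    """Near and far limits of acceptable sharpness for a given subject distance."""
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = subject_mm * (h - focal_mm) / (h + subject_mm - 2 * focal_mm)
    # Focused at or beyond the hyperfocal distance, the far limit is infinity
    far = (subject_mm * (h - focal_mm) / (h - subject_mm)
           if subject_mm < h else math.inf)
    return near, far


# Assumed values: 0.011 mm CoC for 2/3-inch, 0.025 mm for Super 35.
h_23 = hyperfocal_mm(10, 4, 0.011)   # 10 mm lens at f/4 on a 2/3-inch camera
h_s35 = hyperfocal_mm(26, 4, 0.025)  # roughly the same framing on Super 35
print(f"2/3-inch: {h_23 / 1000:.2f} m, Super 35: {h_s35 / 1000:.2f} m")
```

Under these assumptions the 2/3-inch camera’s hyperfocal distance is about 2.3 m, so focusing there keeps everything from roughly 1.1 m to infinity acceptably sharp, while the Super 35 equivalent needs about 6.8 m.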

fxg: What are the greatest post challenges facing 3D production?

Olm: The large amount of data, the management and organization of that data, and real-time review of visual effects and offline work in progress coming off, say, a 2D Avid system are all areas that present challenges. The other thing is image processing: image enhancement and the like all take twice as long because you have twice the data.
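The doubling Olm mentions is easy to quantify. A back-of-the-envelope sketch, assuming uncompressed 2K full-aperture frames (2048 × 1556) stored as 10-bit RGB DPX, which packs each pixel into 4 bytes, and ignoring file headers; other formats and resolutions will give different numbers.

```python
def frame_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    """Uncompressed size of one frame in bytes, ignoring file headers."""
    return width * height * bytes_per_pixel


def storage_gb(width: int, height: int, bytes_per_pixel: int,
               fps: int, minutes: float, eyes: int = 2) -> float:
    """Storage in gigabytes (10^9 bytes) for a running time and number of eyes."""
    frames = fps * 60 * minutes
    return frame_bytes(width, height, bytes_per_pixel) * frames * eyes / 1e9


# Assumed format: 2K full aperture, 10-bit RGB DPX packed into 4 bytes per pixel.
mono = storage_gb(2048, 1556, 4, fps=24, minutes=1, eyes=1)
stereo = storage_gb(2048, 1556, 4, fps=24, minutes=1)
print(f"per minute: mono {mono:.1f} GB, stereo {stereo:.1f} GB")
```

With those assumptions one minute at 24 fps is about 18.4 GB per eye, or about 36.7 GB in stereo, so the final stereo frames of a 100-minute feature alone come to roughly 3.7 TB before any versions or intermediates.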

Post Gear

Quantel Pablo

Quantel’s paint system is legendary for good reason, and Pablo inherits a wealth of visual effects tools, such as excellent keyers and image-warping/corner-pinning tools, plus a well-tested audio system. Quantel’s Pablo was used on Hannah Montana. It can be slower than some systems because of rendering times.


Disney’s Hannah Montana 3D was the first live action feature produced using Quantel’s Pablo 4K with the Stereoscopic 3D option. The film, directed by Bruce Hendricks, was also produced in record time. Shot in Salt Lake City in late October, the concert film was in theaters a mere 11 weeks later. That allowed Disney to capitalize on the intense interest in the Hannah Montana/Miley Cyrus live concert tour. Completing an ordinary feature film in less than three months would have been a tall order, but, given the technological hurdles, doing so with a 3-D movie was extraordinary. Color grading and conforming were completed at FotoKem using a pair of Pablo 4Ks, each with the Stereoscopic 3D option, in DI theaters set up specifically for 3-D work.

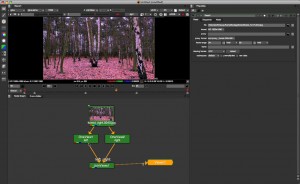

Scratch from Assimilate

Scratch was used on both U2 3D and Journey to the Center of the Earth. The program is the only one able to work natively with Red’s R3D files and, through excellent use of the GPU, is extremely fast to grade with. Its major shortcoming is its lack of robust audio tools: clip-based audio is not yet possible. But in the area of 3D alone, its XML basis makes it an extremely strong and very cost-effective program. Journey to the Center of the Earth used four Scratch systems, handling on-set work, EDL conforming and XML workflow as well as stereo colour correction.


SpeedGrade

SpeedGrade DI 2007 offers a raft of new features and enhancements, including the new DualStream stereoscopic module and support for live playback and grading of “RAW” files, which offer better image quality at one third the size of standard RGB formats.
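The ‘one third the size’ figure follows from how single-sensor cameras record: a Bayer sensor captures one colour sample per photosite, while a demosaiced RGB frame stores three samples per pixel. The sketch below, which assumes an RGGB pattern and equal bit depth, reduces an RGB array to its Bayer mosaic to show the ratio. It says nothing about SpeedGrade’s own RAW handling, and real RAW formats may add compression on top.

```python
import numpy as np


def bayer_mosaic_rggb(rgb: np.ndarray) -> np.ndarray:
    """Keep one colour sample per pixel in an RGGB pattern (H x W x 3 -> H x W)."""
    mosaic = np.empty(rgb.shape[:2], dtype=rgb.dtype)
    mosaic[0::2, 0::2] = rgb[0::2, 0::2, 0]  # red: even rows, even columns
    mosaic[0::2, 1::2] = rgb[0::2, 1::2, 1]  # green: even rows, odd columns
    mosaic[1::2, 0::2] = rgb[1::2, 0::2, 1]  # green: odd rows, even columns
    mosaic[1::2, 1::2] = rgb[1::2, 1::2, 2]  # blue: odd rows, odd columns
    return mosaic


rgb = np.zeros((1556, 2048, 3), dtype=np.uint16)  # e.g. 12-bit data in 16-bit words
raw = bayer_mosaic_rggb(rgb)
print(raw.nbytes / rgb.nbytes)  # one sample per pixel instead of three
```

Demosaicing back to full RGB is then deferred to playback or grading time, which is why a system must be fast enough to do it live.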

Nuke

One of the first compositing packages to embrace 3D is Nuke 5.0. The Foundry has laid the groundwork for efficient multi-view compositing in Nuke 5 and is now concentrating its R&D efforts on unique tools to further support a 3D stereoscopic workflow. It will preview this work at the upcoming NAB trade show, where it will also premiere its Furnace 4 image-processing tools for Nuke.

Nuke 5 is already creating a major buzz, with Animal Logic adopting it for their workflow moving forward. Others are equally impressed, including Daniel Smith, who was stereoscopic supervisor on Spy Kids 3D (then at Cafe FX) and has completed numerous other large stereoscopic projects. “Nuke 5 stereoscopic features are a godsend for visual effects,” he says. “It simplifies work flow and optimizes how fast you can visualize the 3D stereo space for the greatest impact on the final shot.”

Nuke 5.0 does more than provide tools for viewing left and right images: colour corrections and even paint can be applied to both eyes simultaneously (or separately). While working, one can view the composite in anaglyph format to preview the 3D effect quickly and easily, and anaglyphs can also be rendered to an OpenEXR file. More important given today’s technology, Nuke can both read and write multi-channel OpenEXR sequences that contain both the left- and right-eye images, which can greatly simplify workflow in a stereoscopic compositing environment. “It’s a perfect marriage with Nuke’s multi-channel workflow and we’re really happy that the Foundry are embracing a stereo workflow like they are!” commented Erik Winquist, compositing supervisor at Weta Digital, a large Nuke user.
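The anaglyph preview mentioned above can be illustrated with the simplest red/cyan colour anaglyph. This is a plain NumPy sketch, not Nuke’s anaglyph node or its API, and Nuke’s own implementation may mix channels differently; it assumes both eyes are RGB arrays of the same shape and the common convention of a red lens over the left eye.

```python
import numpy as np


def red_cyan_anaglyph(left: np.ndarray, right: np.ndarray) -> np.ndarray:
    """Merge H x W x 3 left/right images into a red/cyan colour anaglyph.

    The red channel comes from the left eye (red lens on the left) and the
    green and blue channels come from the right eye (cyan lens on the right).
    """
    if left.shape != right.shape:
        raise ValueError("left and right images must have the same shape")
    out = right.copy()          # green and blue from the right eye; input untouched
    out[..., 0] = left[..., 0]  # red from the left eye
    return out
```

The same channel split is why anaglyphs distort colour: each eye sees only part of the spectrum, which is the weakness the polarized and wavelength-selection systems described below avoid.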

Projection

According to the New York Times (13/03/08), “the biggest brake on the 3-D rollout has been the slow expansion of digital projection systems, which are steadily replacing film projectors at multiplexes nationwide. So far, just 4,600 out of about 37,000 movie screens have been converted to digital. Studios have been subsidizing the conversions, which cost theaters about $75,000 for each auditorium, with ‘virtual print fees’ approximating their savings from not having to print and ship hundreds of film reels for each release. But Michael Karagosian, a technology consultant to the National Association of Theater Owners, said film companies like Kodak and Agfa had responded to the threat of digital cinema by lowering their prices for film prints, reducing studios’ appetite for big digital subsidies.”

The article went on to point out that “Even with the subsidies, theater owners have to pay about 1.7 times as much for digital systems over time as they do for projectors, because of high maintenance costs and short equipment life spans, Mr. Karagosian said. Film projectors, by contrast, are much like Cadillacs in Cuba, kept humming for decades with cheap replacement parts”. Several 3D systems are in widespread use, but according to the New York Times, REAL D controls about 97% of 3D screens.

Jeffrey Katzenberg recently presented a sneak preview of DreamWorks Animation’s Monsters vs. Aliens, along with a special 3D clip of the studio’s 2D summer film Kung Fu Panda. The clips were shown at the Paris Las Vegas Theatre Des Arts on a 60-foot screen using REAL D XL with a single DLP projector. The nearly 2,000 convention-goers at the event were impressed with the depth, clarity and color of the 3D visuals. Katzenberg said “the proprietary tools and techniques that we’ve developed as a part of our 3D initiative and the use of REAL D’s groundbreaking XL technology together allowed us to project onto the silver screen 3D images with unprecedented clarity during Tuesday’s ShoWest presentation.” (marketsaw.blogspot.com)

New Line Cinema and REAL D also screened the summer tentpole Journey to the Center of the Earth 3D at the Theatre Des Arts in REAL D 3D, using REAL D XL. New Line Cinema President of Domestic Distribution David Tuckerman said “We were thrilled to screen Journey in its entirety in REAL D 3D to ShoWest attendees. The film looked great on the extra-large screen (bright, sharp, and colorful) and the audience was blown away.”

“REAL D to date has installed about 1200 systems worldwide,” according to Joshua Greer, President and Co-Founder of REAL D. “Of those, approximately 900 systems are in the US at about 680 locations. The remaining 300 or so are deployed in 24 other countries. We are under contract right now for another 600 systems in Europe, but we have to wait for the 2D roll-out before we can install,” Greer related to noted 3D producer Phil Streather (via CML).

Earlier systems required two projectors, which led to very unreliable results and thus a poor audience experience. Lenny Lipton, whom SMPTE describes as the father of electronic stereoscopic display technology, invented the Z-Screen, which is the basis of the single-projector REAL D system. The Z-Screen sits in front of a DLP digital projector and acts as a modulator, switching the polarization of the projected light at high speed. According to Lipton in the November SMPTE Journal, the Z-Screen is made up of a linear polarizer and two pi-cells in the optical path; the pi-cells switch on and off to create left- and right-handed circularly polarized light in sync with the left- and right-eye images.
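To make the single-projector idea concrete, the sketch below lists the order in which left and right images are flashed and the polarization state the modulator would be switched to for each. It assumes the ‘triple flash’ scheme often used with DLP stereo (each eye’s image shown three times per 24 fps frame, giving 144 flashes per second), which is not stated above; which circular handedness goes to which eye is an arbitrary choice here, and `flash_schedule` is a hypothetical helper, not part of any REAL D software.

```python
from fractions import Fraction

# Assumed convention for this sketch only: which handedness maps to which
# eye is arbitrary here.
POLARIZATION = {"L": "left-circular", "R": "right-circular"}


def flash_schedule(frames: int, fps: int = 24, flashes_per_eye: int = 3):
    """Return (start_time_s, frame, eye, polarization) for every flash.

    Each film frame is shown as L, R, L, R, ... with flashes_per_eye flashes
    per eye, so the flash rate is fps * 2 * flashes_per_eye per second.
    """
    rate = fps * 2 * flashes_per_eye
    schedule = []
    for frame in range(frames):
        for _ in range(flashes_per_eye):
            for eye in ("L", "R"):
                start = Fraction(len(schedule), rate)  # exact start time in seconds
                schedule.append((start, frame, eye, POLARIZATION[eye]))
    return schedule


for start, frame, eye, pol in flash_schedule(1):
    print(f"{float(start) * 1000:6.2f} ms  frame {frame}  {eye}  {pol}")
```

Alternating the eyes several times within each frame, rather than showing each eye once for a full 1/48 s, is what keeps the flicker rate high enough to be comfortable.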

The system requires a silver screen in the cinema, which preserves the polarization of the reflected light, and the audience must wear polarizing glasses. The glasses are cheaper for REAL D than for other systems, possibly a significant factor in its adoption. The result is vastly better and more stable than the old anaglyph solution of two coloured lenses. The last major film to use the anaglyph system was Spy Kids 3D. “Spy Kids 3D was the biggest released 3D film in the last 25 years,” says Smith. “It had to play on 3000 screens and doing anaglyphic was the only option at that time. Even now you only have about 800 screens in the US for REAL D and IMAX release. There should be more in the next 2 years; almost 4000 are being planned. At that time 3D was very risky and no theater owner was going to pony up the money to switch over for one film.”


The other prime system is Dolby’s, which is still colour-based and technically an anaglyph. But it is far more accurate than the older style of anaglyph, so the term is not normally used in relation to the Dolby system, which actually works by wavelength selection.
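Wavelength selection can be pictured as two sets of narrow blue, green and red passbands, one set per eye, that do not overlap, so each eye’s filter rejects the other eye’s primaries. The band edges below are hypothetical numbers chosen for illustration, not Dolby’s specifications.

```python
# Hypothetical passbands in nanometres, for illustration only:
# each eye gets its own narrow blue, green and red band.
LEFT_BANDS = [(440, 452), (525, 540), (625, 640)]
RIGHT_BANDS = [(428, 438), (510, 522), (608, 620)]


def passes(wavelength_nm: float, bands) -> bool:
    """True if the wavelength falls inside any of the passbands."""
    return any(lo <= wavelength_nm <= hi for lo, hi in bands)


def route(wavelength_nm: float):
    """Which eye's filter transmits this wavelength: 'L', 'R', 'both' or None."""
    to_left = passes(wavelength_nm, LEFT_BANDS)
    to_right = passes(wavelength_nm, RIGHT_BANDS)
    if to_left and to_right:
        return "both"  # light reaching both eyes would show up as crosstalk
    if to_left:
        return "L"
    if to_right:
        return "R"
    return None


def bands_overlap(a, b) -> bool:
    """True if any band in a overlaps any band in b (a crosstalk risk)."""
    return any(lo1 <= hi2 and lo2 <= hi1 for lo1, hi1 in a for lo2, hi2 in b)
```

Because each eye still receives a red, a green and a blue band, both images keep full colour; the precision filters needed to cut such narrow bands are one reason the glasses cost more.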

Nearly all theaters use the digital micromirror device (DMD) by Texas Instruments, which is used in the major projectors made by Christie, Barco and NEC-Ballantyne. Lenny Lipton summed it up, once again on his blog: “None of this would have been possible without DLP projection which is the invention of Larry Hornbeck, who I just had the pleasure of meeting at the SPIE Stereoscopic Displays and Applications Conference in San Jose,” says Lipton. “Larry asked me for my autograph, so I asked him for his. As you can see, his autograph is on the back of a pair of paper 3D eyewear, which is entirely appropriate.”

Under a new agreement, digital projection is set to expand to almost 14,000 movie screens within the next year. Reuters reported recently that a $1.1 billion line of credit has been established. The screens in the new deal will be owned by Regal Entertainment, Cinemark and AMC Entertainment and can be upgraded to show REAL D 3D movies. In the report, Michael Lewis, chairman of REAL D, noted that “3-D is the big game changer and the compelling reason for doing digital cinema.” The loans will be repaid through a per-movie fee paid in lieu of the normal distribution charge, since digital cinemas need no expensive prints and no physical distribution of them.
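As a rough cross-check, and assuming (which the report does not state) that the credit line is spread evenly across the roughly 14,000 screens, the money per screen lands close to the New York Times figure of about $75,000 per auditorium:

```latex
\frac{\$1.1 \times 10^{9}}{14{,}000\ \text{screens}} \approx \$78{,}571\ \text{per screen}
```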

It should be pointed out that prior to REAL D, most 3D work was done for IMAX on 70mm film. However, IMAX has announced a partnership with Texas Instruments under which “they will phase in digital over the next two years, and maybe even faster,” according to Nancy Fares, TI’s business manager for its DLP cinema products group. “Just one day moviegoers will show up to a theater and the movie will look a lot better.” For IMAX, crossing over to digital is about more than just delivering what the company claims will be a superior picture: it’s about saving a whole lot of money. Because IMAX movies are shot on 70mm film, they require expensive specialized cameras to shoot and expensive specialized projectors to display. “Since everybody else uses 35 mm film, the volume is not very large and there’s no economics behind it,” says Fares. In fact, with an all-digital IMAX, Fares predicts that 70mm film will be completely dead within five years. (PopularMechanics.com)

Summary

We give the last word to Lipton who sums up the state of the industry well:

“Digital technology–content creation, post-production, and projection–has enabled the stereoscopic medium to become a part of the filmmaking armamentarium; not only to provide beautiful projection but to provide a dependable product, free from the mistakes of the past, that I don’t want to dwell on because they’re such a bummer. But today’s modern 3D digital projection is free from fatigue and eyestrain, and can now allow content creators to do their best to discover the art of this new medium. We’re going to see several years of experimentation and discovery, and at the end of that time the stereoscopic medium will be on a firm foundation. Creative people will never stop creating, but we will reach a plateau where many of the creative and production technical processes become routinized. Oddly enough, the reintroduction of the stereoscopic cinema comes down to turning that which had been more or less a laboratory experiment into a routine.”

More information

An excellent source of discussion on 3D imaging can be found on the CML list at www.cinematography.net. This professional email discussion group was extremely helpful in the writing of this article.

Lenny Lipton’s blog is at http://lennylipton.wordpress.com.

Daniel Smith’s blog is at http://www.danimation.com/blog.
