Background

Paul Debevec and ICT: Light Stage X

To give you a glimpse of what fxphd is all about, we thought this was a great chance to also highlight one of this term’s fxphd interviews from our Background Fundamentals series (fxphd’s version of fxguidetv). We had intended to post a cut-down edition of this in-depth interview with ICT’s Paul Debevec here on fxguide, but this gives us a reason to publish the interview in full. Given the importance of ICT’s work and the wealth of material they have contributed to our industry, we welcome this chance to show you the full-length interview.

Below is a full fxphd Background Fundamentals class in which Mike Seymour discusses the development of the Light Stage with ICT’s Paul Debevec.

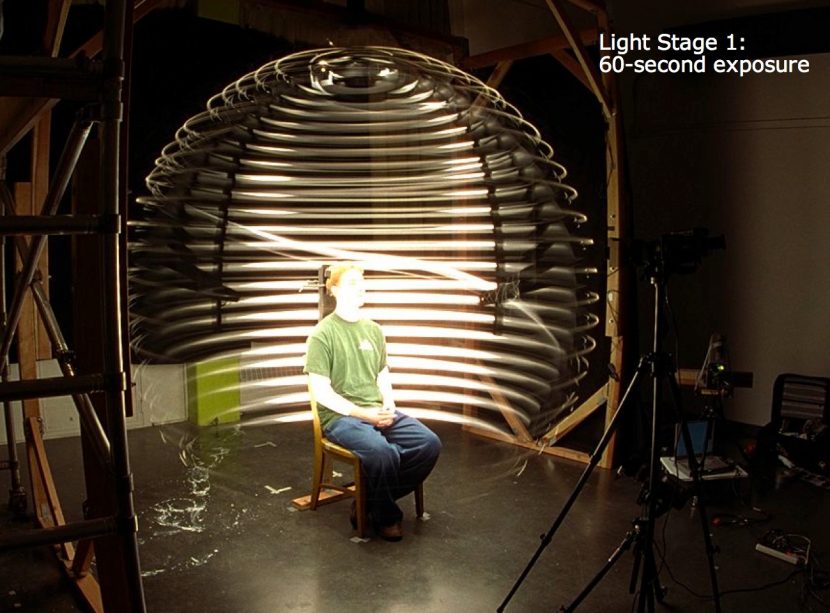

The Light Stage is one of the true scientific innovations of the past 10 years with major Hollywood visual effects applications, and one that fxguide has had the pleasure of following for some time. Developed by Paul Debevec and his team at the University of Southern California’s Institute for Creative Technologies (ICT), the Light Stage is a spherical structure made up of LEDs, designed to reproduce the light from any environment in order to re-light characters.

It has been used to help create memorable performances in numerous films, including Spider-Man 2, King Kong, Superman Returns, Spider-Man 3, Benjamin Button, Avatar and Underworld: Awakening. It can both deliver assets for creating believable CG characters and re-light actors so they can be placed into virtual or live-action backgrounds.

When a person sits or stands inside the Light Stage, the LEDs can illuminate them from any direction. By photographing the person under these lighting directions, a 3D computer model of how light reflects off the person can be created. This allows you to make a virtual actor that reflects light accurately, the way a real person or character would. You can also take existing lighting information captured on set, for example from a light probe or HDRIs, and re-create that lighting on the person inside the Light Stage.

In leading the development of the Light Stage, Paul Debevec drew on his work as a pioneer in the field of high dynamic range imaging and image-based modelling and rendering. His thesis research at UC Berkeley was in the field of photogrammetry, and in 1997 he and others created The Campanile Movie, a virtual flyby of a bell tower made up only of photographs. As a key part of the production, several of the photographs used to produce the Campanile model were taken from above the Campanile using kite aerial photography by UC Berkeley professor Charles Benton.

Below is “The Campanile Movie” (1997), directed by Paul Debevec, which demonstrated new image-based modeling and rendering techniques, in particular those from the “Facade” photogrammetric modeling system presented in Debevec’s 1996 UC Berkeley Ph.D. thesis. The tower and campus were modeled from a set of twenty still photographs and virtually rendered using projective view-dependent texture mapping. At its premiere at the SIGGRAPH 97 Electronic Theater in Los Angeles, “The Campanile Movie” attracted the attention of visual effects supervisor John Gaeta, who would later use its techniques to create virtual backgrounds for the ‘bullet-time’ shots in the 1999 movie The Matrix.
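To give a feel for what view-dependent texture mapping means, here is a minimal Python sketch. It assumes that each surface point is colored by blending the photographs that see it, with weights inversely proportional to the angle between the virtual camera’s viewing direction and each photograph’s viewing direction. That weighting is a simplified stand-in, not the exact scheme used in Facade or for The Campanile Movie, and the function names are hypothetical.

```python
import math


def angle_between(a, b):
    """Angle in radians between two 3D direction vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    # Clamp to guard against rounding just outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, dot / (na * nb))))


def vdtm_weights(view_dir, photo_dirs, eps=1e-6):
    """Hypothetical blending weights: photographs whose viewing direction is
    closer to the virtual camera's direction get larger weights (sum to 1)."""
    raw = [1.0 / (angle_between(view_dir, d) + eps) for d in photo_dirs]
    total = sum(raw)
    return [w / total for w in raw]


def blend_colors(weights, colors):
    """Weighted average of the RGB samples each photograph provides for a point."""
    return tuple(sum(w * c[i] for w, c in zip(weights, colors)) for i in range(3))


# A virtual camera halfway between two photographs blends them equally.
weights = vdtm_weights((1.0, 0.0, 1.0), [(1.0, 0.0, 0.0), (0.0, 0.0, 1.0)])
print(blend_colors(weights, [(1.0, 0.0, 0.0), (0.0, 0.0, 1.0)]))
```

As the virtual camera swings toward one photograph, that photograph dominates, which is what lets reflections and fine surface detail shift plausibly during a flyby.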

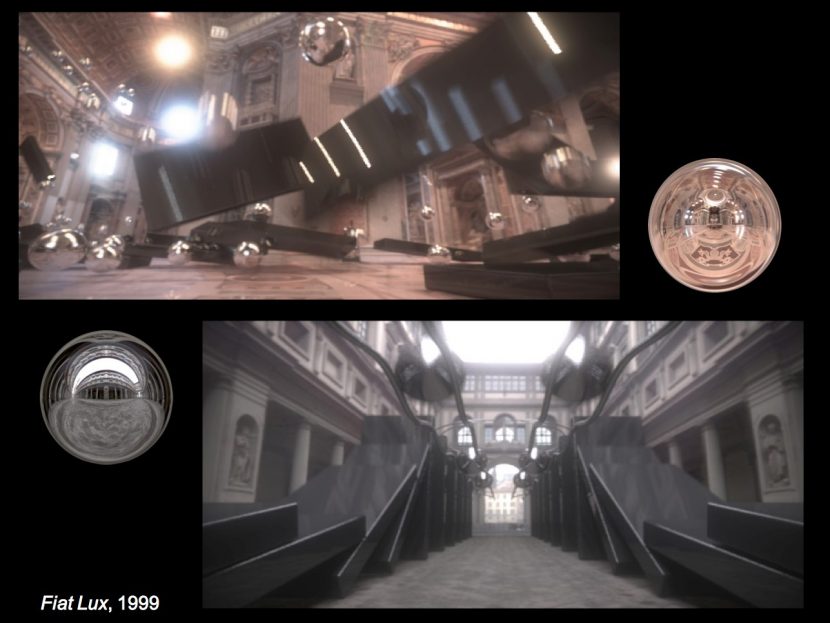

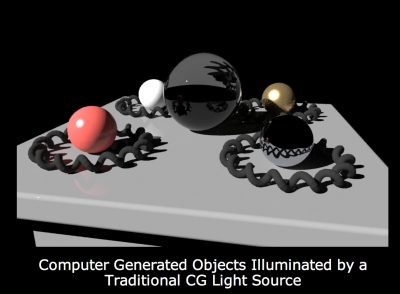

A key film made to show the power of HDR lighting was “Fiat Lux”. In conventional CG, a motion-blurred highlight, for example, would be smeared from a clipped full value down to a dim streak. The idea that highlights which appear white are actually ‘more than white’, with values higher than 1, was central to the invention of HDR imaging, and to the move from 8, 10 and 12-bit files to floating point formats such as the OpenEXR files we have today.
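The highlight problem is easy to demonstrate. In this illustrative Python sketch, a single bright pixel (an assumed value of 50, fifty times display white) is motion blurred across ten pixels. Clipping to 1.0 before the blur, as a low-bit-depth pipeline effectively does, leaves a dim grey streak, while blurring the floating point values keeps the streak bright enough to still read as white. The helper names are hypothetical.

```python
def motion_blur(row, length):
    """1D box motion blur: each output pixel averages the `length` input
    pixels ending at it (pixels before the start of the row count as black)."""
    out = []
    for i in range(len(row)):
        start = max(0, i - length + 1)
        out.append(sum(row[start:i + 1]) / length)
    return out


def clip(row, limit=1.0):
    """Clamp values to `limit`, as storing them in an integer file would."""
    return [min(v, limit) for v in row]


def to_display(row):
    """Map to 8-bit display codes; anything at or above 1.0 shows as white (255)."""
    return [round(min(max(v, 0.0), 1.0) * 255) for v in row]


scene = [0.0] * 20
scene[5] = 50.0  # illustrative highlight, 50x brighter than display white

ldr_streak = to_display(motion_blur(clip(scene), 10))  # clipped, then blurred
hdr_streak = to_display(motion_blur(scene, 10))        # blurred in floating point
print(ldr_streak[5:15])  # a dim grey streak
print(hdr_streak[5:15])  # still white
```

The only difference between the two streaks is when the clipping happens, which is why keeping the ‘more than white’ values until the very end of the pipeline matters.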

The work then progressed from image-based modeling to image-based lighting: HDR images captured on location (assembled from bracketed exposures) were used to light CG elements far more convincingly.
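Assembling an HDR value from bracketed exposures can be sketched as follows. This minimal Python version assumes the camera’s response is already linear (full HDR assembly methods also recover the camera’s response curve, a step skipped here) and uses a simple ‘hat’ weight to ignore pixels that are nearly black or clipped. The function names and thresholds are illustrative assumptions.

```python
def hat_weight(z, lo=0.05, hi=0.95):
    """Trust mid-tones; give zero weight to near-black (noisy) or
    near-white (clipped) pixel values."""
    if z <= lo or z >= hi:
        return 0.0
    return min(z - lo, hi - z)


def merge_exposures(pixels, times):
    """Relative radiance for one pixel from a bracketed set of exposures.

    pixels: normalized values in [0, 1], ASSUMED linear in scene radiance
    times:  exposure time in seconds for each bracket
    Each usable bracket gives an estimate pixel / time; these estimates are
    averaged using the hat weights above.
    """
    num = 0.0
    den = 0.0
    for z, t in zip(pixels, times):
        w = hat_weight(z)
        num += w * z / t
        den += w
    if den == 0.0:
        return None  # every bracket was clipped or black: no reliable estimate
    return num / den


# A pixel of relative radiance 2.0 shot at 1/8 s, 1/2 s and 2 s:
# only the shortest exposure avoids clipping.
print(merge_exposures([0.25, 1.0, 1.0], [0.125, 0.5, 2.0]))
```

Because a clipped bracket gets zero weight, a bright light source is measured by whichever exposure was short enough to record it, which is how the merged image ends up with values far above 1.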

At USC, Debevec has been part of the team behind several incarnations of the Light Stage, the first of which was published in a paper at SIGGRAPH 2000. The work received recognition commensurate with its contribution to film technology: Debevec, Tim Hawkins, John Monos and Mark Sagar were awarded a 2009 Scientific and Engineering Award from the Academy of Motion Picture Arts and Sciences for the design and engineering of the Light Stage capture devices and the image-based facial rendering system developed for character relighting in motion pictures.

A key project was “The Parthenon”, which took enormous effort to accurately model and light. The short computer film was described as an animation “which visually reunites the Parthenon and its sculptural decorations, separated since the early 1800s. The film used combinations of time-of-flight laser scanning, structured light scanning, photometric stereo, inverse global illumination, photogrammetric modeling, image-based rendering, BRDF measurement, and Monte-Carlo global illumination in order to create the twenty-some shots used in the film.”

Note: Arnold renderer founder Marcos Fajardo was on the ICT team at this time, hence the Monte-Carlo rendering comment above.

Debevec’s initial goal was to be able to go somewhere, take a light sample and re-create it in a controlled environment – the Light Stage. The idea, of course, is that a character re-lit to match, say, a background plate can be composited into that plate more realistically. “I had been developing techniques for image-based lighting,” he says. “I knew how to go out, take HDRI maps, panoramic, high dynamic range images in the real world and I knew that that was a data set of what the light was like incident on a point in space. Using a good global illumination algorithm, you can render a computer generated object with light captured from the real world. It became immediately interesting to think, ‘How can we take a real face, like a person, and light them with that illumination?'”
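Rendering with captured light can be illustrated with the simplest possible case: a diffuse (Lambertian) surface point lit by a latitude-longitude HDRI map. This Python sketch sums the map’s radiance over the hemisphere above the surface, weighting each pixel by its solid angle and by the cosine of its angle to the surface normal. It ignores shadowing and interreflection, which a real global illumination renderer would handle, and the map layout (rows running from straight up to straight down, +Y up) is an assumption.

```python
import math


def diffuse_from_latlong(env, normal, albedo=1.0):
    """Lambertian shading of one point lit by a lat-long (equirectangular) HDR map.

    env[row][col]: radiance; rows span polar angle 0..pi measured from +Y (up),
                   columns span azimuth 0..2*pi (ASSUMED layout)
    normal:        unit surface normal
    """
    rows, cols = len(env), len(env[0])
    d_theta = math.pi / rows
    d_phi = 2.0 * math.pi / cols
    total = 0.0
    for r in range(rows):
        theta = (r + 0.5) * d_theta                 # polar angle of pixel centre
        sin_t, cos_t = math.sin(theta), math.cos(theta)
        solid_angle = sin_t * d_theta * d_phi       # pixel's area on the unit sphere
        for c in range(cols):
            phi = (c + 0.5) * d_phi
            direction = (sin_t * math.cos(phi), cos_t, sin_t * math.sin(phi))
            cos_term = sum(n * d for n, d in zip(normal, direction))
            if cos_term > 0.0:  # light arriving from behind the surface is ignored
                total += env[r][c] * cos_term * solid_angle
    return albedo / math.pi * total


# Uniform white sky of radiance 1.0, surface facing straight up.
uniform = [[1.0] * 64 for _ in range(32)]
print(diffuse_from_latlong(uniform, (0.0, 1.0, 0.0)))
```

With a uniform map of radiance 1.0 and an albedo of 1.0, the result comes out very close to 1.0, a handy sanity check before feeding in a real light probe.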

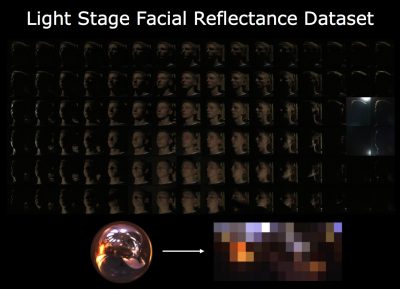

Looking to reproduce the colors and intensities of an HDR map or light probe image with a sphere of lights, Debevec sought a ‘scientifically correct answer’ rather than an approximation or a push-button solution. “I realized I’d have a better shot at it if I captured a data set of what a person’s face looks like lit from every direction light can come from, and then use that data set as a way of simulating what it would have looked like if it had different colors and intensities of light coming from all around them, corresponding from one of the HDRI maps.”
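The data set Debevec describes works because light adds up: a photograph of a face under many lights at once equals the sum of the photographs taken under each light alone, each scaled by that light’s color and intensity. A minimal Python sketch of the relighting step follows, assuming one ‘basis’ image per Light Stage light and an RGB weight per light taken from the HDRI map in that light’s direction. How the map is sampled into those weights is left out, and all names are hypothetical.

```python
def relight(basis_images, light_colors):
    """Relight a subject from one-light-at-a-time basis images.

    basis_images[i][p]: RGB of pixel p photographed with only light i on,
                        at unit intensity (linear pixel values ASSUMED)
    light_colors[i]:    RGB intensity the HDRI map assigns to light i's direction
    Relies on the linearity of light transport.
    """
    num_pixels = len(basis_images[0])
    out = [[0.0, 0.0, 0.0] for _ in range(num_pixels)]
    for image, color in zip(basis_images, light_colors):
        for p, rgb in enumerate(image):
            for ch in range(3):
                out[p][ch] += rgb[ch] * color[ch]  # scale this light's image, then add
    return [tuple(px) for px in out]


# Two lights, two pixels: light 0 only reaches pixel 0, light 1 only pixel 1.
basis = [
    [(0.5, 0.5, 0.5), (0.0, 0.0, 0.0)],
    [(0.0, 0.0, 0.0), (0.25, 0.25, 0.25)],
]
print(relight(basis, [(2.0, 1.0, 0.0), (0.0, 0.0, 4.0)]))
```

Changing the environment only changes `light_colors`; the captured basis images are reused, which is why one capture session can serve any number of lighting setups.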

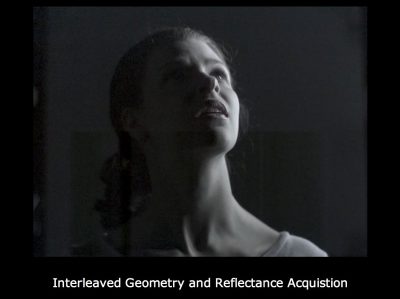

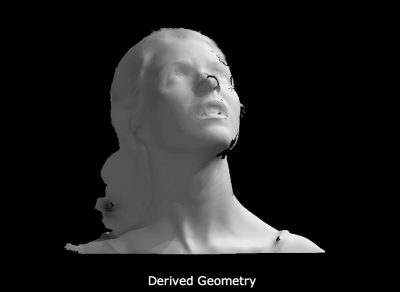

From simple relighting, the team moved on to capturing data about the face itself. This yields a model of the face, rather than just relighting the captured 2D image by applying maths to the dataset.

Separation of Spec and Diffuse

With the pipeline above still completely valid, the next development was to explore the modeling side of the research further. While image-based relighting had led to some modeling, a breakthrough came when the team perfected a way to very quickly scan and capture a complex human face as separate diffuse and specular components. Human skin is known for its sub-surface scattering of light, the property that gives skin a more waxy and less plastic look. The problem is that this same property made scanners inaccurate, as their beams were scattered beneath the surface. But the light that forms a specular highlight on skin is not scattered.

The sheen on a human face happens at the surface, and as such it is a very accurate window into the surface properties at that point of the ‘ping or sheen’. The ICT team developed the Light Stage to photograph a person’s face using polarizing filters and very accurate projection, and so produce a highly accurate specular map of the face. With specular and diffuse separated, and combined with the wealth of innovation the team had already developed, they could now turn the Light Stage into a super-accurate facial geometry scanner in addition to everything else it could do.

Crucially, the Light Stage can do this quickly enough to scan expressions. Compare this to covering a face in plaster and making a mold: not only is the Light Stage vastly faster and more comfortable for the actor, but a mask requires a neutral pose, while the Light Stage does not. This allowed the team to provide key poses showing exactly how the skin pores moved and stretched under extremely exaggerated expressions. The computer was no longer required to bend the neutral pose to the extreme; instead, one could have a library of poses, both extreme and subtle.

“As it turns out, the shine of the skin is a reflection of light that doesn’t scatter,” explains Debevec. “It just goes right off the surface. The shine of the skin also has this nice property that it maintains the polarization of light. So if you put a polarization filter on a light source and it hits the skin, it’ll stay polarized.” That’s exactly what was done on the Light Stage – the lights are polarized and an opposite polarizer is placed on the camera. By creating a difference image, the specular reflections could be isolated and that was then used to help understand the underlying geometry of the skin. This breakthrough became known as the gradient illumination scanning process.
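Under idealized assumptions, the separation reduces to simple per-pixel arithmetic. This Python sketch assumes perfect linear polarizers and linear pixel values: the scattered (depolarized) light passes at half strength whichever way the camera filter is turned, while the polarization-preserving shine passes only when the camera filter is aligned with the lights. Subtracting the cross-polarized image from the parallel-polarized one then leaves the specular alone. The function name is hypothetical.

```python
def separate_spec_diffuse(parallel, cross):
    """Split polarized photographs into specular and diffuse components.

    parallel: pixels shot with the camera polarizer aligned to the lights'
              polarization (specular plus half of the scattered light)
    cross:    pixels shot with the camera polarizer rotated 90 degrees
              (the shine is blocked; half of the scattered light remains)
    ASSUMES ideal polarizers and linear pixel values.
    """
    # The difference image isolates the polarization-preserving shine;
    # clamp at zero so sensor noise can't produce negative specular.
    specular = [max(p - c, 0.0) for p, c in zip(parallel, cross)]
    # Double the cross-polarized values to undo the filter's 50% loss.
    diffuse = [2.0 * c for c in cross]
    return specular, diffuse


# One pixel with diffuse 0.4 and specular 0.3 (illustrative numbers):
# parallel = 0.4 / 2 + 0.3, cross = 0.4 / 2.
spec, diff = separate_spec_diffuse([0.2 + 0.3], [0.2])
print(spec, diff)
```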

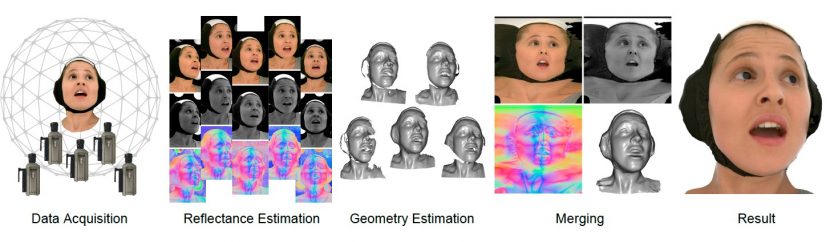

The making of Digital Emily.

“[This is] the process that made possible the Digital Emily project and the scanning that we did for Avatar,” recalls Debevec. “I realized that you could put polarizers not just on one light but on all of the lights in the Light Stage at the same time and cross-polarize out the entire sphere of illumination at the same moment. In addition, if you flip the polarizer on the camera you can get just the specular from the entire sphere of illumination at the same time as well. You can actually take a photograph of somebody’s spec map.”

Debevec also reasoned that by turning up the lights at the top of the stage to full intensity and then fading them down, or doing left to right gradients and using polar filters, a person’s normal map can be photographed. “You can get from the specular reflection and how the pixel values change in the specular under those lighting conditions,” he says. “It will read out which area of the Light Stage is reflecting in the skin toward the camera at that one little skin pixel. And if you know what part’s reflecting, you can take that vector and move it half way toward the view vector and that is a surface normal estimate. It’s from the specular, it doesn’t have any of the scattering in it and it has every tiny little bit of detail you can hope to get on the skin.”
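The normal estimate Debevec describes can be sketched per pixel. This Python version assumes specular-only pixel values under four patterns: every light at full intensity, plus three linear gradients in which each light’s intensity is (1 + w_k) / 2 for its unit direction w, one gradient per axis. Debevec mentions top-to-bottom and left-to-right gradients; a third, front-to-back gradient is assumed here to complete the vector. The ratio of each gradient image to the full-on image recovers one component of the direction reflecting toward the camera, and the vector halfway between that direction and the view vector is the normal estimate. Names are hypothetical.

```python
import math


def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)


def specular_normal(full, grad_x, grad_y, grad_z, view=(0.0, 0.0, 1.0)):
    """Estimate a surface normal at one pixel from specular-only images.

    full:   pixel value with every light at full intensity
    grad_k: pixel value when each light's intensity is (1 + w_k) / 2,
            w being that light's unit direction (ASSUMED gradient patterns)
    view:   unit vector from the skin point toward the camera (ASSUMED)
    """
    # Each ratio maps back from [0, 1] to [-1, 1]: one component of the
    # direction on the sphere that reflects into the camera at this pixel.
    reflect = normalize(tuple(2.0 * g / full - 1.0 for g in (grad_x, grad_y, grad_z)))
    # "Move it half way toward the view vector": the halfway vector is the normal.
    return normalize(tuple(r + v for r, v in zip(reflect, view)))


# A pixel reflecting the light directly behind the camera faces the camera.
print(specular_normal(1.0, 0.5, 0.5, 1.0))  # (0.0, 0.0, 1.0)
```

Because only the specular images are used, the estimate carries none of the blur that sub-surface scattering would add, which is the point Debevec makes about recovering fine skin detail.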

Light Stage 6

One of the previous Light Stages, LS 6, allowed a person to actually stand up and move (on a treadmill) inside the sphere of lights. This Light Stage could replicate lighting as the smaller version did, but it also had a set of cameras that allowed the viewing camera to be virtualized. Instead of viewing a person running from just one point of view, with a rotating treadmill and virtualized cameras, the ‘flat 2D’ runner could be seen running from any angle, in any lighting condition in the world.

Because a matte could also be extracted, by darkening the subject and lighting up a white screen, the runner could now even run alongside themselves, anytime, anywhere!
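A matte like this can be pulled with a simple ratio. In this Python sketch, one frame shows the unlit subject in front of the glowing white screen and a clean plate shows the screen alone; wherever the subject blocks the screen, alpha rises toward 1. The exact matting procedure used on Light Stage 6 isn’t described here, so treat this as an illustrative assumption, with hypothetical function names and single-channel pixel values.

```python
def backlit_matte(matte_frame, clean_plate):
    """Per-pixel alpha from a frame of the unlit subject in front of a lit
    white screen, compared with a clean plate of the screen alone.
    1.0 = subject fully blocks the screen, 0.0 = open background."""
    alpha = []
    for lit, clean in zip(matte_frame, clean_plate):
        if clean <= 0.0:
            alpha.append(1.0)  # no screen light here: treat as foreground (assumption)
            continue
        a = 1.0 - lit / clean
        alpha.append(min(max(a, 0.0), 1.0))
    return alpha


def composite(fg, bg, alpha):
    """Standard 'over' with an unpremultiplied foreground: subject over a new background."""
    return [a * f + (1.0 - a) * b for f, b, a in zip(fg, bg, alpha)]


# Three pixels: fully blocked, half-covered (e.g. hair), open screen.
alpha = backlit_matte([0.0, 0.5, 1.0], [1.0, 1.0, 1.0])
print(composite([0.8, 0.8, 0.8], [0.2, 0.2, 0.2], alpha))
```

Dividing by the clean plate, rather than thresholding, lets uneven screen brightness cancel out and keeps soft, partial coverage at the edges.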

Today

There have been several incarnations of the Light Stage; the newest, Light Stage X, is made up of 350-odd LED light sources that surround a person’s face inside the sphere. Originally, the Light Stage provided a 2D re-lighting process. Sony Pictures Imageworks wanted to apply that process to digital actors in Spider-Man 2 and Superman Returns. “To turn it into 3D,” explains Debevec, “they take the data back from our Light Stage – Light Stage 2, then – map it onto three-dimensional geometry that is like a facial animation rig and they would put that data onto the face”.

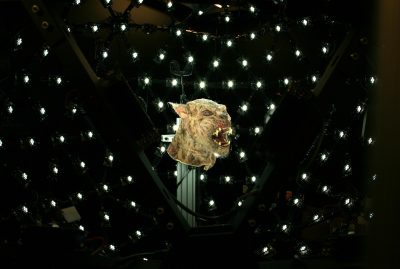

From those experiences, Debevec’s attention turned to providing ready-made data from the Light Stage capture process (i.e., geometry, texture maps and information about sub-surface scattering) that could be used in, say, a visual effects pipeline. That’s something the ICT team have been able to do, most recently, for the films Underworld: Awakening and Journey 2: The Mysterious Island. For Journey, the film’s principal cast was scanned and re-lightable texture maps (diffuse, normal and specular) were acquired that could be married to geometry. For Awakening, which required werewolf transformations, both actors and maquettes were scanned in the Light Stage.

Links

History of the Light Stage – http://gl.ict.usc.edu/LightStages

Paul Debevec’s home page – http://www.pauldebevec.com
