Rendering is always an exercise in managing how much computer power you are willing to devote to simulating reality – that cost is expressed in both dollars and time.

Once considered a commodity item in the whole CG / VFX world – rendering is now a hot topic. CG supervisor Scott Metzger jokes that one can’t talk about renderers without annoying someone. “Renderers are like religion (laughs). Rendering is a religion! Especially now in this era, which is really really exciting, there is so much going on and there are so many renderers, so much happening. To me it is the most exciting part of being in our industry right now.”

As Dana Batali, Vice President of RenderMan products at Pixar, commented to fxguide at an earlier Siggraph, “Rendering drives the largest computational budget of getting the pixels to the screen.” He pointed out that at that time ‘sims’ (physical sims like cloth etc) were only about 5% of most films’ computation budgets. Since rendering dominates the render farm, one cannot devote as much computation to perfecting the light simulation in every render as one can to a destruction simulation that appears in perhaps just one shot.

Renderers are easy to write in the abstract, perhaps as a university project, but writing one that works in production environments is extremely difficult. Arnold, by Solid Angle, is some 200,000 lines of highly optimized C++ code, and it is considered a very direct implementation without a lot of hacks or tricks. Production requirements in terms of render time and scene complexity are staggering. And the problem is not confined to final render time, as Arnold founder Marcos Fajardo pointed out at Siggraph 2010 – final render CPU time might cost $0.10 per hour, but artist time is closer to $40 an hour, so interactivity is also vital.

This leads to the heart of rendering: picking the approach that will get the results looking as good as possible in the time you have. More precisely, it means deciding which attributes of an image (be it complex shading, complex motion blur, sub-surface scattering or some other light effect) should be your priority, which ones will play in your shot, and which need to be more heavily compromised.

Rendering is an art of trying to cheat compromises.

Concepts

There are many choices and factors that influence the decision of a studio to pick one renderer or another, from price to their pipeline experiences, but for this article we focus on a comparison based on the needs of global illumination (GI) in an entertainment industry production environment. We have chosen to focus on major studios with the expectation that many smaller facilities are interested in the choices made by those larger companies with dedicated production and R&D personnel. This is not to lessen the importance of smaller facilities but rather to acknowledge the filter down nature of renderer choices.

Reflection and shading models

The goal of realistic rendering is to compute the amount of light reflected from visible scene surfaces that arrives at the virtual camera through image pixels. This light determines the color of image pixels. Key to that are the models of reflection/scattering and shading that are used to describe the appearance of a surface.

- Reflection/Scattering – How light interacts with the surface at a given point

- Shading – How material properties vary across the surface

A mathematical description of reflectance characteristics at a point is the BRDF – bidirectional reflectance distribution function.

BRDF

An object’s absorption, reflection or scattering is the relationship between incoming and outgoing illumination at a given point. This is at the heart of getting objects looking correct.

Descriptions of ‘scattering’ are usually given in terms of a bidirectional scattering distribution function or, as it is known, the object’s BSDF at that point.

Shading

Shading addresses how different types of scattering are distributed across the surface (i.e. which scattering function applies where). Descriptions of this kind are typically expressed with a program called a shader. A simple example of shading is texture mapping, which uses an image to specify the diffuse color at each point on a surface, giving it more apparent detail.
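As a rough illustration of the texture mapping example, the sketch below looks up a diffuse color from a small image and scales it by the cosine of the angle between the surface normal and the light. The texture contents, the function names and the simple cosine shading model are all assumptions made for illustration, not the shading language of any particular renderer.

```python
# Hypothetical 2x2 RGB texture, rows indexed by v and columns by u.
TEXTURE = [
    [(0.8, 0.1, 0.1), (0.1, 0.8, 0.1)],
    [(0.1, 0.1, 0.8), (0.9, 0.9, 0.9)],
]


def lookup_texture(texture, u, v):
    """Nearest-neighbour lookup for (u, v) coordinates in [0, 1]."""
    rows, cols = len(texture), len(texture[0])
    col = min(int(u * cols), cols - 1)
    row = min(int(v * rows), rows - 1)
    return texture[row][col]


def shade_diffuse(texture, u, v, normal, light_dir, light_color):
    """Diffuse color from the texture, scaled by the cosine of the light angle.

    normal and light_dir are assumed to be unit-length 3-vectors.
    """
    albedo = lookup_texture(texture, u, v)
    cos_theta = max(0.0, sum(n * l for n, l in zip(normal, light_dir)))
    return tuple(a * c * cos_theta for a, c in zip(albedo, light_color))
```

Here the "shader" is just `shade_diffuse`: the texture decides which diffuse color applies where, while the cosine term stands in for the scattering model at that point.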

The modern chase for realism revolves around more accurate simulation of light and the approaches renderers have taken to provide the best lighting solution. Key to current lighting solutions is global illumination.

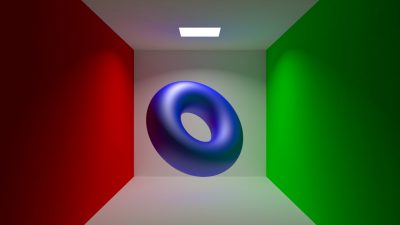

Global illumination

The defining aspect of the last few years of renderers has been global illumination (GI).

Jeremy Birn (lighting TD at Pixar and author of Digital Lighting and Rendering, 2006) succinctly defines GI as “any rendering algorithm that simulates the inter-reflection of light between two surfaces. When rendering with global illumination, you don’t need to add bounce lights to simulate indirect light, because the software calculates indirect light for you based on the direct illumination hitting surfaces in your scene”.

We want to gather the contribution from all the other surfaces in a way that takes into account the BRDF and the radiance arriving from each direction. GI makes CG lighting much more like real world lighting and accounts for radiosity, the color bleeding that happens when non-reflective surfaces still provide bounce light, and bounce light tinted by their diffuse color.
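The quantity being gathered here is commonly written as the rendering equation (Kajiya, 1986). The notation below is not taken from the interviews in this article and is offered only as orientation: $L_o$ is the outgoing radiance at point $x$ in direction $\omega_o$, $L_e$ is emitted radiance, $f_r$ is the BRDF, $L_i$ is the incoming radiance from direction $\omega_i$, and $n$ is the surface normal.

```latex
L_o(x, \omega_o) = L_e(x, \omega_o)
  + \int_{\Omega} f_r(x, \omega_i, \omega_o)\, L_i(x, \omega_i)\, (n \cdot \omega_i)\, d\omega_i
```

The GI solutions listed next can be read as different ways of approximating the integral term, since $L_i$ itself depends on light leaving every other surface.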

Solutions:

- Radiosity

- Photon mapping (and with final gathering)

- Point clouds

- Brick maps

- Monte Carlo ray tracing

Conventional radiosity

Conventional radiosity is an approach to GI where indirect light is transmitted between surfaces by diffuse reflection of their surface color, and stored in the vertices of the surface meshes. While this was one of the first types of GI to become available, the resolution of your geometry is linked to the resolution of your GI solution. To achieve more detail in the shadows, you need to increase the poly count, and if the objects are animated and moving the solution needs to be recomputed every frame. As such it was not popular in VFX.
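A minimal sketch of the idea, assuming the scene has already been reduced to a handful of diffuse patches with known form factors (the fraction of light leaving one patch that reaches another). The function name, the inputs and the simple fixed-iteration solver are illustrative assumptions, not how any production radiosity system was written.

```python
def solve_radiosity(emission, reflectance, form_factors, iterations=50):
    """Iterate B_i = E_i + rho_i * sum_j F_ij * B_j for a fixed number of passes.

    emission, reflectance: one value per patch.
    form_factors[i][j]: assumed fraction of light from patch j arriving at patch i.
    """
    n = len(emission)
    radiosity = list(emission)  # start from direct emission only
    for _ in range(iterations):
        radiosity = [
            emission[i]
            + reflectance[i] * sum(form_factors[i][j] * radiosity[j] for j in range(n))
            for i in range(n)
        ]
    return radiosity
```

Because one value is stored per patch (or per vertex), the only way to capture finer lighting detail in this scheme is to subdivide the geometry, which is the limitation described above.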

In a simple ray tracer, ray directions are determined regularly, normally on a simple grid. But there is a key alternative, Monte Carlo ray tracing, also known as stochastic ray tracing. In Monte Carlo ray tracing the ray origins, directions, and/or times are set using random numbers (see the Monte Carlo ray tracer section below).
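The difference between the two sampling styles can be sketched for a single pixel. This is a simplified illustration with made-up function names: it only generates sample positions and shutter times, and leaves out the actual ray casting.

```python
import random


def grid_samples(n):
    """n x n sample positions at the centres of a regular grid over one pixel."""
    return [((i + 0.5) / n, (j + 0.5) / n) for j in range(n) for i in range(n)]


def jittered_samples(n, rng):
    """One random position inside each of the n x n grid cells (stratified sampling)."""
    return [((i + rng.random()) / n, (j + rng.random()) / n)
            for j in range(n) for i in range(n)]


def shutter_time(rng, shutter_open=0.0, shutter_close=1.0):
    """A random time within the shutter interval, as used for motion blur."""
    return rng.uniform(shutter_open, shutter_close)
```

With `grid_samples`, every pixel is sampled at the same offsets, which tends to produce structured aliasing. The jittered version trades that structure for noise, and the random shutter time is what lets a stochastic ray tracer average a moving object over the frame.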

A ray tracer suffers in render time as the number of shiny surfaces and the number of lights and objects balloons – as it tends to on a major effects shot.

The key with a ray tracer is not its complexity but the complexity of its optimizations and implementation.

The key concepts are simple enough, but delivering a production ray tracer that stays inside a computational budget on exceedingly complex projects is no small task. Until recently, full ray tracers were not used for animation. They were popular for still shots, or very small special cases, but most commercial ray tracing happened as part of a larger, hybrid solution.

Now that that is changing there is great demand for the amazing accuracy and subtlety of a ray tracing solution. But the key is to stay focused on producing good results not necessarily accurate results. In films and TV shows it is rare that accuracy is regarded as the absolute yardstick. Flexibility to solve directorial requirements need not also encompass physical accuracy, but the ability to create realistic imagery is pivotal.

Photon mapping

The photon mapping method is an extension of ray tracing. In 1989, Andrew Glassner wrote about ray tracing in An introduction to ray tracing:

“Today ray tracing is one of the most popular and powerful techniques in the image synthesis repertoire: it is simple, elegant, and easily implemented. [However] there are some aspects of the real world that ray tracing doesn’t handle very well (or at all!) as of this writing. Perhaps the most important omissions are diffuse inter-reflections (e.g. the ‘bleeding’ of colored light from a dull red file cabinet onto a white carpet, giving the carpet a pink tint) etc.”

The photon map algorithm was developed in 1993–1994 and the first papers on the method were published in 1995. It is a versatile algorithm capable of simulating global illumination including caustics and diffuse inter-reflections. And for many years it provided the same flexibility as the more general ray tracing methods using only a fraction of the computation time.

The key difference between photon mapping and early radiosity, which stored values at each vertex, is that in the new approach the GI is stored in a separate data type – the photon map. The resolution of a photon map is independent of the rest of the geometry.

The speed and accuracy of the photon map depend on the number of ‘photons’ used. They bounce around a scene, bouncing off any surface that should be brightened by indirect light, and are stored in a map – not unlike a ‘paint gun’ effect where the blotches reflect the photons. This means that you can get good results from just photon maps, but if the number of photons is too low, the results will not be smooth.

The solution to this problem is to use photon maps with final gathering, which in effect smooths out the photon map, providing much more continuous and smoother illumination. In addition to ‘filtering’ the photon map it provides what Birn describes as something that “functions as a global illumination solution unto itself, adding an extra bounce of indirect light.”

The photon map with final gathering for computation of global illumination is a three-pass method:

- First, photons are emitted from the light sources, traced through the scene, and stored in a photon map when they hit non-specular objects

- Then, the unorganized collection of stored photons is sorted into a tree

- Finally, the scene is rendered using final gathering (a single level of distribution ray tracing). The irradiance at final gather ray hit points is estimated from the density and power of the nearest photons. Irradiance interpolation is used to reduce the number of final gathers.
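The density estimate in the final step can be sketched as follows. To keep the example short it searches the photon list by brute force rather than using the sorted tree described above, and it treats photon power as a single number instead of a color. Both are simplifications, and the function names are illustrative.

```python
import heapq
import math


def dist2(a, b):
    """Squared distance between two 3D points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def estimate_irradiance(photons, point, k):
    """Estimate irradiance at `point` from the k nearest stored photons.

    photons: list of (position, power) pairs from the photon tracing pass.
    The estimate is the summed power divided by the area of the disc
    that just encloses the k nearest photons.
    """
    nearest = heapq.nsmallest(k, photons, key=lambda p: dist2(p[0], point))
    if not nearest:
        return 0.0
    radius2 = dist2(nearest[-1][0], point)
    if radius2 == 0.0:
        return 0.0
    return sum(power for _, power in nearest) / (math.pi * radius2)
```

With too few photons the enclosing disc becomes large and the estimate blotchy, which is the smoothness problem that final gathering is used to hide.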

The photon map is still decoupled from the geometric representation of the scene. This is a key feature of the algorithm, making it capable of simulating global illumination in complex scenes containing millions of triangles, instanced geometry, and complex procedurally defined objects.

Combining global illumination with final gathering can achieve the most physically accurate illumination results required, and it is widely used for interior architectural shots that require the effect of the light contribution from exterior and interior light sources. To seamlessly turn final gather on and off with global illumination, a scene must be modeled in a physically plausible way for both of these lighting effects. For example, lights should have roughly identical values for color (direct light) and energy (photons) attributes. Materials are normally also designed to be physically plausible. So in Softimage, for example, there is an Architectural Material (mia_material) shader that is designed to support most physical materials used by architectural and product design renderings. It supports most hard surface materials such as metal, wood, and glass. It is tuned especially for glossy reflections and refractions and high-quality glass.

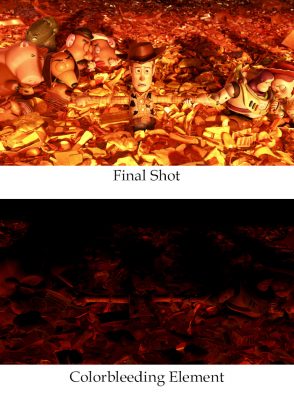

Radiance or ‘color bleeding’ in RenderMan

As time progressed companies aimed to add radiosity to images, even if they were not doing a full ray tracing solution. With release v11 of Pixar’s RenderMan, ray tracing was implemented as part of the shading language, to aid in rendering physically accurate interreflections, refractions, and ambient occlusion. In the case of RenderMan today, v16 offers several new features implemented specifically to enhance the performance of ray traced radiosity, including a radiosity cache, physically plausible shading, and a pure brute force ray tracing solution. The new Raytrace Hider in v16 lets renders bypass the REYES algorithm altogether. The Raytrace Hider is a new option that allows one to render images using pure ray tracing, bypassing the usual rasterization process that PRMan uses. Rays can now be shot from the camera with jittered time samples and lens positions to produce accurate motion blur and depth of field effects. With v16, ray traced GI has become a viable production tool. Prior to v16, RenderMan was still producing great GI solutions using multi-pass solutions.

Before the expense of ray traced radiosity became feasible for large productions, Pixar’s RenderMan tackled GI in two distinct ways, one using ray tracing to just add the indirect radiance after the direct illumination has been calculated, and the other using a version with no ray tracing at all. These techniques were first used in production on Pirates of the Caribbean: Dead Man’s Chest (2006). For production scenes with complex shaders and geometry, these techniques proved to be relatively fast, memory efficient, and artifact free, but because of their multipass nature, they required significant disk I/O and careful asset management, and were unsuitable for interactive re-lighting.

Pixar’s RenderMan provided two multipass options for natural color bleeding: brick maps (an approach similar to photon mapping) and point clouds. We spoke to Pixar’s Per H Christensen about these two multi-pass approaches.

In the accompanying audio interview, Mike Seymour talks to Per Christensen, who explains the differences between brick maps and point clouds. Christensen is a senior software developer in Pixar’s RenderMan group in Seattle. His main research interest is efficient ray tracing and global illumination in very complex scenes. Before joining Pixar, he worked for Mental Images in Berlin and Square USA in Honolulu. He received a Master’s Degree in electrical engineering from the Technical University of Denmark and a Ph.D. in computer graphics from the University of Washington.

Option 1, brick maps: a ray traced solution for indirect illumination or radiosity

The steps are:

– Render with direct illumination. During this render the software writes out a point cloud, with each point in the cloud carrying the direct illumination color. This is “baking the direct illumination”.

– Then the software converts this point cloud into a 3D brick map. This 3D map – very much like a texture map – is effectively independent of the camera.

– The final step is to render the final image. For each shading point where you want to know the indirect illumination or the radiance, the software shoots rays back out into the scene and looks up the color in the 3D brick map at the point each ray hits. Doing it this way gets expensive very quickly, but RenderMan is optimized to just look up in the brick map and to minimize the number of rays. As REYES divides the surface into micropolygons, RenderMan does this well.
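The bake-then-look-up pattern in these steps can be sketched with a plain voxel grid. This is a deliberately simplified stand-in: a real brick map is a sparse, multi-resolution structure, whereas the hypothetical `build_voxel_map` below uses a single fixed voxel size and a dictionary.

```python
from collections import defaultdict


def voxel_key(position, voxel_size):
    """Integer grid coordinates of the voxel containing `position`."""
    return tuple(int(c // voxel_size) for c in position)


def build_voxel_map(points, voxel_size):
    """Average the baked direct-illumination color of all points in each voxel.

    points: list of (position, color) pairs written out by the first render pass.
    """
    sums = defaultdict(lambda: [0.0, 0.0, 0.0, 0])
    for position, color in points:
        cell = sums[voxel_key(position, voxel_size)]
        for i in range(3):
            cell[i] += color[i]
        cell[3] += 1
    return {key: tuple(s / cell[3] for s in cell[:3]) for key, cell in sums.items()}


def lookup(voxel_map, position, voxel_size, default=(0.0, 0.0, 0.0)):
    """Color stored for the voxel containing `position`, e.g. at a ray hit point."""
    return voxel_map.get(voxel_key(position, voxel_size), default)
```

In the final pass, each indirect ray would call something like `lookup` at its hit point instead of running the surface's full shader again, which is where the saving comes from.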

Option 2, point clouds: solving indirect illumination or radiosity with no ray tracing

The steps are:

– Render with direct illumination as before and write out a point cloud (each point has the direct illumination color), but do not build the brick map

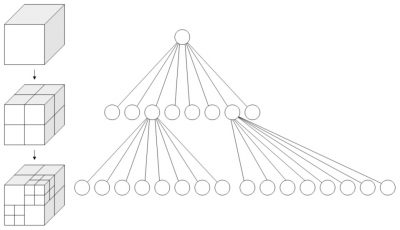

– Render the final image. For each shading point where before you would shoot rays into a brick map, you now look up in an octree. Points close to the shading point in 3D space are evaluated fully, but points a long way away are clumped together for an aggregate solution. In a way, at each shading point you want a fisheye view of the world, a rasterization of the world from that point, but using the octree to speed everything up.

RenderMan does not account for every point in the cloud individually and does not use ray tracing at all in this method.
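The "evaluate nearby points fully, clump distant ones" idea can be sketched with a small point hierarchy. Several assumptions are made to keep it short: the hierarchy is built by median splits rather than as a true octree, each point contributes simply its radiance times area divided by squared distance, and visibility and surface orientation are ignored. The class and function names are hypothetical.

```python
import math


class Cluster:
    """A node in a point hierarchy: either one point or an aggregate of many."""

    def __init__(self, points):
        # points: list of (position, radiance, area) tuples baked in the first pass.
        count = len(points)
        self.center = tuple(sum(p[0][a] for p in points) / count for a in range(3))
        self.radius = max(math.dist(p[0], self.center) for p in points)
        self.power = sum(radiance * area for _, radiance, area in points)
        self.children = []
        if count > 1:
            # Split at the median along the widest axis (a stand-in for octree cells).
            def extent(a):
                return max(p[0][a] for p in points) - min(p[0][a] for p in points)
            axis = max(range(3), key=extent)
            ordered = sorted(points, key=lambda p: p[0][axis])
            self.children = [Cluster(ordered[:count // 2]), Cluster(ordered[count // 2:])]


def gather(node, shading_point, max_angle):
    """Return (approximate contribution, number of nodes evaluated).

    A cluster is used as a single aggregate when it looks small from the
    shading point, i.e. radius / distance < max_angle. Otherwise its
    children are visited.
    """
    d = math.dist(node.center, shading_point)
    if not node.children or (d > 0.0 and node.radius / d < max_angle):
        return node.power / max(d * d, 1e-9), 1
    total, evaluated = 0.0, 0
    for child in node.children:
        value, count = gather(child, shading_point, max_angle)
        total += value
        evaluated += count
    return total, evaluated
```

Setting `max_angle` to zero forces every point to be visited. Raising it lets distant clusters be evaluated once as an aggregate, which is the trade of a little accuracy for a large drop in work described above.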

To deal with these very big point clouds there is a cache system that reads the points as you need them. Similarly, for the ray tracing method, the software was optimized to allow for dynamically offloading of geometry when not needed to reduce memory use.

In the past, TDs picked one of these two methods for a show (film) to establish the approach that works for that film’s scenes. For example, Wall-E used the point cloud methods for ambient occlusion, as there was a lot of very dense garbage (in the start of the film) and for the ray tracing method they would need to access all of the geometry to determine ray intersections, making the point cloud method the one the Pixar team selected to use for that film.

Importantly, most of the discussion above is about diffuse transport. To cover a broader, more general approach to GI it should be stated that only photon mapping and Monte Carlo ray tracing allow one to solve for the specular paths, or even diffuse to specular lighting effects such as caustics. Caustics remain a very complex and demanding issue. While solved many times in production, they tend to be rarely solved directly and are more often handled as a special case.

The RenderMan team used to recommend using multipass methods such as baking the direct illumination or photon mapping. But with the multi-resolution radiosity cache introduced in PRMan v16, the new techniques are just as efficient and much easier to use.

Christophe Hery, Senior Scientist at Pixar says, “Obviously I have been a big supporter of multipass approaches in the past, and in particular of point-based techniques. But through my more recent Physically Based and Energy Conservation work (primarily at ILM with my former colleague Simon Premoze – though I now reproduced and enhanced it at Pixar), I discovered that Multi Importance Sampling is a very practical solution for allowing a unification of illumination: for instance, it is because we sample the BRDFs that we can transparently swap a specular emitting light of a given shape and intensity, with a geometry of the same size and color, and in essence get the same reflection from it (obviously a crucial part to get that working is HDR textures). Interestingly, solving the visibility for specular (ray-tracing for MIS) will essentially give you for free the shadowing on your diffuse components. Associate to that the radiosity cache (from PRMan v16) and then you find yourself in a situation where the PBGI (Point Based Global Illumination) stuff or even spherical harmonics become obsolete (to justify these, you would need to have more or less a pure diffuse illumination, at least a low frequency light field).”

Christophe Hery joined Pixar in 2010 after spending 17 years at ILM. In 2010 he received a Technical Achievement Award for the development of point-based rendering for indirect illumination and ambient occlusion. He is recognized throughout the industry as one of the leading technical innovators and researchers in areas of lighting and rendering. As such, fxguide asked for his personal opinion as to whether this means he increasingly favors a full ray traced solution.

“Yes. I believe the whole industry is moving in that direction. Normalized BRDFs and area lights, in conjunction (through MIS), deliver a plausible image with minimum tweaking, empowering the artists to focus in beautifying the shots.”

In recent times at Siggraph and elsewhere there have been advances in lighting such as the use of spherical harmonics, but the need for these cutting edge approaches is somewhat reduced by adopting a more complete ray tracing solution. Hery expands on the point above:

“SHs (Spherical Harmonics) as a full pre-canned illumination solution can ‘only’ reproduce low frequency luminaires or materials. As such, they are not a complete approach, and they always need to be supplemented by something else. Plus they come with serious issues related to precomputation and storage. If one is to use MIS to achieve specular, one might as well share the light sampling (and traced visibility) and essentially get diffuse for free.”
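The mechanics of multiple importance sampling can be shown on a toy one-dimensional integral. In the sketch below, two sampling strategies stand in for "sample the light" and "sample the BRDF", and their results are combined with the balance heuristic. The integrand, the two densities and the function names are illustrative assumptions and are not taken from Hery's work or from PRMan.

```python
import math
import random


def balance_weight(pdf_this, pdf_other):
    """Balance heuristic: weight a sample by its share of the combined density."""
    total = pdf_this + pdf_other
    return pdf_this / total if total > 0.0 else 0.0


def mis_estimate(f, n, rng):
    """Estimate the integral of f over [0, 1] using two sampling strategies."""
    def pdf_a(x):
        return 1.0          # strategy A: uniform samples

    def pdf_b(x):
        return 2.0 * x      # strategy B: favours x near 1

    total = 0.0
    for _ in range(n):
        xa = rng.random()                 # drawn with density pdf_a
        xb = math.sqrt(rng.random())      # drawn with density pdf_b
        total += balance_weight(pdf_a(xa), pdf_b(xa)) * f(xa) / pdf_a(xa)
        if pdf_b(xb) > 0.0:
            total += balance_weight(pdf_b(xb), pdf_a(xb)) * f(xb) / pdf_b(xb)
    return total / n
```

Because the two weights at any point sum to one, neither strategy is counted twice, and whichever density fits the integrand better in a given region dominates there. That is the property that allows light sampling and BRDF sampling to be shared across specular and diffuse terms.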

When the master GI solution is ray traced, the setups are also easy: there is no special extra data to manage. Hery, again:

“You can even make the whole thing incremental (in a progressive refinement manner), enabling fast interactions. With PRMan v16, I do not think we are at a time that it makes sense to trace the camera rays (better to rely on Reyes tessellation), but everything else can. On the other hand, Lumiere PRMan’s relighter, is starting to work great in pure ray-trace hider mode.”

Lumiere is a part of RenderMan RPS, and provides an API for developers to access. Lumiere is the REYES re-renderer, but there is also the RAYS re-renderer/relighter. Many studios have written their own interface into Lumiere, including from inside Nuke, inside Maya, or as a stand-alone facility application. Lumiere is actually a name given to two different types of re-rendering inside Pixar, both a relighting Katana-style tool and an interactive tool – the name is used on several of Pixar’s internal tools.

Monte Carlo ray tracer

Arnold is an example of a Monte Carlo ray tracer: it is an unbiased, uni-directional stochastic ray tracer. Unlike RenderMan it uses ray tracing for both direct and indirect lighting, but unlike earlier ray tracers it is not slow or difficult to use with animations and moving objects. Arnold is very much a production renderer designed precisely for VFX and animation production (see below).

Arnold fully supports GI and provides incredibly high levels of realism and visual subtlety while also covering the flexibility needed for productions.

Arnold has no rasterization tricks, no irradiance caches or photon maps for light sources. According to Eric Haines (Ray Tracing News): “Motion blur and depth of field effects weakens rasterization’s appeal, since much sampling has to be done either way; these features are a natural part of stochastic ray tracing. Not being a hybrid system using some form of rasterization has a number of advantages. First, there’s only a single version of the model stored, not one for the rasterizer and another for the ray tracer. Reducing memory footprint is critical for rendering large scenes, so this helps considerably. Arnold also uses compression and quantization of data (lower precision) in order to reduce memory costs. Not using two methods of rendering avoids other headaches: maintaining two separate renderers, fixing mis-syncs between the two (e.g., one thinks a surface is in one location, the other has a different result), dealing with a large number of effects consistently in both, etc.”

Avoiding irradiance caches, as in the hybrid approaches above, means that there is minimal precomputation time for Arnold. This means rendering can happen immediately versus waiting for precomputations to be completed. Combined with progressive rendering (where an image is roughed out and improves over time), this is an advantage in a production environment, as artists and technical directors can then iterate more rapidly.
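Progressive rendering of this kind can be sketched as a running average that is handed back after every pass. The `sample_pixel` callback is a placeholder for whatever traces one noisy sample through a pixel. It and the other names here are assumptions for illustration and do not reflect Arnold's API.

```python
import random


def progressive_render(sample_pixel, width, height, passes, rng):
    """Yield a refined image after every pass.

    Each pass adds one more random sample per pixel. The image yielded
    is the average of all samples so far, so it starts noisy and converges.
    """
    accum = [[0.0] * width for _ in range(height)]
    for n in range(1, passes + 1):
        for y in range(height):
            for x in range(width):
                accum[y][x] += sample_pixel(x, y, rng)
        yield [[value / n for value in row] for row in accum]
```

Since nothing is precomputed, the first rough image is available after a single pass, and an artist can stop the loop as soon as the result is good enough to judge.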

Image Based Lighting (IBL)

An important part of GI is image based lighting. IBL involves capturing an omni-directional representation of real-world light information as an image, typically in one of three ways:

Dreamworks (updated)

It would be wrong to imply all productions are moving away from PBGI and are focused just on ray tracing. While Dreamworks do not use RenderMan – they use their own renderer – they have been using point based solutions very successfully on their films for some time. Eric Tabellion from PDI/Dreamworks R&D published the following as part of his 2011 Eurographics paper:

“Point-based global illumination (PBGI) is an efficient method for computing smooth interreflections which are vital for photo-realistic rendering. PBGI is an alternative to ray tracing based approaches, such as Monte Carlo path tracing or irradiance caching and has been utilized in several DreamWorks Animation feature films, including “Shrek Forever After”, “Megamind”, and many others.

In its most basic form, PBGI is a level-of-detail (LOD) algorithm for simplifying both geometry and shading. As a preprocess, the geometry is finely sampled using a large number of points, which are shaded using direct illumination and a view-independent diffuse shading model. This point-based representation is then aggregated into a hierarchy, usually an octree, where each coarser level accumulates the geometry and outgoing radiance from the finer levels.”

At Siggraph 2010 Tabellion summarized it as: “GI remains very valuable for animated film production lighting. We’ve analyzed both ray-tracing and point-based GI approaches … overall, ray-tracing is more appropriate for applications seeking higher accuracy or when scenes have large flat surfaces without too much high-frequency texture detail. Points offer a close approximation and are a very appealing alternative for film production. Both approaches produce images that are visually very similar, but point-based GI produces very stable images more efficiently while handling higher scene complexity.”

“When considering rendering performance and scene complexity handling, I’m still a big fan of PBGI,” Tabellion explained to fxguide this month, but he also pointed out that, “When considering ease of use and setup, interactivity (minimize the ‘time to first pixel’) and reliability / predictability, then I think rendering systems based on ray tracing are better.”

The Main Renderers

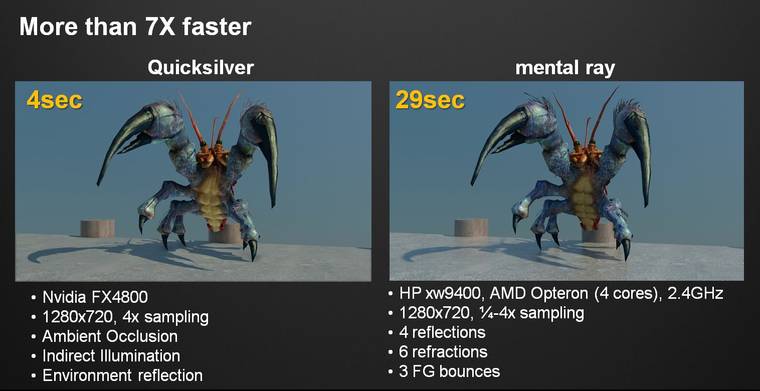

The market for renderers can be divided into three groups: stand alone packages such as RenderMan, application specific packages such as Mantra (Houdini’s renderer), and GPU renderers that may or may not be bundled with an application, such as Quicksilver, which ships with 3ds Max. Of these, the production gold standard for the past few decades has been RenderMan from Pixar’s RenderMan group.

RenderMan – Pixar

Photorealistic RenderMan, PRMan, is Pixar’s primary renderer and one of the most influential and respected renderers in the world. Not only has every major Pixar film, and thus many Academy Award winning animated feature films, used it, it is also the blue ribbon high end package that all other renderers must be compared to.

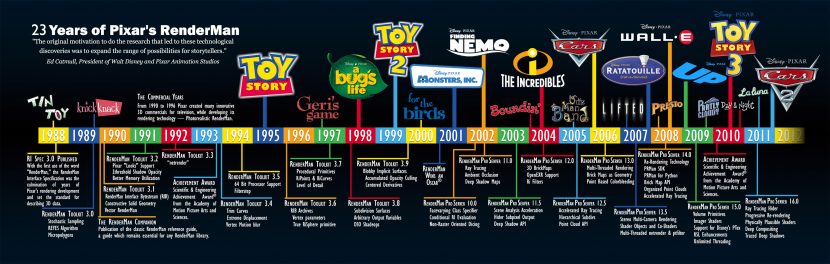

The first use of the word RenderMan was in the RenderMan Interface Specification, in 1988. Strictly speaking, PRMan is a RenderMan compliant renderer, and there are other RenderMan compliant renderers not made by Pixar.

PRMan uses the REYES (Renders Everything You Ever Saw) algorithm, but it is also able to deploy ray tracing and global illumination algorithms, making it a hybrid renderer. Loren Carpenter and Robert Cook developed the original REYES renderer when Pixar was part of Lucasfilm’s computer and graphics research unit in the early 1980s. It was first used commercially to render the landmark Genesis Sequence in Star Trek II: The Wrath of Khan (1982).

Since the beginning of the CGI / VFX industry, Pixar’s RenderMan has played a key role, rendering VFX for classic films such as The Abyss, Terminator 2 and Jurassic Park, and fully animated features such as the Toy Story series, Wall-E, Cars and the rest of the great Pixar films. RenderMan was involved in 84 VFX films and 23 animated films in 2011. It is worth noting that this is approximately ten times the volume of a decade ago.

Today, Pixar’s RenderMan has evolved to become the de facto standard for much of the VFX industry, used everywhere by studios large and small to create outstanding graphics for feature films and broadcast television. Pixar’s RenderMan has been used on every Visual Effects Academy Award winner of the past 16 years, and as of last year, 47 out of the last 50 nominees for Visual Effects have chosen Pixar’s RenderMan to render their effects. Since 1999, Pixar has been awarded some five technical Oscars, ranging from the 2011 Scientific & Engineering Award to David Laur (Senior RenderMan Developer) for his work with Pixar’s Alfred render queue management system, to the Academy honoring Pixar’s head Ed Catmull with the Gordon E. Sawyer Award for a lifetime of technical contributions and leadership in the field of computer graphics, including his work with Pixar’s RenderMan.

Today PRMan is used to render everything that comes out of Pixar and is commercially available to other studios as either part of the RenderMan Pro Server or directly for Maya. There is also renderfarm queue management software and special student and educational versions.

RenderMan History

The Early Days 1988 to 1995

- RenderMan renders curved surfaces by using micropolygons about the size of a pixel

- It did not originally use ray tracing, but ray tracing was added in 2002, making RenderMan a hybrid renderer

- It uses high level primitives: one can ask RenderMan to render a sphere at a given location without having to define the sphere’s geometry oneself. Geometry is tessellated in screen space

- From the outset it was very well designed for parallel processing a scene on a renderfarm. It was a tiled approach and thus only needed to use a fraction of the total geometry per tile, making it very efficient

- It used stochastic (randomizing) sampling – and very early on it had motion blur which allowed it to be used very effectively for effects work, integrating with live action more seamlessly

- And it was very well suited to displacement mapping

1995

Toy Story was released, and by then RenderMan had 64-bit support and was at RenderMan toolkit 3.5.

2001

Monsters Inc was released and the ricurve primitive, for rendering fur and hair, was added.

2002

Finding Nemo was released.

Ray tracing was introduced, in RenderMan Pro Server 11. This was a key release – it included not only ray tracing but also ambient occlusion and deep shadow maps.

2004

The first widespread use of ray tracing at Pixar was for ambient occlusion in the movie The Incredibles.

2005

Accelerated ray tracing was introduced for the film Cars. Cars used pure ray tracing without color-bleeding, radiance or radiosity effects. The ray tracing did provide accurate sharp reflections and shadows – notably ambient occlusion, to provide very realistic car paint textures.

2006 – 2007

Brick maps and point based color bleeding were introduced.

Ratatouille was released with dramatic sub surface scattering used on the elaborate food shots. But the film pre-dated the new brick map and point based color bleeding, using it only for some shiny pots and pan shots, but not widely throughout the film.

2008

Wall-E was released and made extensive use of brick maps.

2009

Pro Server v15 was released, including Ptex support for the first time.

2010 – 2012

PRMan today supports deep compositing, a new ray tracing hider, a radiosity cache, and physically plausible shading. It is still a hybrid renderer, but with v16 it is possible to just ray trace and not use the REYES algorithm. This is not to imply that the REYES approach is being phased out. As Dylan Sisson, RenderMan Technical Marketing Specialist at Pixar, explains, “Today more complex scenes than ever before can be handled by pure ray tracing, but not all scenes. There is room for alternative techniques and tools which may be useful depending upon the unique demands of any given scene, so the RenderMan team sees its role as providing many varied approaches to a user – choice rather than just ‘one tool in the tool box.’”

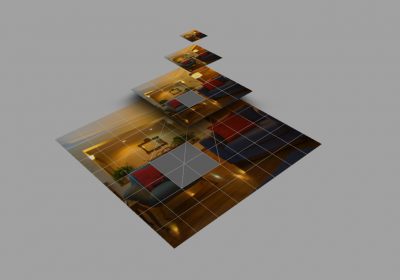

Pixar Toy Story 3 shot progression

Current developments

Today some companies like Weta Digital have scenes that are too complex for an ‘all-in-one’ ray tracing solution. For some of their work, ray tracing just isn’t a solution. “If you look at companies like Weta who are putting out really really large data sets,” says Sisson, “the size of data sets they are generating is mind boggling. So for those top level studios there is going to be a place for point based solutions for scenes that can’t be rendered with ray tracing. But on the other hand, there are going to be more and more types of shots where ray traced GI will be perfectly reasonable.”

The use of ray tracing or not is a direct factor of image rendering time. Performance is therefore a big issue for the engineers in the RenderMan team. “If you look at the performance with the new radiosity cache, RPS 16 can be up to 40 times faster than RPS 15 on some of the scenes we have tested in production. So RPS 16 is just much faster,” notes Sisson.

Today RenderMan ships as RenderMan for Maya 4.0, RenderMan Studio 3.0 and RenderMan Pro Server 16.0.

RPS 16 is very much a landmark release for RenderMan. It is significant not only for what was added but also because it lays the groundwork for several new chapters in the RenderMan story. RPS 17 is planned for release in 2012, and it will very much build on 16, especially in providing improvements in ray tracing as they relate, for example, to volumetrics, photon mapping and object instancing. “RPS 16 is a foundational release,” says Sisson, “We looked on the horizon and we see that more expensive effects like ray traced GI and ray traced subsurface scattering will become applicable to production VFX, even becoming the norm. We’ve gone to great lengths to make PRMan very savvy about how it calculates these effects, so we implemented the radiosity cache, the plausible shading, the ray tracing hider, creating a solid base for rendering ray traced radiosity, interactive re-rendering, etc. We’re buying into the idea that we want to provide people with a great tool box – so if you want to render everything in one pass – great – but if you want to break your scene up and render it in many passes, you can do that too.”

Although RPS16 is still based on the REYES algorithm, the team at Pixar have extended PRMan with various ray tracing tools while maintaining RenderMan’s reputation for handling the most complex production scenes. By the use of ray differentials and multi-resolution texture and tessellation caches, very complex scenes can be ray-traced and high levels of radiosity realism can be used in production.

Which raises two questions – why go this way, and where is this heading?

- Why add ray tracing? Certain desirable special effects, such as accurate environment reflection, inter-reflection, refraction, and colored shadows can be easily handled by a general tracing facility. Also, the same internal facilities are required for generating photon maps, which Pixar uses for global illumination. (In other words, RenderMan already has ray tracing and has had it for some time; this new work builds on it.)

- So has PRMan been replaced by a ray tracing renderer? Pixar’s existing PhotoRealistic RenderMan product has been extended to add ray traced shading as an integrated set of new features. PRMan continues to be based on Pixar’s highly evolved version of the REYES algorithm, which has been so successful in handling large production shots. In fact, Pixar continues to add interesting new features and performance enhancements to the core renderer, independent of ray tracing, often in response to their own demanding feature film requirements. Ray tracing has been added to the shader writer’s toolkit, in an advanced form which builds on the tracing proposal that has been part of REYES all along. PRMan still efficiently sets up primitives for rendering as it always has, but now shaders that execute on those primitives have the ability to use several built-in ray tracing operators to probe other geometry in the scene. The techniques that long-time PRMan users have learned and refined will continue to be useful and effective, ray tracing just adds to the bag of tricks. Indeed, many scenes will not benefit from adding ray traced effects, but they can now be added when required in a fully integrated fashion.

An example of ray tracing being used prior to RPS 16 is in the films Cars and Cars 2. While the sharp reflections on the cars were generated with ray tracing, an even better example of a complex yet unusual use of the algorithms is in the background car crowd scenes.

To produce large background crowds, a huge number of cars were required. Pixar used Massive, Houdini, and Marionette – their own in-house animation system – to produce the car crowds in the stands. To render the cars a technique called ‘Shrink-wraps’ was developed. Pixar used displacements to ‘shrink-wrap’ a cube shape down to a car shape for the crowd cars in Cars and Cars 2. Essentially, the crowd cars collapsed the entire model hierarchy down to a box for the body and four cylinders for the wheels. These simple base shapes were then displaced to the shape of ‘hero cars’ that had been separately and previously traced. The ‘hero car’ models were placed in a sphere that was traced, and the resulting data was then applied to the very simple shapes to displace them so that they look like much heavier, very complex geometry. These ‘crowd cars’ render extremely fast and the geometry is tiny by comparison.

“That is one of the neat things you can do with RenderMan,” explains Sisson. “You can write arbitrary shaders that allow you to create interesting assets.” To create the Shrink-wraps for the cars the team “essentially placed a virtual sphere around the actual model of the car (hero car) and then used a shader to trace into that sphere along the normal until it hit the car, and then would report back the distance, to create a 32-bit float map for displacement. This is a great example of how flexible ray tracing is in RenderMan – it is not just casting a ray and retrieving a color – rays can generate arbitrary data like these shrink wraps.”
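The tracing step Sisson describes can be sketched in a few lines of Python. This is only an illustration of the idea, not Pixar's shader: the axis-aligned box standing in for the hero car, the latitude/longitude layout of the map, and the function names `ray_box_distance` and `shrink_wrap_map` are all assumptions made for the example.

```python
import math

def ray_box_distance(origin, direction, box_min, box_max):
    """Distance along a ray to an axis-aligned box (slab method), or None on a miss."""
    t_near, t_far = -math.inf, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if abs(d) < 1e-12:
            # Ray is parallel to this pair of slabs: it misses unless it starts between them.
            if o < lo or o > hi:
                return None
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return None
    return t_near if t_near >= 0.0 else None

def shrink_wrap_map(radius, box_min, box_max, rows=8, cols=16):
    """Build a small distance map by tracing inward from a sphere around the model.

    Each texel corresponds to one point on the sphere; the stored value is how far
    a ray travels along the inward normal before it hits the stand-in model.
    """
    depth = []
    for r in range(rows):
        theta = math.pi * (r + 0.5) / rows
        row = []
        for c in range(cols):
            phi = 2.0 * math.pi * (c + 0.5) / cols
            normal = (math.sin(theta) * math.cos(phi),
                      math.cos(theta),
                      math.sin(theta) * math.sin(phi))
            origin = tuple(radius * n for n in normal)
            inward = tuple(-n for n in normal)
            row.append(ray_box_distance(origin, inward, box_min, box_max))
        depth.append(row)
    return depth
```

Calling `shrink_wrap_map(3.0, (-2.0, -0.75, -1.0), (2.0, 0.75, 1.0))` yields a grid of distances that a displacement shader could, in principle, use to push a simple proxy shape inward toward the detailed surface. A production version would trace against the real hero geometry and store the result as a float texture.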

Cars were then randomized for color etc. The end result is extremely effective: dramatically lighter in memory requirements and faster to render. For example: 200 normal cars would render in 59:24 seconds (long distance – far away cars) vs 00:51 using the Shrink-wrap approach, and 3.8GB of PRMan memory becomes just 61MB with the Shrink-wrap ‘trick’.

The use of shrink-wraps was not ideal for models with concavities or transparency. The side mirrors of many cars were the main areas where one could see a visible difference, but in a wide crowd shot this is an acceptable limitation. Heavy displacement can also create render-time artifacts from lighting and texture filtering, but for background characters Pixar found these minor points acceptable.

But the net result is extremely effective, and yet the technique dates back to 2006, and it goes to show the flexibility of ray tracing in RenderMan.

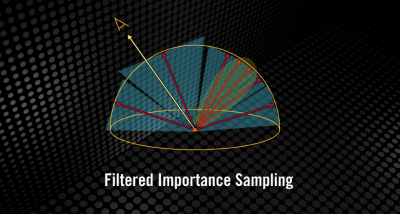

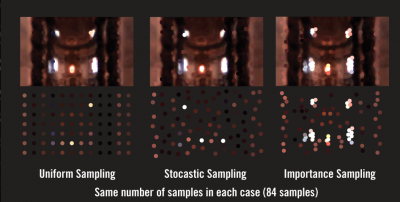

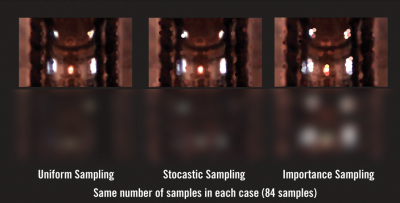

For the 2011 implementation of ray tracing inside RenderMan, the Pixar engineers use importance sampling or rather ‘multiple importance sampling’ (which samples from both surfaces and lights and combines the results in a smart way in the shader for the lowest noise and fewer artifacts, while still being very fast).

Importance sampling is part of the process that determines how many rays you cast into a scene and making sure you don’t cast more rays than you need to. This is important as ray tracing is expensive but too few rays will result in noise. The point of importance sampling is to therefore provide more rays but just where it matters. The problem is that normally using the necessary number of samples to reach the required result is too expensive and fewer samples are evaluated at the cost of visual noise within the image. Importance sampling reduces the brute force approach.
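A toy one-dimensional example may help make the idea concrete. The sketch below is not PRMan code; the integrand `f`, the sampling density `p(x) = 2x` and the helper names are assumptions chosen so the exact answer (1.0) is known. It compares uniform sampling with sampling that places more samples where the integrand is large, using the same number of samples for both.

```python
import math
import random

def f(x):
    """Stand-in integrand; it integrates to exactly 1 on [0, 1]."""
    return 3.0 * x * x

def uniform_estimate(n, rng):
    """Plain Monte Carlo: average f at uniformly random points."""
    return sum(f(rng.random()) for _ in range(n)) / n

def importance_estimate(n, rng):
    """Draw x with density p(x) = 2x (more samples where f is large) and average f(x) / p(x)."""
    total = 0.0
    for _ in range(n):
        x = math.sqrt(1.0 - rng.random())  # inverse-CDF sampling; x lies in (0, 1]
        total += f(x) / (2.0 * x)
    return total / n

def spread(estimator, n, trials, seed):
    """Standard deviation of repeated estimates: a rough measure of visible noise."""
    rng = random.Random(seed)
    values = [estimator(n, rng) for _ in range(trials)]
    mean = sum(values) / trials
    return math.sqrt(sum((v - mean) ** 2 for v in values) / trials)
```

With 16 samples per estimate, `spread(importance_estimate, 16, 2000, 1)` comes out noticeably smaller than `spread(uniform_estimate, 16, 2000, 1)`. In a renderer the same principle applies to ray directions: rays are concentrated toward bright lights or the peak of a glossy reflection rather than spread evenly.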

This all affects how shaders are written. In addition to the features already mentioned, RPS 16 has new tools in the shading language to calculate global illumination effects. “For example,” says Sisson, “if you want to create an area light, we have surface shaders that can reduce that type of calculation into as few steps as possible. In the beginning, we had shaders with an ambient component, diffuse component, and a specular component – those were the building blocks of shaders. Over time more features were added. New functions allowed shaders to calculate new effects, like ambient occlusion, but they also added cost to the shader. With RPS 16 we asked if there was a better way to consolidate the calculations shaders make? We created new calls for direct lighting and specular lighting as well, so that view-independent lighting (diffuse) can be reused by the radiance cache, while view-dependent lighting (specular) can be recalculated. This is one of the things that makes ray traced GI in 16 so fast. Over the next 3 or 4 years people are going to become a lot more familiar with the new features of RPS 16.”

Looking forward to the rest of this year, Dylan Sisson points out that RPS 17 and RMS 4.0 will be released. The current RMS 3.0 has RPS 15’s internal ray tracer. “We are lining up the releases this time so RMS 4.0 will have the internal RPS 17 renderer with RMS 4.0 – so a complete upgrade. That release will have direct support for a lot of the features that are in v16 and v17, making these new features accessible to the average user.”

Longer term, RenderMan continues to grow and develop and Pixar, like many companies, is partly tied to the overall health of the general production community. Luckily, that is improving globally. Pixar has watched the market recover from the economic upset of the GFC a few years ago, and as Sisson comments, “We are seeing a lot more productions, a lot more growth. The pulse of the industry is a lot stronger than it was and we are seeing that reflected in (our) sales as well.”

Arnold – Solid Angle

One of the most interesting and successfully expanding renderers is Arnold. A fully ray tracing solution, it has gained tremendous credibility in the last few years at the high end especially in major vfx feature films and animated features. Sony Pictures Imageworks was the catalyst for the professional adoption of the product, but its use and reputation have blossomed to places such as Digital Domain, ILM, Luma Pictures, Framestore, Digic Pictures and others.

The product is ‘hot’ and yet remarkably unavailable. If you go to the Solid Angle web site you will be greeted with just a logo (and an equation if you can find it). No sales page, no specs, no PR, no user stories – nothing. Still the program is considered by many to be massively important, and its adoption is spreading just on word of mouth, amongst high end facilities.

Arnold was started by Spaniard Marcos Fajardo while he was living in the USA. Today Fajardo is still the chief architect of Arnold at Solid Angle, and the company is based back in Europe.

Arnold history

1997

Fajardo decided at age 24 in 1997 to write his own renderer. The skeleton of what was to become Arnold was started in 1997.

According to an interview with Fajardo by Eric Haines (Ray Tracing News), during Siggraph 1997 Marcos slept on the floor at the house of some of his friends. These friends introduced him to many of their own friends; one of them was Justin Leach, who was working as an animator at Blue Sky Studios. Justin knew Fajardo was into rendering and introduced him to Carl Ludwig, co-founder and one of the original developers of Blue Sky’s renderer. By all accounts, Ludwig was great, gave Fajardo a tour, and they spent some time chatting about ray tracing. Blue Sky Studios was the first studio to use (classical, later Monte Carlo to some extent) ray tracing for all rendering. Fajardo was blown away by their images, especially one rendered by John Cars, with illumination coming from the sky. He was inspired – these images convinced him that full stochastic ray tracing was the way to go. Blue Sky’s Bunny was done with stochastic ray tracing, though the bunny itself did not have indirect lighting (too expensive at that time). It was probably the first use of unbiased Monte Carlo radiosity in film, back in 1998.

1999

A renderer is not normally an end product like a paint program is, and thus in many respects it is an API that allows programs to access it for rendering models and animations created upstream in the process. Hence it was that Arnold was initially called RenderAPI. But in 1999 Fajardo was working with Station X Studios in LA. One night he went with two friends to an Arnold Schwarzenegger film, End of Days. Again according to Haines, Fajardo’s friends “imitated the Arnold accent from the rear of the theater, cracking up the audience.” Fajardo had never realized what a distinctive voice Schwarzenegger had, since he had only seen Schwarzenegger’s films in Spain, where they’re dubbed in Spanish. “They just impersonated him.. and one of them was a stand up comedian – and pretty good,” he laughingly recalls. Andy Lesniak, one of the friends at the theater, suggested “Arnold” as a joke, and Fajardo liked it. “So I thought – what the Hell – I will call it Arnold, but I did expect to change it but I never did.” The code name was picked up by people, so the name became permanent.

2001

In 2001 the animated film 50 Percent Grey was nominated for an Oscar. This was a key moment for the product. “Yeah that was just one of my friends working by himself, Ruairi Robinson, on 3D Studio Max, back then we had a 3DMax plugin that another one of my friends had developed (Gonzalo Rueda).” Arnold was used exclusively for the rendering. It was a key illustration for the power and speed of Arnold: it showed that ray tracing could produce high quality images with a small CPU budget. It was just one guy – eventually he sent the sound to Cinesite and they did the sound professionally but basically it was just one person with one or two machines.

To render the whole film with global illumination, fully ray traced, on just a few machines was remarkable – something not lost on the film’s director, who actually invited Fajardo to attend the Oscars with him in 2001. “He wanted to thank me, so it was the first time – it was the only time – I was invited to the Oscars. So that was a lot of fun!,” Fajardo remembers.

At that time Fajardo was working for Paul Debevec at the ICT in Marina del Rey. Fajardo worked on the Parthenon project with Debevec, which was all rendered in Arnold.

2003 – 2004

In 2001 a key paper was published on sub-surface scattering. Fajardo recalls that the first paper he saw on this was one by Henrik Wann Jensen, in 2000, but it was a follow up paper in 2001 which “made it a lot more efficient by precomputing the illumination at the surface of the object and then doing the integration as a secondary process. When I read that second paper, the first time I read it I did not understand it, which is what happens with these technical papers, they are really heavy on the maths. I said ok this is kind of cool but I don’t get it, but then I re-read it a few times, and once I got it – I was attending a conference at the time and listening to some other papers – but once I got it I was so excited that I had actually understood the technique that I thought this is very cool and this is very easy so I am going to program it right away. So I opened my laptop and just implemented it into Arnold in half an hour.” The first major Arnold film to use it was Cloudy with a Chance of Meatballs, after Fajardo arrived at Sony Pictures Imageworks (SPI).

In 2004 Fajardo joined SPI at Century City. At the time his flat mate was Sebastian Sylwan, now Chief Technology Officer at Weta Digital, who then also worked for ICT; the two shared a flat opposite the old home of the ICT in Marina del Rey. Fajardo’s time at Sony was critical, and so influential that Arnold is now the only renderer that SPI uses. While there he continued to develop the software but, just as he had negotiated with ICT, he owned the rights to Arnold outside of the company.

2012

Solid Angle is now sixteen people and half of those are working on the core renderer. “I just can’t seem to stop hiring people,” says Fajardo. “Every time I do another deal with a major studio I seem to hire another engineer!”

Of the sixteen, twelve are in Madrid, while others are remote, in places like London (on site at Framestore), San Francisco and Utah. We asked why Utah? “Utah is one of the historical and classical centers for computer graphics, Ed Catmull started at Utah, there is a lot of history and a great university. And in fact Utah became a center for ray tracing research a few years ago. Nvidia acquired a small company Rayscale (2008). They were from University of Utah. There are a lot of really good students in ray tracing coming from University of Utah. There is one really good researcher Peter Shirley (Adjunct Professor, School of Computing, University of Utah). He is one of the researchers I have learnt the most from in my career. He is one of the Gods of sampling and computer graphics. This is the guy who led that group.”

It may seem like a diverse group, but talent is hard to find, says Fajardo. “You just go where the best talent is. Writing an efficient Monte Carlo ray tracer is really hard. There are only a few people in the entire world who can be really good doing this. We have to find them wherever they are.”

Arnold is now used at many companies around the world – Sony Pictures Imageworks, Framestore, Whiskytree, Luma Pictures, Digital Domain and ILM. The last one is interesting as ILM has a permanent site license of RenderMan, having been very involved from the earliest days and of course Pixar being a spin-off from Lucasfilm. ILM came to Arnold as they were exploring Katana from the Foundry, and as Katana works well with Arnold, John Knoll said he decided he wanted to try it. It was used in the car park sequence near the end of Mission Impossible 4: Ghost Protocol. “Pretty much every major studio you can think of is either using Arnold or is evaluating it,” points out Fajardo.

A few other films the renderer has been used on include Thor, Captain America, the upcoming Marvel film The Avengers, Alice In Wonderland (and almost all SPI pictures for several years), X-Men: First Class, Red Tails and Underworld: Awakening, as well as commercials such as the award winning Bear spot by Mikros Image in France. (Nearly all of these we have reported on here at fxguide.com).

Arnold does have one major client not in films, but in the related area of gaming cinematics. Digic Pictures in Hungary is one of the top three cinematics companies in the world. The company’s first Arnold project was AC: Brotherhood, in mid 2010, and most recently Mass Effect 3. Everything in the cinematic was rendered with Arnold, except some FumeFX volume renders. At FMX they pointed out that, for full HD frames, character renders took around 45 min to 3 hours (face closeups) and backgrounds took 30 min – 2 hours.

“But keep in mind that lighting was very heavily based on indirect (big square area light behind the window, the rest is almost exclusively indirect illumination) so we needed more GI sampling than usual. And only the final renders (and a few tests) were done in 1080p HD; before that the rendering and comp was in 720p.”

But with all their success, Solid Angle’s marketing program is almost non-existent. The company is working “from the top studios down” in a deliberate attempt to not grow too fast. The company likes to know all its customers and provide high level support. “It is much easier for me to sell a huge number of licenses to a large company and give them good quality support,” says Fajardo, “than it is to sell to thousands and thousands of individuals which would give me a lot more support work for me and my small company. Some day we may move from big customers to small studios but for now it is working well.”

What goes on in Arnold’s heart

At its core Arnold is a ray tracer that tries to solve as efficiently as possible ray tracing for film and media production, with as few tricks, hacks and workarounds from the end user as possible. “We are just trying to solve the radiance equation, on the fly without doing any type of pre-computation, and pre passes,” explains Fajardo. “So we just trace a lot of rays around and hope to get an accurate answer. The challenge is to design a system that is optimized so that it traces a relatively small number of rays for a given quality and also the ray tracing needs to be very fast. That’s what we do every day, we try and optimize the renderer with both mathematical processes to optimize the Monte Carlo equations and also to make the code very fast – so those two things – the speed of the rays and the number of the rays – that is what we work on every day.”

Arnold can optimize so well because it is focused on just one task: great images for movies. The Arnold team is not trying to produce a general purpose renderer that covers a wide range of uses and industrial applications. Like RenderMan, it is firmly developed with a very targeted user base in mind. There are other ray tracing products but they often seek to be used in automotive design, industrial and architectural design. Not so Arnold.

“Thanks to that efficient system you can actually use the system. For many years we have been led to believe that you would not be able to use ray tracing in production, and that is just a legacy, from when the software was not ready,” says Fajardo. Solid Angle have worked hard to make Arnold production ready so it can be used in production on a daily and exclusive basis. “It is paying off finally, it has been a lot of work but it is paying off.”

Interestingly, many of the earliest problems with ray tracing – sampling issues, mathematical approaches – have been known for some time, and the basic equations were laid out by Pixar in the 80s in a seminal paper called Distributed Ray Tracing (Cook, Porter, Carpenter, Siggraph 1984).

“Ray tracing is one of the most elegant techniques in computer graphics. Many phenomena that are difficult or impossible with other techniques are simple with ray tracing, including shadows, reflections, and refracted light. Ray directions, however, have been determined precisely, and this has limited the capabilities of ray tracing. By distributing the directions of the rays according to the analytic function they sample, ray tracing can incorporate fuzzy phenomena. This provides correct and easy solutions to some previously unsolved or partially solved problems, including motion blur, depth of field, penumbras, translucency, and fuzzy reflections.”

– Excerpt from the Abstract of Distributed Ray Tracing, 1984.

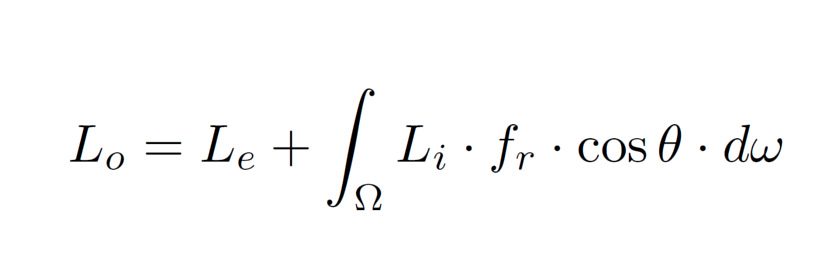

The initial equations published in this paper are indeed the starting point for Arnold, although, says Fajardo, “they were later refined by Jim Kajiya in 1986 and that’s the equation – that paper The Rendering Equation by Jim Kajiya that laid out the full set of equations today we call the radiance equation or for short the rendering equation. That is what we are trying to solve and that encompasses everything you see in our rendered image: anti-aliasing, soft shadows, global illumination, motion blur, glossy reflections – everything, all of that – it is all in that equation.”

The name of Fajardo’s company can even be found in this equation. If you look up the definition of the word radiance, it is loosely described as the “amount of light that is emitted from a particular area, and falls within a given solid angle in a specified direction.”

The rendering equation itself is defined as an integral equation in which the radiance at a point is given as the sum of emitted plus reflected radiance under an optical approximation.

It was simultaneously introduced into computer graphics by David Immel et al. and James Kajiya in 1986.
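In one commonly used notation (the symbols below are a conventional textbook choice and an assumption here, not taken from the article's sources), the equation can be written as:

$$
L_o(\mathbf{x}, \omega_o) \;=\; L_e(\mathbf{x}, \omega_o) \;+\; \int_{\Omega} f_r(\mathbf{x}, \omega_i, \omega_o)\, L_i(\mathbf{x}, \omega_i)\, (\omega_i \cdot \mathbf{n})\, \mathrm{d}\omega_i
$$

Here $L_o$ is the radiance leaving point $\mathbf{x}$ in direction $\omega_o$, $L_e$ is the radiance the surface emits itself, $f_r$ is the surface's reflectance function (BRDF), $L_i$ is the radiance arriving from direction $\omega_i$, $\mathbf{n}$ is the surface normal, and the integral runs over the hemisphere $\Omega$ of incoming directions, each a small solid angle $\mathrm{d}\omega_i$. The first term is the emitted radiance and the integral is the reflected radiance, matching the description above.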

If you click on the Solid Angle logo on their minimalist web site – this is the equation that appears.

But it is one thing to have an equation that has been freely published for twenty years and it is another to make a viable and growing product in the incredibly competitive world of visual effects.

Clearly Arnold is a very good implementation of this equation.

We asked Fajardo about their implementation: “At the bottom of it (Arnold) is just a Monte Carlo integration, which is just that ‘we have a complex problem to solve – how do we solve it?’ The answer is we just throw a dice many times and by evaluating this function at all these random positions and average together all these evaluations we come up with an answer that converges to the right answer, with more and more samples. That is the basic process and that will give you better anti-aliasing, better soft shadows etc for everything. That is the basic way to describe it. It is more complicated than that in practice but that is how it works.”
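The "throw a dice many times and average" process can be illustrated with a very small Python sketch. It is not Arnold code: the scene (a disc of radius 1 centred on one corner of a unit pixel) and the names `inside_shape` and `pixel_coverage` are assumptions picked so that the exact answer, π/4, is known. It estimates how much of a pixel a shape covers, which is the anti-aliasing case Fajardo mentions.

```python
import math
import random

def inside_shape(x, y):
    """Hypothetical scene: a disc of radius 1 centred on the pixel's corner at (0, 0)."""
    return x * x + y * y <= 1.0

def pixel_coverage(samples, rng):
    """Average many random sub-pixel samples; the mean converges to the true coverage."""
    hits = sum(inside_shape(rng.random(), rng.random()) for _ in range(samples))
    return hits / samples

def coverage_errors(sample_counts, seed=7):
    """Absolute error against the exact coverage (pi / 4) for each sample count."""
    rng = random.Random(seed)
    exact = math.pi / 4.0
    return [(n, abs(pixel_coverage(n, rng) - exact)) for n in sample_counts]
```

Running `coverage_errors([4, 64, 1024, 16384])` shows the error shrinking as the sample count grows, typically in proportion to one over the square root of the number of samples. That slow convergence is why both the cost per ray and the number of rays matter so much in a production renderer.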

As many computer graphics graduates know, it is not impossible to write one’s own ray tracer and many people at college do just that, but the difference with Arnold is that a basic ray tracer will be slow and unworkable. Arnold is not – it is fast and finely tuned for quality and speed simultaneously. “Writing a single path tracing renderer is not hard, but it is way different from writing a production renderer with thousands of features!,” jokes Fajardo.

Solid Angle does this by using not only their own team of in-house programmers but also the open source community when appropriate. An example is in texture anti-aliasing – a common problem for students writing their own ray tracer. A regular pattern of, say, one point-sampled ray per pixel will naturally produce aliasing and moire patterns. This is a problem Fajardo fixed very early in Arnold’s development. “When it comes down to texture filtering,” he says, “we can compute what we call a texture convolution and use a mipmap to pre-filter the texture so even one sample per pixel you would still get anti-aliasing texture maps. That is a very common practice – and everyone in the industry does that – and so do we, we use an open source library led by Larry Gritz called OpenImageIO.”
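As a rough illustration of what pre-filtering with a mipmap means, the sketch below builds a pyramid of progressively averaged copies of a texture and looks up the copy whose texel size matches the area a ray covers. It is a simplified stand-in, not OpenImageIO's API or Arnold's filtering: the function names, the 2x2 box filter, the nearest-texel lookup and the `footprint_texels` parameter are all assumptions for the example.

```python
import math

def build_mipmaps(texture):
    """Return [level0, level1, ...], averaging 2x2 blocks until a single texel remains.

    Assumes a square, power-of-two, single-channel texture stored as a list of rows.
    """
    levels = [texture]
    while len(levels[-1]) > 1:
        src = levels[-1]
        half = len(src) // 2
        levels.append([
            [(src[2 * y][2 * x] + src[2 * y][2 * x + 1]
              + src[2 * y + 1][2 * x] + src[2 * y + 1][2 * x + 1]) / 4.0
             for x in range(half)]
            for y in range(half)
        ])
    return levels

def sample(levels, u, v, footprint_texels):
    """Nearest-texel lookup in the level whose texel size best matches the ray footprint."""
    level = round(math.log2(max(footprint_texels, 1.0)))
    level = min(len(levels) - 1, max(0, level))
    tex = levels[level]
    size = len(tex)
    x = min(size - 1, int(u * size))
    y = min(size - 1, int(v * size))
    return tex[y][x]

# A one-texel checkerboard: the worst case for point sampling.
checker = [[float((x + y) % 2) for x in range(8)] for y in range(8)]
mips = build_mipmaps(checker)
```

Point sampling the finest level, `sample(mips, 0.1, 0.1, 1.0)`, returns pure black or white depending on exactly where the sample lands, which is the source of moire. With a footprint of two texels, `sample(mips, 0.1, 0.1, 2.0)` returns the pre-averaged grey value instead. Production texture systems go further, blending between levels and filtering anisotropically.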

Project OpenImageIO started as ImageIO – an API that was part of Gelato, the renderer software developed by Nvidia. Work started in 2002, and in 2007, when project Gelato was stopped, the development of ImageIO also ceased. Following this Larry Gritz started OpenImageIO.

“We incorporated OpenImageIO into Arnold and we rely on it to compute these very accurate and beautiful texture filtering that are aliasing free,” says Fajardo. “Not only do you get anti-aliasing textures but you can get a lot of textures into memory. OpenImageIO has a texture cache – and with a cache you can allocate say a 100 megabytes of memory just for texture maps and as you load more and more textures. And so with a limited amount of memory you can render huge scenes with thousands and thousands of textures – gigabytes of textures – so with regards to textures we are very happy with how we implemented this solution.”
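The fixed-budget cache Fajardo describes can be sketched as a least-recently-used tile cache. This is only a conceptual model and does not reflect OpenImageIO's actual interface or eviction policy: the class name `TileCache`, the `load_tile` callback and the byte-string tiles are assumptions for the example.

```python
from collections import OrderedDict

class TileCache:
    """Keep recently used texture tiles in memory, up to a fixed byte budget."""

    def __init__(self, budget_bytes, load_tile):
        self.budget_bytes = budget_bytes
        self.load_tile = load_tile      # hypothetical callback: key -> bytes read from disk
        self.tiles = OrderedDict()      # ordered from least to most recently used
        self.used_bytes = 0
        self.misses = 0

    def get(self, key):
        if key in self.tiles:
            self.tiles.move_to_end(key)  # mark as most recently used
            return self.tiles[key]
        self.misses += 1
        data = self.load_tile(key)
        self.tiles[key] = data
        self.used_bytes += len(data)
        # Evict the least recently used tiles until the cache fits the budget again.
        while self.used_bytes > self.budget_bytes and len(self.tiles) > 1:
            _, evicted = self.tiles.popitem(last=False)
            self.used_bytes -= len(evicted)
        return data
```

With a 256-byte budget and 64-byte tiles, for instance, only four tiles stay resident no matter how many distinct tiles the render touches; older tiles are dropped and reloaded on demand. This is the trade that lets gigabytes of textures be rendered through a cache of around a hundred megabytes, at the cost of some repeated disk reads.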

This was in 2009 in Los Angeles. “We did this integration while I was at Sony Imageworks and the first show we used the OpenImageIO filtering was Cloudy with a Chance of Meatballs (September 2009). OpenImageIO played an important role because it let us load a lot more textures than before.” Fajardo was at Sony Pictures Imageworks as an employee but one with very special arrangements. Most of the development of Arnold was done with Fajardo negotiating with companies that he would work on their projects but be allowed to develop Arnold and, critically, keep the rights to the source code.

Interestingly, not only is SPI a fully Arnold facility and has been now for some time, but they have a concurrent version of Arnold. Under the deal negotiated SPI branched the source code. SPI has a copy and continues development of their version of Arnold and Fajardo owns his version and continues to develop and sell the software. This may sound like a divorce but if it is, it certainly is one where the children are not suffering. So strong is the relationship between Solid Angle and SPI that ideas and new developments continue to be swapped between both camps. “So if I come out with something that makes Arnold ten times faster Sony can take that and use it,” says Fajardo, “and in fact they have done that with different techniques. And likewise if they come out with something new I can incorporate it into my branch.” Rob Bredow, SPI CTO, negotiated this deal for SPI. “In fact it was his idea!” compliments Fajardo, who clearly respects Bredow. “I left Sony not a rich man but I left with my own technology, and with that technology I started my own company.”

The Solid Angle branch uses C++ shaders. “The C++ library is a solid tool used in production for many years, currently at version 4.0. The SPI branch used C shaders for years, then both C and OSL for a while, and after the current set of shows is finished they’ll be OSL only,” Fajardo recently said.

The bulk of development and support is carried out by dedicated engineers in the Solid Angle team. Giving key customers access to some of the source code, such as that of the MtoA and SItoA plugins, may seem risky to some, but not so says Fajardo. “Making some source available to customers, and accepting contributions, is not the same as letting them write the software for you.”

Arnold is not the only ray tracer on the market – RenderMan is a hybrid scanline and ray tracer and Maxwell is a pure ray tracer with very little in the way of optimizations and customizations. Fajardo believes that Arnold sits between these two other professional packages. “If you think about a line – then at one end you have RenderMan which I describe as being great at painting pixels and at the other end of the line you have something like Maxwell – which is a very pedantic physically based renderer that does not let you ‘paint with a brush’ – it just lets you simulate light. Arnold somehow sits somewhere in the middle – what we have is a system that is something that is pretty much physically based – it lets you simulate but it also allows a lot of artistic control to change things.” But perhaps the greatest difference to users is the speed of the ray tracing – there is no doubt that Arnold is fast. “You can fire a lot more rays for a given CPU budget – and for that reason you can use it for animation. My goal when designing Arnold was to make a renderer we could use for doing animation – physically based rendering for animation. That is not the goal of Maxwell, say. With RenderMan of course you use it for animation but then it is not a physically based system – it is more like a paint brush.”

Looking to the future, Fajardo feels that while there has been a lot done, there is “still a lot of room for optimization. You can optimize for a single image or you can optimize for animation, such as motion blur, but to give you an example one of the only limitations of Arnold is that it does not really do caustics very well. We haven’t bothered to spend our resources optimizing on caustics. The reason is that the effect is very difficult to render – you need a lot of time and many rays – but honestly for film and animation production nobody needs it. Well, I mean to say, in my 15 years in production there has not been a single film that has requested accurate caustics – actually I think I do remember one! On Watchmen we used Arnold to render the glass palace. They did investigate it, but it turned out that as it is glass over glass it was very complex. But in the end they did not use it, so that is one example of something other renderers have a good approximation for already, and that I would like to do more research on and explore one day. But other than things like that, it is mainly more optimization across the board.”

Fajardo says this optimization will cover more efficient motion blur, more efficient storage of polygons and hair – so you can render twice the amount of hair or twice the number of polygons – and more efficient instancing, so you can use millions and millions of replications of an object for populating an environment. “We are continuously looking for improvements,” he says. “It is never ending – there is always something the users want – it just never ends!”

Case Study: Framestore and Maya with Arnold

Today, Arnold is being used on productions around the world; one of the largest in production right now is Gravity. This is rumored to be the largest Arnold film outside Sony Pictures, but as it is not out for some time, it is too early to discuss. Framestore actually used RenderMan and Arnold on Wrath of the Titans.

Framestore had two major sequences for Wrath:

• The Cyclops – mainly rendered with a pre-release version of RenderMan 16

• The Labyrinth – mainly rendered with Arnold

“The initial intent was for Wrath to be an entirely PRman show,” commented Framestore’s Martin Preston. “We already had a lot of experience in using PRman for creature work, and so even though Wrath were switching their shading and lighting approach over to a more physically plausible scheme, they felt that PRman would be the easiest fit.”

However, as the scope and complexity of the Labyrinth work became clearer to the team, Framestore believed that PRman would struggle with the quantity of geometry that they needed for the environments, using the lighting methodology they had chosen for the film. “Fortunately we’d added support for Arnold to our pipeline, for another production, so Wrath were able to quickly evaluate how well that could cope with their work, and so rather than rejigging how they’d expected to build the labyrinth they could switch renderers comparatively painlessly. It was quite a late decision!” explained Preston.

Framestore’s own press release on Wrath states that “Arnold chews through geometry.” We asked Preston if this was because of Arnold’s object instancing (in RenderMan 16 there is no ray-traced object instancing).

“Yes, that’s right,” he said. “The labyrinth, which is essentially a large quantity of instances of (comparatively) small amounts of unique geometry (all ray-traced with rather extreme atmospheric scattering) was a perfect fit for Arnold. That said in this show, and others, we are finding that Arnold is perfectly capable of ray-tracing against surprisingly large quantities of non-instanced geometry.”
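To make the memory argument behind instancing concrete, here is a minimal Python sketch. It is not Arnold's data layout: the `Mesh` and `Instance` classes, the 36 bytes per triangle and the 64-byte transform are all illustrative assumptions. It only shows why many instances of a small amount of unique geometry cost far less than storing every copy.

```python
from dataclasses import dataclass

# Assumption: a 4x4 float32 transform per instance (16 floats x 4 bytes).
TRANSFORM_BYTES = 16 * 4


@dataclass(frozen=True)
class Mesh:
    """Unique geometry. The byte cost per triangle is an illustrative guess."""
    name: str
    triangle_count: int
    bytes_per_triangle: int = 36  # assumption: 3 vertices x 3 floats x 4 bytes

    def memory_bytes(self) -> int:
        return self.triangle_count * self.bytes_per_triangle


@dataclass(frozen=True)
class Instance:
    """A placement of a mesh: a shared reference plus a transform, not a copy."""
    mesh: Mesh
    transform: tuple


def instanced_memory(instances) -> int:
    """Each unique mesh is stored once; every instance adds only a transform."""
    unique_meshes = {inst.mesh for inst in instances}
    geometry = sum(mesh.memory_bytes() for mesh in unique_meshes)
    return geometry + len(instances) * TRANSFORM_BYTES


def flattened_memory(instances) -> int:
    """Without instancing, every placement carries a full copy of the geometry."""
    return sum(inst.mesh.memory_bytes() for inst in instances)


IDENTITY = (1.0, 0.0, 0.0, 0.0,
            0.0, 1.0, 0.0, 0.0,
            0.0, 0.0, 1.0, 0.0,
            0.0, 0.0, 0.0, 1.0)

wall = Mesh("wall_block", triangle_count=10_000)   # hypothetical asset
labyrinth = [Instance(wall, IDENTITY) for _ in range(1_000)]

print(instanced_memory(labyrinth), flattened_memory(labyrinth))
```

Under these assumed sizes, a thousand placements of one 10,000-triangle block cost well under a megabyte when instanced, against hundreds of megabytes when every copy is stored in full.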

Plausible lighting has become an important part of the Framestore pipeline. The company believes that from a technology point of view there are two ways a studio like Framestore can improve the efficiency of lighters. “Either by making their technology fast (through techniques such as progressive rendering) so that they can turn around lighting iterations more quickly, or secondly by making the lighting technology more intuitive, so they need fewer iterations,” explains Preston.

The switch to plausible lighting is the core of the second of these approaches. By having lighting and shading behave in a predictable way it is easier “for lighters to predict the results they’re going to get, and so avoid unexpected surprises!”

One area that is of real interest is the Maya to Arnold and Softimage to Arnold utilities. Solid Angle has been developing these but they are still in alpha and beta. The specific tools are known as MtoA and SItoA respectively. The community has known about their late stage development for some time and many people are keen to see them released. Framestore did not however go this route on Wrath of the Titans.

“When we adopted Arnold we wanted to make it relatively simple, from the point of view of our artists, to switch renderers. As such we’ve gone to some lengths to add Arnold support to our existing technology, rather than develop an entirely separate Arnold-specific pipeline around a tool like MtoA. So while we looked at MtoA we didn’t want to integrate Arnold in this way,” said Preston.

Wrath is a good example of why Framestore did this: using the tools developed in house, the film was able to switch a sequence to a new renderer without needing to change how their team or their pipeline worked. “Our lighting pipeline is built around our proprietary PRman lighting tool, fRibGen. That already allowed developers to supply plugins to the tool (for things like integrating procedural geometry, new types of passes and so on) so to adopt Arnold the first thing we needed to do was to add a plugin (fArnoldGen) which knew how to generate and control Arnold renders.”

fRibGen works by allowing look-developers to build palettes, containing both shaders and the logic used to attach shaders to geometry, which can then be used during the export or rendering process to match whatever the current assets are to the ‘look’ defined by the palettes. “Our Maya scenes typically consist of lightweight proxies of assets, for things like geometry, fur, crowds, volumes, particles and in Wrath’s case instances. Then at rendertime procedurals will use the logic held in palettes to continue to perform shader or data assignment (which allows lighters or look-developers to influence the appearance of assets which aren’t present in their Maya scene).”
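fRibGen is proprietary and its palette format is not public, so the following Python sketch is purely hypothetical. It only illustrates the general idea described above: a palette holds shaders plus the rules that attach them to geometry, and those rules can be evaluated at render time against assets that were never loaded into the Maya scene. The rule syntax, asset paths and shader names are all invented for the example.

```python
from fnmatch import fnmatchcase

# Hypothetical palette: ordered (path pattern, shader name) rules.
# The first matching rule wins, so specific rules go before general ones.
PALETTE = [
    ("/labyrinth/walls/*", "stone_wet"),
    ("/labyrinth/*", "stone_dry"),
    ("*", "default_grey"),
]


def assign_shader(asset_path, palette):
    """Return the shader of the first rule whose pattern matches the path."""
    for pattern, shader in palette:
        if fnmatchcase(asset_path, pattern):
            return shader
    raise LookupError(f"no palette rule matches {asset_path!r}")


def resolve_look(asset_paths, palette):
    """Apply the palette to whatever assets exist at export or render time."""
    return {path: assign_shader(path, palette) for path in asset_paths}


# Paths a render-time procedural might generate (invented for illustration).
generated = [
    "/labyrinth/walls/block_0042",
    "/labyrinth/floor/tile_7",
    "/creatures/cyclops",
]
for path, shader in resolve_look(generated, PALETTE).items():
    print(path, "->", shader)
```

Because the look is expressed as rules rather than per-object assignments, the same palette could in principle drive either renderer, which is the property the Framestore pipeline relies on.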

As a result Framestore also needed to write Arnold equivalents of all of their PRman procedurals. “The result of all this work is that lighters see a lightweight version of their shot, which they can choose to render in either PRman or Arnold using the same pipeline,” explains Preston.

MtoA, SItoA

Some companies however are using the alpha and beta tools. “The SItoA and MtoA plugins are still in private beta,” explains Solid Angle’s Fajardo, “but our betas are pretty solid. Writing solid plugins for complex 3D apps like Maya and Softimage is really, really hard and takes a long time. We are a bit obsessed with quality and won’t make an official release until the plugins are polished to perfection. SItoA has been in development for longer, currently at version 2.1.1, while MtoA is still at version 0.15.0. We follow a strict versioning procedure that lets our testers use the software in production with sufficient guarantees that new changes don’t break existing scenes, we issue frequent bugfix releases etc. So in practice, many of our testers have been using our plugins in production for a year or two.”

Luma Pictures used MtoA in X-Men: First Class, Captain America, Underworld: Awakening and others in production. ObliqueFX used SItoA in Source Code. And countless studios have used both SItoA and MtoA for commercials (such as Mikros Image, a Maya based studio in Paris and winners of the two VES commercials awards this year and one of them last year).

Case Study: Whiskytree & Softimage (SItoA)

One company using SItoA is Whiskytree, which has used it for several small sequences on some very big-budget projects. “Whiskytree has utilized SItoA on feature film projects including TRON: Legacy, Thor, Captain America: The First Avenger, The Hunger Games, and most recently on Marvel’s The Avengers,” comments Votch Levi, Computer Graphics Supervisor, Whiskytree.

“Thor was our first project to use SItoA and Arnold. We believed that the level of geometric density and shading requirements on Thor would be difficult to achieve using Softimage’s built in renderer. SItoA proved very successful through the project and we have started every subsequent project based on a SItoA render pipeline.”

Levi believes SItoA is currently very stable and dependable. “Arnold is able to handle very dense scenes well beyond what Softimage is able to represent in the viewport. We have built our asset publishing system, ‘Distill’, around the Arnold asset types. This allows us to abstract asset complexity from Softimage and layout/render very complex scenes. Our typical rendered scenes contain over 140 million triangles across thousands of objects, though we have pushed over 1 billion polygons within 24 GB of RAM. Previously we would break scenes up into layered passes to manage scene complexity but now with Arnold and SItoA we render all passes at once.”

We asked Levi how he found developing shots for Arnold to render.

“Arnold and SItoA have completely changed the way we develop and light shots. For many years lighting was a very technical art requiring management of baked assets and multiple illumination models to achieve certain looks. Before Arnold it was very rare to use features like displacement, motion blur, IBL, glossy reflections, and multi-bounce diffuse samples on shots. Now when putting shots together we don’t have to consider if the render will fail because we’ve enabled motion blur. It’s reasonable to use all these effects concurrently without repercussions. After years of battling motion blur and displacement issues it’s very liberating to turn these features on at the beginning of a project and not have to worry if it’s going to increase render times or cause frames to fail. However, working with Arnold is not all dandelions and pony rides: sample noise is a challenge and can require very long render times to remedy. Arnold is a brute force raytracer that lacks the ability to apply selective levels of sampling across specific shaders, and all pixels are sampled uniformly based on the shading feature requirements. This is a good thing because it simplifies render settings, but it also means some areas of the frame may be oversampled to reduce sample noise across the whole frame. It took us some time to adapt to the difference in Arnold’s philosophy from other raytracers but, once we got accustomed to its approach, render optimization was quick and easy to understand.”
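Why sample noise “can require very long render times to remedy” follows from a general property of Monte Carlo estimation: the noise in an averaged estimate falls roughly as one over the square root of the sample count, so halving the noise takes about four times the samples. The Python sketch below is a toy model, not Arnold's sampler. It treats one pixel as a point that sees a light with an assumed probability of 0.5 per sample, and measures the spread of the estimate at two sample counts.

```python
import random
import statistics


def render_pixel(samples, rng, visibility=0.5):
    """Toy pixel: each sample either sees the light (1) or is occluded (0)."""
    hits = sum(1 for _ in range(samples) if rng.random() < visibility)
    return hits / samples


def pixel_noise(samples, trials=2000, seed=1):
    """Standard deviation of the pixel estimate over many independent renders."""
    rng = random.Random(seed)
    estimates = [render_pixel(samples, rng) for _ in range(trials)]
    return statistics.pstdev(estimates)


noise_16 = pixel_noise(16)
noise_64 = pixel_noise(64)
# Quadrupling the samples should roughly halve the noise.
print(f"16 samples: {noise_16:.4f}  64 samples: {noise_64:.4f}  "
      f"ratio: {noise_16 / noise_64:.2f}")
```

With uniform sampling, the sample count has to be set for the noisiest part of the frame, which is why cleaner areas can end up oversampled, as Levi describes.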

Whiskytree is known for environment creation and matte paintings. Traditionally the matte painting workflow involved creating paintings in Photoshop and projecting the artwork onto cards or articulate geometry in a 3D environment to simulate parallax within a complex camera move. Because of the speed and efficiency of Arnold the company now tends to create more geometry in CG with physically accurate shading and rely less on paint and projections. “This gives us more freedom to create dynamic camera moves and quickly iterate through shot production,” says Levi. “SItoA and Arnold are an excellent complement to the matte painting toolkit.”

Individual sales

“Every day,” says Fajardo, “we get individual artists across the world emailing us and saying ‘please can we test Arnold’ and it is tough, we’d love everyone to have access to the software, but at the same time it is hard for us to support crowds and hundreds or thousands of people. Until we grow the support structure in the company, we won’t have it online to download, but we are working on it, I am hoping this year we will have some good news on our web site.”

But Fajardo is at pains to point out that “if you are a studio you can just email the company and discuss company level licenses”.

Mental ray – NVIDIA

Mental ray is a production renderer developed by NVIDIA. As the name implies, it uses ray tracing to generate images. It is licensed to Autodesk and is therefore an option in programs such as Maya and 3ds Max. Autodesk is NVIDIA’s biggest customer for mental ray, and mental ray is one of the most common renderers in the world. NVIDIA also has other rendering solutions, including GPU solutions – see below.

Barton Gawboy, ARC Training and Special Projects, NVIDIA says: “I like to think that the time has finally come in terms of the advancement of technology that we can take advantage of what we would call brute force approaches. Light is a very simple thing: it travels in one direction and does not change unless it bumps off something, and it can bump off a lot of things. Now to make real light simulation you have to do a lot of work to model all these simple bounces. When rendering first started a lot of it was approximations, tricks, to simulate light and not do much indirect lighting, but as processors got faster and there is more memory we can get closer to the actual simulation of light, which is really just an unbounded infinite ray tracing thing going on all around us.”

A key feature of mental ray is the achievement of high performance through parallelism on both multiprocessor machines and across render farms. Central to using mental ray is understanding final gathering. Final Gather is a technique for estimating GI. Users can achieve GI in mental ray by using photon tracing or a combination of this and final gathering, but Final Gather is recommended by Autodesk as a simpler approach.

When Final Gather is enabled, objects effectively become a source of indirect light, mimicking the natural world in which objects influence the color of their surroundings. When one Final Gather ray strikes an object, a series of secondary rays are diverted at random angles around it to calculate the light energy contribution from the surrounding objects. The light energy is then evaluated during the ray tracing process to add the effect of the bounced light.
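The gathering step can be sketched in a few lines of Python. This is not mental ray's implementation: it handles a single color channel, works in a local frame where the surface normal is the z axis, and uses a hypothetical `incoming_radiance(direction)` callback in place of tracing a secondary ray into the scene. The cosine-weighted sampling pattern is also an assumption chosen for simplicity.

```python
import math
import random


def cosine_sample_hemisphere(rng):
    """Random direction above the surface, favouring directions near the normal."""
    u1, u2 = rng.random(), rng.random()
    r = math.sqrt(u1)
    phi = 2.0 * math.pi * u2
    return (r * math.cos(phi), r * math.sin(phi), math.sqrt(1.0 - u1))


def final_gather_irradiance(incoming_radiance, rays=256, seed=0):
    """Estimate indirect light at a point by averaging many secondary rays.

    `incoming_radiance` is a hypothetical callback returning the light that
    arrives from a given direction, i.e. what a traced secondary ray would see.
    With cosine-weighted sampling, irradiance is pi times the mean radiance.
    """
    rng = random.Random(seed)
    total = sum(incoming_radiance(cosine_sample_hemisphere(rng))
                for _ in range(rays))
    return math.pi * total / rays


# A uniformly lit environment, and one where only the +x half is lit,
# for example by a brightly colored wall on one side of the point.
uniform = final_gather_irradiance(lambda d: 1.0)
one_sided = final_gather_irradiance(lambda d: 1.0 if d[0] > 0 else 0.0, rays=4000)
print(uniform, one_sided)
```

The number of secondary rays per gather point is the quality control here: more rays give a smoother estimate of the bounced light at a proportionally higher cost.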

When rendering animated sequences, it becomes necessary to bake the Final Gather Map (FGM). If a unique FGM is computed and kept for each image in a sequence, each frame’s output is calculated from a different FGM. When the sequence is played back, the per-frame FGM fingerprint shows up as noise.

In versions prior to, say, Autodesk 3ds Max 2010, animation flickering was caused by two main factors:

- When the Final Gather points are not “locked” on the geometry, they “slide” along the surfaces as the camera moves.

- Moving objects create Final Gather points that are “floating” or “ghosting” in the 3D space, introducing rendering glitches.

After 2010, GI animation flickering is substantially eliminated by two main techniques:

- To handle camera movements, Final Gather points are shot from several locations along the camera path. Final Gather points become “locked” on the geometry, thus removing the “sliding” effect of those points across frames.

- To handle moving objects, one Final Gather file per frame is processed in a first pass. When the beauty pass is rendered, Final Gather points are interpolated across multiple frames to “smooth out” the solution.
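The second technique, interpolating Final Gather results across frames, can be illustrated with a small Python sketch. The source does not say which filter is used, so the plain moving average below is an assumption, as is the idea of reducing each frame's solution to one value at a single locked point.

```python
def smooth_across_frames(per_frame_values, radius=2):
    """Average each frame's value with its neighbours within `radius` frames.

    `per_frame_values` stands for the Final Gather result at one locked point,
    one entry per frame. The box filter is an illustrative assumption; the
    window simply shrinks at the start and end of the sequence.
    """
    count = len(per_frame_values)
    smoothed = []
    for frame in range(count):
        start = max(0, frame - radius)
        end = min(count, frame + radius + 1)
        window = per_frame_values[start:end]
        smoothed.append(sum(window) / len(window))
    return smoothed


# A deliberately flickering solution: the estimate alternates frame to frame.
flickering = [1.0, 0.0, 1.0, 0.0, 1.0]
steadier = smooth_across_frames(flickering, radius=1)
print(steadier)
```

The smoothed sequence varies far less from frame to frame than the input, which is the flicker reduction the two-pass approach is after. The trade-off is that genuine rapid changes in indirect light are softened as well.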

V-Ray – Chaos Group