There has been enormous growth in VR and investment in the companies involved, especially those doing production. This year has seen the introduction of production headset units and even greater advances in VR camera tech. Here is our insider view of the sector’s growth.

Cameras

There is little doubt that the next 12 months will see an explosion in integrated cameras that solve many of the issues seen in simpler rigs for live action 360 capture. The current go-to configuration for live mono VR capture is a six-camera GoPro rig, but this has many limitations.

First, the cameras are not gen-locked, so any camera can be up to half a frame out of sync with any other. Second, each camera has to be switched on and off individually, which leaves the rig open to user error. Who hasn’t lost a take by failing to switch a camera on correctly, by missing that one has the wrong settings or, worse, by having one switch off mid-shot? Finally, the stitching has large seams which are not perfect matches and, given the nature of the process, the results cannot be judged until the images are stitched in post.
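To put the sync problem in numbers, here is a minimal sketch. It assumes free-running cameras that share the same nominal frame rate with an arbitrary phase, so the nearest frames from two cameras are at most half a frame period apart. The function names, the frame rates and the walking speed are illustrative only and do not come from any camera SDK.

```python
def max_sync_offset_ms(fps: float) -> float:
    """Worst-case timing offset between two free-running cameras, in ms.

    Assumption: both cameras run at the same nominal frame rate with an
    arbitrary phase, so the closest frames are at most half a period apart.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    frame_period_ms = 1000.0 / fps
    return frame_period_ms / 2.0


def subject_travel_cm(speed_m_per_s: float, fps: float) -> float:
    """Distance a moving subject covers during the worst-case offset, in cm."""
    offset_s = max_sync_offset_ms(fps) / 1000.0
    return speed_m_per_s * offset_s * 100.0


if __name__ == "__main__":
    for fps in (30, 60):
        print(f"{fps} fps: up to {max_sync_offset_ms(fps):.1f} ms out of sync")
    # Hypothetical subject walking at 1.5 m/s across a stitch seam at 30 fps
    print(f"subject travel at the seam: {subject_travel_cm(1.5, 30):.1f} cm")
```

Even a couple of centimetres of disagreement between neighbouring cameras is enough to make a moving subject tear at a seam, which is why gen-lock matters more for 360 rigs than the raw millisecond figure suggests.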

Integrated cameras will go a long way to addressing these issues and are not expected to be extremely expensive. While mono 360 capture with simple audio is not at the cutting edge of VR, it dominates the content that is currently being made, and integrated cameras will only increase this entry-level segment’s production volume.

Google’s Daydream

Google has long been associated with the push to take VR to the masses (if any VR player can be said to have long been doing anything), with Google Cardboard and the YouTube 360 VR program. This is now being expanded with Google’s Daydream, a new VR platform for Android devices that should provide easier access to virtual reality content.

This will take the form of:

- An optimal specification list for smartphone manufacturers, whose phones can then earn a Daydream-ready label.

- A reference design for a Daydream headset.

- A hub for VR content. Daydream Home will be a one-stop place where you can download or run virtual reality software/programming while wearing the headset itself.

The list of manufacturers that have so far committed to releasing Daydream-ready phones includes Samsung, HTC, LG, Xiaomi, Huawei, ZTE, Asus and Alcatel. Google’s next Nexus devices will also be compatible.

But the real interest is what happens beyond this. Where is this all going?

People have argued, and I am one of them, that the end game is not VR. It is AR or MR, as in Augmented Reality or Mixed Reality. The term AR is used for overlaying graphics on a camera feed, so you hold up your iPhone and see graphics on top of the camera’s live feed. Key to the concept is that the graphics are tracked to the camera’s real world video feed, which allows you to swing the phone around and see the graphics ‘attached’ to the world. This is a start, but the term MR is preferred by industry professionals as it refers to the Hololens or Magic Leap headset approach.

The first MR devices are now in the wild. Key customers and sites now have the Hololens and are adding content with the API. For example, one museum is loading 3D models of very small insects which you can now walk around as ‘person’ size floating objects that can be rotated and viewed from any angle. Magic Leap has also released some actual clips filmed with their prototype.

Central to this world is the ability not only to track images to the world, but to do so directly on your eye rather than on a video feed. To do this, a few things have to be done, and rendered very quickly:

- The image needs to be in stereo for depth perception

- It needs to be focused at the right point in space. If you look at your desk and MR has placed something on it, that object needs to be in focus at the same optical focus plane as the desk.

- It has to have an opacity map so that the image does not look ghostly or faint

- It needs to be refreshed fast enough to cope with rapid eye movement

- Ideally it should be cut off by closer objects, so if it is behind your desk in 3D space, the edge of the desk cuts it off and it appears obscured by the desk.
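The opacity and occlusion requirements in the list above can be illustrated per pixel. This is a minimal sketch, assuming the headset already has a depth estimate for the real world and that each virtual pixel carries a colour, an opacity (alpha) and a depth. The function name and parameters are hypothetical. It models a simple video-style composite rather than how an optical see-through display physically mixes light.

```python
from typing import Tuple

Color = Tuple[float, float, float]  # RGB, each channel in the range 0..1


def composite_pixel(real: Color, real_depth: float,
                    virtual: Color, virtual_alpha: float,
                    virtual_depth: float) -> Color:
    """Blend one virtual pixel over one real-world pixel.

    Occlusion: if the real surface is closer than the virtual object,
    the virtual pixel is hidden and the real colour is returned unchanged.
    Opacity: otherwise the virtual colour is blended over the real colour
    using its alpha, so alpha = 1.0 gives a solid, non-ghostly result.
    """
    if real_depth < virtual_depth:
        return real
    alpha = min(max(virtual_alpha, 0.0), 1.0)  # clamp to a valid opacity
    return tuple(alpha * v + (1.0 - alpha) * r
                 for v, r in zip(virtual, real))


if __name__ == "__main__":
    desk = (0.2, 0.2, 0.2)
    jellyfish = (1.0, 0.0, 0.0)
    # Virtual object 2 m away, desk edge 1 m away: the desk hides it
    print(composite_pixel(desk, 1.0, jellyfish, 1.0, 2.0))
    # Virtual object in front of the desk and fully opaque: it covers the desk
    print(composite_pixel(desk, 3.0, jellyfish, 1.0, 2.0))
```

With a low alpha the same code produces the faint, ghostly look the opacity map is meant to avoid, because most of the real background shows through.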

Light field or LDI?

The technology to address the MR case is light field technology, as it can produce a variable focus plane image with correct stereo and spatial head movement support. Doing this, however, is extremely complex.
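To give a feel for what a variable focus plane means, here is a toy one-dimensional version of shift-and-add refocusing, a standard idea from computational photography. It is a sketch under stated assumptions: the light field is a handful of views of the same row of pixels taken from evenly spaced viewpoints, and the function name and parameters are illustrative. It is not a description of how any headset vendor renders light fields.

```python
from typing import List, Sequence


def refocus(views: Sequence[Sequence[float]], offsets: Sequence[int],
            shift_per_unit: int) -> List[float]:
    """Shift-and-add refocus of a toy 1D light field.

    views[i] is one row of pixels seen from viewpoint offsets[i].
    Each view is shifted by offsets[i] * shift_per_unit pixels and the
    shifted views are averaged. Objects whose parallax matches the chosen
    shift line up and look sharp; everything else is smeared out.
    """
    width = len(views[0])
    result = []
    for x in range(width):
        total, count = 0.0, 0
        for view, offset in zip(views, offsets):
            src = x + offset * shift_per_unit
            if 0 <= src < width:  # ignore samples that fall off the row
                total += view[src]
                count += 1
        result.append(total / count if count else 0.0)
    return result


if __name__ == "__main__":
    # A single bright point that moves one pixel per viewpoint step
    views = [
        [0, 0, 1, 0, 0, 0, 0],  # viewpoint -1
        [0, 0, 0, 1, 0, 0, 0],  # viewpoint  0
        [0, 0, 0, 0, 1, 0, 0],  # viewpoint +1
    ]
    offsets = [-1, 0, 1]
    print(refocus(views, offsets, 1))  # focused on the point's depth
    print(refocus(views, offsets, 0))  # focused elsewhere: the point blurs
```

Changing `shift_per_unit` moves the focus plane after capture, which is the property that a flat stereo image lacks. A real light field does this in two dimensions, per eye, at display refresh rates, which is where the complexity comes from.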

If filming a VR video with some GoPros seems not too hard, it is because it is trivial compared to light fields. It requires no head tracking on playback other than rotation, no stereo, no interactivity, no focus and no obscuring. Most of all, it is putting a screen in front of your face, not projecting an image to align and mix with the real vision of each of your eyes.

So where is light field or LDI (Layered Depth Images) at?

Well, the companies making this technology are in an arms race of sorts and thus fairly secretive, but what we do know, without breaking NDAs, is:

- It is working to a point (the wide field of view is still an issue)

- The opacity maps are working really well. Even against a bright, well-lit background it is possible to have very clear graphics

- The head tracking and stereo are not the issue

- The complexity of light fields, their data rates, and update speeds are an issue

- There are some big medium term user acceptance issues with the headsets.

One way to understand these issues is to debunk some of the great VR-AR-MR urban myths.

Myth Busters

“It will get faster and quicker really soon – the screens look crappy but soon the resolution will be much higher”

It is getting better, but these are incredibly complex problems, and billions of dollars are being spent on them because they are hard. While it is true that the screens on VR headsets look low res, they are not. The refresh rates are very high and the images are in stereo with a wide field of view. Already, the data rate on, say, Gear VR is so high that it overloads the phone’s cooling design, and most applications cannot run the screens or the phones at full power. Similarly, the head gear on special purpose VR-AR-MR rigs is being thrashed to get even this current level of performance.
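A back-of-envelope calculation shows why the data rate is already a problem. This is a sketch only: the panel figures below (a 2560 x 1440 phone screen refreshed at 60 Hz, compared with 1080p video at 30 frames per second, both at 24 bits per pixel) are assumptions chosen for illustration, and the function name is hypothetical.

```python
def raw_video_rate_gbps(width: int, height: int, refresh_hz: float,
                        bits_per_pixel: int = 24) -> float:
    """Uncompressed pixel data rate in gigabits per second."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


if __name__ == "__main__":
    # Assumed figures, for illustration only
    phone_vr = raw_video_rate_gbps(2560, 1440, 60)
    hd_video = raw_video_rate_gbps(1920, 1080, 30)
    print(f"phone VR panel: {phone_vr:.2f} Gbit/s")
    print(f"1080p30 video:  {hd_video:.2f} Gbit/s")
    print(f"ratio:          {phone_vr / hd_video:.1f}x")
```

Under these assumptions the panel has to be fed several times the pixel rate of ordinary HD video, every pixel of it freshly rendered for the current head position, before any increase in resolution or refresh rate is considered.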

“They just need to compress it better”

Actually, light field compression is a very hard problem. There is some good compression being shown but much of it is around LDI and not full light fields. A full light field is the holy grail that these companies seek, but there is a stage along the way called Layered Depth Images.

The LDI representation was first proposed in about 1998 by Jonathan Shade et al. (including Dr Richard Szeliski, Director and a founding member of the Computational Photography group at Facebook, and prior to that at Microsoft Research). The main contribution of their work lies in unifying the depth information obtained from several locations into a single view location by warping. This approach comes in handy when dealing with occlusions. An LDI can be constructed by warping n depth images into a common camera view.
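The warping step can be sketched in a few lines. This is a simplified illustration, not the method from the original paper: it assumes ideal pinhole cameras that differ only in position (no rotation, no lens distortion), and the names `Camera` and `build_ldi` are hypothetical. Each input pixel is lifted into 3D using its depth, re-projected into the common camera, and appended to that pixel's list of samples, so surfaces hidden in one view but seen in another end up as extra layers.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[float, object]                      # (depth in reference view, colour)
DepthImage = List[List[Tuple[object, float]]]      # rows of (colour, depth); depth <= 0 means empty


@dataclass
class Camera:
    focal: float        # focal length in pixels
    cx: float           # principal point, x
    cy: float           # principal point, y
    tx: float = 0.0     # camera position in world space (translation only)
    ty: float = 0.0
    tz: float = 0.0


def build_ldi(views: Sequence[Tuple[Camera, DepthImage]], ref: Camera,
              width: int, height: int) -> Dict[Tuple[int, int], List[Sample]]:
    """Warp several depth images into one reference view, keeping all layers."""
    ldi: Dict[Tuple[int, int], List[Sample]] = {}
    for cam, image in views:
        for v, row in enumerate(image):
            for u, (color, depth) in enumerate(row):
                if depth <= 0:
                    continue
                # Unproject the pixel into world space
                x = (u - cam.cx) * depth / cam.focal + cam.tx
                y = (v - cam.cy) * depth / cam.focal + cam.ty
                z = depth + cam.tz
                # Re-project into the reference camera
                z_ref = z - ref.tz
                if z_ref <= 0:
                    continue  # behind the reference camera
                u_ref = round(ref.focal * (x - ref.tx) / z_ref + ref.cx)
                v_ref = round(ref.focal * (y - ref.ty) / z_ref + ref.cy)
                if 0 <= u_ref < width and 0 <= v_ref < height:
                    ldi.setdefault((u_ref, v_ref), []).append((z_ref, color))
    # Order each pixel's layers front to back
    for samples in ldi.values():
        samples.sort(key=lambda s: s[0])
    return ldi
```

A pixel in the result can hold more than one sample, for example a near surface seen by the reference camera and a far surface that only a neighbouring camera could see behind it. That extra layer is what lets a viewer move their head slightly without holes opening up at occlusion edges.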

This is not a full virtualized scene light field. LDIs are great, but they are at their best when you are looking at a scene in, say, VR and want full high resolution head movement. They lack the focus control that is the hallmark of light fields. It is great tech, but it is only a partial solution.

“The headgear will get super light or wireless really soon…”

The weight of the head mounted display is one of the real issues for acceptability. A heavy head unit will lead to user fatigue, while cords and cables can be a problem in many instances.

Having seen Google Glass, people assume that a Hololens will be just as small or smaller. Here there are two problems: wireless data rates and batteries. First, there is no high speed wireless solution, Bluetooth or otherwise, that can pass the high data rates required from a remote pocket unit to a lightweight pair of glasses. This may change, but there is no current tech that has the low latency and high data rates required. None.

Second is an even bigger problem: the batteries. If the current phone based VR setups are running hot (to the point of shutting down), you know that they must be chewing power. So the problem is one of lightweight, high capacity batteries. There is an instant billion to be made overnight if you can deliver a battery breakthrough, but then that is true for every mobile phone company, laptop maker etc. Some of the greatest technical minds of our generation are focused on new battery tech and we have simply not seen any quantum breakthroughs yet.

If you can’t separate the computer and its battery from the head gear, you can’t have a lightweight pair of ‘Google Glass’ style AR-MR glasses. For now, you are left with the weight of the computer and its battery on your head, or you need a good cable back to a computer.

There is a real issue about head strain with VR due to weight on your head and face. This is perhaps why only short VR projects have proven popular.

“They will make AR contact lenses within a few years”

No they won’t.

Yes, there have been patents, but that’s all they are. And yes, there has been a ‘prototype in Japan’, but it had fewer pixels in total than you have fingers on your hands.

Again, the computer power and battery problems do not get solved by making a contact lens.

There are medical contacts by companies like Google but they are not AR-MR solutions.

New Camera Platforms

What is exciting is the move from RGB camera phones, and cameras in general, to RGBD inside a computer environment that allows for complex computational photography. This is very different from a 360 degree camera: it combines a camera, depth sensors and computer power for a whole new range of imaging solutions. One such advance is Google’s grand new Project Tango.

Developer kits are shipping for Google’s Project Tango. Project Tango combines 3D motion tracking with depth sensing to give your mobile device the ability to know where it is and how it moves through space. Already Vive HTC are on board and Google seems committed to working with more than just one company.

Google is not alone in producing RGBD camera tech. Intel is itself very far advanced with cameras, and the company’s RealSense camera (SR300) is shipping. This camera, like the SR200 before it, is a user facing camera for use in laptops, Ultrabook devices, 2-in-1 and all-in-one devices, and allows users to interact with an additional layer of accurate depth information.

Apple purchased PrimeSense in 2013, the camera technology company that was at the heart of the Microsoft Kinect camera sensor. This caused Microsoft to develop a whole new line of tech for the next generation of the Kinect.

Once there is widespread availability of these cameras in mobile phones or other mobile computing devices, it will be possible to build very complex 3D views of a scene in successive passes simply by moving the phone around. The end game would be to use this successive additive approach to build complex (but most likely static) light fields from one’s cell phone. This would mean today’s GoPro VR style shoots are tomorrow’s cell phone light field ‘snapshots’.

Magic Leap and funding

Magic Leap’s total funding now stands at $1.39bn

Earlier this month, Magic Leap, the Florida “startup”, released another video of its unreleased technology (see below). The company, which has been funded by Google and technology investors like Andreessen Horowitz, has closely guarded its secret “photonic light field” technology, but many have called it revolutionary. fxguide has spoken to several users who bear testament to this video being an accurate reflection of current technology but NOT the best they have seen or the most impressive of their demos.

Magic Leap’s technology is known about mainly from their patent, “Planar Waveguide Apparatus with Diffraction Elements,” filed by Magic Leap founder Rony Abovitz in 2011. It defines a headset with small projectors that beam images directly onto your eyes, and sensors that blend the images with your surroundings, for instance allowing virtual jellyfish to vanish underneath tables, only to appear on the other side.

https://www.youtube.com/watch?v=GmdXJy_IdNw

Above: the clip is shot and recorded directly from Magic Leap technology without later comp or vfx of any type.

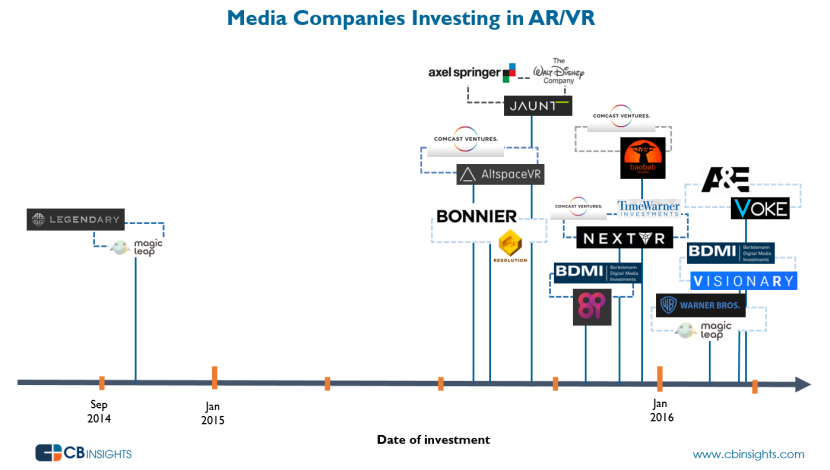

Big Media Investment

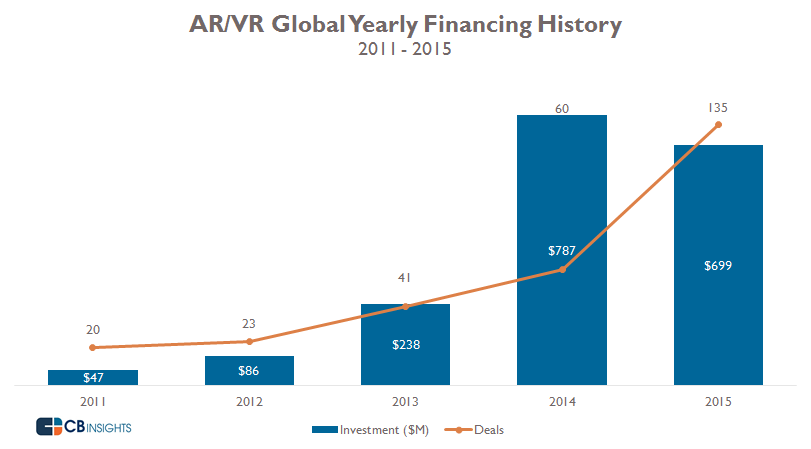

Much is written about the funds being invested in VR, but research firm CB Insights has recently quantified the industry segment. In this first timeline, one can see the adoption of VR by the mainstream VR focused companies, showing when these key players got funding: companies such as NextVR, Jaunt, 8i and others we have covered here at fxguide.

With nearly 20% of AR/VR deals going toward companies focused on content, media companies don’t want to be left out on the next content-delivery platform, so in this mix of new companies are traditional players like Disney, A&E and others.

Venture Capital

The level of funds going into VR is incredible from a vfx or post-production perspective. This excludes money spent by say Microsoft on Hololens since it is not seeking VC funding.

The vertical axis is both number of deals and millions of dollars (US$), but this is just up to 2015. We used CB Insights data to see funding trends to private companies in virtual and augmented reality. Despite seeing deal activity jump 125% in the year, 2015 saw funding pull back 11%, down to $699M (funding totals in 2014 were buoyed by Magic Leap’s $542M Series B mega-round). 2015 still saw plenty of large deals including Jaunt ($65M Series C), ANTVR ($46M Series B), and Movidius ($40M Series E).

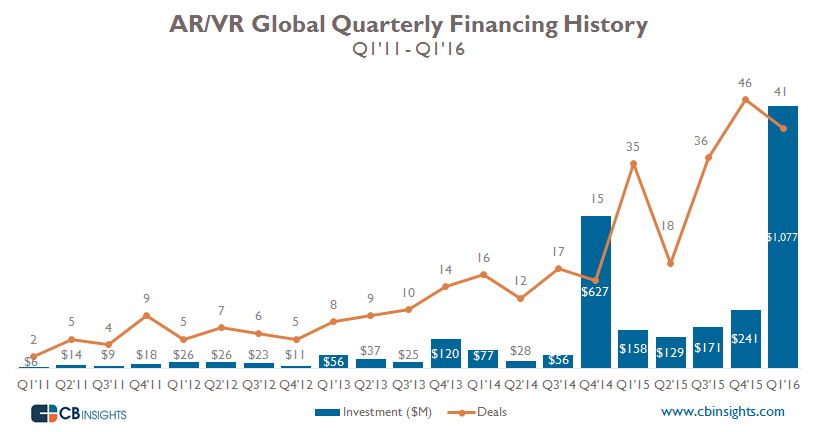

But that, as they say, “was so last year.” With the public increasingly exposed to consumer VR headsets like the Oculus Rift and HTC Vive, investment has poured into the category, with $1.1B in funding to startups in this space in Q1’16 alone, across 41 deals.

If Google and Facebook cast a long shadow over the VR space thanks to Cardboard/Android and Oculus Rift, then it is Magic Leap and Microsoft that dominate AR-MR. And it is Magic Leap that has been getting the outside investment dollars.

A look at the quarterly trends highlights the large mark Magic Leap leaves on the overall industry. The two largest quarters for funding, including Q1’16, were bolstered by mega-rounds to the Florida-based AR-MR company. Magic Leap’s latest Series C fundraising in February was for a massive $793M, which brings its total funding to nearly $1.4B.

With the mega-round considered in the tally, overall funding in Q1’16 saw 347% growth quarter-over-quarter, with the number of deals at a moderate 41.

Other notable AR/VR deals in Q1’16 went to VR systems maker MindMaze ($100M), mobile-based AR app Blippar ($54M Series D), and VR headset maker Baofeng Mojing ($34M Series B).

* Special acknowledgment to James Morra @ Electronic Design

What’s next? Neuro-gaming

Wikipedia defines neurogaming as “a nascent form of gaming that involves the use of Brain–computer interfaces such as EEG so that users can interact with the game without use of a traditional controller.”

At last week’s TEDxSydney conference, Karen Palmer spoke of her experiences combining neurogaming, film, and parkour to create dynamic immersive experiences that may be the level beyond VR, AR or MR.

Her work, and the work of others in the field, aims to create 360 immersive experiences that are driven by brain wave activity. Her recent project SYNCSELF 2 recreates the process of transcending fear through parkour and was showcased at the iconic V&A Museum in London. This interactive parkour experience is controlled by the user’s mental focus, monitored by EEG biosensors, using technology developed by Brunel University London.

Her next project is called Riot, in which the user finds themselves at the outbreak of an urban riot with one task: to get home alive. The game play is a reaction to your reaction to the circumstances.

For example, at one point in SYNCSELF you are confronted by a difficult jump, and the game’s audio starts telling you that you can’t make the leap and that you will fail. Only by remaining focused and not giving in to that emotional anxiety can you make the jump. It does this via a series of sensors built into the headset. If you get nervous, your physical body reflects this and the system’s bio-sensors flag you to fail. As such, the game acts as a form of bio-feedback and can be quite powerful for players to experience.

We will have an exclusive interview with Karen Palmer coming up on fxguide very soon.