During the opening session of the 2024 Computer Vision and Pattern Recognition (CVPR) Conference, the CVPR Awards Committee announced the winners of its prestigious Best Paper Awards. Each year, these awards recognize top research in computer vision, artificial intelligence (AI), machine learning (ML), augmented, virtual and mixed reality (AR/VR/MR), deep learning and related fields.

This year, from more than 11,500 paper submissions, the CVPR 2024 Awards Committee selected the following winners for the honour of ‘Best Paper’, presented during the Awards Program at CVPR 2024, which runs through 21st June at the Seattle Convention Center. Here are the two winning papers.

Best Papers

● Generative Image Dynamics

Authors: Zhengqi Li, Richard Tucker, Noah Snavely, Aleksander Holynski

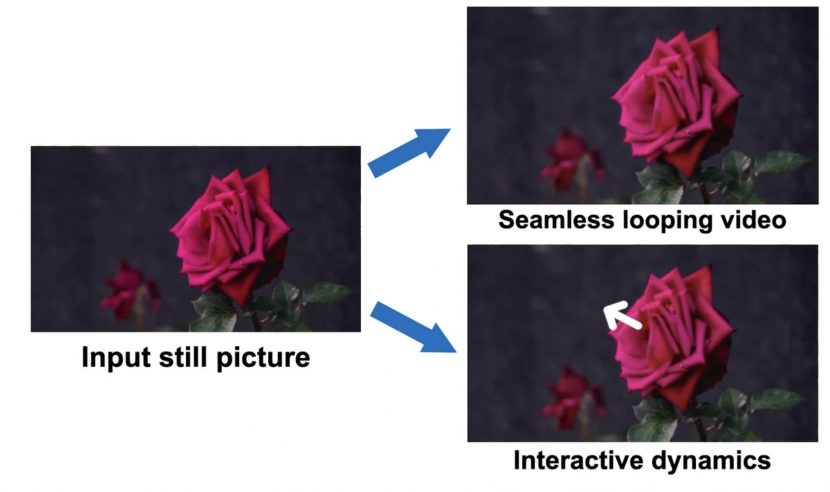

The paper presents a new approach for modelling natural oscillation dynamics from a single still picture and uses it to produce photo-realistic animations. There is a fully interactive demo online, so try it yourself. In short, you can click and drag on an image to move things in it and watch them spring back into position believably, as if you had touched the real objects. In other words, it is fun to play with, and it could be a brilliant way to bring imagery to life in engaging interactive experiences. The approach also enables several downstream applications, such as creating seamlessly looping videos or interactive image dynamics. Imagine this at vast scale, in a fully interactive, immersive, high-resolution Apple Vision Pro environment built from real-world photography: the effect would be remarkable, bringing panoramas and environments to life.
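As a rough intuition for the "spring back" behaviour only, a single dragged point can be modelled as a damped harmonic oscillator. This is a toy sketch and not the authors' method, which uses a learned generative model to predict motion from the image. The function name `spring_back` and the frequency, damping and frame-rate values are hypothetical choices for illustration.

```python
import math


def spring_back(x0, v0=0.0, freq_hz=2.0, damping_ratio=0.1, dt=1 / 60, steps=240):
    """Toy model (hypothetical parameters): a point dragged to displacement x0
    (e.g. in pixels) and released, simulated as a damped harmonic oscillator.

    Returns the displacement at each of steps + 1 frames, starting with x0.
    """
    omega = 2 * math.pi * freq_hz  # natural angular frequency (rad/s)
    x, v = x0, v0
    trajectory = [x]
    for _ in range(steps):
        # Spring restoring force pulls toward rest; damping opposes velocity.
        a = -omega ** 2 * x - 2 * damping_ratio * omega * v
        # Semi-implicit Euler: update velocity first, then position.
        v += a * dt
        x += v * dt
        trajectory.append(x)
    return trajectory


if __name__ == "__main__":
    traj = spring_back(10.0)
    # Print every 15th frame (a quarter second at 60 fps).
    for i in range(0, len(traj), 15):
        print(f"t={i * (1 / 60):.2f}s  x={traj[i]:+.3f}")
```

For example, `spring_back(10.0)` models a point dragged 10 pixels and released: the displacement overshoots past zero, oscillates and decays toward rest over roughly four seconds at 60 frames per second. Semi-implicit Euler is used because, unlike explicit Euler, it does not artificially pump energy into an oscillating spring at this step size.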

● Rich Human Feedback for Text-to-Image Generation

Authors: Youwei Liang, Junfeng He, Gang Li, Peizhao Li, Arseniy Klimovskiy, Nicholas Carolan, Jiao Sun, Jordi Pont-Tuset, Sarah Young, Feng Yang, Junjie Ke, Krishnamurthy Dj Dvijotham, Katherine M. Collins, Yiwen Luo, Yang Li, Kai J. Kohlhoff, Deepak Ramachandran, and Vidhya Navalpakkam

Text-to-image generative AI models such as Stable Diffusion and Imagen have made significant progress in generating high-resolution images from text descriptions. However, many generated images still suffer from issues such as artifacts and implausibility, misalignment with the text description, and low aesthetic quality. This paper introduces the first rich human feedback dataset for image generation. The authors designed and trained a multimodal transformer to predict this rich human feedback and demonstrated several ways to use its predictions to improve image generation. This matters because supervised learning is central to ML, and more and better labelled data is in great demand.

CVPR is the preeminent computer vision event for new research in support of artificial intelligence (AI), machine learning (ML), augmented, virtual and mixed reality (AR/VR/MR), deep learning and related fields. It is sponsored by the IEEE Computer Society and the Computer Vision Foundation. CVPR delivers important advances in all areas of computer vision and pattern recognition and in the many fields and industries they affect. It includes tutorials and workshops, a leading-edge expo, and networking opportunities. CVPR is attended by more than 10,000 scientists and engineers each year.