At SIGGRAPH Asia 2013 in Hong Kong, Hao Li will present a technical paper entitled 3D Self-Portraits. And that’s exactly what the pipeline Li and his collaborators have developed will do – create a 3D ‘selfie’ using a single Kinect sensor that can then be 3D printed. We talk to Li, a computer science professor at USC, about the research, how it will soon be available to everyone, and about his recent stint at ILM on real-time performance capture.

fxg: Can you describe the ideas behind the ‘3D Self-Portraits’ research?

Hao Li: The project we’re going to present at SIGGRAPH Asia is called 3D Self Portraits. The idea is that you want to create 3D selfies of yourself. This whole 3D printing business is pretty big, it’s a huge trend. People are thinking about ways of, instead of just having a photograph of yourself, you have a 3D print of yourself.

So there are several problems you face if you want to create a 3D selfie of yourself. You have two choices – either you use a technology like Kinect Fusion that Microsoft released, where you take your Kinect or a hand-held 3D scanner, scan the person by walking around them and you have your 3D model. It’s all about digitizing a 3D model.

But that can be very difficult because first of all you have to stand very still. The second problem is you need a second person. So if you want to do a lot of experiments, for example, it can be a pain to have someone else there with you.

– Above: watch the 3D Self-Portraits demonstration video that accompanies the SIGGRAPH Asia 2013 Technical Paper.

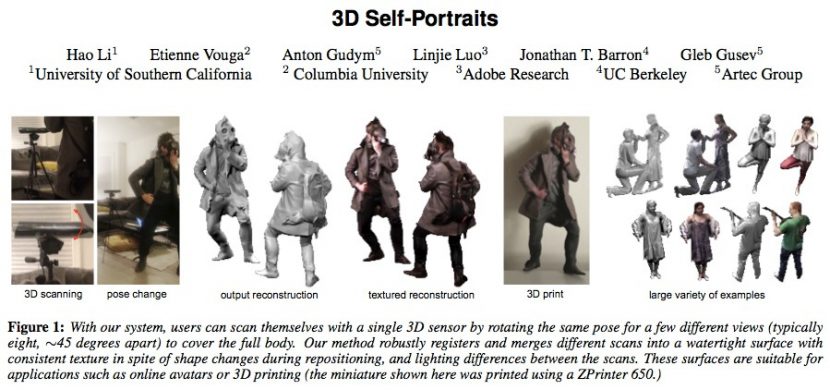

So the idea was, is it possible to capture yourself, without requiring an operator, inside your home? You don’t need to go to a high-end studio to digitize your body. To do that we needed a low-cost 3D scanner, so we used Kinect, put it on anything you want but ideally a tripod, put it in your living room and then you just spin around. The Kinect is on a tilt head to go up and down to measure as much as possible and increase the resolution.

In the end, you just have a single button application software that automatically puts all the scans together and you can create a full model with textures, and even textures that are compensated – because if you turn around in a static environment the lighting might change. The lighting is static, but the shading might change across the views.

fxg: How does the setup solve for the different positions a person must stand in?

Hao Li: Well, I’ve been working on that for a long time. If you have dynamic point clouds, that change over time, or 3D shapes of arbitrary models, how can you find relationships between them? How can you find very dense correspondences even though their shapes are deforming or different. And even though there are no manually labelled correspondences. The technique that we used is a generalization of something we’ve done before called ‘Non-rigid registration’ which allows you to automatically warp one scan onto another even without having the user to tell which part has to go where.

fxg: How does that work?

Hao Li: It’s basically a numerical optimization problem that is based on refinement. So it has to be not too different. But this algorithm is extremely robust so you can have a significant amount of deformations happening. You can see in the video there are regions where the legs are really, really apart. You can have up to 10 or 20cm apart and it can figure out how to deform everything. One of the biggest issues is that if you are given two shapes, there’s only a sub-region in the two-scans that have a common region of interest, so you can only find correspondences in those overlapping regions.

fxg: How successful is this in someone’s living room, say?

Hao Li: You still need some decent lighting. If you have a strong backlight it’s just going to be really dark, especially as the Kinect has an auto white balance. But to get high quality textures, we use an optimization algorithm that can separate albedo and shading from the surface color that it’s capturing. What it’s trying to do is trying to create a smooth blending on the albedo and, by separating the shading, you can obtain a consistent color information throughout different views even though your pose is changing.

fxg: Can you talk more about the role of the Kinect in all of this?

Hao Li: The Kinect gives you a very noisy and low resolution point cloud. In the future you’ll have higher resolution depth sensors (although even if they are higher resolution, depending on the distance, the resolution will always be limited because it’s all discrete). We do a trick where we tell the person to go relatively close to the Kinect – roughly 1 meter. And the Kinect is actually tilting up and down, and by doing that every frame is actually recording a sequence of 3D scans, but it actually doesn’t have any sense of where the Kinect is relative to space, and relative to where the user is. It has to compute it on the fly while it’s actually tilting. So this is a registration process.

Also, because the resolution is very low, if we swipe the Kinect multiple times you have a lot of overlapping regions between every frame, so what we can do there is super-resolution. We can cancel out the noise information by using redundancy – that’s how we get high-res geometry from a low-res Kinect. And a better resolution depth sensor would give you even higher resolution by over-sampling your subject.

fxg: There’s also an imaging or texturing side to the system which is important in getting the ‘selfie’, isn’t there?

Hao Li: The texture is very important because given that the geometry is still relatively low-res. So the color is important because it helps tell what clothing you’re wearing, skin color hair color – the identity of a person. Fortunately, the Kinect has a higher effective resolution of a texture, so we can do sort of the same thing as we do with the geometry information – the point cloud. We can also super-sample the textures and get even higher resolution than we could get out of one single frame. The video capture is still a little bit noisy and the colors might not be very consistent, even though the Kinect is tilting up and down.

fxg: This research seems to combine some high tech study with something practical – what led you to it?

Hao Li: The most attractive part of this technology, apart from the low cost – probably only around $100 – is that when I get to capture someone I immediately get to see the results. The computation takes about five minutes and in the end they just see themselves immediately in 3D. It dramatically reduces turnaround time for rapid prototyping, and if you think about visual effects there’s a big movement in shifting post-production into pre-production. Previs is being used heavily of course but their also trying to increase the quality of previsualization work in order to make less mistakes while telling the story and have a better workflow.

For now, it’s very much a prototype, but I don’t see why this technology couldn’t be used to create crowds that are real people and also give animators the ability to test out certain scenes. This was actually a collaboration with a couple of friends from Columbia, Berkeley, Adobe and a 3D hand-held scanning company Artec. For the last three months we’ve been working on the productization of this technology so we’d like to offer a web service that’s free. Anyone with a Kinect scans themselves at home, upload a 3D model onto an online gallery and press a button and order your 3D print directly. The project is called Shapify.me and will be launched soon.

fxg: How do you think this system might be used in visual effects or animation, say in creating characters?

Hao Li: That’s a super-cool idea and we already have results. We’re working with a research scientist called Ari Shapiro from USC ICT and he has built a technology called SmartBody. The idea is that he needs a way to take a 3D scan of a person – it doesn’t matter what the person is wearing. The tool automatically rigs your 3D scan. The possibilities here are infinite, so you can really think of yourself in a game or a virtual world for any possible purposes.

– Above: watch a demo of SmartBody from USC ICT.

fxg: Can you talk about your own history and areas of interest in this research?

Hao Li: I always felt there was this merging between the field of computer graphics and computer vision where you really have the idea of capturing reality directly, instead of trying to have an artist and modeling and taking reality as only a reference. So it’s bringing the physical world into the digital world. In my PhD I started becoming interested in deformable things – human bodies, facial animation – things that were pretty new at the time. At the time we also looked at real-time 3D acquisition systems and the idea was can you re-construct animation directly from reality? The whole thing went that direction, and there are a ton of problems that haven’t been solved yet. One being more realistic facial animation, human bodies, hair capture. These are the core directions I’m following for the next couple of years.

fxg: You worked at ILM as a research lead on some next generation real-time performance capture technology, which many people have seen via that YouTube video. Is there anything you can say about that work?

Hao Li: I can’t say much but I can say that I was pretty much involved in the real-time virtual production effort there. A lot of technologies were built to help produce upcoming Lucasfilm movies. I think the entire effort has to do with how the visual effects industry is changing. There was an effort there to work out what the future of visual effects should be, including the shift from post-production to pre-production. First of all, to improve the quality of the movie, and then to reduce costs by making a movie in real-time. The idea is that the real-time graphics quality is catching up. If you look at recent game engines like Unreal 4, Frostbite – they all look amazing. It seems like in the coming years that will still improve, especially on the animation side. Activision, for example, has spent a lot of time and effort in pushing real-time facial animation.

Above: watch the LucasFilm/ILM performance capture demo.

fxg: What do you think is the future of performance capture?

Hao Li: The direction I want to take in the next few years is trying to take all these 3D capture technologies to the people, sort of like a democratization of performance capture tech. You have to solve two big problems still; one is that you need high fidelity tracking and capture in real-time, and the second is that all these things need to work in arbitrary environments. This whole 3D Self Portraits research is an initial step, but in the future I think you will be able to have drastic motions, actions that are being occluded entirely, or be able to capture things in the wild. That’s really important because 3D depth sensors will be more ubiquitous – you will find them in tablet devices, on your laptop, possibly inside cars.

Another thing that I think that is actually interesting – initially the goal was can we capture human body shapes at home. So virtual clothing was an idea – solving the retail store problem of being able to try on your clothes right from home without going to a shop. One of the things, though, that still stops us from doing this ‘virtual try-on’ is privacy. Nobody, or not many people, would really like to scan their naked body and upload them on a specific server. So it’s important to anonymize your data or have a way to swap your clothing without taking it off, but these are directions we’d like to go in. It sounds a little bit like science fiction at the moment but I think it’s totally doable in a couple of years.