Generative AI

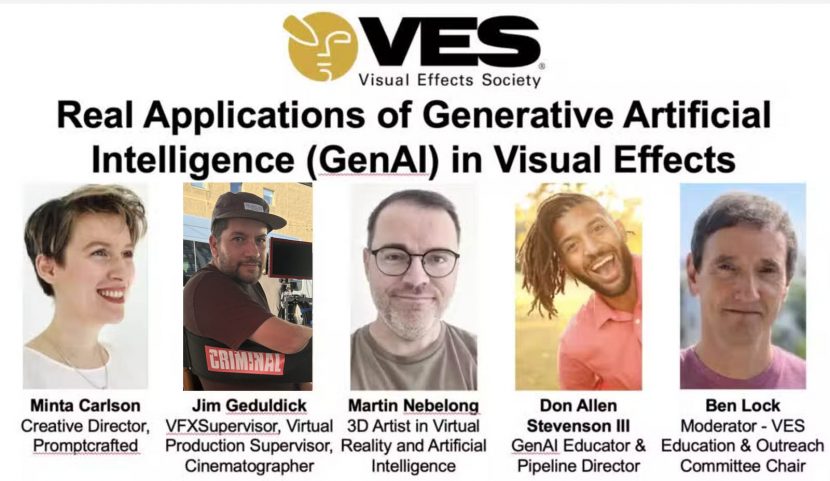

ChatGPT has undeniably been the poster child for the generative AI (GenAI) revolution, capturing both headlines and imaginations. But as AI-powered tools continue to push the boundaries of creative content generation, it’s clear that GenAI’s impact extends far beyond chatbots. This week, the Visual Effects Society (VES) is hosting a timely panel discussion exploring GenAI’s role in the VFX industry, featuring a roster of experts with hands-on experience at the cutting edge of AI-driven artistry. Among them is visual effects supervisor Jim Geduldick, a longtime friend of fxguide and a recognized pioneer at the intersection of virtual production, machine learning, and computer vision in visual effects and filmmaking.

Geduldick’s career spans decades of creative problem-solving, from real-time rendering to LED volumes, making him well placed to demystify AI’s impact on visual effects. With AI evolving so rapidly, panel discussions like this are becoming increasingly important. AI may not yet have the digital consciousness or theory of mind popular in sci-fi movies, but it is getting closer to helping VFX artists create those movies.

Joining Geduldick on the VES panel are other prominent voices in the space, including avant-garde AI artist Minta Carlson, concept artist and digital sculptor Martin Nebelong (who has worked extensively with AI tools such as OpenAI’s SORA), and creative technologist Don Allen Stevenson III. The discussion promises to examine AI’s influence from multiple perspectives—technical, artistic, and cultural.

We sat down with Jim Geduldick to preview the panel, and he emphasized the need to distinguish between GenAI and AI as a whole. “A lot of what we do now is just educating clients, studios, and labels,” he said. “So much of the discourse around AI in VFX gets lumped into ‘good, bad, or ugly’—with people assuming it’s just tools like Runway ML or SORA. But in reality, we’re talking about a range of technologies, from machine learning to computer vision, that have been foundational to VFX for years.”

The need for education around these technologies is not new. AI represents just the latest in a series of technological shifts that have required industry-wide adaptation. AI is already woven into the fabric of VFX—often in ways that go unnoticed. “The misconception that AI is only about generative tools is one of the biggest hurdles,” Geduldick explained. “AI is in render denoising, deep compositing, and countless other aspects of production, yet the public doesn’t realise how much we’re already relying on it.”

One of the most persistent misconceptions is the belief that using an AI model inherently contributes to its training data, which is not the case. Training data is used to build a model, but running that model does not have to feed anything back into it. Understandably, many in the industry remain concerned about copyright, fearing that running an AI model on a local project automatically adds that project’s data to a global dataset. “People freak out over copyright issues, assuming that running an AI model on local data means that data is automatically being added to a massive public dataset,” Geduldick said. “But training data can be air-gapped. Studios can keep their AI tools completely self-contained and secure, just as they do with cloud computing.”

Beyond legal and ethical considerations, Geduldick’s focus at the VES panel will be on AI’s practical applications in VFX, and in particular on how AI is already enhancing production pipelines. “For example, the way we approach site scans and data wrangling has changed with AI-assisted depth capture,” he said. “We’re integrating LIDAR scans with machine learning to automate tedious tasks, allowing artists to focus on creative work.”

A particularly promising area of AI-driven innovation is the use of Neural Radiance Fields (NeRFs) and Gaussian splats for location and set surveying. Geduldick has been actively involved in this space, contributing to early research with NVIDIA. “We’re getting closer to a state of maturity where NeRFs and splats can be viable for production,” he noted. “That said, we still face challenges—occlusion errors, data quality issues, and the need for additional processing to make these formats truly production-ready.”

Another major focus for Geduldick is the role of AI in digital doubles. Several of his recent projects have required the creation of digital doubles for actors who are no longer living. “One thing that I’ve come across, especially this last year, was having to do a lot more doubles with people that have passed on,” he said. “The challenge is that if we don’t have a person that we can scan today, we need to find someone with similar facial structure to make it believable.” In these cases, AI face-replacement techniques such as deepfakes can work particularly well.

The quality of training data remains a key factor in AI-generated assets, and Geduldick emphasized the increasing importance of synthetic data in addressing these challenges. “AI-generated training datasets can be incredibly useful for avoiding copyright issues and ensuring data quality,” he noted. “Yet, it’s a topic that often gets overlooked in these discussions.”

The VES panel is scheduled for Thursday, February 20th, beginning at 11:00 AM PST. Given the high level of interest, the session is expected to extend beyond its allotted time to accommodate audience questions.

With AI in VFX becoming a highly debated topic, this discussion aims to offer clarity and practical insights rather than speculative hype. Geduldick’s focus will be on real-world applications and current production pipelines, reinforcing the idea that AI is not a replacement for artists but a tool that, when used effectively, enhances the creative process. “AI is a tool, not a magic button,” he said. “It’s about finding ways to use it effectively, not replacing the artistry that makes visual effects so compelling.” In a rapidly evolving industry, such discussions are essential to ensuring that AI is leveraged in ways that support and elevate visual storytelling.

The panel takes place this week, on Thursday, February 20, 2025, at 11:00 AM PST (1:00 PM CST, 2:00 PM EST, 7:00 PM GMT, 8:00 PM CET; and Friday, February 21, 2025, at 12:30 AM IST, 6:00 AM AEDT, and 8:00 AM NZDT).