Directed by Anthony and Joe Russo, Captain America Civil War, one of the most successful Marvel films of all time, was a combined effort of multiple effects houses to bring to life what has been referred to as ‘Avengers 2.5’.

IMDB lists nearly 20 effects houses on Captain America Civil War, encompassing visual effects, special effects, stunts and practical suits. Some companies, such as Lola VFX, specialise in particular types of work (de-aging, see below), while others, such as ILM, focused on sequences such as the major so-called ‘Splash Panel’ sequence in Germany.

In addition to those mentioned above, visual effects work came from Deluxe’s Method Studios, Luma Pictures, DNeg, Image Engine, Trixter, Cinesite and others. The film was extensively previsualized by The Third Floor (see below).

DOP & Lensing

Trent Opaloch, DOP on Captain America Civil War, is a Canadian cinematographer best known for his work with director Neill Blomkamp and with the Russo Brothers. His major films include District 9 (2009), Elysium (2013), Captain America: The Winter Soldier (2014), and Chappie (2015).

This was the first film to be shot with the Alexa IMAX camera, and it features 15 minutes of that footage. The Alexa IMAX was a collaborative development between Arri and IMAX, customizing the Alexa 65 6.5K camera so that footage could be shown in IMAX theatres without overhauling their equipment.

Opaloch, a BAFTA nominee, told THR that he will be reteaming with the Russo Brothers on the next Avengers films. On Civil War, he put the Alexa 65 through its paces, using it on a Technocrane, Steadicam and dollies, and even with a drone team that flew the camera over the German airport.

“Additionally, key grip Michael Coo developed a bungee rig system. Mark Goellnicht, the ‘A’ camera operator had used a similar system on Mad Max: Fury Road,” Opaloch explained. “Basically, it’s a long bungee tube attached to a rope that will run up 30 or 40 feet; we suspend that line over the set. It allows the operators to get very dynamic and right in there with the action; it feels like handheld but they don’t have the brunt of the weight of the camera.”

In the shot below, watch for the camera operator jumping after Bucky!

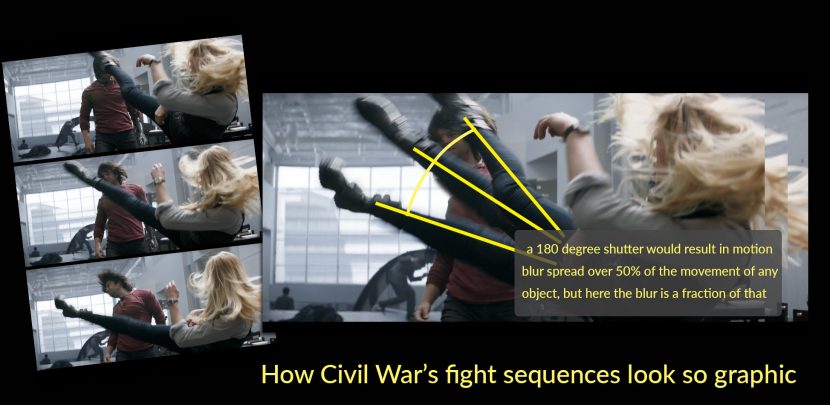

The fight sequences in the film used a reduced shutter angle, so the images appear very graphic and almost strobe-like.

As you can see in our infographic below, if you superimpose the movement of Agent 13’s leg from three successive frames, the amount of blur in each is much less than would normally be seen. While there is some blur on her leg, it is far less than one would expect from a traditional 180-degree shutter. A 1/48th-second shutter (at 24fps) is open for exactly half of each frame interval, so one would expect the leg to be blurred across half the distance to its next position. Here the blur is only a fraction of that. This technique was first widely deployed in Saving Private Ryan, and it has the cinematic effect of making the action seem very brittle, impactful and visceral, while also being slightly disorientating and kinetic.
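The shutter arithmetic above can be sketched in a few lines of Python. This is a minimal illustration assuming an idealised rotary shutter and an object moving at constant speed within a frame. The article does not state the shutter angle actually used on the fight scenes, so the 90- and 45-degree values below are purely hypothetical comparisons.

```python
from fractions import Fraction


def exposure_time(shutter_angle_deg, fps):
    """Seconds the shutter is open per frame for an idealised rotary shutter."""
    return Fraction(shutter_angle_deg) / 360 / fps


def blur_fraction(shutter_angle_deg):
    """Fraction of the frame-to-frame motion smeared into a single frame."""
    return Fraction(shutter_angle_deg) / 360


def blur_length(displacement_per_frame, shutter_angle_deg):
    """Approximate streak length for an object moving at constant speed."""
    return displacement_per_frame * blur_fraction(shutter_angle_deg)


if __name__ == "__main__":
    # 180 degrees is the traditional film shutter; 90 and 45 are hypothetical
    # "reduced" angles for comparison.
    for angle in (180, 90, 45):
        t = exposure_time(angle, 24)
        print(f"{angle:>3} deg at 24 fps: open 1/{1 / t} s, "
              f"blur covers {float(blur_fraction(angle)):.3f} of the step")
```

At 180 degrees the leg would smear across half the distance to its next position; at a hypothetical 45 degrees, across only an eighth of it, which is the "fraction" described above.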

Young Tony & Vision

Tony Stark was de-aged in Captain America Civil War in what is now a Marvel trademark. The scene told a version of Tony’s youth via a hologram (although this is only later revealed to be the case). Once again, as with Captain America, Peggy Carter, Michael Douglas (in Ant Man) and others, the visual effects were created by Lola VFX in LA. The team primarily used compositing techniques, and Lola also provided the robotic perfection of Vision. Both Robert Downey Jr. as Tony Stark and Paul Bettany as Vision were filmed on set rather than created in CGI. Lola’s work is brought to life by painstaking compositing that seeks to modify the actor without losing the actor’s performance and acting choices.

Tony Stark’s younger self required Lola VFX to de-age the actor by almost 30 years. “In this case, we analyzed footage of Mr. Downey at the approximate age that we wanted to target, which was around the time of the film Less Than Zero [when Downey was in his early 20s],” Trent Claus, visual effects supervisor at Lola VFX, recently told THR.

One shot that required a de-aged Tony Stark was 4,000 frames long. That made tracking and compositing difficult, as errors can accumulate over so many frames, and it was further complicated by the actor turning his head from side to side multiple times in the one shot, which ends in a close-up.
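Why length matters can be illustrated with a simplified model, which is an assumption for illustration and not Lola VFX’s actual tracking method. If a track is built by chaining frame-to-frame estimates, each carrying a small independent error, the accumulated drift behaves like a random walk, so its RMS grows with the square root of the frame count. The 0.05-pixel per-frame error used below is hypothetical.

```python
import math
import random


def expected_drift(per_frame_error, frames):
    """RMS drift of a chained track: independent per-frame errors add in quadrature."""
    return per_frame_error * math.sqrt(frames)


def simulated_drift(per_frame_error, frames, trials=500, seed=1):
    """Monte Carlo check: chain noisy frame-to-frame offsets, measure RMS end error."""
    rng = random.Random(seed)
    sq_sum = 0.0
    for _ in range(trials):
        drift = 0.0
        for _ in range(frames):
            drift += rng.gauss(0.0, per_frame_error)  # error added at every frame
        sq_sum += drift * drift
    return math.sqrt(sq_sum / trials)


if __name__ == "__main__":
    # Hypothetical 0.05 px error per frame: a short shot versus the 4,000-frame shot.
    for n in (100, 4000):
        print(f"{n:>5} frames: ~{expected_drift(0.05, n):.2f} px expected drift")
```

In practice, trackers typically limit this by anchoring to keyframes or re-acquiring features, so real drift is much smaller; the sketch only shows why errors compound as a shot gets longer.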

Previs

Gerardo Ramirez was the Previs and Postvis Supervisor for The Third Floor. We spoke to him along with Austin Bonang, Previs Supervisor for The Third Floor, about the company’s tenth major Marvel action feature film.

fxg: When did The Third Floor get involved?

Gerardo Ramirez: The Third Floor was very involved from the early stages. We handled previs across all the major sequences and then worked on postvis as well. My team in Atlanta visualized scenes including Bucky’s Escape and the Raft Prison, and we had a team under Austin Bonang in Los Angeles that worked on Lagos, the UN, Bucky’s Capture and movement studies and tests for characters like Black Panther. Some of the larger scenes, like the Final Battle and the airport battle, were shared between our teams.

We were privileged to work with the directors, visual effects supervisor Dan Deleeuw, the producers, the stunt department and many other departments through the process as ideas and approaches were pitched. There was great openness to ideas and a mantra to achieve the best ones. A lot of our work was sequencing story beats, either from boards, if available, or from outlines in the script, where we would go on to visualize the particulars of what would be happening beat to beat in, say, a large fight scene.

Image caption (partially lost): “…they developed flow of sequences and planned technical shooting and visual effects … characters by the visual effects teams.” Copyright Marvel 2016 and courtesy of The Third Floor, Inc.

fxg: Were you doing previs for the story beats or a technical previs for how it was to be shot?

Gerardo Ramirez: Sequencing the action through previs was very important, and this was done for almost everything we previs’d as the filmmakers visualized the flow of shots and scenes. The process was especially critical for the Final Battle, where there needed to be a certain logic to the character staging, with each beat having a cause and effect to move the story forward. With the “Splash Panel” scene, where it’s hero against hero, everyone needed to get a feel for the type of fighting that might result when the tactics the characters normally use to defeat their adversaries are unleashed on each other.

Beyond visualizing story beats, there was a lot of technical planning and previs where we collaborated with key departments to create mockups and schematics to inform the shoot. For the Lagos scenes, Austin’s team worked with production to determine the height of the building for a Falcon stunt and what his free-fall would look like over that distance. They also visualized flight paths, speeds, etc. for Red Wing that helped outline how high or low the character could go and how he would maneuver. In one scene, Falcon flies through a market at speed, weaving through the crowd. This was another technical challenge: figuring out the path he would take and how fast he would fly.
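The kind of techvis arithmetic involved in sizing a free-fall can be sketched with basic kinematics. This assumes a fall from rest with no air resistance or wing drag, and the candidate heights are hypothetical; the article gives neither the building height nor details of The Third Floor’s actual tools.

```python
import math

G = 9.81  # standard gravity, m/s^2


def fall_time(height_m):
    """Seconds to free-fall height_m from rest, ignoring air resistance."""
    return math.sqrt(2.0 * height_m / G)


def fall_frames(height_m, fps=24):
    """Frames of screen time the fall occupies at real speed."""
    return fall_time(height_m) * fps


def impact_speed(height_m):
    """Speed in m/s at the bottom of the fall, v = sqrt(2 g h)."""
    return math.sqrt(2.0 * G * height_m)


def distance_fallen(frame, fps=24):
    """Metres fallen after a given number of frames, d = g t^2 / 2."""
    t = frame / fps
    return 0.5 * G * t * t


if __name__ == "__main__":
    for h in (10, 30, 60):  # hypothetical candidate building heights in metres
        print(f"{h:>3} m: {fall_time(h):.2f} s, {fall_frames(h):.0f} frames, "
              f"{impact_speed(h):.1f} m/s at the bottom")
```

Doubling the height does not double the screen time, because fall time scales with the square root of height; that is one reason a quick calculation helps when choosing a building height for a stunt.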

In another scene, Captain America tries to hold a helicopter back by its landing skids. Once the previs for that scene was approved, we provided diagrams that laid out camera crane positions on the large exterior helipad set. We worked closely with the special effects supervisor on determining the rig setup for the physical helicopter that tips over the edge. Many other scenes required techvis to work out camera positions, speeds, placement of actors and safe execution of stunts.

Image caption (partially lost): “…was doing and who would fight whom.” Copyright Marvel 2016 and courtesy of The Third Floor, Inc.

fxg: Where was your team based?

Gerardo Ramirez: My team worked first out of Atlanta and then with production on the studio lot after the movie had been shot. Austin’s team was based in Los Angeles.

We had a large previs team split between two locations during filming and a medium-sized postvis team during post production as we created postvis for editorial.

fxg: The ‘characters’ are very pose specific: Iron Man has body pose symmetry at some times (flying), but not during landing etc. How key is the previs to getting those poses? They relate back to the original comics, but clearly here they need to work from a movie perspective. Or is your work more blocking than, say, character stances or poses?

Gerardo Ramirez: During the previs and postvis process, we focused primarily on helping visualize the story and “selling” a specific action or idea quickly. The process allows us to focus on overall action versus the details of a specific pose or animation style, which are addressed by the final visual effects teams.

fxg: Spider-Man was made to be deliberately ‘youthful’ and less accomplished. Was that in at previs? For example his moves are less refined…

Gerardo Ramirez: It was very important to the directors that Spider-Man’s limited experience be evident in his action. This meant the fight scenes had to be designed and depicted with his experience in mind. Even though he’s stronger than other characters in the fight, he can still be outwitted by their years of experience.

fxg: Were there any challenges around a giant Ant-Man?

Gerardo Ramirez: ‘Giant-Man’ makes his first appearance here and, as with the Panther tests, we worked a lot with the film team to visualize how he might move and conceptualize that in motion before they began filming and visual effects. Since the character is so large, does he move slowly? The directors wanted clear rules for every super power, for example, that Giant-Man could be giant only for a limited amount of time before it would take a toll on his body.

Image caption (partially lost): “…characters to create postvis shots.” Copyright Marvel 2016

fxg: Was all the film done with previs or just the action sequences?

Gerardo Ramirez: We visualized most of the major scenes in the film, some that were more story driven and some that were more action and story driven. The Russos used various methods for planning and they used each department for what it does best. The early edits were a combination of storyboards, previs and stunt performance clips. Stunts would choreograph the hand-to-hand action while the storyboards would be used for character story moments and previs was used for the big action scenes that involved many digital characters.

fxg: How locked were your locations for those sequences? Could you work with accurate sets/location measurements in previs (LIDAR etc.)?

Gerardo Ramirez: All of the scenes we worked on were based on the real or digital location. We worked closely with the locations and art department to make sure we had the correct information for each set piece. We knew early on that we would need to provide technical information for the scenes we prevised so it was imperative that we visualized the action scenes within those parameters.

fxg: In terms of lensing and actual camera assumptions, is there a Marvel preferred look (heroes on an 85mm etc) or is this very much a directorial/DOP decision on a film by film basis? Given the films need to fit in a Marvel universe but the directors change between various films…

Gerardo Ramirez: We knew this film would pick up where Winter Soldier left off, so in the previs we used the same cinematography style as that film. The Russos wanted the camera to be with the characters in the action, so we created our previs with that in mind. This gave the previs a gritty, documentary style.

fxg: Anything else you’d like to discuss from your perspective?

Gerardo Ramirez: This is the ninth Marvel film I’ve worked on and it’s always a pleasure, with amazing characters and amazing teams to work with. The new challenges are fun. Black Panther, for example, was being introduced in this film, so it was interesting to be among the first to look at ways he might move and fight. We did many animation tests based on parkour videos, stunt performers, dancers and martial artists to find unique ways to capture the look of power that a panther has without going too far beyond a man who walks on two legs.

Image caption (partially lost): “…movement and fight styles for Black Panther, who makes his first big-screen appearance in the film.” Copyright Marvel 2016 and courtesy of The Third Floor, Inc.

Gerardo Ramirez: On this film it was also really unique to work with so many characters and with new combinations of characters. One of the first things we did with Dan Deleeuw was to pair up different combinations of characters: for example, what would it look like if Falcon had to fight Giant-Man, or if Wanda fought Black Panther? How long could you stay on any one fight, and how could you bridge between fights to move the action ahead?

It was also great collaborating to map out the different “logistics” of the action – with Dan, we actually put together a layout of the Leipzig Airport to map out who was going where when. We also had the opportunity to help design specific character-based props and vehicles, like the jet Black Panther flies near the end of the film.

Deluxe’s Method Studios

Method VFX supervisor Greg Steele oversaw the work of Deluxe’s Method Studios, one of the principal VFX suppliers on the film. His team delivered 440 effects shots in total across two sequences. The first is the Bucky escape sequence, where Bucky makes his way to the roof and tries to fly off in a helicopter, only to be stopped by Captain America. This sequence comprised about 50 shots. The second sequence was the bulk of the work and made up much of the third act, in which Bucky, Iron Man and Captain America fight it out inside the secret ex-Soviet base. This involved everything from Bucky’s arm to Iron Man’s suit, Cap’s shield, set extensions and much more.

The Berlin Escape

A helipad was built at the studio with a large ‘prop’ helicopter or ‘buck’ made from an old decommissioned AS350 helicopter on a hydraulic arm. The helicopter was substantial enough that Sebastian Stan (Bucky) could sit in it while it moved. The set was built outside to be filmed under natural sunlight, and was surrounded by green screen so it could later be placed in Germany. The intent was for this to be happening at a very specific place: next to the Paul Löbe building, close to the Reichstag. The Paul Löbe building contains roughly 1,000 offices and rooms for members of the German parliament.

Unfortunately, the beautiful location which had been shot with stills and drones was not allowed to be shown in the final film, so Method had to replace all the close buildings surrounding the helipad with full CG buildings. The team ended up only being able to use some reference for the far distant German skyline, but all the main background is fully CG. Steele was still happy that the production had shot at the location, as it did provide great lighting reference and scale “even if it did make things a bit more challenging.”

Given the nature of the action and interaction as Captain America stops the chopper from flying off, much of the foreground was also replaced. “We had to put the rotors on, replace parts especially where there was rigging,” says Steele. “We ended up replacing pretty much all of the helicopter in every shot.” The team animated in Maya and then moved into a Houdini pipeline, rendering in V-Ray or Mantra. The shots also included digital people and the river below.

When the helicopter goes over the edge, having failed to escape, both Cap and Bucky fall into the river and go under water. The underwater shots were set up in Houdini “in its own little Houdini pipeline… the murk in the water, the bubbles coming off the helicopter and the destruction as it bumps into the ground at the bottom,” relates Steele.

The sequence ran mostly through the Houdini pipeline, the exception being Bucky’s arm, which was rendered in V-Ray. Mantra was used as the team had previously done San Andreas (2015) in Mantra, and Steele says “it just seemed to make sense, especially with all the interaction with the volumetrics. Plus we didn’t have to do holdout mattes or the motion blur, as we used a deep workflow.”

The deep pipeline was something of an experiment, and Steele is the first to admit that it makes for a data-heavy workflow, but compositing supervisor Carlo Monaghan (The Hobbit: An Unexpected Journey) had used deep compositing before at Weta Digital and was keen to use the approach. On Ant Man, Steele and the team had explored a V-Ray deep compositing pipeline for a few shots in the pipe attack, but this was a more robust attempt across a whole sequence. V-Ray would be used almost exclusively for the third act, including Iron Man’s suit and all the environments.
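The appeal of a deep workflow is that each pixel stores a list of samples at different depths rather than a single flattened colour, so separately rendered elements (say, a helicopter from one renderer and water murk from another) can be merged by depth without hand-made holdout mattes. Below is a deliberately simplified Python sketch: it treats every sample as a point at a single depth with premultiplied colour, whereas real deep formats such as OpenEXR 2 also store depth ranges and volumetric samples that need splitting. The class and function names are hypothetical, not Method’s pipeline.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class DeepSample:
    depth: float                     # distance from camera
    rgb: Tuple[float, float, float]  # premultiplied colour
    alpha: float


def merge(*pixels: List[DeepSample]) -> List[DeepSample]:
    """Combine one pixel's samples from several deep renders, sorted near to far."""
    return sorted((s for p in pixels for s in p), key=lambda s: s.depth)


def flatten(samples: List[DeepSample]) -> Tuple[float, float, float, float]:
    """Composite depth-sorted samples front to back with the 'over' operator."""
    r = g = b = a = 0.0
    for s in samples:
        w = 1.0 - a  # how much of this sample still shows through what is in front
        r += w * s.rgb[0]
        g += w * s.rgb[1]
        b += w * s.rgb[2]
        a += w * s.alpha
    return (r, g, b, a)


# Hypothetical pixel: an opaque red helicopter panel 10 m away, seen through
# 20%-opaque bluish murk 5 m away, each rendered separately.
helicopter = [DeepSample(10.0, (1.0, 0.0, 0.0), 1.0)]
murk = [DeepSample(5.0, (0.0, 0.0, 0.2), 0.2)]
print(flatten(merge(helicopter, murk)))  # (0.8, 0.0, 0.2, 1.0)
```

Because the merge sorts by depth, the order in which elements are combined does not matter, and the murk correctly tints the helicopter behind it without any holdout matte being rendered.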

“The renderers are so great these days… picking which renderer comes down to casting based on the talent on the particular sequence as well as some things like volumetrics. For characters like Iron Man it just makes more sense to go with V-Ray, which has been the staple of our studio for some time now,” says Steele. “For the destruction stuff and volumetrics on the helipad it just made sense to go Mantra. We could have done it in V-Ray and are toying with doing a bunch of stuff (volumetric) using OpenVDB from Houdini and rendered in V-Ray, but we didn’t quite get that far in this show,” he explains.

The Third Act

One of the rendering challenges in the third act was getting Iron Man’s color right. The asset was shared with ILM and rendered by Method in V-Ray. “It was one of those things where we got the asset, the first work-in-progress asset, and almost immediately we had to do a teaser trailer,” says Steele. “We took all the lookdev and all the things we were given and kind of made it work!”

The team delivered two shots for that trailer and learned a lot about how Iron Man would look in the dark Russian set. For this film’s version of the iconic hero, the production wanted “more of a classic Iron Man look” rather than the really glossy car-paint look Iron Man had in the previous Avengers movie. In other words, more like the first and second Iron Man movies, where his suit was a little duller and a little more beat up: “less of a car,” laughs Steele, recalling the process. “We’d get notes that he looked ‘wet baby’ when he was too glossy. So even though we were using the same shaders and the same lighting techniques per shot, we spent a lot of time getting his suit surface properties right.”

Lighting has always been an issue for Iron Man’s suit, as it is hard to maintain the right color when the suit naturally picks up colors from its environment. The team had to be very careful with the character’s own repulsor weapon when it fired, making sure its reflection in the specular did not make the suit look wrong.

As the team had worked with other vendors on sharing assets on Ant Man, they had learned to ask for the various AOV layers so they could break down exactly what was happening in order to match. “ILM was very accommodating,” says Steele. “They did a nice little write-up of how they were putting their work together and we saw right away, funnily enough, that their AOVs were all out of V-Ray, which is what we were using. So we were able to match them perfectly.”
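Requesting AOVs makes a lookdev comparison diagnosable: additive lighting AOVs can be summed back to the beauty pass, and comparing them pass by pass shows which component differs between two vendors’ renders. A minimal single-pixel sketch follows; the AOV names are illustrative, and which render elements sum exactly to the beauty depends on the renderer and its settings.

```python
# Illustrative AOV names; the exact set that sums to beauty varies by renderer setup.
LIGHTING_AOVS = ("diffuse", "specular", "reflection", "refraction", "self_illum")
BLACK = (0.0, 0.0, 0.0)


def rebuild_beauty(aovs):
    """Sum additive lighting AOVs channel by channel to reconstruct the beauty RGB."""
    return tuple(
        sum(aovs.get(name, BLACK)[c] for name in LIGHTING_AOVS) for c in range(3)
    )


def aov_differences(ours, theirs):
    """Per-AOV RGB difference between two renders of the same shared asset."""
    return {
        name: tuple(o - t for o, t in zip(ours.get(name, BLACK), theirs.get(name, BLACK)))
        for name in LIGHTING_AOVS
    }


def largest_mismatch(ours, theirs):
    """Name of the AOV whose values differ most, i.e. where to start matching."""
    diffs = aov_differences(ours, theirs)
    return max(diffs, key=lambda name: max(abs(v) for v in diffs[name]))


if __name__ == "__main__":
    # Hypothetical samples of the same suit pixel from two facilities.
    ours = {"diffuse": (0.20, 0.05, 0.04), "specular": (0.30, 0.28, 0.25)}
    theirs = {"diffuse": (0.20, 0.05, 0.04), "specular": (0.12, 0.11, 0.10)}
    print(rebuild_beauty(ours), largest_mismatch(ours, theirs))
```

In this made-up example the beauty mismatch traces to the specular pass rather than the diffuse colour, which narrows the problem to surface glossiness.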

The team first matched their suit to ILM’s suit in the bright sun environments of the airport. Steele says it was “impossible to tell the difference. Then we took the same setup into our dark environment and it looked totally different! So then we had to re-engineer it so it felt the same as the outside suit.” This was not due to a bug or problem, but simply the reality of such a suit under vastly different lighting; the team had to rebalance it so that it did not look too red or too purple.

The sequence was shot at Pinewood in Atlanta, where a huge set was built for the end sequences (Ant Man was shot there and Guardians 2 is now being shot there). To get the right physicality for Robert Downey Jr., the actor wore what was called a ‘displacement suit’. This was a special piece of stunt Iron Man armor built so that any interactions would happen at the right point in space relative to Downey’s body, with no expectation that this prop armor would be seen in the final film. Without it, he might have leaned closer to a wall than the armored character could. The only issue was that this special rig did not have a neck piece, so Method had to blend very closely up to his neck. Steele says this is one of the only things they would do differently.

Legacy did actually build a suit for the stunt man to wear on set while filming the end fight scene, but the team found that a partial suit from Iron Man 2 was better reference. This less glossy partial suit was filmed in each camera setup. “For every setup we’d do the clean plate, the HDR (bracketed Canon 5D stills with 7 exposures), the grey balls and then we’d walk the old suit through, which was really cool,” Steele explains.
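Bracketed stills become an HDR by merging the exposures: each bracket estimates scene radiance as pixel value divided by exposure time, and the estimates are averaged with weights that distrust near-black and clipped values. This sketch assumes the values are already linearised (for example, from raw files) and scaled to 0..1; JPEG brackets would first need their camera response curve recovered, as in Debevec and Malik’s method. The seven one-stop exposure times are hypothetical, since the article does not give the bracket spacing, and the function names are illustrative.

```python
def hat_weight(v):
    """Trust mid-tones most; values near 0 (noise) or 1 (clipping) get little weight."""
    return 1.0 - abs(2.0 * v - 1.0)


def merge_hdr(values, exposure_times):
    """Merge one pixel's linearised bracket values (0..1) into relative radiance."""
    num = den = 0.0
    for v, t in zip(values, exposure_times):
        w = hat_weight(v)
        num += w * v / t  # this bracket's own radiance estimate, weighted
        den += w
    if den == 0.0:
        # Every bracket is black or clipped: fall back to the shortest exposure,
        # which gives 0 for black and a lower bound for clipped highlights.
        i = min(range(len(exposure_times)), key=lambda k: exposure_times[k])
        return values[i] / exposure_times[i]
    return num / den


def simulate_brackets(radiance, exposure_times):
    """Idealised linear sensor: value = radiance * time, clipped at 1."""
    return [min(radiance * t, 1.0) for t in exposure_times]


if __name__ == "__main__":
    times = [2.0 ** k for k in range(-6, 1)]  # seven hypothetical 1-stop brackets
    for radiance in (0.5, 2.0, 40.0):
        print(radiance, merge_hdr(simulate_brackets(radiance, times), times))
```

A bright highlight that clips in the longer brackets is still recovered from the shorter ones, which is the point of shooting a bracket set for lighting reference.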

The production had all the sets scanned with LIDAR, and Method also had a team of four of its own people to measure and record each environment. This came in handy as there were extensive set extensions and relighting.

Bucky’s metal arm was also a shared asset. Method had not worked on Winter Soldier, so the team did some work to get the metal arm to match. “It was a fun little asset to work with,” says Steele. “It has these hyper-real little pieces, like near his waist, for example.” The team ended up adding additional masks and scratch maps. Interestingly, when the hand was used in the earlier helicopter sequence, the team needed an extreme close-up, and up to this point Bucky’s hand had not needed to be seen like this, as he normally wore a glove. When the team ‘took off’ the digital glove, they discovered the hand was only partially finished from a texturing point of view, so Method worked on the top of his hand and then sent that out to the rest of the vendors.

On set, Sebastian Stan wore a Legacy arm section, but as it sat over the top of his real arm it was naturally a bit bulkier than Bucky’s replacement arm needed to be. This silicone arm was removed in post and replaced with the digital version. Method had expected the arm to be more awkward to remove and replace, but it worked out very well thanks to the object tracking team doing a very accurate job tracking the Legacy arm.

Humorously, Method had to add Bucky’s arm back into some shots for the international trailer. The arm has been blown off by that point in the film, and its absence was already prompting intense curiosity from fans picking apart the trailers before the film’s release. In fact, a lot of shots in the trailers are not in the film; instead they were inspired by the actual shots but composed differently for the trailers.

For Captain America himself, the majority of the work was the shield. While he had a shield on set, the prop was not quite as dramatic and impactful as the filmmakers wanted. “We pretty much replaced every shield in every shot we did,” comments Steele. “That digital asset was from the first film I think, from DNeg back in the day, so we took that and ‘upgraded it’. In the end, ILM had to put a Panther claw mark in it… so that put a nice little set of grooves in it, so we got those and integrated them into our pipeline.”

The environment work in the end fight sequence was broken up into three primary sets or locations inside the Russian base. Each had its own constraints and all required some set extension. The first was the main Stasis Chamber, a huge set with dead Winter Soldiers in it. While vast, it had only about two floors’ worth of set height, so the rest had to be digitally extended.

The second was the decommissioned missile silo, which was built about three floors high. Here the set was circular, with just over 180 degrees built, so there were both set extensions and full digital builds. “Due to the rigging, blue screen and set height, it was just easier in many cases to lift the actors off the real sets and fully replace everything around them with a full digital environment,” says Steele.

Here the aim was not to relight or alter the set but simply to have much more of it, in any direction. The virtual set was therefore modelled and lit as closely to the real set as possible. As the digital set was lit with the ‘same’ lights the crew used on set, the actors matched back in seamlessly. The challenge was that the physical set was very detailed, including a sort of heat-resistant padding.

Steele says that to handle the complexity, the team very successfully used V-Ray proxies, “which means we could render very quickly. For example, we could get an entire render out in under 6 hours, even looking way up or down, which was pretty brilliant given the number of polys we were throwing at it.” This is despite each level having six or seven lights, with at least ten levels visible, all ray-traced with multiple bounces, plus the character. “It was fun to push the technology of our pipeline with V-Ray and it worked out really well,” says Steele.

The last set was at the bottom of the silo. It was a smaller 20ft x 20ft set but required views of the environment outside the doors and blast vents. The team used Norwegian plate photography for the outdoor matte paintings and added some 3D snow. The main challenge was enhancing the lighting on the characters and on the set. Almost every shot was relit to get a richer, more rounded and sculpted look to the light. “In the original photography it was a little bright in there so everyone wanted to bring it down a bit,” says Steele, “which allowed Iron Man to be more backlit when he was menacing and more front-lit when he was more vulnerable. It worked out really well.”

Method’s work on the film was roughly equally divided between their LA office and their Vancouver office. Much of the modelling was done in LA, but most of the helicopter assets were made in Canada.

Steele speaks extremely highly of the work of Dan Deleeuw, the film’s visual effects supervisor, who earned an Oscar nomination for Captain America: The Winter Soldier alongside Russell Earl, Bryan Grill and Dan Sudick. Deleeuw previously worked on Marvel’s Iron Man 3 as the production overall visual effects supervisor. His team provided outstanding set and data pipelines and managed the complex multi-facility vendor setup extremely well. For example, even details such as the 17 different levels of damage to Iron Man in the story had to be precisely managed, with multiple companies working on shots in an edit that could change as it came together. It was not as if there was just one Iron Man asset that was signed off on and then used for all shots across all facilities.

ILM

The big epic battle of the film actually takes place in the middle of the film, as both sides face off at the airport.

ILM was tasked with the main fight sequence that ends the second act of the film. The sequence runs for approximately 20 minutes as the two sides of the civil war square off in Germany. ILM has a long-standing relationship with the Marvel characters, and on Civil War it had to provide both visual effects/environments and digital characters.

Russell Earl, vfx supervisor, headed the ILM team that handled the epic battle. The sequence grew to be a largely fully digital sequence. “We ultimately ended up replacing 99% of everything that was shot,” says Earl, with the actors being lifted from the background. Originally both Spider-Man and Black Panther were planned to be photographed elements “but we ended up almost completely replacing them (when in costume).”

ILM built a fully digital airport and surrounding area. All the actors were on set, but each of the leads had at least one if not two stunt stand-ins. Chris Evans was replaced with a digital double in only one or two shots, but his shield was almost always replaced. By contrast, for much of the fight, Iron Man’s mask is down and the character is fully digital. The team chose V-Ray as the renderer, influenced by their past experience and the knowledge that there would be both characters and architecture. Earl wanted to ray trace everything and light the sequences as naturally as possible. One of the huge parts of the sequence was the airport itself and its various planes and vehicles. The generalist/environment team members wanted to use V-Ray, and so “it made sense to do it all in V-Ray,” says Earl, who really likes the look his team can achieve in the renderer.

The approach Earl took was to bring up all the shot elements together. Rather than finishing one part of the VFX while other parts were not yet ready, he advocated combining all the parts so that the work could be judged overall. Some projects use a more sequential model that gets each stage in the pipeline done before the shot moves to the next stage. “We worked to get everything up to a certain level, in all areas, and then we’d evaluate it and then go lift it all again… to again get it all better overall… until we got to a point that it was looking how everyone wanted it.”

ILM started on the film a year ago (May 2015) with the first shots being made for D23 which then “bled into the early trailers” explains ILM Animation Supervisor Steve Rawlins.

For the character work, ILM had to create digital stunt doubles, and it also created detailed character animation for Iron Man, Ant Man, War Machine, Black Panther, Vision and Spider-Man. All of these characters are masked while fighting, with the exception of Vision, who was a hybrid of the actor Paul Bettany for closer shots and a fully CG Vision for more distant work. All assets, from the characters to the airport, were ILM’s assets, although ILM shared them with other facilities such as Method to allow for continuity with other sequences.

While ILM has worked on some 13 Marvel films, Rawlins pointed out that in every film Iron Man gets a new suit, with new design variations and new characteristics that affect not only the shaders for the look but also how it animates. In this film, early concepts had Iron Man’s suit covered in tiny thrusters or stabilizers. This would “make him more elegant and be able to stabilize and change direction without having to use his arms or legs as much,” says Rawlins. “But that idea went away fairly early as these directors have a very strong live action bent to what they do. They were keen to have Iron Man get back to Iron Man 1… get some more of the physicality back into this film.”

The team even referenced real life “flyboard” type jetpack/hoverboard which uses its connected propulsion system to drive the flyboard through air and/or on water, just to get some of the real world balancing and physics into the shots. “There was actually more that went into him in terms of development (Iron Man) than you might have expected for such an already defined character,” points out Rawlins.

Unlike Iron Man, Black Panther was completely new. While Chadwick Boseman played both T’Challa and Black Panther, in this action sequence most of the shots involved a stunt man in a suit, who was then tracked and replaced with a fully digital Panther. His movement is primarily hand-animated and matched to the stunt performer. There was some motion capture and blending of different takes, but on the whole the team matched to the performer unless he was doing extreme jumps or falls.

Even in shots where the practical suit was seen in close-up, it was altered. The costume received both beauty work to remove wrinkles and subtle design changes. Similar work had to be done with Falcon, but with the additional complexity of wire and rig removal. Also, any time Falcon’s wings are seen, the wings, and most likely Falcon himself, are digital.

This sequence also introduced Spider-Man in his Tony Stark high-tech suit. A complete fan favourite, Spider-Man was carefully animated so that his movement looks slightly immature. “He is not hitting his iconic Spider-Man poses yet; he is more of an amateur at this stage,” explains Rawlins.

This film’s version of the Spider-Man costume was carefully designed and crafted. To give the character more expressiveness, the suit’s eye patches received particular attention. The suit has an iris system that allows the black-framed surround of the eye patches to animate and express emotion. In the comic books this happens across still frames, but here the ILM team needed a rig so the eyes could animate.

The result is a high-tech iris that can slide over the white fabric of the eye patches. Rawlins explains that “it was used sparingly. It is not used all the time…just when he is using his spidery-sense. It is kind of set up in the film where Peter Parker talks about his senses and how he tries to control them, focus them in, (but) at that point he has a pair of glasses or goggles. And (later) it is the Tony Stark high tech suit version.”

Spider-Man was exported from Maya into Zeno for the cloth and muscle simulation, from there to Katana, and then rendered in V-Ray. “We had these huge renders of the full airport set and it looked just gorgeous. Outside with full global illumination, lit just like it was on set, right down to cloud shadows that matched the silks (transparent lighting cloths suspended over the actors) used on set,” says Rawlins.

The ILM team did not have much facial animation in the sequence, as most characters were hidden by masks. But when it came to Spider-Man, rather than posing ‘expressions’ on the face of the mask to hint at the emotion underneath, Rawlins consulted the ILM rigger who had worked on Ultron. Instead of using a facial library on the mask, the rigger explored having a face under the mask that would drive the mask more correctly. This led to a digital Tom Holland face that could, for example, open its mouth, letting the viewer see that movement under the cloth of the mask.

Because of the subtle nature of the ‘facial’ animation, the skin-tight suit, the simulation, and the shading, final sign-off on the animation was not possible until the final renders were done. The animators animated the basic human form, which the muscle simulation then added to, and given the fabric properties, even small changes to the lighting or the cloth simulation could change Spider-Man’s expression. “Instead of saying a shot is ‘final’ in animation, we would call them ‘done pending render.’ ‘Cause you know once you see it is rendered you know it may change… it is the only way we can work with this level of nuanced performance,” says Rawlins.

While the animation was keyframed, the ILM team also used their own motion capture stage to capture a stand-in double, especially for body animation or posing. For example, there is a scene filmed in Germany where Spider-Man is hanging off the side of a structure and talking to Falcon. On set, Tom Holland was on a piece of scaffolding so he was at the correct eye line while delivering his lines. Holland wore an i-mocap (grey) suit and also had a head capture rig on so that ILM could get footage of his face.

He acted out the scene but, of course, his body position was not what it would have been had Spider-Man really been ‘attached’ to a wall. So while the line was recorded as production sound, the body was animated weeks later. Back at the ILM mo-cap stage, the team had one of their own animators strike the spidery pose on an inclined ramp, steep enough to give an idea of weight but not so steep that he would slip off. In animation this was rotated into a vertical pose and blended with Holland’s acting performance and delivery of the line of dialogue.
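The ramp-to-wall step is, at its core, a rigid rotation of the captured pose. The sketch below is a minimal illustration under stated assumptions, not ILM’s tooling: it assumes y-up coordinates, a ramp whose bottom edge lies along the x axis through the origin, and a ramp angle measured from the horizontal. The joint names are hypothetical, and the blend with Holland’s performance is not shown.

```python
import math

def rotate_about_x(point, angle_rad):
    """Rotate an (x, y, z) point about the x axis by angle_rad."""
    x, y, z = point
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (x, y * c - z * s, y * s + z * c)

def ramp_pose_to_wall(joints, ramp_deg):
    """Stand a pose captured on an inclined ramp up against a vertical wall.

    Assumption: the ramp hinges on the x axis and rises at ramp_deg above
    horizontal, so its up-slope direction is (0, sin, cos). Rotating by
    (ramp_deg - 90) degrees about x maps that direction to straight up (+y),
    turning the ramp surface into a vertical wall facing -z.
    """
    phi = math.radians(ramp_deg - 90.0)
    return {name: rotate_about_x(p, phi) for name, p in joints.items()}

# Hypothetical joints captured on a 30-degree ramp: one point 1 unit up the
# slope, one point 1 unit off the ramp surface along its normal.
theta = math.radians(30.0)
captured = {
    "hand": (0.0, math.sin(theta), math.cos(theta)),
    "chest": (0.0, math.cos(theta), -math.sin(theta)),
}
on_wall = ramp_pose_to_wall(captured, 30.0)
```

With these inputs the "hand" point ends up 1 unit straight up the wall and the "chest" point ends up 1 unit away from the wall along -z, so distances from the surface, and therefore the sense of weight captured on the ramp, are preserved.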

Getting Spider-Man’s swinging action right as he flies in on a web is very complex. There can be a clash between the physics and what the pacing of the action requires. To help, ILM has an automated pendulum tool in Maya that illustrates how fast something should move ‘in theory.’ This allows the team to find creative ways to meet directorial needs or editorial pacing and still keep things believable, even though an audience would be hard pressed to say why a swing that was inaccurate for real physics looked wrong. One creative solution is that the web has been deemed to be elastic, so the character can catapult himself to reach faster speeds and make more dramatic entrances.
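ILM’s pendulum tool is proprietary and its internals are not described here. As a rough sketch of the textbook physics such a tool would presumably illustrate, the code below treats the web as a rigid, massless, inextensible line with no air drag: the speed at the bottom of the arc follows from energy conservation, and the swing time uses the exact pendulum period written with the arithmetic-geometric mean (AGM), so it stays valid at large release angles. The function names are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2

def agm(a, b, tol=1e-12):
    """Arithmetic-geometric mean of a and b."""
    while abs(a - b) > tol:
        a, b = (a + b) / 2.0, math.sqrt(a * b)
    return a

def swing_timing(length_m, release_deg):
    """Return (speed at bottom of arc in m/s, seconds from release to bottom).

    Assumes an ideal rigid pendulum released from rest at release_deg
    from vertical.
    """
    theta0 = math.radians(release_deg)
    # Energy conservation: m*g*h = 0.5*m*v^2, with h = L * (1 - cos(theta0)).
    v_bottom = math.sqrt(2.0 * G * length_m * (1.0 - math.cos(theta0)))
    # Exact period: T = 2*pi*sqrt(L/g) / AGM(1, cos(theta0 / 2)).
    period = 2.0 * math.pi * math.sqrt(length_m / G) / agm(1.0, math.cos(theta0 / 2.0))
    return v_bottom, period / 4.0  # release to bottom is a quarter period

# Example: a hypothetical 20 m web line released 60 degrees from vertical.
speed, time_to_bottom = swing_timing(20.0, 60.0)
```

If editorial wants the character to cover that arc faster than the quarter period allows, a rigid web cannot deliver it; an elastic web that stores and releases extra energy, as described above, is one way to justify the extra speed.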

For Spider-Man’s web, ILM set up a system where the look came from the effects animation team, but the firing and timing were done in animation. “The web is really a critical part of the swinging,” says Rawlins. “Selling when the web was tight or loose is an important part of the language. He’d fire the web and swing…and maybe it would go loose for a second and then pull tight and that would kind of yank on his body. As the web line is animated…we would do that in animation and that would be passed along as just a curve or spline. If we could sell it in animation, it would go straight to the lookdev team. Otherwise it would go to the creature dev department and get simulated.” The web simulation was done in Houdini to make sure it was not too solid, not too glassy, and moved just the way the team wanted.
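To make the "tight or loose" idea concrete, here is a minimal sketch of how an animated web line could be classified and handed on as a curve. It is an illustration under assumptions, not ILM’s pipeline: the web is modelled as a one-sided spring (Hooke’s law, pulling only when stretched past an assumed rest length), and a slack web is drawn as a quadratic Bezier that sags below the chord using the shallow-parabola approximation arc length ≈ span + 8·sag²/(3·span), which is only approximate for steep chords. Function names, the rest length, and the stiffness are hypothetical.

```python
import math

def web_state(anchor, hand, rest_length, stiffness):
    """Return ("slack" or "taut", tension) for a web treated as a one-sided spring."""
    span = math.dist(anchor, hand)
    stretch = span - rest_length
    if stretch <= 0.0:
        return "slack", 0.0            # shorter than rest length: no pull
    return "taut", stiffness * stretch  # Hooke's law once stretched

def web_curve(anchor, hand, rest_length, samples=8):
    """Sample a quadratic Bezier from anchor to hand; sag it when slack.

    Points are (x, y, z) with y up. Sag is solved from the shallow-parabola
    approximation: excess length = 8 * sag^2 / (3 * span).
    """
    span = math.dist(anchor, hand)
    excess = max(0.0, rest_length - span)
    sag = math.sqrt(3.0 * span * excess / 8.0) if span > 0.0 else 0.0
    mid = [(a + h) / 2.0 for a, h in zip(anchor, hand)]
    # Offsetting the control point by 2 * sag puts the curve's midpoint
    # exactly `sag` below the chord midpoint.
    ctrl = (mid[0], mid[1] - 2.0 * sag, mid[2])
    points = []
    for i in range(samples + 1):
        t = i / samples
        u = 1.0 - t
        points.append(tuple(u * u * p0 + 2.0 * u * t * p1 + t * t * p2
                            for p0, p1, p2 in zip(anchor, ctrl, hand)))
    return points
```

In this sketch a web fired and briefly left loose returns "slack" with a visibly sagging curve; the frame it stretches past its rest length it flips to "taut" with a tension an animator could use to time the yank on the body.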

Hawkeye had arrows added, and Wanda had simulations added for her ‘magic’ power. To drive her super jump, simulation work was added to plate photography of Elizabeth Olsen (Wanda Maximoff / Scarlet Witch). As she jumped, the character transitioned to a digital double and then back again as she landed. In addition to this ‘take over’ by refined digital doubles, crude digital doubles were needed for most of the characters. These allowed the characters to cast shadows on the digital environment and let the team create passes for elements such as contact lighting on Olsen’s limbs once her magic thrusters were added digitally.

Every shot was filmed with at least a stunt performer in frame, so the camera was always framing something or someone real, which editorial prefers. And with so much being digitally replaced, having reference of performances such as Captain America’s Chris Evans fighting a stunt man (standing in for, say, Iron Man) is more valuable to the animation team than clean plates with no one in shot.

The fight sequence was filmed both at Pinewood Studios and at Germany’s Leipzig/Halle Airport, with the two shoots blended together through environment replacement.

Luma Pictures

Vincent Cirelli (Deadpool, Ant-Man, Avengers: Age of Ultron) was the Visual Effects Supervisor for Luma Pictures. The company was tasked with a series of shots of Bucky’s arm and the metal brainwashing chair. Luma had worked on the earlier version of the chair in The Winter Soldier. While the asset was similar, the team reworked the shaders and animation to match the needs of the new film. In total, the Luma Pictures team completed approximately 200 shots for this film.

The team also handled the breakout from the Task Force lobby as Bucky, the Winter Soldier, fights to escape. The team wanted the walls to look like concrete, but the actual walls on set were closer to plaster. Dan DeLeeuw made the decision to hang “grey sheets everywhere instead of green screen, which was wonderful for us,” comments Cirelli. “We were able to add the details of the concrete without having to deal with pulling full keys, green screen spill and all the problems you have with green screen location photography. That was really beneficial and I had not experienced that before.”

The approach worked particularly well because the grey values were close to the values wanted for the concrete. “It saved a ton of work and [was] a unique aspect of this film,” says Cirelli. The characters were either grey luma keyed or simply rotoscoped off the grey boards, and “it was excellent. We had no problems with edges, it was forgiving, we could add highlights and detail…it was seamless…it was one of the best things. I mean (other than) all the explosions and robots and everything, that was really innovative. Usually people just throw up blue screen and then we have [to] remove spill, with colour correction needed on the actors’ skins…(it’s) so much worse for the final film.”
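A luma key separates foreground from background by brightness rather than colour, which is why a neutral grey backing avoids green spill. The sketch below is a generic luma keyer, not Luma Pictures’ actual setup: it assumes linear RGB values in 0 to 1, uses the Rec. 709 luma coefficients, and pulls a soft matte from how far each pixel’s luma sits from the grey board’s luma. The tolerance and softness defaults are hypothetical. Because any foreground pixel whose luma happens to match the grey (for example, mid-grey clothing) is keyed out too, rotoscoping remains the fallback, as Cirelli notes.

```python
def rec709_luma(r, g, b):
    """Rec. 709 luma from RGB components in the 0 to 1 range."""
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def luma_key_alpha(rgb, grey_luma, tolerance=0.05, softness=0.05):
    """Matte value for one pixel: 0.0 = grey board (keyed out), 1.0 = foreground.

    Pixels within `tolerance` of the board's luma are fully keyed; alpha then
    ramps linearly to 1.0 over the next `softness` units of luma distance.
    """
    distance = abs(rec709_luma(*rgb) - grey_luma)
    alpha = (distance - tolerance) / softness
    return min(1.0, max(0.0, alpha))

def key_image(rows, grey_luma, tolerance=0.05, softness=0.05):
    """Apply luma_key_alpha to an image stored as rows of (r, g, b) tuples."""
    return [[luma_key_alpha(px, grey_luma, tolerance, softness) for px in row]
            for row in rows]

# Hypothetical 1x3 image: grey board, a bright highlight, an edge pixel.
matte = key_image([[(0.18, 0.18, 0.18), (0.9, 0.9, 0.9), (0.255, 0.255, 0.255)]], 0.18)
```

The soft ramp between `tolerance` and `tolerance + softness` is what keeps edges forgiving; a hard threshold would produce aliased, chattering edges on hair and motion blur.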

The team added concrete detail and executed a traditional set extension and environment build. The roto work was done in Nuke. The team has looked at other packages and specialist tools, but with the advances in Nuke, the benefits of having the roto tools integrated with all the other compositing tools outweigh any advantages a standalone package can offer.

Tracking was done in PFTrack, with the point cloud data exported to Nuke, which, for the same integration reasons, allows proxy geometry to be used directly in the composite. “Now with the production providing LIDAR, the tracking is so much easier,” explains Cirelli. “The proper geometry aligned with the LIDAR and the proxy geometry that we brought into Nuke.” A few years ago full 3D tracking wasn’t that easy, but with the advances in LIDAR and 3D tracking over the last five years, camera tracking has become much more reliable and robust, especially in programs like PFTrack. The team completed their work at 2K, even though parts of the overall pipeline were in 4K at various points in capture.

The team also created the new hybrid suit formation on Iron Man. This could well be a nod by Luma and the filmmakers to the nanotech ‘Bleeding Edge Technology.’ In the comic books, fans will know that Iron Man becomes more integrated with his suits; they become less armour and more something that combines with his skin. Of course, it may just be that Luma produced a cool new extension to the Iron Man folding suit that first appeared in Iron Man 2…only time will tell…

The scale of Captain America Civil War’s visual effects is enormous, given it is meant to be just one of the character films rather than the primary Avengers franchise. At FMX 2016 in Germany, Diana Giorgiutti, Executive Producer of Features at Luma Pictures and a former Marvel producer, made the point about just how much effects work Disney’s Marvel Studios is now generating for the industry. “The Matrix was only 420 VFX shots. These days, it’s 2,000 [shots], certainly on a Marvel film, and they have gone up to releasing one more movie per year,” related Giorgiutti. Even as she was speaking at FMX, Luma had already moved on to Doctor Strange, coming out this fall. (Be sure to check out the cool church transformation sequence in the new trailer.)

Images Copyright Marvel 2016