PIXAR at FMX

At FMX last week, Pixar’s Dylan Sisson gave a preview of the upcoming release of RenderMan 22, featuring advanced light transport, greatly improved interactivity for artists, and integration with Pixar’s Universal Scene Description (USD) in version 22.1.

Given that this year is the 30th anniversary of RenderMan, Sisson also looked back at some of the history of the product and gave a sneak peek at what is coming beyond the next release.

Dylan Sisson is a technical artist with over 20 years of experience in animation and VFX. Sisson joined the RenderMan Products Group at Pixar Animation Studios in 1999, where he has been engaged in the development and evangelization of RenderMan.

Prior to Pixar, Dylan Sisson created animation and VFX for games and commercials. Today he is still an active artist, and the creator of several award-winning independent animated shorts. (Dylan is also the guy responsible for the creation of the famous RenderMan Walking Teapot toy.) As he is both a company guy and a really good artist in his own right, his talks are always one of the best ways to appreciate the current roadmap for RenderMan.

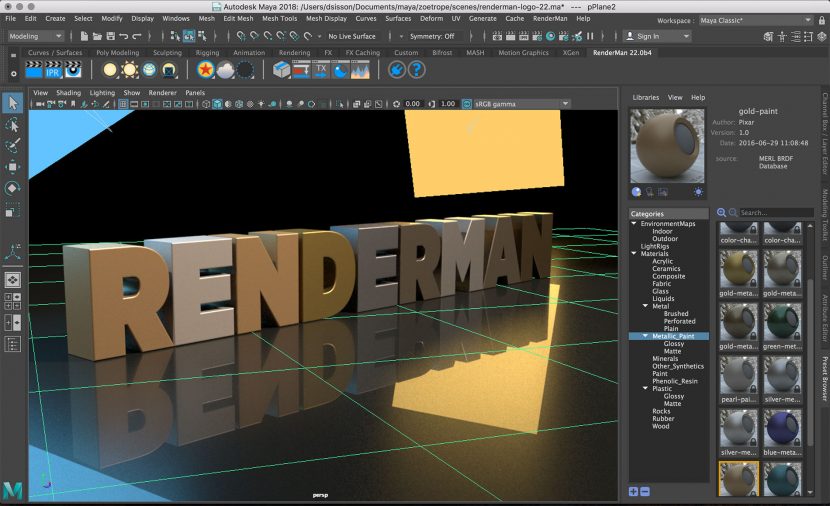

RenderMan Version 22 brings both technical innovations and speed improvements. RenderMan for Maya has also been completely rewritten and modernized, significantly enhancing the experience for artists with powerful new workflows.

Bao

As part of his talk, Sisson was able to screen for the FMX audience the European premiere of Bao, Pixar’s new animated short, which will appear before the new Incredibles 2. It is stunning, and another significant cultural contribution to Pixar’s increasingly diverse slate of projects.

In Pixar’s all-new short, a dumpling springs to life as a lively, giggly, dumpling boy, giving an aging Chinese mom another chance at motherhood.

Bao is Domee Shi’s directorial debut. When Domee Shi was a little girl growing up in Toronto, her mother treated her like a precious dumpling, constantly making sure she was safe and didn’t wander away. That maternal care inspired the story behind Pixar’s latest short film, which was rendered in RenderMan as a fully physically based path traced project. The company is now 100% committed to RenderMan as a Physically Based Path Tracer.

Director Shi joined Pixar in 2011 as a story intern. It was when she was working on Inside Out in 2015 that she decided to create her own side project.

Lessons from Coco

Like Bao, Coco is both a healthy celebration of a different culture and a technically complex project that allowed the RenderMan team to extend the renderer and solve new, complex production problems. Those high-end production lessons have been packaged up into new tools inside version 22. Pixar’s production issues always feed the RenderMan team’s R&D agenda, as we highlighted in our fxguide story on Coco.

In Coco, big sets, large crowds and vast numbers of lights all led the developers to once again expand the limits of what RenderMan could handle. For example, in Coco there were 8.5 terabytes of cache data for crowds in the afterlife. As we documented, the Lightspeed team worked in concert with the RenderMan team to address this in a world with over 2334 practical lights, including 729 point cloud lights (equal to millions of individual lights). A direct result of Coco is that RenderMan’s Light Discovery algorithm was greatly enhanced.

The Incredibles 2

The next film from Pixar is the highly anticipated Incredibles 2. This film has also pushed the boundaries of what can be done technically, and some of these innovations were previewed by Sisson in Germany last week.

The materials on the suits of the Incredibles are much more complex than in the original film. They look very similar but contain much more detail, which can be seen in the closeups. This comes from the new microfacet details in the cloth. “The microfacet detail is created with Bump to Roughness. It’s an area of recent research in a number of papers, which Pixar has implemented in our RIS bxdfs,” explained Sisson. (You can read more about Bump to Roughness in this Pixar Research paper; see section 2.4.) Currently Bump to Roughness can be implemented through custom shader patterns, and there is an OSL example implementation provided on Pixar’s tech memo page. “We plan to productize it, by adding it to our default shaders, but this work is non-trivial and will follow after release 22,” he added.
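Sisson did not spell out the maths in the talk, so the following is only a rough sketch of the general idea as it appears in slope-moment approaches in the literature, not Pixar’s bxdf code. It assumes a 1D height field, a Beckmann-style roughness (where the squared roughness is twice the slope variance), and a hypothetical helper name, `filtered_slope_and_roughness`. When fine bump detail is averaged away under a pixel footprint, the variance of the lost slopes is folded back into the roughness, so the highlight widens instead of the detail simply vanishing.

```python
import math

def filtered_slope_and_roughness(heights, spacing, base_alpha):
    """Average the bump slopes under one footprint and widen the roughness.

    heights    -- bump heights sampled across the footprint (hypothetical input)
    spacing    -- distance between neighbouring height samples
    base_alpha -- Beckmann-style roughness of the underlying material
    """
    # Finite-difference slopes of the bump profile.
    slopes = [(b - a) / spacing for a, b in zip(heights, heights[1:])]
    mean = sum(slopes) / len(slopes)                    # first moment
    second = sum(s * s for s in slopes) / len(slopes)   # second moment
    variance = max(second - mean * mean, 0.0)           # detail lost by filtering
    # Beckmann slopes are Gaussian with variance alpha^2 / 2, so variances add.
    alpha = math.sqrt(base_alpha ** 2 + 2.0 * variance)
    return mean, alpha

# A flat patch keeps its base roughness; a bumpy weave gets rougher.
print(filtered_slope_and_roughness([0.0, 0.0, 0.0, 0.0], 1.0, 0.1))
print(filtered_slope_and_roughness([0.0, 0.02, 0.0, 0.02, 0.0], 0.1, 0.1))
```

In a production shader the two moments would typically be prefiltered into texture mip levels rather than computed per sample, which is part of why adding this to default shaders is described as non-trivial.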

Sisson pointed out that the shader (bxdf) presets that ship with RenderMan are based on production presets that the studio uses for its award-winning feature films. The majority of RenderMan’s shipping presets are based on the MERL BRDF Database, and are very similar to the ones used in The Incredibles 2, for example. But Sisson was quick to point out that “the presets are simply starting points, the shader networks do get very complex. Pixar Production and RenderMan share the same BXDFs and many of the same patterns too”.

The shaders, lights and blockers in version 22 are the same as in the previous release. This includes the recently released Pixar production shaders, such as the Pixar Marschner Hair Shader (see our fxguide story).

Note: there are also new toon shaders coming, made in concert with Lollipop Shaders.

RenderMan’s Architecture

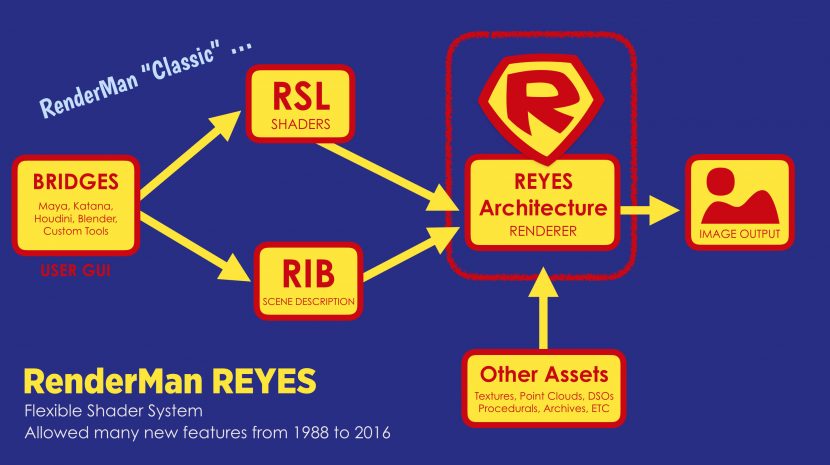

RenderMan Evolution : REYES

The advanced technology in RenderMan has evolved so it can deliver exceptional quality for a production of any size, from a Pixar feature or ILM VFX film to broadcast animation for television. Sisson gave a short technical history to explain this journey, starting with the pre-RIS ‘RenderMan Classic’ internal pipeline. In this older version of RenderMan, the pipeline could be thought of as shaders and a RIB scene description both feeding the REYES renderer. The RSL shaders and the RIB files were incredibly flexible, but not designed to be physically plausible by default, and came from a time when most optical effects, from global illumination to caustics, needed to be faked.

This classic RenderMan won numerous Oscars and worldwide praise in producing both animated and photoreal effects, but it relied very heavily on both the technical skill of the TD and the eye of a great artist to judge what was correct.

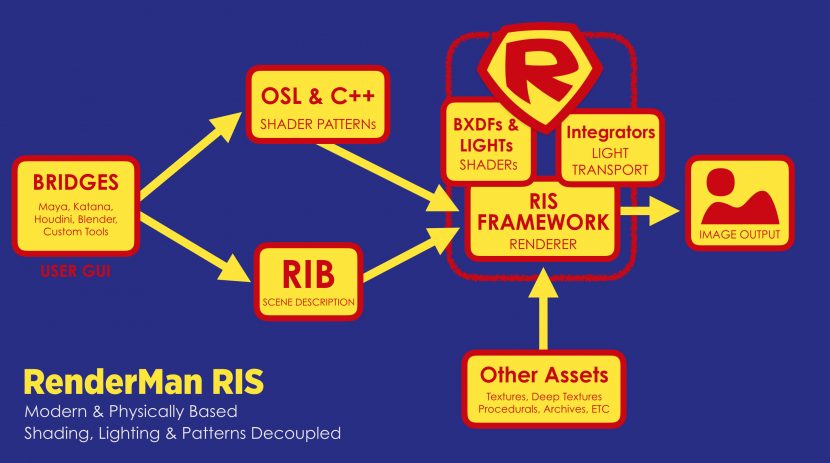

RenderMan Evolution : RIS

While this early approach worked well up until around 2016, RenderMan then evolved into a hybrid solution that allowed the use of the new RIS integrator. This second period of RenderMan’s history offered artists and TDs a modern physically based shading system, with OSL shaders but still with the older RIB files as the core of the scene description. RIS provided an enormous boost in solving what something would physically look like, and reduced the programming complexity of using RenderMan.

With RIS, global illumination worked straight out of the box and to improve things further, Pixar introduced interactive rendering to allow the rapid iteration of work by artists.

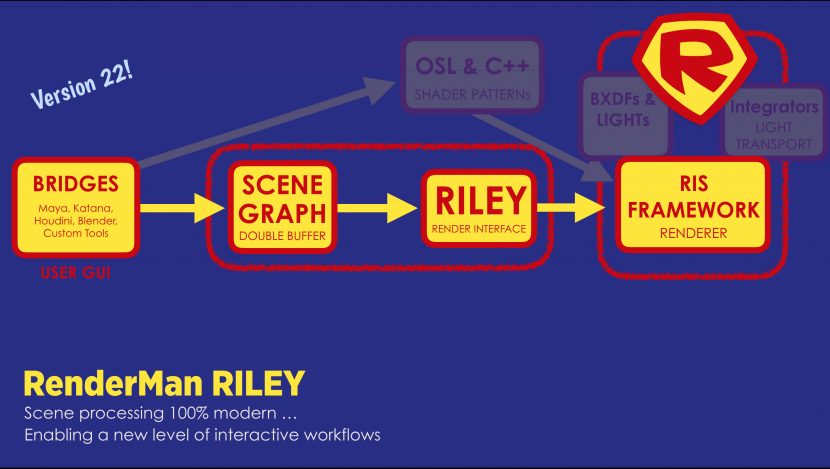

RenderMan Evolution : Version 22

In the last few years, Pixar has been developing and then promoting its Universal Scene Description (USD), but this was not an inherent part of the interim hybrid version of the internal pipeline.

With version 22.1 of RenderMan, the transition will be completed to a USD-based scene graph and a new Riley render interface that retires the RIB file double-buffer format.

The modern version 22 solution is not only cleaner but faster, and has less code. The legacy code was cut as RenderMan reduced its code base by 30%. Sisson described this as “the great dejunking of 2018”. The optimization of the code base for 22 includes the removal of over 60 attributes and options, along with a new modern render interface, Riley, which is much faster than the traditional RIB pipeline (note: current RIB workflows will not be impacted, as RIB is still supported).

ILM did independent testing of a complex scene with an extreme number of curves and found the render was 10 times faster than with the older system (a beta test render of a dense block with hundreds of thousands of curves, comparing version 21.7 with version 22 on a test setup). Other scenes heavy with curves have shown 2x to 6x speedups. While this result won’t be universal across all scenes in RenderMan, version 22 does provide numerous performance enhancements from the ‘clean sweep’ of the code and new core improvements.

Version 22 also makes an enormous jump in performance for interactive rendering in the RenderMan menu or when using RenderMan as the Maya preview renderer. Overall the new system is anywhere from 10% to 2x faster, while using 10% to 30% less memory.

Sisson also flagged the latest RenderMan for Katana v3.0 support, with a multi-threaded scene graph, a multi-threaded Alembic pipeline and new light arrays.

Volume rendering has also been revisited, but Sisson commented that there was a lot more development work still to be done. In this next release, single-scatter and multi-scatter wispy volumes have been improved, which would be ideal for rendering, say, ‘god rays’ faster in a misty forest style shot.

Also new in Version 22 is a cleaned-up version of Cryptomatte, which has been supported as standard in RenderMan since version 21.7. Alembic support is also improved, particles are 2x faster, and there is faster feedback from modeling to lighting, with less time to the first pixel (time to first pixel is 20% to 10x faster). Even the installation of Version 22 has been made easier. All of which “allows artists to try more ideas and make better decisions and have fun again”, commented Sisson, “and who doesn’t want more fun?” he added.

In Maya there is a new RenderMan menu. This has a new-look UI that is easier and faster to navigate. In addition to both a new UI and new render settings, there is a much faster new AOV (Arbitrary Output Variables) system, which requires much less typing, and, most impressively, much faster Interactive Preview Rendering (IPR).

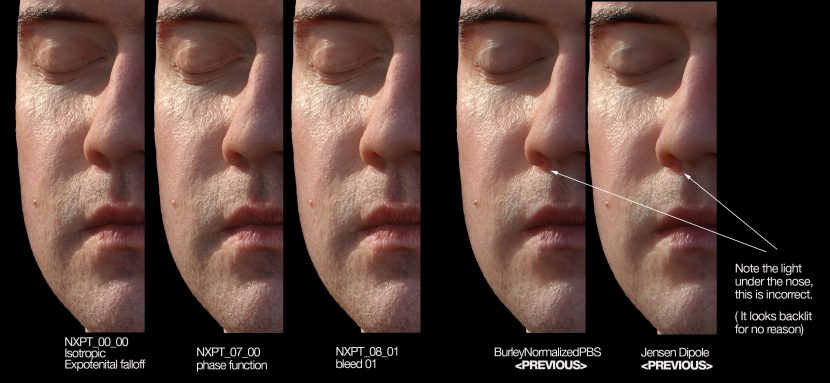

Sisson demoed all this on his old(ish) i7 16 GB MacBook Pro and it was quick and responsive, even when showing the new ‘real time’ subsurface scattering (SSS).

Beyond Version 22

After discussing version 22, Sisson showed some new research beyond v22 that will hopefully find its way into future versions of RenderMan. The most impressive of these is research headed by Pixar’s Christophe Hery into Manifold Walks. Hery joined Pixar in June 2010 from ILM. He holds the position of Senior Scientist at Pixar and we have featured his work (especially on SSS) before here at fxguide. Hery has already received two Technical Achievement Awards from the Academy of Motion Picture Arts and Sciences.

Manifold Walk

A Manifold Walk, or manifold exploration path tracing, is a way of solving extremely complex problems for path tracing, such as caustics. Such things often suffer from unusably slow convergence, which means one either gets a very noisy output, or the render takes an unreasonably long time to resolve satisfactorily. This is due to the complexity of incorporating specular volumetric scattering into the standard path tracing framework.

Sisson showed the new Pixar eye approach, which is used extensively on the characters in The Incredibles 2 (such as Helen above). “For The Incredibles 2, we also had to add controls to fake the refractions (with a stronger dedicated Manifold Walk IOR), so as to make the resulting gleam pronounced even on stylized and barely bulgy corneas,” explained Pixar’s Christophe Hery after the event.

The approach Hery and the team took is based on key research by researchers such as Wenzel Jakob. The idea is to analyze the high-dimensional differential geometry and use the resulting information to construct an algorithm that is able to “walk” around on it using a simple and efficient equation. This ‘manifold walking’ algorithm then makes up the key component of a type of Markov Chain Monte Carlo (MCMC) rendering method that computes lighting through “general families of paths that can involve arbitrary combinations of specular, near-specular, glossy, and diffuse surface interactions as well as isotropic or highly anisotropic volume scattering”, to quote Jakob’s 2013 Cornell University Dissertation.

Hery went on to explain to fxguide that the Manifold Walk is a refined type of Next Event Estimation: “not only were we inspired by Jakob and Marschner’s paper, but also by the pioneering use of it at Weta” (see this SIGGRAPH 2015 paper by Johannes Hanika, Marc Droske, and Luca Fascione). “This was re-implemented under my department in RenderMan by our intern Sebastien Speierer”, he explained. “As far as the technique is concerned, the idea is to displace (i.e. walk) slightly the point on the cornea to satisfy the SD (i.e. caustics) paths to the lights. Thus we create a better connection through refraction. We can even satisfy several levels of refractions, such as with glasses in front of the eyes, with the solver walking on multiple surfaces at once”.
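To make the ‘walk’ a little more concrete, here is a deliberately tiny 2D sketch of the idea Hery describes, and not RenderMan’s implementation. It assumes a flat interface along y = 0 standing in for the cornea, a single refraction, an illustrative index of refraction of 1.376, and hypothetical function names. Starting from a guess, Newton steps slide the point along the surface until Snell’s law is satisfied between the light and the shading point, which is the ‘better connection through refraction’ in miniature.

```python
import math

def snell_residual(x, light, shade, n_outside, n_inside):
    """Snell's-law mismatch for a refraction point (x, 0) on the interface y = 0."""
    lx, ly = light    # light position, above the interface (ly > 0)
    sx, sy = shade    # shading point, below the interface (sy < 0)
    sin_in = (x - lx) / math.hypot(x - lx, ly)     # signed sine, incident side
    sin_out = (sx - x) / math.hypot(sx - x, sy)    # signed sine, refracted side
    return n_outside * sin_in - n_inside * sin_out

def residual_derivative(x, light, shade, n_outside, n_inside):
    """Analytic derivative of snell_residual with respect to x (always positive)."""
    lx, ly = light
    sx, sy = shade
    d_in = math.hypot(x - lx, ly)
    d_out = math.hypot(sx - x, sy)
    return n_outside * ly * ly / d_in ** 3 + n_inside * sy * sy / d_out ** 3

def walk_to_refraction_point(light, shade, n_outside=1.0, n_inside=1.376,
                             tol=1e-12, max_steps=50):
    """Walk a point along the interface until the refracted path connects."""
    lo, hi = sorted((light[0], shade[0]))   # the solution lies between the two
    x = 0.5 * (lo + hi)                     # initial guess: straight-line midpoint
    for _ in range(max_steps):
        f = snell_residual(x, light, shade, n_outside, n_inside)
        if abs(f) < tol:
            break
        x -= f / residual_derivative(x, light, shade, n_outside, n_inside)
        x = min(max(x, lo), hi)             # keep the walk inside the bracket
    return x

light, shade = (-1.0, 2.0), (1.0, -1.0)
x = walk_to_refraction_point(light, shade)
print(x, snell_residual(x, light, shade, 1.0, 1.376))
```

The production version works on curved surfaces in 3D and, as Hery notes, can solve for points on several surfaces at once; the toy above only shows why a few solver steps can replace a very large number of randomly sampled paths that would almost never hit the exact refraction point.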

The team has just published an extension of this to the Pixar technical library as a tech memo and this will also make its way to the official PxrUnified integrator in the near future.

Below are tests Hery showed using the eyes of our Wikihuman model of fxguide’s own Mike Seymour. Doing caustics in the eyes is extremely expensive. In the two renders below, the effects can be easily seen. The first render shows the current solution in RenderMan and the second a new Manifold Walk solution. Note, both renders ran for exactly the same duration. When playing the clips, the difference in the amount of noise in the eyes is remarkable. Neither of these clips has any noise reduction applied at all. The noise in the first clip demonstrates how much slower it is to resolve to an acceptable solution without the new Manifold Walk.

Manifold Walk is planned for Release 22 as part of the new Pixar Unified Integrator and, as noted above with The Incredibles 2, it is currently used in production at Pixar.

This research was also shown by Pixar’s Christophe Hery in the Digital Humans track at FMX as part of Mike Seymour’s talk (more on that story coming soon). This research uses the same base Wikihuman model, done at USC-ICT, that was used in the SIGGRAPH 2017 MEETMIKE project. While the MEETMIKE project is a real-time Epic UE4 project, the broader work of the Wikihuman team (that Seymour and Hery are a part of) also incorporates research into production non-real-time rendering, such as explored here in RenderMan.

Current RenderMan technology

Above is the current RenderMan approach, and below is a new version using the Manifold Walk. Note how much less noise there is in the eyes.

Manifold Walk new version

Blockers

Sisson also showed a test that illustrates what is sometimes jokingly referred to as Pixar’s ‘secret sauce of lighting’, called Blockers. Blockers were introduced in 2016 and, as one can see below, they allow for complex lighting control that the Pixar feature film team uses to produce detailed, dramatic and controllable lighting, such as in the upcoming Incredibles 2.

The Blockers example above uses the Louise head model from Eisko.

Update on RenderMan’s upcoming XPU project

Sisson gave an update on the XPU project. This highly anticipated new approach was previewed at SIGGRAPH 2017 in LA. Sisson reported that in XPU it is now possible to have the CPU and GPU make identical pixels (see the two images below).

RenderMan XPU is a project to allow users to render on both CPU and GPU without quality differences. Scheduled for delivery after RenderMan 22, the hybrid render approach takes advantage of all available compute resources, harnessing GPUs when available to deliver massive speed to both interactive and final renders. Artists create one set of production assets, and RenderMan XPU exploits the compute power it finds, from desktops to farm machines.

Comparison of a CPU and GPU render (which are now functionally identical).

Below is a video from the Pixar RenderMan Science Fair at SIGGRAPH 2017, which first outlined how RenderMan is seeking to blend seamlessly between GPU and CPU.

Ahead..

The commercial beta of RenderMan was released last Thursday, April 26th 2018.

- RenderMan V22 should be fully released around June/July,

- RenderMan 22.1, with support for USD, will follow soon after that, and

- sometime in 2019 RenderMan XPU will be released.

Pixar is showing real strength with version 22, and the future roadmap looks strong. Siggraph 2018 should see significant new releases as well as some (well earnt) celebration of 30 years of innovation and commitment to R&D in Rendering.