Lost in Space is a new Netflix series based on the 1965 series of the same name, following the adventures of a pioneering space family whose spaceship veers off-course. It consists of 10 episodes.

After an extinction event threatens Earth, a number of Jupiter ships, carrying selected families, are sent to colonize a new world. The Robinson family is selected for the 24th such ship, and their Jupiter 2 crash-lands on an unknown but habitable planet. During their adventures Will encounters an alien robot and its crashed ship, and he befriends the robot.

Image Engine, with its long history of VFX droids and robots, was tasked with providing the alien robot in all its forms. While they did some other visual effects environment work, the focus of their work was the innovative robot. Image Engine was one of the key vendors on the project, delivering over 266 shots, primarily robot creature animation.

Original form

Given the various developments over the 10 episodes and especially the fight in the final episode, the droids needed to appear at varying levels of damage.

In Episode 1 we are introduced to the Robinson family and, unlike in the original 1965 version, the family robot was not part of the original crew and is not even from Earth. When Will Robinson finds a crashed alien ship, he climbs a tree and finds a heavily damaged robot. In the crash, the robot was thrown clear but split in two when it was impaled on a tree branch.

The first episode of the series had some of the hardest animation work for Image Engine. They did 87 shots in this one episode alone. Joao Sita was Image Engine’s VFX Supervisor for the series.

Will helps the robot reconnect to its base before its battery fades, and the two are engulfed by a forest fire. On set, the team had only a stand-in head for eyeline. The animators referenced a range of real-world things for the robot’s fading batteries, including an elderly man with a shaking hand.

The entire forest ended up being created by Image Engine. On set there were some flame bars, but given that the rate of the fire’s growth had to be carefully managed, it was easier to do the flames as digital fire.

Some shots were completely CG. Image Engine now uses Arnold for rendering, and the look development was done in Image Engine’s Gaffer. All of the texturing was produced in Mari. For a long time Image Engine was a 3Delight company, but the team has been really impressed with the imagery they have been getting from Arnold.

The robot model was built by another facility and shared with Image Engine. One of the complex aspects of rigging the robot for use at Image Engine was the muscles and sliding plates on the body. The overlapping, non-intersecting geometry of the sliding plates and the seemingly volume-preserving muscles were very complex to rig, but this was helped by the robot being technically ‘empty’.

With the robot whole, the two escape the forest fire, which is all CG apart from the green screen shots of Will Robinson. Image Engine had just finished working on a forest for Jurassic World, so the team was well prepared to build the CG forest environment. Sita points out that the new forest built for this show benefited from lessons learnt on Jurassic World. “On the film, we did the forest primarily with one person and a lot of hand placed items. On this show we spread around the work, … we also made it a lot less manual. We used Houdini not only to distribute the assets but also create the trees and foliage”.

Denys Shchukin was the FX Supervisor in charge of the team who created the fire and smoke effects, but the effects team also provided the forest building tools. Shchukin adds that “this is one of the cases where Houdini was not used just for effects. We created a library of different elements. We had a set of different trunks, different branches etc, and then procedurally assembled the forest”. This new approach allowed the effects team to work much faster. For example, they could build several different versions of the forest in a single day.
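The library-and-assembly idea Shchukin describes can be illustrated with a small sketch. This is not Image Engine's Houdini setup, which the article does not detail; it is a plain Python stand-in, and every name in it (`TRUNKS`, `BRANCHES`, `Tree`, `build_forest`) is hypothetical. It only shows why a seeded procedural assembly makes it cheap to produce several versions of a forest from one library.

```python
import random
from dataclasses import dataclass

# Hypothetical asset library; the names are placeholders, not real assets.
TRUNKS = ["trunk_a", "trunk_b", "trunk_c"]
BRANCHES = ["branch_a", "branch_b", "branch_c", "branch_d"]


@dataclass
class Tree:
    position: tuple   # (x, z) location on the ground plane
    trunk: str        # library trunk instanced at this location
    branches: list    # library branches attached to the trunk
    scale: float      # uniform scale variation


def build_forest(count, extent, seed):
    """Scatter `count` trees over a square of side `extent`, picking parts from the library."""
    rng = random.Random(seed)  # seeded so a given version can be rebuilt exactly
    forest = []
    for _ in range(count):
        position = (rng.uniform(0.0, extent), rng.uniform(0.0, extent))
        trunk = rng.choice(TRUNKS)
        branches = [rng.choice(BRANCHES) for _ in range(rng.randint(3, 6))]
        scale = rng.uniform(0.8, 1.2)
        forest.append(Tree(position, trunk, branches, scale))
    return forest


# Changing only the seed yields a different forest from the same library.
version_a = build_forest(count=500, extent=200.0, seed=1)
version_b = build_forest(count=500, extent=200.0, seed=2)
```

Because nothing is placed by hand, a new version is a parameter change rather than a rebuild, which is the kind of turnaround the team describes.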

The same approach was taken with the fire. The team could simulate iterations of the fire very quickly, via a library with different flames, varying wind directions and elements. Using this new instancing approach meant that whole shots could be ‘simulated’ in half a day. Martin Bohm, the Image Engine Lighting Lead, pointed out that this also helped lighting. “As the system was modular, it was always the same on every shot, based on instanced points. We were able in lighting to work on a system that was very reliable (consistent) and not shot specific”. Lighting and effects worked very closely together, so the lighting team heard about any changes or issues quickly, and because they also knew how the system worked, they were able to produce lighting setups very quickly.
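A rough sketch of what instancing fire from a library on points might look like is given below. The article does not describe the actual data layout, so the cache names, the `FLAME_LIBRARY` dictionary, the `instance_fire` function and the 48-frame offset range are all assumptions made for illustration. The point it tries to show is the one Bohm makes: every shot yields the same kind of record per point, so downstream setups do not need to be shot specific.

```python
import random

# Hypothetical library of pre-simulated flame caches, keyed by wind direction.
FLAME_LIBRARY = {
    "wind_north": ["flame_n_01", "flame_n_02"],
    "wind_east": ["flame_e_01", "flame_e_02"],
    "calm": ["flame_c_01", "flame_c_02", "flame_c_03"],
}


def instance_fire(points, wind, seed):
    """Assign a cached flame and a frame offset to each point; nothing is re-simulated."""
    rng = random.Random(seed)
    caches = FLAME_LIBRARY[wind]
    return [
        {
            "position": p,
            "cache": rng.choice(caches),
            # Assumed offset range, used to de-synchronise repeated caches.
            "frame_offset": rng.randint(0, 47),
        }
        for p in points
    ]


# A small grid of burn points standing in for points scattered on the forest.
burn_points = [(float(x), 0.0, float(z)) for x in range(4) for z in range(3)]
shot_fire = instance_fire(burn_points, wind="wind_east", seed=7)
```

Swapping the `wind` key or the seed changes the look of the fire without changing the structure of the records that lighting would consume.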

The robot, which appears to have rebooted, changes into a humanoid biped form (and flashbacks suggest the robot would not have been friendly had it retained its pre-crash memory).

The principal photography was shot on the RED Weapon at 8K. Image Engine had full access to the raw .r3d files and their metadata. The final show was delivered as a 4K master. Image Engine worked on the project for about 13 months with a team that peaked at 70 artists.

Human form:

On set there was an elaborate costume with an active face panel. The producers decided to replace the practical face with a new digital version that could be animated to be more expressive.

The physical suit was highly finished and appears to have been used for many shots in the show. The face mask section, however, was thought to be not expressive enough. In some shots just the mask was ‘face replaced’, and in others the whole robot was replaced.

Below: As with the original version, the humanoid version needed to be in various states of damage.

Without a face, the character animators looked for ways to indicate emotion. To help provide an additional animation tool to display emotion, Image Engine animated the back spine much like the hair on the back of a dog or wolf. (See above)

The suited actor, while used in many shots, could not walk in the heavy suit quite the way the filmmakers wanted, so for several scenes a fully digital replica robot was animated with a more robotic gait.

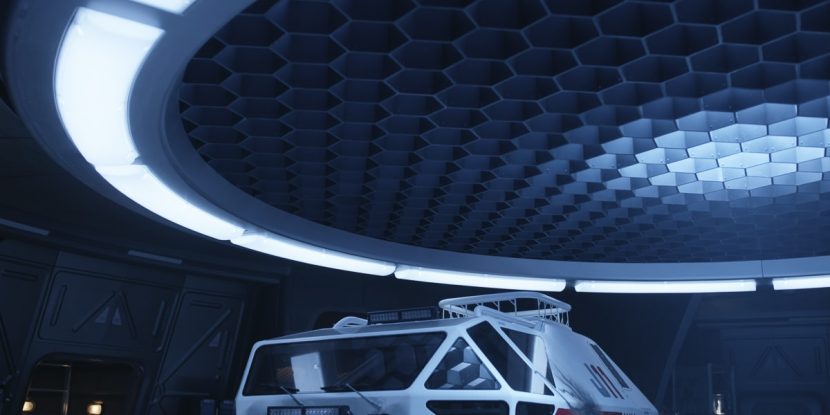

For this set sequence, given the highly reflective robot and surfaces, the Image Engine team re-projected and rebuilt the set in CG, which allowed for a physically accurate lighting solution. “The main set had approximately 8 primary lighting setups on the set, which we reduced to about 5 different setups, any of which the animators or TDs could access immediately in their digital replica,” commented Martin Bohm, the Lighting Lead on the show.

The team completed their work in OpenEXR at 4K. While Netflix shows are routinely required to be delivered in 4K, the team had not worked on an HDR / Dolby Vision grade. The HDR finish did not affect Image Engine’s work, but the final result took advantage of the dynamic range and produced an impressive final grade.

The Fight:

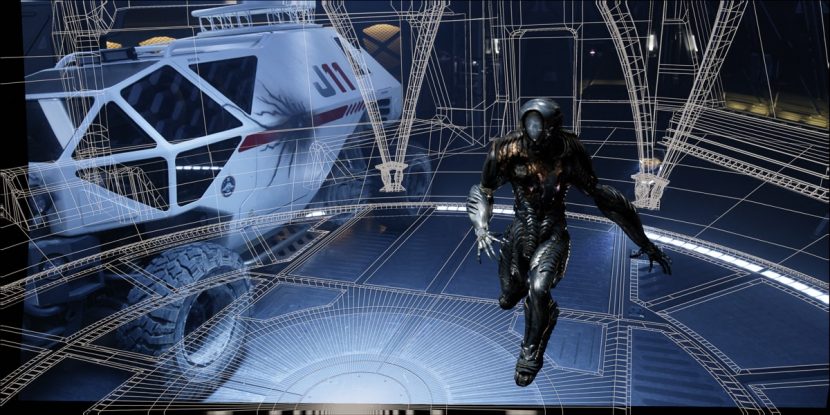

The final robot fight presented an unusual opportunity for Image Engine. When the final script was first delivered, the company was given the chance to previz and design the whole fight. For the company it was a rare chance to work on a massive sequence from previz to final output.

While the robot was primarily based on the movements of the actor in the suit, the animation team also had their own Xsens suit, which allowed them to do small motion capture studies to inform shots where the robot was all CG, such as during these key fight scenes.

Unlike Episode 1, where the environment and the characters were all CG, the team had plate photography with an actual hangar, rover and props for this sequence. While this environment ended up needing to be rebuilt in CG for lighting, the plate photography, HDRs and photogrammetry all proved extremely helpful in blocking and animating the fight sequence in Episode 10.

Environment and other VFX work

In Episode 2 a second Jupiter ship balances on the edge of an abyss after its crash landing. Image Engine helped with this fully CG ship and environment work.

Inside the ship, the team decided to shoot the studio work against green screen. They used 3DEqualizer to track the live-action plates and composited the shots in Nuke.

All 10 episodes of the show are now on Netflix.