Spherical Harmonics are an invaluable tool for production rendering and a common device in games, but few people really understand them or how they are used.

Spherical Harmonics (SH) are a data representation, nothing more. But, like the Fourier transform, the SH data transformation has allowed incredible images to be produced in a fraction of the time, and with massive data sets that only a few years ago seemed impossible to handle. We explain SH and the remarkable way Weta Digital in particular is pushing their use in production rendering. Spherical Harmonics are used extensively in many fields. As the name suggests, they form a basis of functions defined on the sphere. They have been used to solve problems in physics, such as heat equations and gravitational and electric fields, and in quantum chemistry and physics to model the electron configuration in atoms.

In computer graphics they have been used to model or solve:

- scattering phenomena

- volumetric scattering effects

- environmental reflections

- BRDF representations

- image relighting

- image-based rendering with controllable lighting

- atmospheric scattering, and more

© 2009 Twentieth Century Fox Film Corporation. All rights reserved.

Spherical Harmonic lighting, as defined by Robin Green at Sony Computer Entertainment in 2003, “is a technique for calculating the lighting on 3D models from IBL sources that allows us to capture, relight and display global illumination style images in real time. It was introduced in a paper at Siggraph 2002 by Sloan, Kautz and Snyder as a technique for fast realistic lighting of models.”

In the graphics community, saying that something was solved with “spherical harmonics” has become a kind of shorthand for a range of graphics solutions, but SH is not an algorithm; it is just a data representation. For example, “Precomputed Radiance Transfer” is an algorithm that happens to use SH. As important as they have become, few people apart from TDs understand SH. Their use is often thought to be complex, but that is normally just ‘the maths language barrier’. It is possible to understand the principle of SH without doing the maths, in the same way it is possible to understand MPEG encoding without knowing the complex maths behind it.

One of the first areas to use SH extensively was gaming. As Microsoft’s Peter-Pike Sloan presented at GDC in 2008, “while spherical harmonics may seem somewhat daunting, they are actually straightforward. They are the spherical analog to the Fourier basis on the unit circle, and are easy to evaluate numerically. Like the Fourier basis used in signal processing, care has to be taken when truncating the series (which will always be done in video games), to minimize the “ringing” artifacts that can occur.”

What are Spherical Harmonics?

It is a way of encoding data that varies with direction.

You can encode both the directional occlusion at a point and the IBL (image-based lighting). It is then computationally cheap to combine the two, integrating their product (in SH form, a simple dot product), to evaluate the contribution of lighting at that point; this combination “removes” the occluded light. With only a few bands, the SH representation of both contains only broad, diffuse terms, not the sharp detail of a standard environment map. These mathematical representations of light and occlusion are incredibly useful, and the occlusion part can be thought of as ‘directional ambient occlusion’.

Another way to think of SH is as a way to encode an image over a sphere (which can represent incoming light, occlusion, or other types of data) in terms of its frequency content. Theoretically, with infinite bands, you can represent any resolution. Generally, however, only the first few “bands” are used.

Overall, Spherical Harmonics:

- are a representation of an image over a sphere (something like a cube-map, but not a cube-map),

- are a frequency-space representation (the spherical equivalent of the Fourier transform, for image data on a sphere),

- work on a set of bands or indexes: more bands give more detail, but even the first few bands are useful on their own.
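To make the idea of bands concrete, the sketch below evaluates the first three bands (nine functions) of the real spherical harmonic basis at a unit direction. It is a minimal Python illustration, not any renderer's code: the normalization constants are the standard ones, but the storage order and the sign convention (no Condon-Shortley phase, as is common in graphics) are assumptions, and other libraries order or sign these functions differently.

```python
import math

# Normalization constants of the real SH basis for bands l = 0, 1, 2.
K0 = 0.5 * math.sqrt(1.0 / math.pi)     # l = 0
K1 = math.sqrt(3.0 / (4.0 * math.pi))   # l = 1
K2 = 0.5 * math.sqrt(15.0 / math.pi)    # l = 2, m = -2, -1, 1
K20 = 0.25 * math.sqrt(5.0 / math.pi)   # l = 2, m = 0
K22 = 0.25 * math.sqrt(15.0 / math.pi)  # l = 2, m = 2


def sh_basis(x, y, z):
    """Evaluate the 9 real SH basis functions (bands 0-2) at unit direction (x, y, z).

    Assumed order: (l, m) = (0,0), (1,-1), (1,0), (1,1), (2,-2), (2,-1), (2,0), (2,1), (2,2).
    """
    return [
        K0,                          # band 0: a constant, no direction at all
        K1 * y,                      # band 1: three linear lobes
        K1 * z,
        K1 * x,
        K2 * x * y,                  # band 2: five quadratic lobes
        K2 * y * z,
        K20 * (3.0 * z * z - 1.0),
        K2 * x * z,
        K22 * (x * x - y * y),
    ]


def band_slices(n_bands):
    """Index range of each band: band l holds 2l + 1 functions, starting at index l*l."""
    return [slice(l * l, (l + 1) * (l + 1)) for l in range(n_bands)]


values = sh_basis(0.0, 0.0, 1.0)  # the direction straight up
for l, s in enumerate(band_slices(3)):
    print(f"band {l}: {[round(v, 6) for v in values[s]]}")
```

A quick sanity check on any such implementation: the squares of the functions in band l always sum to (2l + 1)/4π, whichever direction they are evaluated in.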

SH have some great properties:

- continuous, rotationally invariant – they are camera view independent

- no sharp edges, no “lumpiness” – no seams, no pinching (‘cat’s arse’), no sudden popping at the lower levels or bands

- easy for vector units to manipulate, both for addition and multiplication

- they are really compact: the first n bands can be useful even without the following ones

- SH solutions are often used in a cache

In computer graphics, Spherical Harmonics are carriers of lighting information. Certain data is first pre-calculated and stored as SH, and this information is then interpreted during rendering.

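Once you convert light data to SH, you get a set of values or ‘weights’. Maths on SH data is easy because the basis functions are orthonormal, meaning they are mutually orthogonal and each has unit length. To add two SH functions, one simply adds their ‘weights’, and the integral of the product of two SH functions over the sphere is just the dot product of their weights.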
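Both properties can be checked numerically. The sketch below integrates over the sphere with a simple midpoint grid (an assumption for illustration; a renderer would use ray-cast samples), confirms that the integral of every pair of basis functions is close to 1 when they are the same function and close to 0 otherwise, and shows that projecting the sum of two lighting functions gives the sum of their weights. The `sky` and `sun` functions are made up, and the basis ordering is an assumed convention.

```python
import math

# Real SH normalization constants, bands 0-2.
K0 = 0.5 / math.sqrt(math.pi)
K1 = math.sqrt(3.0 / (4.0 * math.pi))
K2 = 0.5 * math.sqrt(15.0 / math.pi)
K20 = 0.25 * math.sqrt(5.0 / math.pi)
K22 = 0.25 * math.sqrt(15.0 / math.pi)


def sh_basis(x, y, z):
    """9 real SH basis functions (bands 0-2) at unit direction (x, y, z)."""
    return [K0, K1 * y, K1 * z, K1 * x, K2 * x * y, K2 * y * z,
            K20 * (3 * z * z - 1), K2 * x * z, K22 * (x * x - y * y)]


def sphere_grid(n_theta=64, n_phi=128):
    """Midpoint-rule samples (x, y, z, solid angle) covering the whole sphere."""
    d_t, d_p = math.pi / n_theta, 2 * math.pi / n_phi
    return [(math.sin((i + 0.5) * d_t) * math.cos((j + 0.5) * d_p),
             math.sin((i + 0.5) * d_t) * math.sin((j + 0.5) * d_p),
             math.cos((i + 0.5) * d_t),
             math.sin((i + 0.5) * d_t) * d_t * d_p)
            for i in range(n_theta) for j in range(n_phi)]


GRID = sphere_grid()


def project(f):
    """SH weights of f: the sphere integral of f times each basis function."""
    weights = [0.0] * 9
    for x, y, z, w in GRID:
        fv = f(x, y, z)
        for i, b in enumerate(sh_basis(x, y, z)):
            weights[i] += fv * b * w
    return weights


def gram_matrix():
    """Sphere integral of every pair of basis functions (the identity if orthonormal)."""
    g = [[0.0] * 9 for _ in range(9)]
    for x, y, z, w in GRID:
        b = sh_basis(x, y, z)
        for i in range(9):
            for j in range(9):
                g[i][j] += b[i] * b[j] * w
    return g


def sky(x, y, z):
    return 1.0 + z        # hypothetical: brighter overhead


def sun(x, y, z):
    return max(x, 0.0)    # hypothetical: extra light from the +x side


# Adding two SH functions is just adding their weights.
w_separate = [a + b for a, b in zip(project(sky), project(sun))]
w_together = project(lambda x, y, z: sky(x, y, z) + sun(x, y, z))
print(max(abs(a - b) for a, b in zip(w_separate, w_together)))
```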

In games, SH is used for diffuse lighting. It suits games well because it gives smooth results and is stored compactly (it is not some massive ‘carry along’ mega file). With only a few values you can interpolate, and there are no sharp discontinuities. Games typically store fewer bands than high-end rendering does, since game engines have major real-time and memory constraints that high-end offline rendering does not. Some games do use 3 bands, as does a film pipeline such as Weta’s, although Weta’s team uses them at far more points and in more places in the pipeline.

Why do we need SH?

Rendering works a little like this:

Imagine one light: the diffuse term is the strength of the light, tempered by the angle to the light. (Read: the strength of the light multiplied by the scalar dot product between the surface normal and the unit vector towards the light.)

The diffuse term is also clearly affected by the color of the object. (Read: all of it multiplied by the surface color.)

This is why the diffuse surface reflection model is sometimes known as “dot product lighting.”

Now imagine more lights: they all contribute. For each light source, the intensity is multiplied by the scalar dot product between the unit surface normal N and the unit vector towards the light source L; this value is then multiplied by the surface color to give that light’s reflected result, and the results for all the lights are summed. At a facility such as Weta, SH is used in this context to encode directional occlusion.
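The per-light sum described above fits in a few lines of Python. This is a minimal sketch assuming directional lights; it clamps negative dot products to zero (a light behind the surface contributes nothing), a detail the text does not spell out, and it leaves out the 1/π normalization and any shadowing.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def diffuse(normal, albedo, lights):
    """'Dot product lighting' summed over several lights.

    normal: surface normal N; albedo: RGB surface color;
    lights: list of (direction towards the light L, RGB intensity).
    """
    n = normalize(normal)
    result = [0.0, 0.0, 0.0]
    for direction, intensity in lights:
        cos_term = max(0.0, dot(n, normalize(direction)))  # lights behind the surface add nothing
        for c in range(3):
            result[c] += intensity[c] * cos_term * albedo[c]  # strength * N.L * surface color
    return tuple(result)


lights = [
    ((0.0, 0.0, 1.0), (1.0, 1.0, 1.0)),   # white light straight above
    ((1.0, 0.0, 1.0), (0.8, 0.4, 0.1)),   # orange light at 45 degrees
    ((0.0, 0.0, -1.0), (5.0, 5.0, 5.0)),  # bright light below the surface: ignored
]
print(diffuse((0.0, 0.0, 1.0), (0.5, 0.5, 0.5), lights))
```

The cost grows with every light added, which is why a sky dome with light arriving from every direction becomes expensive.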

Ambient occlusion takes one parameter, representing the percentage of the “sky” a point can see, and multiplies the outgoing energy by that factor. SH does the same, but because the occlusion is encoded in SH, you know which light directions the occlusion is masking.

In plain English, you could say rendering works like this:

“It calculates the incoming light, then scales it by the angle between the direction the surface is facing and the direction of the light, and multiplies the result by the surface function.”

But there are multiple light sources…so rendering actually works like this:

“It calculates ALL the incoming light by summing the contributions of the light sources and then multiplying by the surface function.”

Simple? It is, if the lights are point lights and the world is simple. Working out simple directional lights is trivial, but what if, instead of a handful of spotlights, there is a big skydome with light coming from every direction (IBL)? Then it gets expensive.

SH is used to precompute some of the maths and store it: for the skydome itself, for the objects and for the scene, running a cache in which a lot of maths is done once and can then be reused. SH is in Mental Ray with final gathering (MR stores the final gather as a type of SH). SH is also used internally in Pixar’s RenderMan with point-based color bleeding, and even more so since PR17 was released.

Spherical Harmonics vs Fourier transforms

Fourier vs. Spherical Harmonics: to relate SH to Fourier, it can help to think of the Fourier basis as a representation on the unit circle, while Spherical Harmonics are a representation on the unit sphere.

The Fourier ‘series’ is used in electrical engineering, vibration analysis, acoustics, optics, signal processing, image processing, and even quantum mechanics. In mathematics, a Fourier series decomposes periodic functions or signals into the sum of a (possibly infinite) set of simple oscillating functions, namely sines and cosines. This sum is called the Fourier series, and its study is called Fourier analysis.

This idea of expressing a signal as a sum of parts of increasing frequency is why people refer to work in this area as working in the frequency domain. To work on a 2D picture in the frequency domain with a Fourier transform, you would:

- take the source 2D image

- break it down into a set of harmonics, from low frequencies to higher and higher ones

- derive a coefficient for each of these harmonics, so that the basis functions, each multiplied by its coefficient, add up to the original image

- to reconstruct, sum up as many of these harmonics as you need (more gives you more detail, but more data)

- summing more harmonics gets you closer to the original picture (summing only the first few gives a picture with no fine detail)

- in reality you need not just the sines and cosines but also their amplitudes and, for a 2D image, their orientations
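The same steps can be tried on a single row of pixels. The sketch below is an illustrative 1D simplification of the 2D case, using a hypothetical hard-edged row: it derives cosine and sine coefficients, rebuilds the row from the first few harmonics, and measures how far the rebuilt row is from the original. The error falls as harmonics are added, and the sharp edge is where truncation produces the “ringing” Sloan warns about.

```python
import math


def fourier_coefficients(samples, n_harmonics):
    """(a_k, b_k) cosine/sine coefficients, k = 0..n_harmonics, of one period of a signal."""
    n = len(samples)
    coeffs = []
    for k in range(n_harmonics + 1):
        a = 2.0 / n * sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        b = 2.0 / n * sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        coeffs.append((a, b))
    return coeffs


def reconstruct(coeffs, n):
    """Sum the harmonics back up: the average (DC) term plus a_k cos + b_k sin for each k."""
    out = []
    for i in range(n):
        t = 2 * math.pi * i / n
        value = coeffs[0][0] / 2.0
        for k, (a, b) in enumerate(coeffs[1:], start=1):
            value += a * math.cos(k * t) + b * math.sin(k * t)
        out.append(value)
    return out


def rms_error(x, y):
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(x, y)) / len(x))


# One hypothetical row of an image: a hard bright/dark edge, repeated periodically.
N = 256
row = [1.0 if i < N // 2 else -1.0 for i in range(N)]
for h in (0, 1, 3, 15):
    approx = reconstruct(fourier_coefficients(row, h), N)
    print(f"{h:2d} harmonics -> RMS error {rms_error(row, approx):.3f}")
```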

SH work the same way, but on directional 3D data (functions over the sphere) instead of a flat picture, which is what makes them fit 3D render pipelines.

AO vs SH

A great question is how SH relates to Ambient Occlusion (AO) – which has been a great trick for many years in approximating accurate lighting.

“Ambient occlusion is one of those techniques that was very successful for what it was trying to describe at the time, which was ‘what is the approximate geometric blocking that the surrounding environment has to a point so that we can remove ambient light from that surface’,” explains Nick McKenzie, Research and Development Supervisor at Weta Digital. “It was like describing a giant overcast day and you are saying how in shadow would this be if the lighting was coming perfectly from every direction.”

“Computing the ambient term is done in the same way now really using ray tracing or some other environment to sample and work out where your directions are blocked,” adds McKenzie. “Normally you would just sum those values up and divide by the number of rays cast, to give you an average blocking term, but with Harmonics what that allows you to do is by pushing those directions through Harmonic encoding you can start to reconstruct the directionality.”
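McKenzie’s description can be sketched directly: cast rays from a shading point, average the hits for AO, and push the same ray directions through the SH basis to keep the directionality. In the sketch below, the rays are evenly spread Fibonacci-sphere directions over the full sphere (AO is usually taken over the hemisphere above the surface) and the ray cast is replaced by a hypothetical `is_visible` test for a wall on the +x side. Both are assumptions for illustration, not PantaRay’s implementation.

```python
import math

K0 = 0.5 / math.sqrt(math.pi)
K1 = math.sqrt(3.0 / (4.0 * math.pi))
K2 = 0.5 * math.sqrt(15.0 / math.pi)
K20 = 0.25 * math.sqrt(5.0 / math.pi)
K22 = 0.25 * math.sqrt(15.0 / math.pi)


def sh_basis(x, y, z):
    """9 real SH basis functions, order (0,0),(1,-1),(1,0),(1,1),(2,-2),...,(2,2)."""
    return [K0, K1 * y, K1 * z, K1 * x, K2 * x * y, K2 * y * z,
            K20 * (3 * z * z - 1), K2 * x * z, K22 * (x * x - y * y)]


def fibonacci_directions(n):
    """n evenly spread unit directions over the sphere (stand-ins for ray directions)."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    dirs = []
    for i in range(n):
        z = 1.0 - 2.0 * (i + 0.5) / n
        r = math.sqrt(1.0 - z * z)
        dirs.append((r * math.cos(i * golden_angle), r * math.sin(i * golden_angle), z))
    return dirs


def encode_visibility(is_visible, n_rays=1024):
    """Return (ao, sh_weights) for one shading point from n_rays visibility tests."""
    dirs = fibonacci_directions(n_rays)
    hits = [1.0 if is_visible(d) else 0.0 for d in dirs]
    ao = sum(hits) / n_rays                # classic AO: sum the values, divide by rays cast
    solid_angle = 4.0 * math.pi / n_rays   # each ray stands for an equal patch of sphere
    weights = [0.0] * 9
    for v, d in zip(hits, dirs):
        for i, b in enumerate(sh_basis(*d)):
            weights[i] += v * b * solid_angle  # same rays, but the direction is kept
    return ao, weights


# Hypothetical blocker: a wall on the +x side hides every direction with x > 0.3.
ao, w = encode_visibility(lambda d: d[0] <= 0.3)
print("AO:", round(ao, 3))
print("band-1 weights (y, z, x):", [round(c, 3) for c in w[1:4]])
```

The AO value only says that roughly 35% of the directions are blocked; the strongly negative x weight in band 1 also says where they are blocked. The band-0 weight carries the same information as the AO value, rescaled, since both come from the same rays.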

One can still use SH as an AO pass, but you can go much further and also reconstruct directionality. Imagine a scene with an object in an exterior environment. The sky has some nice orange clouds on one side, but blue sky on the other. With AO, one would get an average of the orange and blue: one value for the whole contribution from the sky. With SH you can now say that this particular point is blocked from the orange light, so its shadows are bluer. You get a much better ambient light level and color, since the system knows the orange is coming from camera left and that the point is obscured from light on that side.
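The orange and blue sky example can be reproduced with made-up numbers. In the sketch below, a hypothetical sky is orange toward -x (camera left) and blue toward +x, and a hypothetical occluder blocks part of the orange side. AO multiplies the average sky color by a single visibility value, while the SH version projects sky and visibility separately and combines them with a dot product; a brute-force integral serves as the reference. The colors, geometry and grid quadrature are all assumptions for illustration.

```python
import math

K0 = 0.5 / math.sqrt(math.pi)
K1 = math.sqrt(3.0 / (4.0 * math.pi))
K2 = 0.5 * math.sqrt(15.0 / math.pi)
K20 = 0.25 * math.sqrt(5.0 / math.pi)
K22 = 0.25 * math.sqrt(15.0 / math.pi)


def sh_basis(x, y, z):
    """9 real SH basis functions (bands 0-2) at unit direction (x, y, z)."""
    return [K0, K1 * y, K1 * z, K1 * x, K2 * x * y, K2 * y * z,
            K20 * (3 * z * z - 1), K2 * x * z, K22 * (x * x - y * y)]


def sphere_grid(n_theta=64, n_phi=128):
    """Midpoint-rule samples (x, y, z, solid angle) covering the whole sphere."""
    d_t, d_p = math.pi / n_theta, 2 * math.pi / n_phi
    return [(math.sin((i + 0.5) * d_t) * math.cos((j + 0.5) * d_p),
             math.sin((i + 0.5) * d_t) * math.sin((j + 0.5) * d_p),
             math.cos((i + 0.5) * d_t),
             math.sin((i + 0.5) * d_t) * d_t * d_p)
            for i in range(n_theta) for j in range(n_phi)]


GRID = sphere_grid()
FOUR_PI = 4.0 * math.pi
ORANGE, BLUE = (1.0, 0.5, 0.1), (0.2, 0.4, 1.0)


def sky(x, y, z):
    """Hypothetical sky: orange clouds toward -x (camera left), blue sky toward +x."""
    return ORANGE if x < 0.0 else BLUE


def visible(x, y, z):
    """Hypothetical occluder on the orange side: blocks every direction with x < -0.3."""
    return 0.0 if x < -0.3 else 1.0


def project(f):
    """SH weights of f: the sphere integral of f times each basis function."""
    weights = [0.0] * 9
    for x, y, z, w in GRID:
        fv = f(x, y, z)
        for i, b in enumerate(sh_basis(x, y, z)):
            weights[i] += fv * b * w
    return weights


sky_sh = [project(lambda x, y, z, c=c: sky(x, y, z)[c]) for c in range(3)]  # one set per channel
vis_sh = project(visible)

# SH: average visible light = (integral of sky * visibility) / 4pi = dot product / 4pi.
sh_light = [sum(a * b for a, b in zip(sky_sh[c], vis_sh)) / FOUR_PI for c in range(3)]

# AO: one scalar visibility times the average sky color; the direction is lost.
ao = sum(visible(x, y, z) * w for x, y, z, w in GRID) / FOUR_PI
ao_light = [ao * sum(sky(x, y, z)[c] * w for x, y, z, w in GRID) / FOUR_PI for c in range(3)]

# Brute-force reference for comparison.
reference = [sum(sky(x, y, z)[c] * visible(x, y, z) * w for x, y, z, w in GRID) / FOUR_PI
             for c in range(3)]

for name, rgb in (("AO", ao_light), ("SH", sh_light), ("reference", reference)):
    print(name, [round(v, 3) for v in rgb])
```

With these numbers the AO estimate comes out more orange than blue, while the SH estimate is clearly bluer and close to the brute-force reference, which is the behavior the paragraph above describes.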

If one thinks about it, AO is an approximation of global illumination that is simply the object’s ‘visibility’ to a completely white skydome. In the creases and cracks the AO is dark, as they would be hidden from a white sky dome’s light, while on top and on the sides the AO pass is white, as those parts would be fully lit by a white skydome. ‘Spherical Harmonic Occlusion’ improves on this by computing the visibility projected onto a number of low-order spherical harmonics, which provides a directional ambient occlusion (assuming enough bands are used).

Indexes & weights

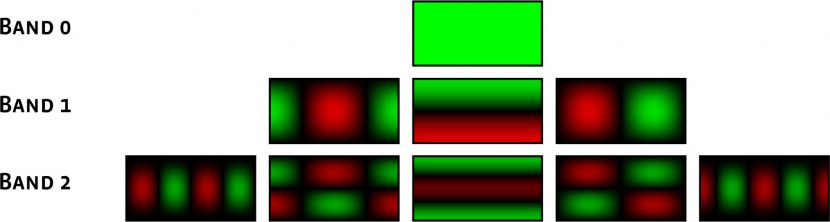

What are these bands or levels? Each band is the set of functions that represent a specific frequency in the spherical domain, and you identify the functions within a band by their index. Where the Fourier basis on the circle has a sine and a cosine for each frequency, band l of the SH basis contains 2l + 1 functions, indexed m = -l to l. The weights are the coefficients each function is multiplied by.

The number of SH bands can be thought of as the level of detail you wish to go to in SH. You can think of it like JPEG compression, except that it is not a quality scale of 0 to 100; it is much more like a Fourier transform. The low-frequency details are in the low-numbered bands, and more detailed information is added back as you move up through the bands. One could in principle have an infinite number of bands, but only a few are needed to be useful.

As a data format, SH works like this:

- a set of N weights or coefficients stores the actual data

- each weight is usually an RGB color for IBL, or a scalar for occlusion

- multiply each basis function by its weight and sum: the result is the value in that direction

- the number of basis functions goes on forever, but the first 9 coefficients are excellent for diffuse lighting

- most games use 4 coefficients, while non-realtime rendering uses 9

- that is 9 sets of floating point numbers (9 x RGB) to represent colored SH data at any point

- SH is like a super-compressed environment map
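One well-known reason nine coefficients suffice for diffuse lighting comes from Ramamoorthi and Hanrahan’s irradiance environment map work: convolving the lighting with the clamped cosine lobe of a diffuse surface scales band 0 by π, band 1 by 2π/3 and band 2 by π/4, and the higher bands contribute very little. The sketch below applies those factors to nine RGB weights to get the irradiance for a surface normal. It is a textbook technique shown for illustration, not a description of any particular studio’s pipeline, and it assumes the basis ordering (0,0), (1,-1), (1,0), (1,1), (2,-2), ..., (2,2).

```python
import math

K0 = 0.5 / math.sqrt(math.pi)
K1 = math.sqrt(3.0 / (4.0 * math.pi))
K2 = 0.5 * math.sqrt(15.0 / math.pi)
K20 = 0.25 * math.sqrt(5.0 / math.pi)
K22 = 0.25 * math.sqrt(15.0 / math.pi)


def sh_basis(x, y, z):
    """9 real SH basis functions (bands 0-2) at unit direction (x, y, z)."""
    return [K0, K1 * y, K1 * z, K1 * x, K2 * x * y, K2 * y * z,
            K20 * (3 * z * z - 1), K2 * x * z, K22 * (x * x - y * y)]


# Clamped-cosine convolution factor for bands 0, 1, 2 (Ramamoorthi & Hanrahan).
A_HAT = (math.pi, 2.0 * math.pi / 3.0, math.pi / 4.0)
BAND = (0, 1, 1, 1, 2, 2, 2, 2, 2)  # band of each of the 9 weights


def irradiance(rgb_weights, normal):
    """Diffuse irradiance (RGB) at unit `normal` from 9 RGB SH radiance weights."""
    result = [0.0, 0.0, 0.0]
    for i, y in enumerate(sh_basis(*normal)):
        scale = A_HAT[BAND[i]] * y
        for c in range(3):
            result[c] += scale * rgb_weights[i][c]
    return tuple(result)


# Uniform white sky of radiance 1: only the band-0 weight (2 * sqrt(pi)) is non-zero.
uniform_sky = [(2.0 * math.sqrt(math.pi),) * 3] + [(0.0, 0.0, 0.0)] * 8
print(irradiance(uniform_sky, (0.0, 0.0, 1.0)))
```

As a sanity check, the uniform sky of unit radiance gives an irradiance of π for every normal, which is the expected result for a diffuse surface under a uniform dome.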

Weta: SH and PantaRay

Nick McKenzie explained to fxguide just why SH maths is so helpful in high end computer graphics:

“The rendering equation is an integral – it is a summation of all incoming light – normally in a hemisphere. One advantage of SH is that it describes light information from the entire spherical domain, and secondly it has one very good feature, it is very easy to compute. It is typically pretty difficult (without SH), but rather than in the normal ray tracing or rendering equation where you are trying to limit the number of rays, and then summating the results, when you express a function with SH it is a dot product. The result of the dot product is the summation of the whole sphere.”
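McKenzie’s point that the dot product gives “the summation of the whole sphere” can be checked with two smooth functions that fit entirely within the first three bands. The sketch below integrates their product point by point over a quadrature grid and compares the result with the dot product of their nine SH weights. The functions, the grid and the basis ordering are illustrative assumptions. For functions with finer detail than the stored bands, the dot product instead gives the integral of their band-limited approximations.

```python
import math

K0 = 0.5 / math.sqrt(math.pi)
K1 = math.sqrt(3.0 / (4.0 * math.pi))
K2 = 0.5 * math.sqrt(15.0 / math.pi)
K20 = 0.25 * math.sqrt(5.0 / math.pi)
K22 = 0.25 * math.sqrt(15.0 / math.pi)


def sh_basis(x, y, z):
    """9 real SH basis functions (bands 0-2) at unit direction (x, y, z)."""
    return [K0, K1 * y, K1 * z, K1 * x, K2 * x * y, K2 * y * z,
            K20 * (3 * z * z - 1), K2 * x * z, K22 * (x * x - y * y)]


def sphere_grid(n_theta=64, n_phi=128):
    """Midpoint-rule samples (x, y, z, solid angle) covering the whole sphere."""
    d_t, d_p = math.pi / n_theta, 2 * math.pi / n_phi
    return [(math.sin((i + 0.5) * d_t) * math.cos((j + 0.5) * d_p),
             math.sin((i + 0.5) * d_t) * math.sin((j + 0.5) * d_p),
             math.cos((i + 0.5) * d_t),
             math.sin((i + 0.5) * d_t) * d_t * d_p)
            for i in range(n_theta) for j in range(n_phi)]


GRID = sphere_grid()


def project(f):
    """SH weights of f: the sphere integral of f times each basis function."""
    weights = [0.0] * 9
    for x, y, z, w in GRID:
        fv = f(x, y, z)
        for i, b in enumerate(sh_basis(x, y, z)):
            weights[i] += fv * b * w
    return weights


def f(x, y, z):
    return 1.0 + z              # e.g. lighting that is brighter overhead


def g(x, y, z):
    return 2.0 + z + x * y      # e.g. a smooth, made-up visibility weighting


# Brute force: integrate the product sample by sample over the whole sphere.
brute = sum(f(x, y, z) * g(x, y, z) * w for x, y, z, w in GRID)

# SH: project each function once; the whole-sphere integral is then a 9-term dot product.
dot = sum(a * b for a, b in zip(project(f), project(g)))

print(round(brute, 3), round(dot, 3), round(28 * math.pi / 3, 3))
```

Both values should come out close to 28π/3 (about 29.32), the exact integral of the product of these two functions.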

In other words, the maths not only stores the light information well, but the later maths, done as objects are added to or leave a scene, is also elegant and computationally cheap.

Leaving aside games, one brilliant implementation of SH for rendering came from Weta around the time of Avatar. Weta’s R&D team, with some later help from Nvidia, came up with a way to achieve sensible render times on truly huge scenes, using a cache and SH.

Sebastian Sylwan, Weta CTO, told fxguide: “In a simplistic way if you think of the rendering equation you can split it into a lighting component and a visibility component. What we do with Spherical Harmonics is encode that visibility in a way that is easy to handle and practical for the computations. What one is asking is ‘is the sky visible at this point in this direction?’ and then encoding all answers into the Spherical Harmonic representation.”

“To use a concept that any TD would be familiar with,” continues Sylwan, “if you think of mapping any function from the domain of time to the frequency domain, you can represent any function as a series of harmonics, a series of components. What you are doing is really saying that there is a co-efficient for each one of these bands of Harmonics.”

The first coefficient is an overall value with no direction: one single number at the base level. From there you split the sphere into higher and higher frequencies. Each band adds another set of coefficients, and with enough bands, and hence enough coefficients, you could represent any pattern on the sphere, but that would be the infinite case. For CGI an approximation is enough, and a really useful approximation needs just 9 coefficients, covering the first 3 bands.

- 1 band needs just 1 coefficient

- 2 bands needs an additional 3 coefficients for a total of 4 (this is all most games use)

- 3 bands needs an additional 5 coefficients for a total of 9 (this is what PantaRay uses)

NB: the first band is often called l0 or level zero, the second l1, the third l2, and so on.
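The coefficient counts above follow a simple rule: band l adds 2l + 1 coefficients, so n bands need n² in total. A small Python sketch (the (l, m) storage order shown is a common convention, assumed here):

```python
def coefficients_in_band(l):
    """Band l (l = 0, 1, 2, ...) contains 2l + 1 basis functions, indexed m = -l..l."""
    return 2 * l + 1


def total_coefficients(n_bands):
    """Coefficients needed to store the first n_bands bands: 1 + 3 + 5 + ... = n_bands**2."""
    return sum(coefficients_in_band(l) for l in range(n_bands))


def band_indices(n_bands):
    """All (l, m) index pairs for the first n_bands bands, in the assumed storage order."""
    return [(l, m) for l in range(n_bands) for m in range(-l, l + 1)]


for n in (1, 2, 3, 4):
    print(f"{n} band(s): {total_coefficients(n)} coefficients")
print(band_indices(3))
```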

By storing a limited number of coefficients you store an approximation of the lighting; for ambient diffuse lighting one does not need fine detail.

The first band of SH is essentially ambient occlusion: “you are at this point on say this surface and you want to know how much of the incident illumination is reaching this point, and because say this surface is flat”, it is a hemisphere on top of the object’s surface, but this one value has no direction. If the ambient occlusion value is x, we still know nothing about where the lights are: whether all the lights were on the far side of the object or all facing right at it, the single AO value x would be the same, and could be way out. In a sense this AO is just that, an average, and will only rarely be exactly right and more often quite inaccurate. It is still useful and can be made to work fairly well, but if you could know something about the source of the ambient light, your computer graphics would be much more accurate.

At just one band higher, one first gets direction: the question ‘is the ambient light mainly in front of or behind me?’ can now be answered. At two bands higher you get a pretty good approximation: ‘Is the light in front of or behind me, to the left or the right?’ can now be answered, with all the relative intensities, seamlessly. It turns out that these two extra bands produce much better final results than a single AO value alone. Going from one AO “average” value for the whole ‘world’ to just nine coefficients (three bands in total) gives you a great level of realism while still avoiding nightmare render computations.
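The first two bands can be read back quite directly. In the sketch below, the band-0 weight alone gives the average (AO-like) value with no direction, while the three band-1 weights, read as a vector, point toward where the light is strongest. The storage order is an assumption, and the example weights describe a made-up light that is brighter toward +x.

```python
import math

K0 = 0.5 / math.sqrt(math.pi)          # value of the band-0 basis function
K1 = math.sqrt(3.0 / (4.0 * math.pi))  # band-1 normalization


def average_value(w):
    """Band 0 only: the average over the whole sphere, with no direction (AO-like)."""
    return w[0] * K0


def dominant_direction(w):
    """Band 1: unit direction in which the linear part of the function is largest.

    Assumes the order (0,0), (1,-1), (1,0), (1,1), ... in which the three
    band-1 functions are proportional to y, z and x.
    """
    x, y, z = w[3], w[1], w[2]
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0.0:
        return None  # no band-1 signal: equal light from every direction
    return (x / length, y / length, z / length)


# Hypothetical light 1 + x: average value 1, brighter toward +x.
weights = [K0 * 4.0 * math.pi, 0.0, 0.0, K1 * 4.0 * math.pi / 3.0]
print(average_value(weights), dominant_direction(weights))
```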

The genius of the Weta solution is not being the first to use SH; it is precomputing and caching the SH for all objects and encoding all the information (along with some other encodings, such as the BRDF material). Even though this computation is very expensive, it can often be done once, before the final beauty render. Furthermore, this expensive pre-process can be run on ultra-fast GPUs and then cached. This means enormous, complex scenes can be crafted without computer time becoming so expensive that iterations are impossible and scene render times unworkable. Literally, without PantaRay and its GPU speed combined with the cache, neither Pandora nor Middle-Earth could have been lit as successfully.

If you break it down, the implementation is simple, in principle (but much harder in practice).

Weta stores an SH cache when an asset is made. When an artist uses the model, it can be added to the scene, and because the maths on SH (adding and multiplying) is simple, this stage is easy. Because the cache has already been worked out and is not camera dependent, lighting and rendering are enormously faster.

The software that does the first-stage render is PantaRay, running on the GPU. This allows Weta to use RenderMan for the final render but render much faster, or at least render vastly more complex scenes in the same amount of time (Blinn’s law).

PantaRay calculates the cache, PRman renders the image. So SH is not the key algorithm, but its role in holding the cached data is a vital part of the implementation.

Weta devoted so much effort to making this work because lighting takes artist time, which is very costly, and because, given the kind of work Weta Digital does, its scenes are big. Really big. Really, really big.

PantaRay is not an add on, it is central to Weta’s render pipeline.

So the PantaRay solution slashes production time by caching and pre-rendering on GPUs. PantaRay was developed using Nvidia’s GPU computing. When Weta first presented the work at Siggraph, it was estimated that the CUDA-based GPU version of the PantaRay engine that Weta and Nvidia had ported, running on an Nvidia Tesla S1070 GPU-based server, was 25 times faster than the previous CPU solution. Ray-traced occlusion is often very expensive to compute because of the large number of incoherent ray casts. The PantaRay system uses GPU ray tracing, various spatial hierarchies, and other techniques to compute SH occlusion. In PantaRay a TD can decide to shoot, say, 512 or 1024 rays per shading point to compute occlusion. To give a sense of scale, one test frame from Avatar took over 16 hours and 520 billion rays to compute an occlusion pass.
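The scale of that Avatar test frame is easier to grasp as a rate. This back-of-the-envelope Python sketch uses only the figures quoted above; since the frame took over 16 hours, the rate is an upper bound on the average, and the shading-point count assumes, hypothetically, that every point used 1024 rays.

```python
RAYS = 520e9            # rays for one Avatar test frame (figure from the text)
HOURS = 16              # "over 16 hours", so the rate below is an upper bound
RAYS_PER_POINT = 1024   # hypothetical: assume every shading point used 1024 rays

rays_per_second = RAYS / (HOURS * 3600)
shading_points = RAYS / RAYS_PER_POINT

print(f"at most ~{rays_per_second / 1e6:.1f} million rays per second on average")
print(f"~{shading_points / 1e6:.0f} million shading points at {RAYS_PER_POINT} rays each")
```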

However, the GPU provides only part of the solution; given Weta’s huge data models, caching is equally important.

For an object, say a leaf or a tree on Pandora, PantaRay works out, for each point on the leaf, what is blocking its light from every direction. It handles all the dome lights, soft shadows, area lights, and other things. It does this before the tree is added to an actual Pandorian forest, and it stores the set of 9 weights for each point, building a map of SH values for the object. When an artist adds the tree to the forest, it then just adds the coefficients.

If the camera moves or tracks past a tree, the cache remains valid and does not need to be recomputed. Even if the leaves on the trees sway slowly in the wind, a set of key coefficients (left, mid and right positions) can be solved and then interpolated between. As SH are very smooth, this interpolation works remarkably well. A fully animating, fast-moving figure, say a galloping horse, would need its PantaRay cache re-rendered per frame, but in most scenes, such as the interior of a forest, a room or a cave, a vast amount of the geometry is not dynamically animating. The camera may fly by, but only an object’s own movement invalidates its cache. None of the leaves on the thousands of trees behind that galloping horse need their SH re-rendered.
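Because SH weights combine linearly, blending the weights of two solved key positions also blends the occlusion they encode. A minimal sketch of interpolating between left, mid and right keys; the helper `weights_for_pose` and the idea of a single sway parameter in [-1, 1] are hypothetical illustrations, not Weta’s interface.

```python
def lerp(a, b, t):
    """Blend two lists of SH weights: t = 0 gives a, t = 1 gives b."""
    return [(1.0 - t) * p + t * q for p, q in zip(a, b)]


def weights_for_pose(left, mid, right, sway):
    """Hypothetical helper: SH weights for a sway value in [-1, 1].

    -1 is the solved 'left' key, 0 the 'mid' key and +1 the 'right' key;
    anything in between is a linear blend of the two nearest keys.
    """
    sway = max(-1.0, min(1.0, sway))  # stay within the solved range
    if sway < 0.0:
        return lerp(mid, left, -sway)
    return lerp(mid, right, sway)


# Made-up 9-weight keys for one point on a leaf.
left_key = [0.9, 0.1, 0.2, -0.3, 0.0, 0.05, 0.1, 0.0, -0.02]
mid_key = [0.8, 0.0, 0.2, 0.0, 0.0, 0.0, 0.1, 0.0, 0.0]
right_key = [0.7, -0.1, 0.2, 0.3, 0.0, -0.05, 0.1, 0.0, 0.02]
print(weights_for_pose(left_key, mid_key, right_key, 0.25))
```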

To be clear, the cache is not a low-res preview, nor just the maths of the frame you happen to be looking at right now as a lighting TD. The PantaRay cache front-loads the rendering computation, so a great deal of it has been done by the time the lighting artist opens a scene. When they move a sun to adjust the time of day, it is all the pre-computation and caching that allows the new render to be so fast. It is not a cache of rendered images, it is a cache of pre-solved maths, and that makes RenderMan render much, much faster, so the artist can iterate more and get the lighting right on their desktop monitor.

If SH were not camera independent, it would be a lot less useful. Some systems cache a frame’s maths so lighting can be adjusted, but advance a frame and a new cache needs to be built. With SH and PantaRay, the cache for an object is built when that object is modeled and remains valid until it seriously animates. Not only is the pre-computation relevant to lighting whole scenes rather than just the “current parked frame”, but it also responds very well to rotational adjustments of, say, dome lights or sky lights.

“You can have the same SH and rotate your representation – it is cheap and quick to rotate lights around,” explains Sylwan. “It is computationally cheap to rotate the SH, which is very relevant to outside exterior lighting.” It also cuts the other way: it is cheap to rotate the object relative to the light when placing it in a scene, so the cache for one object can be rotated to suit the scene. A turntable of an object with fixed lighting positions does not invalidate the cache, for example. Placing objects correctly in a scene, or rotating the lighting on a scene, is mathematically easy and cheap, even if the scene is huge.
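Rotation about the vertical axis shows why rotating SH is cheap. For real SH, a turn of angle α about z leaves the m = 0 weights untouched and rotates each (m, -m) pair of weights like a 2D vector by m·α. A general 3D rotation needs a small (2l + 1) × (2l + 1) matrix per band, but is still just a handful of multiply-adds. The sketch below covers only the z-axis case, with the basis ordering and sign convention assumed in the code.

```python
import math

K0 = 0.5 / math.sqrt(math.pi)
K1 = math.sqrt(3.0 / (4.0 * math.pi))
K2 = 0.5 * math.sqrt(15.0 / math.pi)
K20 = 0.25 * math.sqrt(5.0 / math.pi)
K22 = 0.25 * math.sqrt(15.0 / math.pi)


def sh_basis(x, y, z):
    """9 real SH basis functions, order (0,0),(1,-1),(1,0),(1,1),(2,-2),(2,-1),(2,0),(2,1),(2,2)."""
    return [K0, K1 * y, K1 * z, K1 * x, K2 * x * y, K2 * y * z,
            K20 * (3 * z * z - 1), K2 * x * z, K22 * (x * x - y * y)]


# (index of the m > 0 term, index of the matching m < 0 term, m) in that order.
PAIRS = ((3, 1, 1), (7, 5, 1), (8, 4, 2))


def rotate_z(weights, angle):
    """Rotate an SH function (bands 0-2) by `angle` radians about the z (up) axis."""
    out = list(weights)  # m = 0 terms (indices 0, 2, 6) do not change
    for pos, neg, m in PAIRS:
        c, s = math.cos(m * angle), math.sin(m * angle)
        out[pos] = weights[pos] * c - weights[neg] * s
        out[neg] = weights[pos] * s + weights[neg] * c
    return out


def evaluate(weights, x, y, z):
    """Value of the SH function in direction (x, y, z)."""
    return sum(w * b for w, b in zip(weights, sh_basis(x, y, z)))


# A lobe pointing along +x, turned 90 degrees about z, now points along +y.
lobe_x = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
print([round(v, 6) for v in rotate_z(lobe_x, math.pi / 2)])
```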

The SH data does add to the already huge data size of scenes, given the need to store 9 coefficients per sample point on each object. Luckily, the SH data can be interpolated across a surface. This interpolation can be done in world space or in object/tangent space (much rarer at Weta), which keeps the number of samples cached per object large but still manageable. In terms of file size, the 9 coefficients per point equal 3 stored colors. Just doing simple maths: “9 floats per point, 36 bytes per point,” says Luca Fascione, Rendering Research Lead at Weta Digital, “you can just do the maths, so easily a couple of gigabytes per file or more,” and there is the usual data management of looking after this data and making it available.
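Fascione’s arithmetic, written out. The sketch assumes 32-bit floats and uses a hypothetical 60-million-point asset to show how quickly 36 bytes per point reaches “a couple of gigabytes”.

```python
FLOAT_BYTES = 4  # assumes 32-bit floats


def bytes_per_point(n_coefficients=9, channels=1):
    """Storage for one cached sample: scalar occlusion uses 1 channel, RGB uses 3."""
    return n_coefficients * channels * FLOAT_BYTES


def cache_size_gb(n_points, n_coefficients=9, channels=1):
    """Total cache size in gigabytes (10**9 bytes) for n_points samples."""
    return n_points * bytes_per_point(n_coefficients, channels) / 1e9


print(bytes_per_point())                    # 9 scalar coefficients per point
print(bytes_per_point(channels=3))          # the same 9 coefficients stored as RGB
print(round(cache_size_gb(60_000_000), 2))  # a hypothetical 60-million-point asset
```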

Weta does not use a standard point cloud format; it has its own “topo-clouds” (a pun crossing point clouds with topology: a point cloud that also stores manifold connectivity information). They are very similar to micropolygon meshes. Weta has its own format for two reasons:

- so that Weta can very accurately control the caching and access patterns, and not overload the filers, and

- so that topology information is stored to help with interpolation between SH sample points. If you want to interpolate between two SH samples, you want them to be on the same surface, or the interpolation would not be valid. Otherwise “you would just get the nearest point, and you want the nearest neighbors in a surface sense, and then you get much higher quality results,” notes Fascione.
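One way topology helps: if the samples are connected into surface triangles, a shading point can blend the SH weights of the three samples around it on the same surface, rather than whatever sample happens to be nearest in space. The sketch below does a barycentric blend. The article does not describe Weta’s actual interpolation scheme, so `interpolate_sh` and its inputs are hypothetical.

```python
def interpolate_sh(samples, bary):
    """Blend the SH weights of three connected samples (one surface triangle).

    samples: three lists of SH weights; bary: barycentric coordinates (u, v, w)
    of the shading point inside that triangle, non-negative and summing to 1.
    """
    if abs(sum(bary) - 1.0) > 1e-9 or min(bary) < 0.0:
        raise ValueError("shading point must lie inside the triangle")
    n = len(samples[0])
    return [sum(b * s[i] for b, s in zip(bary, samples)) for i in range(n)]


# Three made-up neighboring samples (first two weights shown) and a point near the first.
tri = ([0.9, 0.2], [0.7, -0.1], [0.8, 0.0])
print(interpolate_sh(tri, (0.6, 0.3, 0.1)))
```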

In addition to the per-object caching discussed above, there is yet another cache possible, for the whole scene. Scene Harmonics can be used for “somewhat coarser caching of SH for very big assets,” says Fascione, “so for counts that are multi-hundred million ranges. Although PantaRay is very fast on GPU it can still be annoying to do rendering per frame at this huge scale, so we have a much coarser caching based on OpenGL that can do those kinds of loads, and it does it by leveraging local Harmonics and combining them in an approximate way to get the big giant volumes (scenes).”

Take, for example, a scene of a character near a tree in a forest, lit with a skydome. The skydome has a relatively tiny set of its own values, and the character and the close-up tree have individual SH caches. If the tree is still, it may have a static cache, while the character would have a per-frame cache. The distant forest would have an approximate combined static SH cache, which respects the relationship of all of the trees (and leaves) relative to each other. This is all precomputed and stored. This ‘baking of the cache’ is not unlike baking a sim, and happens right before final lighting.

Most of this article has focused on skydomes and yet Weta has taken this even further. The SH are sometimes used at Weta for closer views in area lights. “It depends on how you choose to do shadowing,” explains Nick McKenzie. “We could choose to use Harmonic shadows for area lights and it is something we do for broader wider scenes where we want softer shadows. The reason why that works is based on how our visibility data is stored.”

“Our occlusion data is sampled at lots of different points in the world,” adds McKenzie, “and each of those has a different picture of where things in the world are occluding it, so a point on the ground say underneath a horse will see a giant horse above it, or at least an approximation of a giant horse, whereas a point away from it sees a blob away from it, and both of those two functions can be used with the area light, so it becomes a reverse ray casting in a certain way.”

PantaRay has developed over time for production rendering at Weta. Before PantaRay, Weta used RenderMan to make caches. During Avatar the team had been faced with a lot of scenes that could be constructed from a lot of modular pieces, such as the floating mountains. “So they were pre-computed caches and there was not a lot of interaction with them, for example, you would not see a lot of soft shadowing from one floating mountain to another”, notes McKenzie. But as the team moved to more character work and destruction scenes with dense geometry, they needed a more complex solution, “and PantaRay grew out of that, and the need to do that on large data sets,” he says. Today PantaRay and SH are used for big dome general lighting and “extremely fine soft shadows as well.”

Both lookdev and rigging use the cached models, in addition to final lighting. The cache is triggered by the asset management system polling on its asset. Some objects just get a cache formed immediately at the time of modeling, such as trees, but most are cached once Weta’s asset management knows the asset will be used.

The cache is maintained by Weta from object creation to the show’s completion. It is always stored with the model, so it can be reused any time the same object is used across a show.

How general is this?

Some other facilities do have advanced SH pipelines, but few are as advanced, complete and robust as Weta’s. Changing material properties at Weta would not affect the lighting cache, for example, unlike in some other pipelines. If you make an object look a bit wetter, adjusting the spec to make it a tighter spot highlight would not normally invalidate the cache.

Is SH worth doing if you are not Weta Digital? Sylwan points out that Weta developed it to abstract out a huge amount of complexity so artists can work in a more friendly environment without waiting hours for even minor lighting adjustments. Of course, one could also point out that Weta’s scene complexity today … is the next guy’s scene complexity maybe six or twelve months from now.

As far as Pixar is concerned, SH makes sense. Weta is one of the largest RenderMan users in the world. The PantaRay solution was widely discussed with Pixar, and the RenderMan team incorporated SH more directly into PRman with version 16. PRman can today generate its own SH, although Weta continues to use its own PantaRay software alongside PRman.

As said at the outset of this story, SH is part of the key maths used in Weta’s pre-rendering cache lighting solution. In fact, if someone published a new, improved mathematical way of representing diffuse ambient light, Weta could replace the SH component of the pipeline and the principle would still be valid.

But it is this novel combination of high-level abstractions, coupled with a very practical implementation, that has produced such a remarkable tool for some of the most complex rendering in the world. Only a handful of companies can handle such realism in such vast data sets or digital environments. Spherical Harmonics seem like an abstract concept until you see a huge Middle-Earth landscape fully rendered in 3D, in stereo, at high resolution, and realize how SH and the tools surrounding it are required for artists to light and finish such breathtaking work.
