Synthesia

With a BBC project (see below), Synthesia is out of stealth mode and now offering its services for professional face replacement. The service allows a presenter’s or actor’s expressions and dialogue to be changed seamlessly. Synthesia isn’t producing throwaway or comic illusions; the company is seeking to address existing production problems in language dubbing and ADR. ‘Native dubbing’ is a new method of translating video content that utilises AI (Machine Learning) to synchronise the lip movements of an actor to a new dialogue track. This enables translation without the usual dubbing problem of mismatched lip sync. Ideally, the resulting output is completely seamless to the viewer.

Synthesia’s goal is to remove the language barrier from video and allow content from both high-end productions and YouTube influencers to reach a much larger audience, in any number of languages. The team hopes that this new approach will foster more respectful cultural exchanges and more inclusive footage. The company is now offering its breakthrough technology as a service for select partners, designed as a high-end professional ADR and lip sync service. The team is building a cloud platform to deliver this type of technology to a wide range of content creators soon, and the company has the long-term goal of offering full scene synthesis through an automated but quality-controlled cloud platform.

“What we’ve been doing over the last year is solving the complex problem of photoreal facial synthesis”, explains Synthesia CTO, Dr. Jonathan Starck. Many fxguide readers will know Starck from his nearly decade-long tenure at the Foundry in the UK, where he was Head of Research.

The company was co-founded by Prof. Matthias Niessner, who was one of the key researchers behind Face2Face: Real-time Face Capture and Reenactment of RGB Videos, a landmark face replacement tool that many saw as a key point in digital facial ‘puppeteering’ technology. It was first shown in the Emerging Tech Hall at SIGGRAPH 2016 and immediately captured huge interest. Starck comments that “a year ago, results in the academic literature showed the potential but didn’t match the visual fidelity or reliability required to be production ready”.

Today, the Synthesia system uses the company’s ENACT Native dubbing tool, a new method of translating video content that utilises AI to synchronise the lip movements of an actor to a new dialogue track. ENACT enables translation without the creative casualty of bad dubbing or subtitling, resulting in a seamless experience for the viewer. Even in the recent past, compositing solutions such as this have nearly always produced images that are too blurry or too jittery to be sold as ‘real’. Over the last year, Synthesia has been refining and developing new technology to produce professional, industry-grade results while remaining fully automated. “So essentially last year we have been building the tech that can create photo-real results”, comments Starck. “We have now done work for the BBC and other people such as the Dallas Mavericks basketball team”.

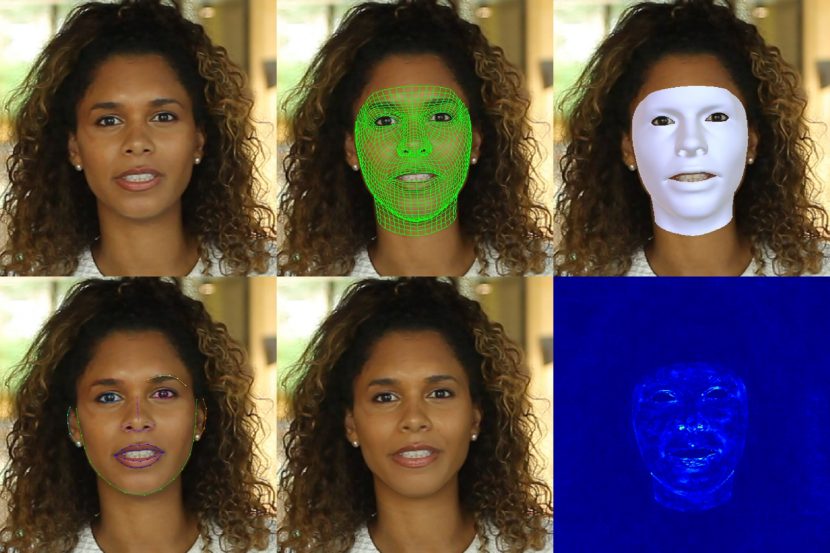

Starck believes there is an enormous future for professional ‘synthetic media’. He believes there is huge potential for AI (Machine Learning) systems to make content creation a more intuitive creative process. “We’ve broken down the traditional process of facial modelling, tracking, and rendering and used AI to synthesise photo-real results that are indistinguishable from normal video as a completely automated process. It’s the start of democratisation for high-end VFX”, he commented. “We feel strongly that synthetically created media, ‘synthetic media’, is going to become mainstream as these techniques become more accessible and we are keen to become a trusted voice in that space”. Synthesia automates the process of bringing in the face, tracking it, and modifying a target, using key machine learning and deep learning techniques. Here is how it works:

Process

The first stage is to provide data for the creation of the digital face or puppet rig that will be driven by the new voice. The team require only a small data set to build a Synthesia head. There are two main clips they require:

- 3 to 5 minutes of natural speech by the presenter or actor

- a head rotation with a specific line of dialogue

The team are quite flexible on the material, however. It does not need to be shot on a special stage with special lighting, and they want to make the process as easy to use as possible. The second stage is to either commission Synthesia to have the dialogue of the hero clip translated and filmed, or to do this yourself. Synthesia are set up so that a user can simply provide the hero clip and the target language, and they can do the rest. However, in some situations producers may want to record their own ‘replacement’ audio to match the audio environment and acoustics of the original, in which case both that new audio and a reasonable video of it being delivered also need to be uploaded.

A key part of the technology is producing accurate markerless facial tracking of the source clip. Once the head is tracked and the face is ‘learnt’, the process matches the lighting as part of the Machine Learning process. As discussed below, the process does not work by producing a 3D model, then texturing, animating and rendering it in any traditional sense. Rather, it uses cutting-edge AI to build a believable face for every frame, based on training data and innovative software advances.

As the system needs to work with final ‘on air’ imagery, the head tracking example above shows the markerless face tracking that is key to the process.

Internal Technical Process

The process is fully automated, without the need for artist intervention. Overall it takes a few days to turn a clip around, and it is done in stages. The first internal step is to build a Synthesia head. The whole Synthesia process is based on Machine Learning (ML), and that means training data. As with any such approach, training the Convolutional Neural Networks (CNNs) takes time: training alone could take 12 hours or more. Once the training is done, the actual generation of the final re-animation of the face is close to real time. This is important, as it means a 10 min clip does not take twice as long as a 5 min clip in production. Once the face has been built and the Deep Learning is done for a particular setup, the process scales well.
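To make the scaling point concrete, here is a rough back-of-the-envelope sketch in Python. It is not Synthesia's own costing or scheduling model: the function name `turnaround_hours` and the `realtime_factor` parameter are hypothetical, the 12 hours is the article's lower-bound training figure, and generation is assumed to run at exactly real time.

```python
def turnaround_hours(clip_minutes, training_hours=12.0, realtime_factor=1.0):
    """Estimate compute time as a fixed training cost plus generation time.

    training_hours: one-off cost per face setup (12 h is the article's lower bound).
    realtime_factor: hypothetical ratio of generation time to clip length
                     (1.0 means generation runs at exactly real time).
    """
    generation_hours = clip_minutes * realtime_factor / 60.0
    return training_hours + generation_hours


five = turnaround_hours(5)
ten = turnaround_hours(10)

print(f"5 min clip:  {five:.2f} h")
print(f"10 min clip: {ten:.2f} h")
print(f"ratio: {ten / five:.3f}")
```

Under these assumptions the fixed training cost dominates, so doubling the clip length adds only about five minutes of generation time to a job that already takes more than 12 hours.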

The exact internal technical approach is not published, but the Synthesia process does deploy a Generative Adversarial Network (GAN), using CNN Deep Learning. The system is automated but with professional QA, which means that if the results are not believable to a human viewer, the team can tweak the process and re-run it before delivery. The whole system runs on AWS cloud services, so the company can scale as the work hopefully floods in. For a normal on-air program, the team suggest allowing a week’s turnaround, end to end. Pricing is per minute, and the team are keen to keep the cost affordable and accessible.

What it isn’t…

The new dialogue needs to be done professionally: one cannot provide 20 seconds of dialogue for 10 seconds of original source. For a professional final clip that is respectful to both the talent and the audience, it is important that the cadence and intent of the voice, as well as its approximate timing, are close to the original. It is not in anyone’s interest to have poorly finished material because the tone of the voice does not match the content and the original delivery.

Reenactment for drama.

The process is not quite ready for feature film narrative drama. Most of the focus so far has been on presenters or hosts talking directly to camera, which simplifies the process, as there are fewer occlusions and lighting changes to handle. The team aim to expand into narrative drama, but even just direct ‘reporting’ style ADR replacement is a huge market.

BBC

Below is an example done last week for BBC newsreader Matthew Amroliwala. He only speaks English, but by using the Machine Learning software, he appears to be speaking Spanish, Mandarin and Hindi. The team used their software to replace the original face with a computer-generated version of the presenter’s face, driven by several different actors. Amroliwala was asked to read a script in BBC Click’s film studio, and the same phrases were also read by speakers of the other languages. The Synthesia software then mapped and manipulated Amroliwala’s face to match the other languages.

Face2Face

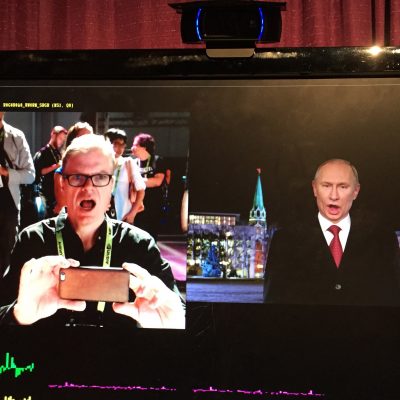

For reference, below is the original Face2Face demo shown in the Emerging Tech Hall at SIGGRAPH 2016. This is not the same technology used by Synthesia, but it shows the origins of the company’s DNA. Below this is fxguide’s Mike Seymour test driving Face2Face on the SIGGRAPH 2016 show floor (filmed on his iPhone!).

Below is the raw footage of Face2Face working at SIGGRAPH, when fxguide first saw this technology in 2016.