visionOS immersive format demands new cinematic language

With the much broader global release of the Apple Vision Pro (AVP) and artists seeking to expand their skills and master this revolutionary new Immersive video medium, understanding the Apple visionOS is rapidly becoming essential knowledge for motion imaging professionals. DOP Ben Allan ACS, a long-time friend of fxguide and contributing guest writer, outlines what you need to know about this new high-resolution format.

Here’s what you need to know…

To begin to understand how these new formats function, it is essential to see that the Apple Vision Pro is a new type of computer rather than simply an interactive display device. While this may seem like a semantic distinction, much of what follows will only make sense in the context of the new concept of “spatial computing”. Calling the Apple Vision Pro a headset or VR device misses many significant things that make it a dramatically different beast from any apparently similar devices that have gone before. Similarly, the differences between spatial and immersive are drastically bigger than they first appear, and Immersive video requires vastly more image data than conventional high-end formats.

What does spatial computing mean?

Spatial runs on a new operating system and relies on a new series of concepts for the user to interface with the computer. My personal take on it is that the experience is at least as different to any existing systems as the mouse & graphical user interface (GUI) based operating system was to command-line computing. Only time will tell how popular and enduring this new concept becomes, but it is impossible even to attempt to properly understand what it means to content creators without understanding the scale of the change it represents.

visionOS tracks the user’s eyes to precisely measure where they look in the full sphere surrounding them. This becomes the equivalent of the pointing device, and tapping your thumb and forefinger together functions as the click. This makes it possible to perform a vast array of functions without any other connected device. These functions can take the form of familiar applications with the difference that they can be positioned anywhere in the 360° space. For example, you could be watching a film while sitting on your sofa and position the film as a virtual big screen directly in front of you but at the same time have your email app positioned off to one side so that you can glance across and check it without turning off the film. You could also have a web browser positioned on the other side showing a link from your front door security camera to keep an eye out for the arrival of a delivery. This makes it possible to keep an eye on various things by glancing across or even in your peripheral vision in a way that is completely different to switching between apps on any conventional computing device.

It is also possible to have native apps running in various parts of the immersive space and simultaneously take the display off a Mac onto a virtual screen inside the Vision Pro, and change its size and position. This is the first thing that strikes me as having potentially huge significance for post-production professionals. The combination of roughly 4K of resolution per eye, a very large colour gamut at 92% of DCI-P3 and the high contrast capacity of the Micro-OLEDs means that these images on the virtual screen are at a level of quality and accuracy I would normally only expect to see on a precisely calibrated reference monitor. Of course, there are no professional reference monitors that can display images at the scale that these virtual displays can, and the capacity to have a very large display, even in a confined space, is something that could be valuable for editors, VFX artists and even colourists among other post pros.

All of this works because the movement tracking system is so seamless. The Vision Pro has forward-facing cameras that “pass-through” your view of your surroundings. This not only allows you to still interact with objects and spaces around you in the real world but also makes it easy and intuitive to maintain your spatial orientation safely. Once you place an app or window in space, it stays there as you move around. Whether your head and body movements are big or small, things in the display stay absolutely rock solid in position in three-dimensional space. For visual work, this makes it possible to perceive all of the fine details that the display can show.

Included in visionOS are immersive environments that allow the user to be fully surrounded by one of a range of spectacular locations, like Yosemite National Park or the Moon. Apps, videos, or photos can then be placed around the user within that environment just as they would be in the real-world surroundings seen through the Vision Pro’s pass-through cameras. Because the movement tracking is so fast and accurate, the sensation of being there in a real location is surprisingly compelling.

The Vision Pro’s status as a spatial computer rather than just a VR display device also means that there are a wide variety of ways to natively display, distribute, and consume motion image content.

Spatial video

The built-in photos app is similar to the Mac, iPhone & iPad versions and can display photos and videos in both small windows and large virtual displays. Existing panoramic photos from iPhones can be displayed in an immersive format where they curve around the viewer and into the horizontal peripheral vision. This is a very different and compelling way to view panoramic photos where they effectively place you in the space where they were taken and make it possible to see both details and the big picture to an extent that displaying them on any conventional display can’t emulate. It also allows the viewer to look around and see the details in every part of the panoramic shot. There are some factors in how these panoramas are experienced that become very significant to the challenges and opportunities in the moving image capacities of the visionOS.

The Photos app also allows for the playback of spatial videos, which can be captured on the iPhone 15 Pro, 15 Pro Max, and the Vision Pro itself. fxguide.com has already published some in-depth content about the different ways currently available to capture and process spatial video, along with some analysis of their results.

Whatever way images are captured and processed, spatial video is a specific implementation of stereoscopic 3D at 1080p per eye in SDR and at 30 fps. The viewing experience is within a frame, and the stability of the camera determines the extent to which the image can be enlarged partially into the peripheral vision space. This limitation is because of the very real risk of spatial disorientation from contradictory signals between the visual cues and the human body’s other spatial senses, most significantly the inner ear sensations. The visual system is the dominant sense when it comes to spatial awareness, and any conflicting inputs against the other senses can cause dramatic unsteadiness and nausea, to the extent of falling over even when sitting down, and even vomiting.

Because of its risks in aviation, spatial disorientation is a well-researched and understood human factors issue. Apple has clearly drawn on this body of research and is taking a sensibly careful and conservative approach to the challenge.

One key element to understand about our visual-spatial sense is the difference between central and peripheral vision, especially concerning spatial disorientation. Our peripheral vision is much more sensitive to movement, and modern research has revealed many dramatic differences between peripheral and central vision.

The central part of our field of view (FOV), where we see full detail, is surprisingly (and somewhat counter-intuitively) narrow. The part of our eyes that can actually see what we would understand as the equivalent of “full-resolution” is a very narrow 5°. Between 5° and 18° is a transitional zone, and beyond 18° is into the near peripheral vision.
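To get a feel for how narrow those angles are, here is a rough sketch that converts a visual angle into the width it covers at a given viewing distance, using w = 2 × d × tan(θ / 2). It assumes the 5° and 18° figures are full angular widths centred on the point of gaze, and the 60 cm viewing distance is simply an illustrative value, not something from the research.

```python
import math


def width_at_distance(angle_degrees: float, distance: float) -> float:
    """Width covered by a visual angle at a given distance (same units as distance)."""
    return 2 * distance * math.tan(math.radians(angle_degrees) / 2)


# Assumption: a typical desktop viewing distance of 60 cm (illustrative only).
VIEWING_DISTANCE_CM = 60

full_detail_cm = width_at_distance(5, VIEWING_DISTANCE_CM)
transition_cm = width_at_distance(18, VIEWING_DISTANCE_CM)

print(f"Full-detail zone (5 degrees): {full_detail_cm:.1f} cm wide")
print(f"Edge of transitional zone (18 degrees): {transition_cm:.1f} cm wide")
```

Under those assumptions, the full-detail zone covers only about 5 cm of a screen 60 cm away, and the transitional zone ends at roughly 19 cm across.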

You need some jelly beans

One aspect of peripheral vision that reveals a great deal about how we as a species “see” the world is colour. Between 20° and 40° FOV, our ability to perceive colour drops away dramatically. Now, at this point, you’re probably looking around whatever space you’re in while you read this and feeling first-hand that this is untrue as you see colours all around your field of view. But there is a very simple experiment you can do to test this yourself. Take a bag of jelly beans, and without looking, take one in each hand and, while staring straight ahead, hold them level with your ears at a comfortable distance. Then, in a gentle arc, bring them forward and around without taking your gaze away from a point straight ahead, and take note of how far towards the centre of your field of view they need to get before you can identify their colour. Most people will be able to see the strong colours somewhere around 30° from the centre. And yet we feel like we see colour all around us, so what is happening?

The answer can be partly illustrated by reversing this same experiment. Start with two jelly beans directly in front and slowly move them away from each other and into your peripheral vision. What you will see is that you can perceive their colour far beyond the point where it became visible when you didn’t already know what it was. Our sensation of colour in peripheral vision is primarily a memory of the colours when we have seen them by looking directly at them. This is true of many aspects of peripheral vision. A researcher at the University of Amsterdam, Marte Otten, said in 2016 that the results of recent research she led revealed that “under the right circumstances, a large part of the periphery may become a visual illusion”.

Importantly, our peripheral vision is a major part of our sense of balance and our spatial awareness. The sensitivity of our peripheral vision to movement is critical to our brain’s ability to identify when there are changes to our spatial circumstances. Because of this, the perception of spatial orientation in our peripheral vision is far more significant than in the central vision, and the impact of conflict between that perception and the inputs from our other spatial senses is particularly hazardous in its potential to cause balance and nausea problems.

This is of minimal risk with immersive viewing of panoramic photos for two very significant reasons. First, they are still images and, therefore, present zero movement in the peripheral field to conflict with your perception of balance. Secondly, because they only fill the peripheral field on the horizontal axis, the viewer still has the orientation visual input above and below the image. This is equally effective whether it is the real world or one of the immersive environments.

The restrictions of spatial video playback are both a limitation of the 1080p resolution and also a safety feature because of the biological limitations of human beings. When an image with unstable camera movement is presented without filling our periphery, the potential for severe disorientation is limited.

Professional content

While it can be used to preview content to a certain extent, the Photos app is primarily designed for viewing “homemade” content, and professional content is likely to be consumed via other applications.

Continuing the pattern of built-in apps in common with Apple’s other operating systems, visionOS includes an Apple TV app that can be used for buying, renting and viewing commercially released film and TV content. For films and shows in traditional formats, this allows the viewer to watch on a virtual screen which can be small, enormous or anything in between. The colour and contrast accuracy and overall picture quality are nothing short of stunning, and HDR content looks superb. But it is stereoscopic 3D films that benefit the most. To be able to see a film like Avatar in 3D, which is just as bright, sharp and colour-accurate as a 2D film, is a new experience.

The fact that the Vision Pro is a computer with its own operating system means that it is also possible to develop apps to create viewing experiences for specific types of specialist content. The first standout example of this is the IMAX app, which will play IMAX films in their full 1.43:1 aspect ratio at sizes up to the equivalent of the largest IMAX theatre screens in the world, around 100 feet across.

This is a great use of the Vision Pro’s display flexibility because the square-ish IMAX frame is unavoidably pillar-boxed on modern widescreen TVs, limiting the capacity to fully appreciate images that have generally been captured to be seen larger rather than smaller than conventional films.

IMAX is actually a good point of reference for many of the issues around immersive video experiences because a traditional IMAX theatre experience involves the screen reaching well into the audience’s peripheral vision. Because of this, there are many rules of thumb around shooting and editing IMAX content, which become even more relevant for what is arguably the ultimate form of motion imaging for the Vision Pro environment… Apple’s new Immersive video format.

Immersive video

At first glance, the spatial and immersive formats sound like slight variations on the same thing: a stereoscopic 3D video format designed specifically for viewing in the Apple Vision Pro. The reality is that these are drastically different formats for drastically different uses.

Like immersive Environments, the Immersive video format is designed to envelop the viewer in the experience. It virtually fills the peripheral vision both horizontally and vertically, and because of the clarity of the images, the feeling of watching full Immersive video in Vision Pro is dramatically more vivid and realistic than any other motion picture or VR experience I have encountered.

In the Apple TV app on Vision Pro, there is a category for immersive video content, and there are several demo projects available, as well as a demo reel with various impressive clips.

The Immersive format is clearly intended to be used for live-action content in a range of different styles and genres and for professionally produced content. Apple hasn’t made any statements about how they have been shooting this demo footage, but they clearly have some camera system working to capture footage in the format, and the quality of the footage is consistently superb. You can look around the scene and focus your attention on different areas easily, and the overall sense of being there is quite comprehensive and compelling.

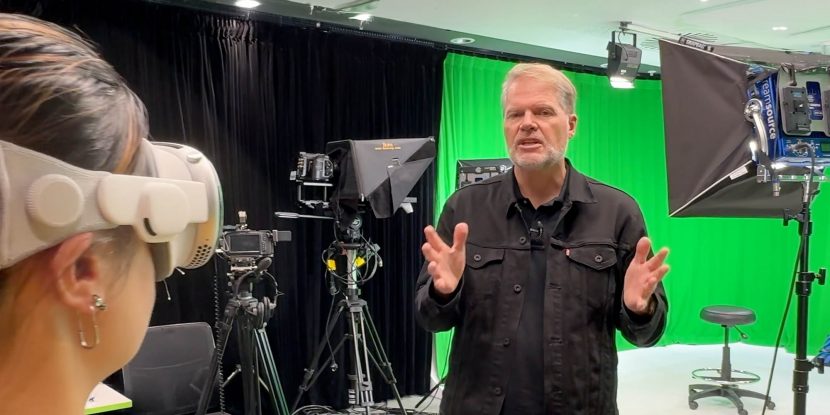

It is apparent that the intention is for the industry to start creating high-end content for the Immersive format, and the announcement at Apple’s WWDC conference that they have partnered with Blackmagic Design to develop an Immersive camera system and post-production workflow confirms it. The partnership is logical for a number of reasons, not least of which is that BMD has the capacity to deliver a cohesive camera and post ecosystem for the new format.

The URSA Cine Immersive camera

Only some details of the new Blackmagic URSA Cine Immersive camera have been revealed but it is possible to infer a bit more detail from what has been said. The design of the camera is based on the new URSA Cine platform. One thing BMD does very effectively is create hardware designs that can be applied to many different product variations. They do this across a lot of different product areas but an easy example is the URSA Mini, which has resulted in the Mini 4K, Mini 4.6K, Mini Pro 4.6K, Mini Pro 4.6K G2 and 12K and 12K OLPF variations in the same basic design, to the extent that the same accessories are generally useable across all of these variants. The URSA Cine is clearly designed to be a new, high-end application of this philosophy with the full-frame 12K and 65mm sized 17K versions already announced.

One thing that makes this a perfect platform for an Immersive camera is the new media module, which is a very high-capacity and very high-bandwidth alternative to traditional memory cards.

The amount of data involved illustrates how significant this is. A spatial video in 1080p stereoscopic at 30 fps requires 124,416,000 pixels per second. In contrast, the Immersive format in 8160 x 7200 stereoscopic at 90 fps will require 10,575,360,000 pixels per second, or 85 times as much data as spatial video and roughly 50 times as much as 4K cinema.
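For anyone who wants to check the arithmetic, the figures can be reproduced with a few lines of Python. This is only a sketch of the pixel-rate comparison: it counts raw pixels per second and says nothing about bit depth or compression, and the 4K cinema reference is my assumption of DCI 4K (4096 x 2160) in 2D at 24 fps.

```python
def pixels_per_second(width: int, height: int, fps: int, eyes: int = 1) -> int:
    """Raw pixel throughput: pixels per frame, times eyes, times frames per second."""
    return width * height * eyes * fps


# Spatial video: 1080p per eye, stereoscopic, 30 fps.
spatial = pixels_per_second(1920, 1080, 30, eyes=2)

# Immersive video: 8160 x 7200 per eye, stereoscopic, 90 fps.
immersive = pixels_per_second(8160, 7200, 90, eyes=2)

# Assumption: "4K cinema" taken as DCI 4K, single image, 24 fps.
cinema_4k = pixels_per_second(4096, 2160, 24)

print(f"Spatial:   {spatial:,} pixels/s")
print(f"Immersive: {immersive:,} pixels/s")
print(f"Immersive vs spatial:   {immersive / spatial:.0f}x")
print(f"Immersive vs 4K cinema: {immersive / cinema_4k:.1f}x")
```

With those inputs the ratios come out at 85x for spatial video and just under 50x for 4K cinema.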

One saving grace is that it will also use a new version of the Blackmagic RAW codec, which is a high-quality and very efficient codec for high-resolution footage. Even so, we should expect the Immersive camera to be a very data-hungry beast.

In Apple’s recent WWDC presentation, they mentioned both DaVinci Resolve and Compressor as part of the post path for the Immersive content. This suggests that Resolve will master the Immersive video in the 8160 x 7200 format, and then Compressor will package that into a file playable in visionOS. One other question is whether there may be some safety features included in the mastering or packaging process to prevent, or at least flag, content with the spatial disorientation issues discussed earlier.

The practicalities of working with the camera will also create some interesting challenges. Eight years ago, I shot, produced and managed the post-production of a special venue drama film for Sydney’s Taronga Zoo. The film was created specifically for a one-of-a-kind cinema within the zoo, which has a screen approximately the width of an IMAX screen but is curved around the audience in a 5:1 aspect ratio. This created an experience that was vertically similar to a large cinema screen but horizontally immersive into the full peripheral vision. For that project, we created a three-camera array to capture the immersive perspective. We used 3 BMD Micro Cinema Cameras with 10mm SLR Magic Prime lenses. The URSA Cine Immersive appears to have two image sensors that are roughly full-frame sized, which would require something like an 8mm lens to achieve the 180° perspective.
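The 8mm estimate can be sanity-checked with a rough calculation. This sketch assumes an idealised equidistant fisheye projection (image radius = focal length × angle from the optical axis, in radians) and a 24mm image circle, roughly the height of a full-frame sensor. Neither assumption comes from Blackmagic, and the real lens design may well differ.

```python
import math


def equidistant_focal_length_mm(image_circle_mm: float, fov_degrees: float) -> float:
    """Focal length at which an ideal equidistant fisheye fits fov_degrees
    into an image circle of the given diameter (r = f * theta)."""
    half_angle_rad = math.radians(fov_degrees / 2)
    radius_mm = image_circle_mm / 2
    return radius_mm / half_angle_rad


# Assumptions: 24 mm image circle (full-frame sensor height), 180 degree view.
focal_length = equidistant_focal_length_mm(24.0, 180.0)
print(f"Approximate focal length: {focal_length:.1f} mm")
```

Under those assumptions the result is about 7.6mm, which is in line with the "something like 8mm" figure.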

Shooting immersive

The first reality of shooting such a wide angle of view is that it is not possible to use a matte box because of both the angle of view and the fact that it would become visible to the other cameras. We were able to use filters directly on the lenses but had to match these precisely. Although the URSA Cine Immersive gets the 180° angle of view with a single lens, the fact that it is stereoscopic and has a lens per eye means the same problem applies, with a matte box being in the other lens’s shot. Because these lenses cover a larger image than the Super 16mm sized micro cameras we used, the amount of curvature visible on the front element would make it very difficult, if not impossible, to use filters directly on the lens. Hopefully, it will have ND filters behind the lens because that may well be the only way to control light levels other than the aperture of the lenses, which is another issue. There appear to be no direct manual controls on the lenses, which makes sense because the left and right eye lenses will need to be adjusted for focus and exposure in perfect unison to maintain the 3D image, so this will be most effectively done via electronic controls.

Operating will be quite specialised because basically everything in front of the lenses will be in shot. This will also mean that things like boom microphones and backlights will become virtually impossible to keep out of shot while still doing anything meaningful.

Not only will everything for most of the space be visible in establishing shots, but because close-ups will be achieved by moving the camera closer to the subject, the background will remain effectively a wide shot. Between two opposing close-ups, the entire space of the location will almost unavoidably be visible. This means that clearing things like lights, crew, etc, out of shots will be tricky and potentially time-consuming.

The integrated lenses and fixed field of view mean that the usual way of thinking about lens choice no longer applies and zooming becomes impossible, and it will be interesting to see how much it is possible or even desirable to create a shallow depth of field. In the currently available demo content, one thing that did strike me is that having such clear stereoscopic images does give a sense of depth that really matches the real-world experience of depth perception in a way that is completely different from how we use depth of field to create a sense of depth in a conventional film.

This also highlights how much will need to be learned about this new format. For one thing, how will we think about shots and composition without a frame? While there are edges to the immersive image, it is impossible to see the frame in the way that we do with all conventional formats. As an industry, we have over a hundred years of experience in putting moving images in rectangular boxes. Without that box, much of the visual language of film will have to be rethought and adapted to spaces rather than frames.

Because the experience feels so real, how the viewer will relate to personal space, both their own and that of the people in the scene, could be both a challenge and a new creative tool.

Set construction will have to default to four-wall and full-ceiling designs for even the most basic of studio interiors. Virtual production with LED volumes should work well, except that hardly any of the existing designs feature the full ceiling and 360° coverage that would be required to get the same level of flexibility as current virtual production studio designs achieve in conventional formats. A full virtual production studio for use with the Immersive camera would require something more like the Las Vegas Sphere than the LED volumes that have become common. Of course, it would be possible to successfully build a four-walled set with a full ceiling within a conventional LED volume and use the volume for the views out of the windows.

Monitoring is also a big question, which I’m sure will take much time and effort to solve. What will the conventional screens on the camera be showing? Will it be the full 180° image flattened onto a 2D flat screen? Will it be possible to monitor in 3D? What will feed out to monitors for the director, continuity etc. where both detail and the full shot are critical? Will it be possible to switch between a full view and a zoomed detail view?

Of course, if it is possible to monitor directly in a Vision Pro headset, that would solve many of these issues. But how that would function, how many headsets could be fed a live feed from the camera in real time, and what sort of safety protocols would need to be in place on set are all flow-on questions, if this is even possible. Not only that, but it will be impossible to check the entire image at once while viewing in the Vision Pro, so it will not be effective in monitoring everything that is being captured in a shot.

Another big question is how practical it will be to shoot on the URSA Cine Immersive and then cut out frames for conventional formats from this source footage to deliver for conventional cinema and TV screens or even for IMAX.

Will it be possible or even desirable to have different frame markings to work to, or would it actually work better to simply consider the fact that a sort of roving frame will need to be cropped from the full immersive?

Starting to think through the complexities of these sorts of questions highlights how new and complex this format really is. While the elements of high resolution, 3D, and extreme fields of view each have their own challenges, the combination of factors and elements can be exponentially more complex than the sum of their parts.

With all of that in mind, though, it is an incredibly exciting new technology that provides us as industry professionals with a new creative frontier to explore, new ways of visually telling stories, and genuinely new experiences for the audience.