NVIDIA’s recent research breakthrough, DiffusionRenderer, presented at CVPR (one of the world’s premier AI conferences), represents a major advancement in neural graphics. The technique combines inverse and forward rendering in a single framework, achieving exceptional precision and realism. Built on advanced video diffusion models, DiffusionRenderer enables relighting, material editing, and seamless virtual object insertion directly from real-world video footage.

Sanja Fidler, Vice President of AI Research at NVIDIA, traces the project back to a casual but pivotal conversation with NVIDIA CEO Jensen Huang at SIGGRAPH 2019. Inspired by Huang’s forward-looking questions about what neural graphics might make possible, including relighting, Fidler embarked on the research that culminated in DiffusionRenderer.

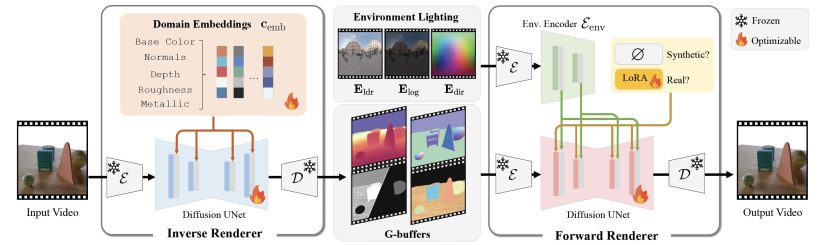

The core of DiffusionRenderer lies in its dual neural architecture. First, it tackles inverse rendering by estimating key geometry and material properties, such as depth, surface normals, albedo, metallic, and roughness parameters, directly from real-world videos. This process strips away the existing lighting, producing per-pixel attribute maps known as G-buffers (geometry buffers) that serve as the input for realistic re-rendering.
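As a rough illustration of what a G-buffer holds, here is a minimal per-pixel record, assuming one texel per pixel carrying the five attributes listed above. The class name `GBufferTexel`, the value ranges and the 9-channel packing order are hypothetical choices for this sketch, not DiffusionRenderer's actual format.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class GBufferTexel:
    """One pixel of a hypothetical G-buffer layout.

    There is deliberately no lighting or shading field: inverse rendering
    aims to factor the illumination out of the captured footage.
    """
    depth: float                          # distance from the camera, must be > 0
    normal: tuple[float, float, float]    # unit-length surface normal
    albedo: tuple[float, float, float]    # base colour, RGB in [0, 1]
    metallic: float                       # 0 = dielectric, 1 = metal
    roughness: float                      # 0 = mirror-like, 1 = fully rough

    def __post_init__(self) -> None:
        # Reject values outside the ranges assumed in this sketch.
        if self.depth <= 0:
            raise ValueError("depth must be positive")
        if abs(math.hypot(*self.normal) - 1.0) > 1e-3:
            raise ValueError("normal must be unit length")
        for value in (*self.albedo, self.metallic, self.roughness):
            if not 0.0 <= value <= 1.0:
                raise ValueError("albedo, metallic and roughness must lie in [0, 1]")

    def to_channels(self) -> list[float]:
        """Pack into 9 channels: depth (1), normal (3), albedo (3), metallic (1), roughness (1)."""
        return [self.depth, *self.normal, *self.albedo, self.metallic, self.roughness]
```

A video's G-buffers would then be a T × H × W grid of such texels. Because nothing in the record describes the original illumination, a renderer is free to light it anew.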

Subsequently, the forward rendering model generates photorealistic visuals from these G-buffers, employing neural approximations of complex light transport phenomena such as shadows, reflections, and inter-reflections. This approach eliminates reliance on precise 3D scene representations and computationally expensive path tracing.
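To make the idea of rendering from G-buffers concrete, the sketch below shows the classical, hand-written counterpart that a neural forward renderer replaces: shading a single texel with Lambert's cosine law under one directional light. This is not DiffusionRenderer's method. The function `shade_lambert` and its parameters are hypothetical, the Lambertian 1/π factor is assumed to be folded into the light colour, and shadows and inter-reflections, which the neural renderer approximates, are deliberately absent.

```python
import math


def shade_lambert(albedo, normal, metallic, light_dir, light_rgb, ambient=0.0):
    """Classical (non-neural) diffuse shading of one G-buffer texel.

    albedo, normal, light_rgb: 3-tuples; normal is assumed unit length.
    light_dir: direction from the surface towards the light (any length).
    ambient: uniform fill term added to every channel.
    No shadows or inter-reflections are modelled.
    """
    # Normalise the light direction.
    length = math.sqrt(sum(c * c for c in light_dir))
    l = tuple(c / length for c in light_dir)
    # Lambert's cosine term, clamped so light from behind contributes nothing.
    cos_theta = max(0.0, sum(n * c for n, c in zip(normal, l)))
    # Metals have (almost) no diffuse lobe, so scale diffuse by (1 - metallic).
    diffuse_weight = 1.0 - metallic
    return tuple(
        a * diffuse_weight * (lc * cos_theta + ambient)
        for a, lc in zip(albedo, light_rgb)
    )
```

Every effect missing here (cast shadows, glossy reflections, light bouncing between surfaces) would normally require explicit scene geometry and ray or path tracing, which is the cost the article says the neural approach avoids.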

The neural inverse renderer generates one scene attribute at a time, while the neural forward renderer produces photorealistic images given lighting information together with the geometry and material buffers. The lighting is injected into the base video diffusion model through cross-attention layers.
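Cross-attention is the standard Transformer mechanism for conditioning one set of tokens on another. The following is a minimal sketch, assuming single-head scaled dot-product attention in which queries come from video latent tokens and keys and values come from lighting tokens. The token widths, the weight matrices `w_q`, `w_k`, `w_v` and the residual add are illustrative assumptions; the article does not describe how DiffusionRenderer encodes lighting.

```python
import math


def matvec(w, x):
    """Multiply matrix w (a list of rows) by vector x."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(video_tokens, lighting_tokens, w_q, w_k, w_v):
    """Scaled dot-product cross-attention.

    Queries are projected from the video tokens; keys and values are
    projected from the lighting tokens. The attended lighting features
    are added back to each video token as a residual, which assumes
    w_v maps to the same width as the video tokens.
    """
    keys = [matvec(w_k, t) for t in lighting_tokens]
    values = [matvec(w_v, t) for t in lighting_tokens]
    scale = 1.0 / math.sqrt(len(keys[0]))
    out = []
    for token in video_tokens:
        q = matvec(w_q, token)
        weights = softmax([scale * sum(a * b for a, b in zip(q, k)) for k in keys])
        attended = [
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ]
        out.append([x + a for x, a in zip(token, attended)])
    return out
```

Because the softmax weights sum to one, each video token receives a weighted blend of the lighting features. With a single lighting token, every video token simply receives that token's (projected) features.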

Fidler emphasises that DiffusionRenderer is powered by NVIDIA’s Cosmos World Foundation model, a neural network trained extensively to understand and simulate physical laws. This gives DiffusionRenderer a robust foundation, enabling exceptionally realistic results even when relighting complex scenes captured in real-world videos.

In practice, DiffusionRenderer lets users relight entire scenes, changing the illumination environment while preserving the content of the original video. Fidler highlights the model’s ability to accurately remove directional lighting and reintroduce new environment illumination. She notes that although outputs are currently limited to roughly 1K resolution and standard dynamic range, the architecture inherently supports scaling to higher resolutions and high dynamic range imaging, which points to further developments from NVIDIA for future applications, especially in film, television, and advanced visualisation.
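The relighting workflow can be outlined as a two-stage function: decompose the video into G-buffers, then render those buffers under new lighting. This is a sketch under stated assumptions: `relight`, `inverse_model` and `forward_model` are hypothetical names, the two neural renderers are passed in as plain callables, and the one-attribute-per-call loop mirrors the article's description of the inverse renderer rather than any published API.

```python
from typing import Any, Callable, Dict

# Attribute names follow the article; their order here is arbitrary.
ATTRIBUTES = ("depth", "normal", "albedo", "metallic", "roughness")


def relight(
    video: Any,
    new_lighting: Any,
    inverse_model: Callable[[Any, str], Any],
    forward_model: Callable[[Dict[str, Any], Any], Any],
) -> Any:
    """Relight a video by decomposing it into G-buffers and re-rendering.

    inverse_model(video, attribute) -> one G-buffer (one attribute per call)
    forward_model(gbuffers, lighting) -> rendered video
    Both callables are hypothetical stand-ins for the neural renderers.
    """
    # Inverse pass: estimate each scene attribute separately.
    gbuffers = {name: inverse_model(video, name) for name in ATTRIBUTES}
    # Forward pass: only the G-buffers and the new lighting are passed on,
    # so the original illumination does not reach the forward renderer.
    return forward_model(gbuffers, new_lighting)


if __name__ == "__main__":
    # Toy stand-ins that just record what they were given.
    def fake_inverse(video, name):
        return f"{name}({video})"

    def fake_forward(gbuffers, lighting):
        return sorted(gbuffers), lighting

    print(relight("clip", "sunset_envmap", fake_inverse, fake_forward))
```

Keeping the two stages as separate callables reflects the article's point that the same decomposition can be reused: the inverse pass runs once, and the forward pass can be repeated with as many lighting environments as needed.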

Beyond relighting, DiffusionRenderer offers significant creative flexibility, including realistic material editing and seamless virtual object insertion, with digital elements blending naturally into captured scenes. Together, these capabilities point to a new era in visual effects and computational photography.
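Because editing happens on G-buffers rather than on finished pixels, material edits and object insertion reduce to buffer manipulation before the forward pass. The sketch assumes texels stored as plain dictionaries of scalar attributes and an inserted object whose G-buffers are already available. `edit_material` and `insert_object` are hypothetical helpers, and the per-texel depth test is the conventional compositing approach rather than a documented detail of DiffusionRenderer. In a pipeline like the one described, the merged buffers would then be handed to the forward renderer, which is responsible for producing consistent shadows and reflections.

```python
from typing import Dict, List, Optional

# Hypothetical texel layout: attribute name -> scalar value.
Texel = Dict[str, float]


def edit_material(gbuffer: List[Texel], mask: List[bool], **overrides: float) -> List[Texel]:
    """Return a copy of the G-buffer with attributes overridden where mask is True."""
    return [{**t, **overrides} if m else dict(t) for t, m in zip(gbuffer, mask)]


def insert_object(scene: List[Texel], obj: List[Optional[Texel]]) -> List[Texel]:
    """Merge an object's G-buffer into the scene's with a per-texel depth test.

    obj[i] is None wherever the object does not cover texel i.
    """
    merged = []
    for s, o in zip(scene, obj):
        # Keep whichever surface is nearer the camera (smaller depth).
        merged.append(dict(o) if o is not None and o["depth"] < s["depth"] else dict(s))
    return merged


if __name__ == "__main__":
    scene = [{"depth": 5.0, "roughness": 0.9}, {"depth": 5.0, "roughness": 0.9}]
    # Make the first texel glossier without touching the second.
    print(edit_material(scene, [True, False], roughness=0.1))
    # Insert an object that covers only the first texel, in front of the scene.
    print(insert_object(scene, [{"depth": 2.0, "roughness": 0.3}, None]))
```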

As NVIDIA continues to enhance Cosmos and expand data-driven neural rendering techniques, Fidler remains optimistic about scaling resolution and dynamic range capabilities in the near term, which would be critical for many film and high-end TV/streaming pipelines. DiffusionRenderer is another example of NVIDIA’s commitment to tackling challenging problems in AI and graphics, transforming key ideas into practical innovations for use in our industry.