Vincent Brisebois, Senior Technical Marketing Manager at NVIDIA, has released a fun new prototype in which NVIDIA’s GauGAN environment generator feeds a scene rendered in real time in Omniverse. As the artist roughly sketches the landscape they want, the Machine Learning (ML) system produces a plausible photoreal landscape that updates live in the Omniverse render, including reflections. While both technologies have been seen before, this is another great example of the interactivity possible with ML and NVIDIA’s RTX ray tracing. In addition to the landscape ML, the renderer in the RTX pipeline uses ML denoising to allow near-real-time ray-traced rendering of the final shot.

GauGAN was a research project, whereas Omniverse is a much larger software undertaking that allows interchangeability between applications and real-time engines, all built around Pixar’s Universal Scene Description (USD). See below for background on both projects.

GauGAN

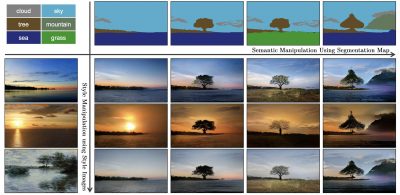

GauGAN, named after post-Impressionist painter Paul Gauguin, creates photorealistic images from segmentation maps, which are labeled sketches that depict the layout of a scene. The program works via a deep learning model developed by NVIDIA Research which turns rough doodles into highly realistic scenes using generative adversarial networks (GANs).
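To make the idea of a segmentation map concrete: it is simply a grid in which every pixel holds a class label. Conditional image-synthesis networks of this kind typically consume it as one binary mask per class (a one-hot encoding). The sketch below shows that conversion in plain Python; the label names and IDs are hypothetical and do not reflect GauGAN's actual label set.

```python
# Hypothetical label IDs for illustration only; GauGAN's real label set differs.
LABELS = {"sky": 0, "cloud": 1, "rock": 2, "river": 3}


def one_hot(seg_map, num_classes):
    """Turn an H x W grid of integer class IDs into num_classes binary H x W masks."""
    height, width = len(seg_map), len(seg_map[0])
    masks = [[[0] * width for _ in range(height)] for _ in range(num_classes)]
    for y, row in enumerate(seg_map):
        for x, label in enumerate(row):
            masks[label][y][x] = 1  # exactly one mask is "on" at each pixel
    return masks


sky, cloud, rock, river = (LABELS[k] for k in ("sky", "cloud", "rock", "river"))

# A tiny 3 x 4 "doodle": sky with a cloud on top, rock in the middle, river below.
doodle = [
    [sky, cloud, cloud, sky],
    [rock, rock, sky, sky],
    [river, river, river, rock],
]
masks = one_hot(doodle, len(LABELS))
```

A real sketch would be hundreds of pixels on a side, but the structure is the same: one mask per label, stacked as input channels.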

The semantic segmentation feature is powered by a PyTorch implementation of DeepLab v2. Despite lacking any understanding of the physical world, GANs can produce convincing results because of their structure as a pair of networks trained against each other: a generator and a discriminator. The generator creates images that it presents to the discriminator. Trained on real images, the discriminator coaches the generator with pixel-by-pixel feedback on how to improve the realism of its synthetic images. GauGAN is based on the 2019 paper Semantic Image Synthesis with Spatially-Adaptive Normalization by Taesung Park, Ming-Yu Liu, Ting-Chun Wang, and Jun-Yan Zhu.
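The key idea of that paper, spatially-adaptive normalization (SPADE), is that after normalizing a layer's activations, the scale (gamma) and shift (beta) are not single learned numbers but vary per pixel, as a function of the segmentation map. The sketch below is a heavily simplified illustration, assuming one image and one feature channel, with gamma and beta looked up per label. That per-label lookup is what a 1x1 convolution over a one-hot mask would compute; the paper instead uses small convolutional networks and normalizes across the batch, so treat this as a sketch of the principle, not the authors' implementation.

```python
import math


def spade_normalize(features, seg_map, gamma_per_label, beta_per_label, eps=1e-5):
    """Simplified SPADE for one image and one feature channel.

    features: H x W activations; seg_map: H x W integer labels.
    The activations are normalized to zero mean and unit variance, then each
    pixel is rescaled and shifted using parameters chosen by its label.
    """
    values = [v for row in features for v in row]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    std = math.sqrt(var + eps)
    return [
        [
            gamma_per_label[label] * (v - mean) / std + beta_per_label[label]
            for v, label in zip(feat_row, seg_row)
        ]
        for feat_row, seg_row in zip(features, seg_map)
    ]


# Usage: identical activations receive different treatment depending on the label.
features = [[1.0, 3.0], [1.0, 3.0]]
seg_map = [[0, 1], [0, 1]]
out = spade_normalize(features, seg_map, {0: 1.0, 1: 2.0}, {0: 0.0, 1: 10.0})
```

Because the modulation is re-injected from the segmentation map at every layer, the layout the artist paints is not "washed out" by normalization as the generator goes deeper, which is the motivation the paper gives for the design.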

Artists can use paintbrush and paint bucket tools to design their own landscapes with labels like river, rock and cloud. A style transfer algorithm allows creators to apply filters, changing a daytime scene to sunset, or an image to a painting. Users can even upload their own filters, or upload custom segmentation maps and landscapes as a foundation for their artwork.
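On a segmentation map, a paint bucket tool is essentially a flood fill: it relabels the connected region of same-labeled pixels under the cursor. The following is a minimal sketch, assuming 4-connectivity and integer labels (the function name and label values are hypothetical); GauGAN's own tool may be implemented differently.

```python
from collections import deque


def paint_bucket(seg_map, x, y, new_label):
    """Flood-fill the 4-connected region containing (x, y) with new_label, in place."""
    old_label = seg_map[y][x]
    if old_label == new_label:
        return seg_map  # nothing to do; also avoids an infinite refill
    height, width = len(seg_map), len(seg_map[0])
    queue = deque([(x, y)])
    seg_map[y][x] = new_label
    while queue:
        cx, cy = queue.popleft()
        for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
            if 0 <= nx < width and 0 <= ny < height and seg_map[ny][nx] == old_label:
                seg_map[ny][nx] = new_label
                queue.append((nx, ny))
    return seg_map


# Hypothetical labels: 0 = sky, 2 = rock, 3 = river.
doodle = [
    [0, 0, 2],
    [2, 2, 2],
    [0, 0, 0],
]
result = paint_bucket(doodle, 0, 2, 3)  # turn the bottom strip of "sky" into river
```

Only the bottom strip changes: the sky region in the top row is walled off by the row of rock, so the fill never reaches it.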

GauGAN won SIGGRAPH 2019 Real-Time Live for Taesung Park (a Ph.D. student at UC Berkeley) and NVIDIA’s Chris Hebert and Gavriil Klimov.

You can try GauGAN and other interesting AI tools here.

Omniverse

Omniverse is a new platform developed by NVIDIA for sharing scenes and models between different editors and viewers. Ray tracing is used to accurately visualize content within the Omniverse Kit viewer. Omniverse is a powerful collaboration platform and database for 3D production pipelines based on USD and NVIDIA’s RTX. Developers of 3D applications and game engines can build portals that connect their products to Omniverse, so artists can seamlessly share assets with other industry-standard applications. The Omniverse viewer can also be integrated into an application’s interface, adding an ultra-high-quality, real-time ray-traced viewport.

As the GauGAN demo above shows, Omniverse provides live updating of assets, allowing full round-tripping between programs such as Maya and engines such as UE4. Omniverse is a way for NVIDIA to connect all of its rendering, physics, simulation and AI technologies to a core database that represents an artist’s virtual world. The database contains all the 3D world information that is common to the tools connected to it. It has a web-style API through which programs can query that 3D world, while every connected tool stays synced. For example, if Maya is connected, any change made in Maya is reflected in the Omniverse database and can be seen instantly in the Omniverse ray-traced viewer. This allows both fast visualization and collaboration with, say, UE4, which can be connected to the same Omniverse scene file at the same time. But as the demo also shows, the connected apps could just as well be experimental tools or Photoshop: Omniverse is application-agnostic, and it is all based on USD.

Only the USD version of the scene lives inside Omniverse, which maintains a mirror of the scene in USD. Omniverse is key to NVIDIA’s approach because, while there is almost universal agreement that USD is a good idea, each company’s implementation can differ slightly or cover only a subset of what is possible. Omniverse tries to solve that problem by standardizing its database internally and providing constant, live interaction with that database.
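The live-sync pattern described above (one shared scene store, many connected tools, every edit fanned out to everyone) can be illustrated with a toy publish/subscribe model. This is a hypothetical sketch, not the Omniverse API: the class names, the `/World/Terrain` path and the in-process callbacks are all assumptions for illustration. Only the idea of addressing prims by slash-separated paths and the `displayColor` attribute name are borrowed from USD.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# (prim path, attribute name, new value)
Change = Tuple[str, str, object]


@dataclass
class SceneDatabase:
    """Toy stand-in for a shared scene store: prims keyed by USD-style paths."""
    prims: Dict[str, Dict[str, object]] = field(default_factory=dict)
    subscribers: List[Callable[[Change], None]] = field(default_factory=list, repr=False)

    def subscribe(self, callback: Callable[[Change], None]) -> None:
        self.subscribers.append(callback)

    def set_attribute(self, path: str, name: str, value: object) -> None:
        # Store the change, then notify every connected tool.
        self.prims.setdefault(path, {})[name] = value
        for notify in self.subscribers:
            notify((path, name, value))


@dataclass
class ConnectedTool:
    """A connected app (DCC, engine or viewer) keeping a local mirror of the scene."""
    name: str
    db: SceneDatabase
    mirror: Dict[str, Dict[str, object]] = field(default_factory=dict)

    def __post_init__(self) -> None:
        self.db.subscribe(self.on_change)

    def on_change(self, change: Change) -> None:
        path, attr, value = change
        self.mirror.setdefault(path, {})[attr] = value

    def edit(self, path: str, attr: str, value: object) -> None:
        # Edits go to the shared database, never directly to other tools.
        self.db.set_attribute(path, attr, value)


db = SceneDatabase()
maya = ConnectedTool("Maya", db)
viewer = ConnectedTool("RTX viewer", db)
maya.edit("/World/Terrain", "displayColor", (0.2, 0.5, 0.1))
```

The design choice worth noting is that tools never talk to each other directly; each one only talks to the hub, so adding a new application means writing one connection rather than one per existing tool.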

The Omniverse Kit includes a renderer that fully supports NVIDIA’s Material Definition Language (MDL). MDL gives artists the freedom to share physically based materials and lights between supporting applications. For example, an MDL material created in an application like Allegorithmic Substance Designer can then be used in Chaos Group’s V-Ray, or in any other supporting application.

In the keynote presentation at GTC last year, NVIDIA CEO Jensen Huang unveiled NVIDIA Omniverse, a powerful collaboration platform for 3D production pipelines. Omniverse allows users to seamlessly share assets across applications and view changes being made all in real-time.

David Lesperance created a demo attic scene with NVIDIA: “I modeled the set, textured and lit it for Fstorm, Redshift and Unreal (UE4).” The set was built in 3ds Max, Marvelous Designer and Substance Designer. It was rendered first in Fstorm, then in Redshift and UE4, and finally with the Omniverse Kit renderer.