Adobe Max 2017: Best of the Tech Peeks

Adobe Max Sneaks showed a stunning array of technology previews. This session of impressive peeks at unreleased technology was co-hosted by Silicon Valley’s Kumail Nanjiani.

Below is a selection of the demos we found most impressive, ranging from video rig removal to 360 audio tools for VR.

For more on this year’s Adobe conference see our earlier story.

Cloak

Removing unwanted signs, rigs, dust on the lens, marks on clothes and people from shots: these are just a few examples of what Adobe’s remarkable new technology, Cloak, can do. Below are the full clips shown during the demo.

In each case below, a rough tracked matte indicates what needs to be removed.

Above: the demo removes the strap only

Above: the demo removes both people

Above: several of the cars are removed

Above: the light pole is removed

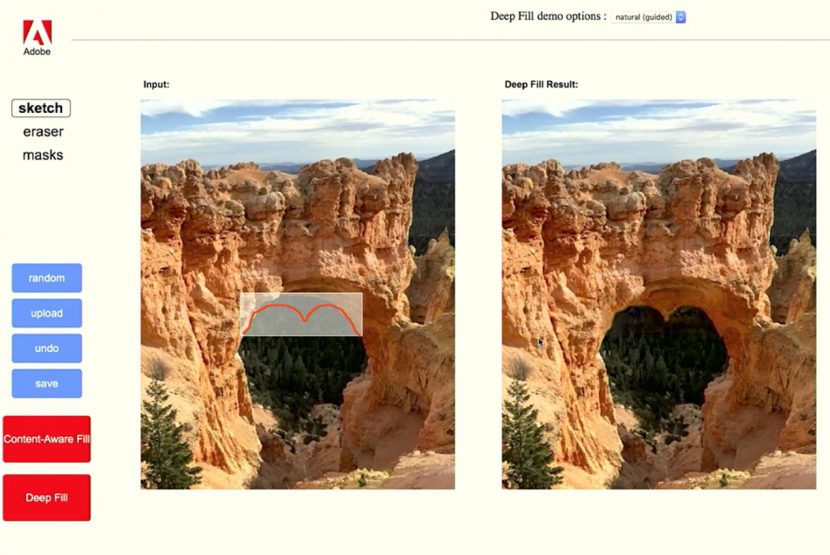

Project DeepFill

Project DeepFill aims to do content-aware fill, but powered by Adobe’s AI engine, Sensei.

The fills can be either guided or unguided. The demo used still images, but it points to a remarkable tool for digital matte painting: not just removing elements from video but creating new worlds.

The project uses “millions of real-world images to train the Sensei model”, according to Adobe’s Jiahui Yu from the Photoshop research team.

Project SonicScape

Yaniv de Ridder demonstrated the visual tool SonicScape. The idea behind the demo is to let VR editors edit their sound visually in a 3D or 360 environment. The solution is to visualize the sound within the 3D 360 space: Project SonicScape takes the guesswork out of immersive content editing by showing graphically where the audio is located.
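Adobe did not detail how SonicScape works internally, but the basic idea of drawing a sound source on top of 360 footage can be illustrated with a little geometry. The sketch below is our own illustration, not Adobe's method: it assumes an equirectangular frame, with azimuth 0 at the centre of the frame (positive to the right) and elevation +90 at the top. The function name and the example sources are hypothetical.

```python
def direction_to_equirect(azimuth_deg, elevation_deg, width, height):
    """Map a sound direction to a pixel in an equirectangular 360 frame.

    Assumed convention (not taken from Adobe's demo): azimuth 0 is the
    centre of the frame and grows to the right; elevation +90 is the top
    row and -90 is the bottom row.
    """
    # Wrap azimuth into [-180, 180) so any angle lands inside the frame.
    az = (azimuth_deg + 180.0) % 360.0 - 180.0
    # Clamp elevation to the valid range of the sphere.
    el = max(-90.0, min(90.0, elevation_deg))
    # Linear mapping: longitude to columns, latitude to rows.
    x = int((az / 360.0 + 0.5) * width) % width
    y = min(int((0.5 - el / 180.0) * height), height - 1)
    return x, y


# Hypothetical sound sources in a 4096 x 2048 frame: (azimuth, elevation).
sources = {"dialogue": (0.0, 0.0), "traffic": (90.0, 0.0), "birds": (-45.0, 45.0)}
markers = {name: direction_to_equirect(az, el, 4096, 2048)
           for name, (az, el) in sources.items()}
```

An editor overlay could then draw a marker at each of these pixel positions, so that a sound placed behind or above the viewer is visible directly on the unwrapped 360 frame.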

Project Lincoln

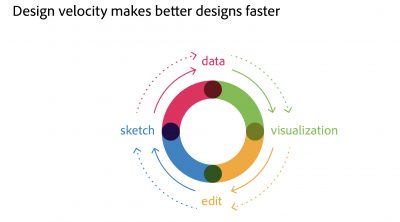

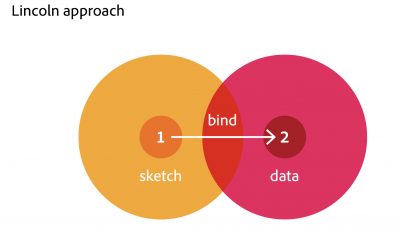

Bernard Kerr showed 14 data visualisations in less than 4 minutes. Admittedly this was both a demo and test software, but as an indication of what applying AI technology to data visualisation can do, it is extremely promising.

While data visualisation is often seen as a dull science, it leaves designers with the task of producing a large amount of material very quickly.

The tools combine the notional input from spreadsheets with a large array of complex data visualisations, all intelligently distributed and laid out. But as with all the technology demos, an actual product version of this technology appears some way off, and any tool in this area will need extensive customisation options when deployed in a product.

Project Quick 3D

The last demo in this set was by an Adobe intern, Aashima Arora, who showed Quick 3D. It takes rough line sketches, interprets them, then searches the Adobe 3D library and provides a 3D model, normally orientated to match the direction of the rough sketch. This is not modelling new 3D, but quickly accessing the Adobe deep network to find 3D that can then be taken into Adobe’s new Dimension application.