As many have noted, this year's Apple WWDC was intensely focused on machine learning, and it came right after Apple launched the new iPad Pro and iPad Air. Surprisingly, the iPad Pro shipped with the new M4 chip, and many users have asked whether it is worth upgrading. Do you need an M4 chip in an iPad Pro?

The answer comes down to your view on applications, privacy, and latency in an AI world. If you mainly run apps such as Luma AI, which builds NeRFs in the cloud, the computation is not done locally, so an M4 will make little perceptible difference.

Features to Love

The iPad Pro has a lot going for it. For a start, it is wafer-thin. I mean exceptionally thin: 5.1mm. It also has a great P3 screen with 1600 nits peak brightness and an adaptive 10–120Hz refresh rate.

The new pen, with improved performance, orientation sensing and new click functions, is great but perhaps not enough on its own to justify buying a new M4 iPad. The most remarkable add-on is the latest Magic Keyboard, with an aluminum palm rest, a row of function keys and a larger glass trackpad with haptic feedback and Multi‑Touch gestures. (This alone comes close to justifying the upgrade.) Oddly, the standard travel case no longer covers the pen when it is attached to the iPad Pro, so the pen is easily knocked off its charging position in your bag, a strange step backward.

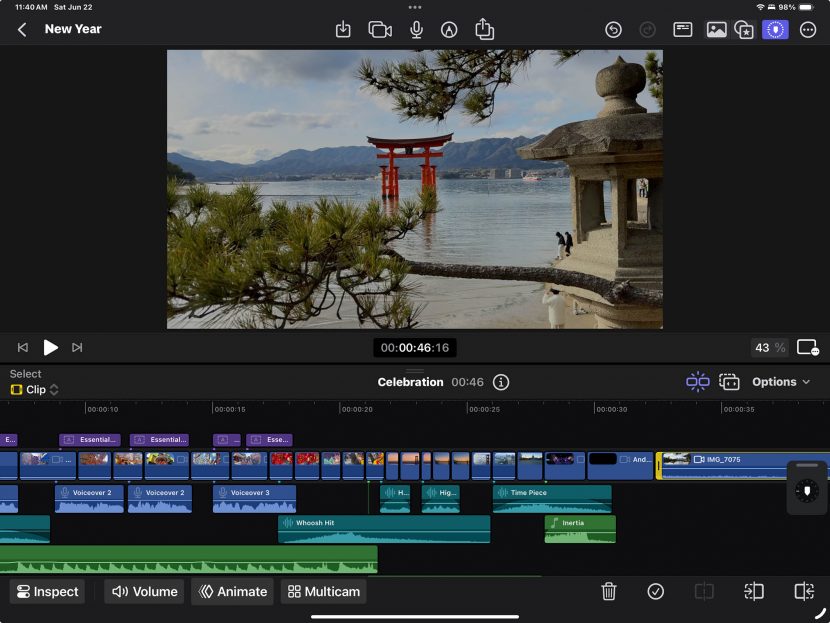

However, the main advantage for M&E professionals is on-device processing. When using FCP or DaVinci Resolve, there is no need to upload footage to the cloud. These packages increasingly rely on machine learning, and we can only expect that to grow. From roto to specialist colour correction and beyond, local processing is clearly critical, and the M4 delivers.

A good example of what we expect to see more of in the future is spatial video and immersive content in these editing programs. Spatial video is effectively high-resolution stereoscopic imagery, with two views per frame, which demands extremely high bandwidth. Immersive content that wraps around the viewer in Apple Vision Pro is both extremely high resolution and ultra-high dynamic range.

Increasingly, good apps let you work with your data locally, improving responsiveness and the user experience. Even the new Calculator app on the iPad offers machine learning features you did not know you wanted. Apple has stated publicly that it aims to bring new features to older devices where it can. However, the Apple Intelligence features shown at WWDC ’24 come with a hardware requirement.

Large language models and other machine learning approaches are incredibly computationally expensive. Without the cloud, it is the power of the Apple Neural Engine that dictates whether these features run fast enough on the device to be helpful. In theory, you could run some of these functions on older systems, but they would be slow and annoying. Naturally, the M4 chip has Apple's most impressive Neural Engine to date, and updates to Core ML help optimise and run advanced generative machine learning and AI models on device faster and more efficiently. This lets the iPad handle more of these everyday machine learning functions than any other mobile device.

Beyond the traditional apps we have used before, the high-performing Apple Neural Engine also makes entirely new apps possible. Many people would point to games as the most obvious beneficiaries, but for artists in M&E, the more relevant examples are animation tools such as Procreate Dreams.

These animation tools offer astonishing levels of control for a tablet you could easily use on a train, on a plane, or sitting in a park. Naturally, experienced animators and environment artists will still reach first for a Mac Studio or another Mac paired with a professional digitising tablet. Still, the power now available on an iPad is remarkable.

fxguide does not tend to cover audio products, but the same machine learning applies equally to audio editing. Removing background noise, sweetening mixes, and separating out instruments so they can be processed individually are all valid and robust uses of machine learning that audio teams are already enjoying.

Demand for even more advanced local machine learning in editing software will only increase as more ML audio tools are added to products such as FCP and Resolve. Audio is likely to become a major application of machine learning, and one we will want to run locally on our mobile devices.

Google DeepMind has already released video-to-audio (V2A) research that uses video pixels and text prompts to generate rich soundtracks. “Video generation models are advancing at an incredible pace, but many current systems can only generate silent output. One of the next major steps toward bringing generated movies to life is creating soundtracks for these silent videos,” Google announced last week. In addition to producing scores and soundtracks for generated videos, these new approaches will allow sound effects to be created and automatically synced with video: for example, foley footsteps or, as Google has already shown with V2A, drumming that matches a video of a drummer.

It is reasonable to assume that these multimodal applications will only grow in popularity. Processing locally avoids uploading dense video files to the cloud, making such innovations more responsive and lowering latency.

Apple advocates running more AI on device for both performance and security. Many clients and artists prefer that their material is never uploaded, whichever developer is involved, since this ensures it is not aggregated for use as training data without their consent.

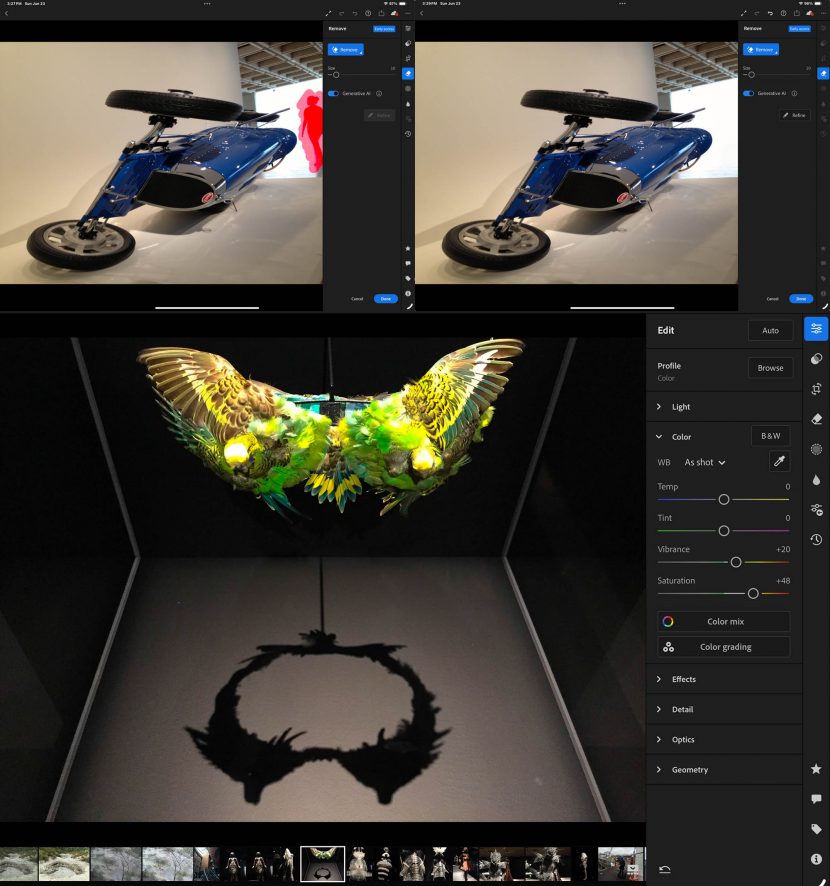

Generally, the more that can be done locally, the better the user experience. Adobe Lightroom offers AI tools such as automatic object removal, which is done in the cloud. For still images, latency is not a major issue, but for more complex tasks or tasks spanning multiple frames, local processing is ideal.

The iPad Pro has come a long way from the original iPad and iPad mini. As processing power, screen resolution and size, and the Neural Engine have all advanced, the iPad has evolved from a passive reading and browsing device into a production tool.