Fxguide is heading off to FMX next week. Both John Montgomery and Mike Seymour will be there covering everything for fxguide and fxphd. We look forward to seeing as many fxphd members and fxguide readers as possible.

Each year we find FMX to be one of the best international conferences, and this year is no exception. The program ranges from Star Wars to the Oscar-winning Blade Runner 2049, and from the VFX of TV shows like Star Trek: Discovery to the launch of the Foundry’s new Athera software. This looks like being a great year at FMX for both practical talks and cutting-edge technology.

Digital Humans

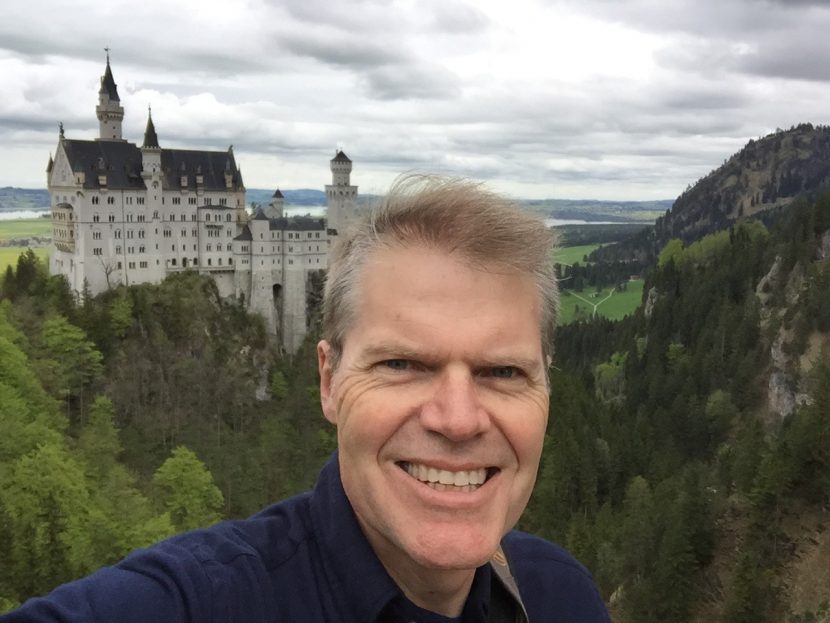

Fxguide’s Mike Seymour curates the Digital Humans track each year, and this year the lineup is impressive.

Wednesday morning and afternoon + Friday 2pm extra session

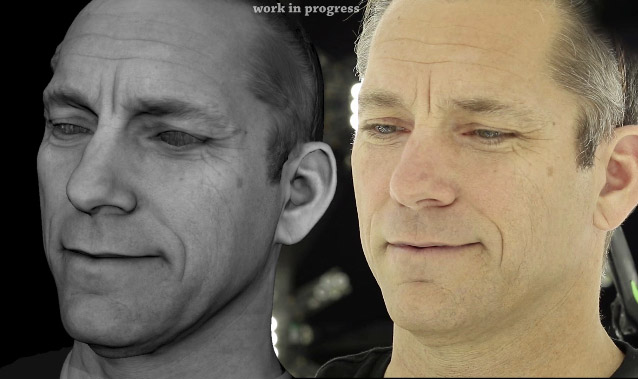

Digital Humans are the most complex and interesting problem facing computer graphics artists and technicians alike. The Digital Humans track will explore the very best of both cinematic and real-time virtual humans. JP Lewis from the Search for Extraordinary Experiences Division (SEED) of Electronic Arts will discuss the challenges of creating truly believable and photoreal characters. JP Lewis is ex-Weta and one of the most talented and experienced researchers internationally in this area. His deep grasp of the concepts, maths and issues makes him the perfect individual to start the Digital Humans session, discussing traditional versus deep learning approaches to faces.

Lewis’s talk will draw on his experience as an algorithm developer across a number of character projects, from the early Disney Human Face Project and The Matrix sequels to high-profile recent movies. Lewis is also associated with the pose space deformation algorithm, one of the first applications of machine learning to characters (peeking under the hood, a radial basis function interpolator is a neural network with a single hidden layer).
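For readers curious about that parenthetical, here is a minimal sketch of radial basis function interpolation written so that the "single hidden layer" reading is visible. It is only an illustration under stated assumptions, not Lewis's implementation or the published pose space deformation algorithm: the Gaussian kernel, the one-dimensional poses, the sample offsets and all function names (`fit_rbf`, `evaluate_rbf` and so on) are hypothetical choices made for this example.

```python
import math

def gaussian(r, sigma=1.0):
    # Radial basis: the response depends only on distance r from a centre.
    return math.exp(-(r * r) / (2.0 * sigma * sigma))

def distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def fit_rbf(poses, offsets, sigma=1.0):
    # "Training": choose output weights so every example pose
    # reproduces its sculpted offset exactly.
    A = [[gaussian(distance(p, q), sigma) for q in poses] for p in poses]
    return solve(A, offsets)

def evaluate_rbf(pose, poses, weights, sigma=1.0):
    # Hidden layer: one unit per example pose, activated by proximity.
    hidden = [gaussian(distance(pose, c), sigma) for c in poses]
    # Output layer: a plain weighted sum of the hidden activations.
    return sum(w * h for w, h in zip(weights, hidden))

# Hypothetical data: three example poses (a single joint angle each)
# and the corrective offset an artist sculpted at each one.
poses = [(0.0,), (1.0,), (2.0,)]
offsets = [0.0, 0.5, 0.2]
weights = fit_rbf(poses, offsets)
```

Calling `evaluate_rbf((1.5,), poses, weights)` then blends the sculpted corrections for a pose that lies between the examples, which is the intuition behind interpolating in pose space.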

The making of the MEETMIKE avatar will be presented by Jay Busch (Google) and fxguide’s Mike Seymour. This example of UE4 real-time rendering was a huge hit at SIGGRAPH, and the team will walk through the steps in detail. This session is suitable for both students and industry professionals. Jay was integral to the USC ICT Lightstage with Dr Paul Debevec and is one of the most innovative and effective researchers we know. Her innovative career is matched only by how complex it is to define her role. She is a wonderful mix of artist, imaging expert and practical engineer. Jay will show never-before-seen scenes while explaining the world of high-end scanning.

Real-time and gaming assets are half of the focus of the Digital Humans session. The other half is state-of-the-art, fully rendered feature film work.

MPC Asset Supervisor Axel Akesson will give a behind-the-scenes look at how his team crossed the uncanny valley and created the photoreal human Rachael for Blade Runner 2049. This Oscar-winning work was the subject of an fxguide story. In this session Axel will discuss the process in great detail.

Ian Comley from Industrial Light & Magic (ILM) will outline the past, present and future of digital humans at ILM. Ian was the CG Supervisor at ILM London for the incredible Star Wars: The Last Jedi as well as working on Star Wars: The Force Awakens and films such as Guardians of the Galaxy and Gravity.

On the Tuesday afternoon there will be a panel session on Digital Humans with all the speakers. This session is always extremely popular, and always so full we have had to turn people away. Please arrive early to get a seat.

The final Digital Humans session is a special session scheduled for after lunch on Friday with Steve Caulkin of Cubic Motion. He will discuss how the real-time characters Senua, Siren and MEETMIKE were created and how the motion capture and solving technology has improved over the course of each project. Cubic Motion are a world leader in markerless facial tracking with complex AI solvers. Steve has been at the cutting edge of this work for several years and will show the latest developments, which have moved beyond the traditional dual front stereo cameras on an HMC rig.

Virtual Production

The Virtual Production track also returns to FMX for the seventh year in a row, chronicling the ongoing use of real-time computer graphics in the making of linear media. The Virtual Production track dovetails with some aspects of Digital Humans but also encompasses much more. This is one of the hottest topics in production right now. Its curator David Morin (Joint Technology Committee on Virtual Production) will discuss the current state of virtual production.

Prof. Volker Helzle and Simon Spielmann (both Filmakademie Baden-Württemberg) will cover virtual production tools developed at Filmakademie, tracing the evolution from 2007 to the latest open-source development VPET (Virtual Production Editing Tools).

Daniel Gregoire, founder of Halon Entertainment, will present tools for the next generation of storytelling. Shannon Justison of the award-winning The Third Floor will share insights into the visualization and virtual production for Thor: Ragnarok.

Chris Harvey (Oats Studios) and Mathieu Muller (Unity) will provide a behind-the-scenes tour of the making of ADAM episodes 2 & 3, which were produced using a real-time engine. Habib Zargarpour (Digital Monarch Media) will talk about the history and origin of DMM’s real-time tools and how their use evolved through The Jungle Book, Ready Player One and Blade Runner 2049, as well as their current use on Tom Hanks’ film Greyhound.