The next generation of computer graphics will be on display next month at SIGGRAPH Asia 2018, the region’s leading interdisciplinary event showcasing the latest in computer graphics and interactive techniques, The 11th SIGGRAPH Asia will take place 4–7 December at Tokyo International Forum.

fxguide is a Media partner and both John Montgomery and Mike Seymour will be in Tokyo for the event. If you an fxphd member or fxguide reader we hope you will say ‘Hi’ if you see the guys at any of the events. fxguide will be reporting from the show and we have some great interviews lined up.

There is a lot of great talks and content at this year’s conference. Japan has a very strong graphics community, and this will be one of the biggest SIGGRAPH Asia conferences so far. Here are some of the key events that caught our eye.

Course and Production Sessions

The Featured Sessions will delve into technology advancements, character development and production behind some of today’s hottest projects.

Beyond the Uncanny Valley: Creating Realistic Virtual Humans in the 21st Century

Bridging the Uncanny Valley was once the Holy Grail for every roboticist, computer graphics, animation and video games professional. This panel gathers acclaimed professionals from those fields for an exploration of the State of the Art in creating realistic Virtual Humans and to explore how relevant the Uncanny Valley still is to producing digital humans. The discussion will be a great opportunity to find out the state of the art in animation and also explore some of the ethical and societal issues.

The panel is hosted by

- Christophe Hery, Pixar Animation Studios, with

- David Hanson, Hanson Robotics

- Matt Aitken, Weta Digital

- Prasert “Sun” Prasertvithyakarn, Luminous Production

- Erik Smitt, Pixar Animation Studios and

- Mike Seymour, fxguide & University of Sydney

Christophe Hery will host the panel. He joined Pixar in June 2010, where he holds the position of Senior Scientist. He has written new lighting models and rendering methods for films such as Monsters University and The Blue Umbrella, and more recently for Finding Dory, Piper, Cars3, Coco and Incredibles 2. He continues to spearhead research in the rendering arena at Pixar. An alumnus of Industrial Light & Magic, and during his career at ILM, he received two Scientific and Technical Awards from the Academy of Motion Pictures Arts and Sciences.

Matt Aitken is one of Weta Digital’s most experienced Visual Effects Supervisors. He has recently finished supervising Weta Digital’s work on Avengers: Infinity War. Matt’s other projects as Visual Effects Supervisor include District 9, The Adventures of Tintin, Iron Man 3 and all three films in The Hobbit trilogy. Matt has worked at Weta Digital since the early days of the company. Matt was nominated for the Academy Award for Best Achievement in Visual Effects, and the BAFTA Film Award for Best Special Visual Effects, both for District 9.

Prasert “Sun” Prasertvithyakarn is a lead game designer in charge of user experience with AI characters and system in Final Fantasy XV . His specialty in emotional AI design allows him to introduce the game industry with an unseen game experience created by AI. Sun was originally from Thailand, and he is now working at SQUARE ENIX. He was the lead game designer for Final Fantasy XV and was in charge of the buddy-characters, the friends that join the player along their journey in the game. At GDC this year he pointed out that games are “getting more complicating each day and the need for Artificial Intelligence has risen a lot these years. My job is not just only to find the right AI to solve problems, but to find the possibilities of using AI to create something new! Especially an emotional experience!”

Erik Smitt began his career at Pixar Animation Studios 18 years ago in the lighting department on Monsters Inc. In addition to his CG work, he is a painter, photographer, and live action cinematographer who combines elements from each discipline into his work at Pixar. Smitt has helped develop many projects inside of the studio including Wall•E, The Incredibles and Piper. Most recently he was the Director of Photography on Pixar’s Incredibles 2.

Dr. David Hanson has produced many renowned, one-of-a-kind robot characters that have received massive media attention and public acclaim. Dr. Hanson started as a Walt Disney Imagineer, working as both a sculptor and a technical consultant. Today, as CEO and Founder of Hanson Robotics, he publishes regularly in materials science, artificial intelligence, cognitive science, and robotics journals. He has been featured in the New York Times, Popular Science, Scientific American, the BBC and CNN.

fxguide’s Mike Seymour is both a co-founder of fxguide and also a researcher into digital humans. He has lectured around the world on the topic of Avatars, Agents and Actors, including FMX, SIGGRAPH, IVA, CGA and written numerous articles on the topic. He currently has a PhD fellowship at the University of Sydney where he has established the Motus Lab for Advanced Digital Human research into applications and ethics of real time virtual people. Mike is also co-founder of fxphd.com which is headed by John Montgomery.

Cinematography of Incredibles 2 – Function and Style

Erik Smitt, Pixar Animation Studios

Exploring the visual language of Incredibles 2 , Erik Smitt will cover influences and motivations both new and familiar in this blockbuster sequel. Smitt will explore visual concepts, and then talk in detail about Pixar’s methods for realising these designs to achieve their cinematic goals.

From Gollum to Thanos: Characters at Weta Digital

Matt Aitken, Weta Digital Ltd

Weta Digital is one of the world’s most advanced visual effects companies and specialist in advanced character work. Their history of advances has provided a road map for many to follow and advanced the state of the art in animated characters.

Matt Aitken is one of Weta Digital’s most experienced Visual Effects Supervisors. Aitken has been nominated for the Oscar for Best Achievement in Visual Effects, and the BAFTA Film Award for Best Special Visual Effects, both for District 9. He has won multiple Visual Effects Society Awards. Matt has a Bachelor of Science in Mathematics from Victoria University of Wellington and a Master of Science in Computer Graphics from Middlesex University, London. He has had technical papers published by Eurographics and Graphite and has given presentations on Weta Digital’s work at many conferences and festivals including SIGGRAPH, FMX, the Australian Effects and Animation Festival, Imagina, the London Effects and Animation Festival, and AnimfxNZ. Matt is an active member of the Academy of Motion Picture Arts and Sciences®, the British Academy of Film and Television Arts, and the Visual Effects Society.

Behind the scenes of Solo – A Star Wars Story

Nigel Sumner and Atsushi Kojima, ILM Singapore.

The team from ILM Singapore will discuss the making of the latest Star Wars film: Solo. This film used innovative real time projection systems and cutting edge character animation. The films approach to immersive environments were achieved with vast practical lighting display technology to blending the lines between traditional VFX and in camera VFX. To read more on Solo, and how the team used advanced projection technology to provide in camera effects and believable lighting see our fxguide coverage of Solo by clicking here.

Real-Time Live! Makes its SIGGRAPH Asia Debut

Real-Time Live! is a brand-new event at SIGGRAPH ASIA that allows attendees to watch breakthrough innovations presented and deconstructed by the people who created them. From mobile and console games to virtual and augmented reality, creators of cutting-edge real-time graphics will give attendees a look under the hood of their creations and share the techniques they use to achieve jaw-dropping results. Real Time Live has become one of the key ‘must attend’ events of the main SIGGRAPH conferences in August and this will be the first time it is also being held at SIGGRAPH Asia. The combination of being live and showcasing the most incredible advances in Virtual Production and real time rendering, make this a session attendees just ‘need to be in the room’ to witness first hand.

One of this year’s presenters will be Hao Li’s team at Pinscreen presenting Pinscreen Avatars in your Pocket: Mobile paGAN engine and Personalized Gaming, (see more from Pinscreen below in the technical papers section).

“We will demonstrate how a lifelike 3D avatar can be instantly built from a single selfie input image using our own team members as well as a volunteer from the audience” explained Hao Li. In addition, the team will showcase some additional 3D avatars built from internet photographs, and highlight the underlying technology such as their light-weight real-time facial tracking system. “Then we will show how our automated rigging system enables facial performance capture as well as full body integration. We will showcase different body customization features and other digital assets, and show various immersive applications such as 3D selfie themes, multi-player games, all running on an iPhone” he adds.

SIGGRAPH Asia 2018 Real-Time Live! is on Friday December 7th at 4pm-6pm.

Technical Papers:

There are a lot of outstanding papers this year, many with incredible advances thanks to new Machine Learning approaches. Here are two papers really caught our attention:

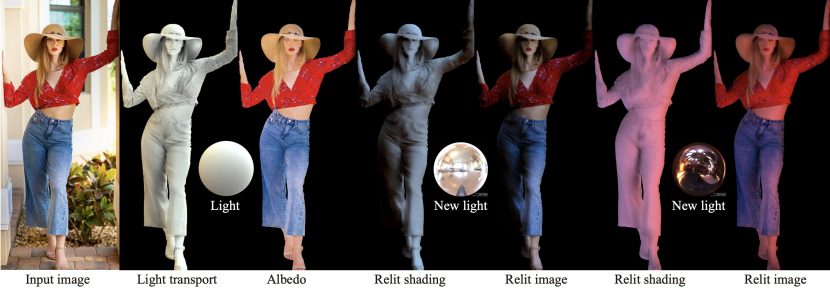

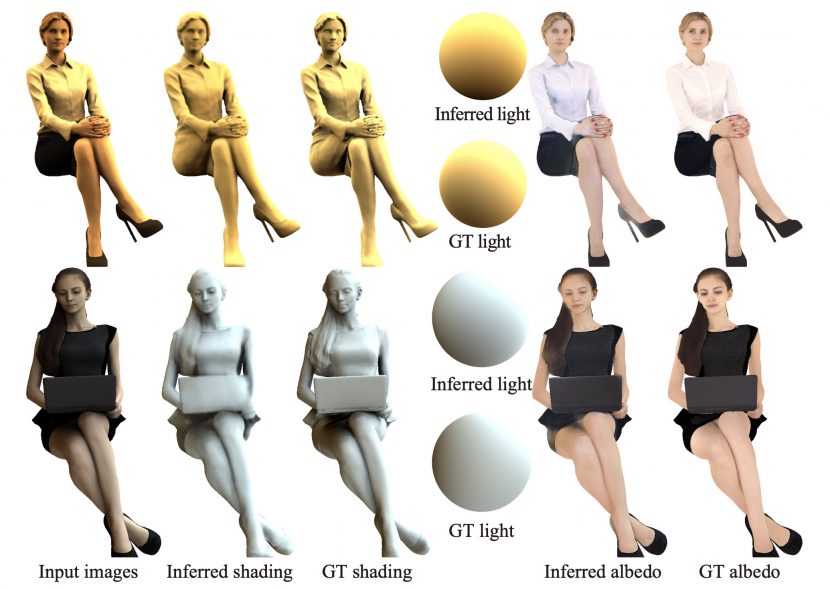

- Relighting Humans: Occlusion-Aware Inverse Rendering for Full-Body Human Images by Yoshihiro Kanamori from the University of Tsukuba and Yuki Endo from The University of Tsukuba & Toyohashi University of Technology.

- paGAN Real Time Avatars using Dynamic Textures by Nagano, Jaewoo Seo, Jun Xing, Lingyu Wei, Zimo Li, Shunsuke Saito, Aviral Agarwal, Jens Fursund, Hao Li ( Pinsxcreen)

Relighting Humans:

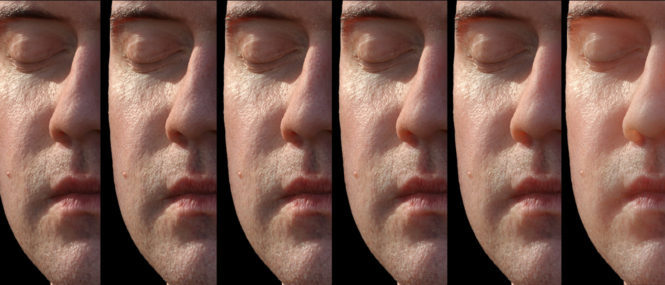

Relighting humans from standard video input is a tremendous challenge and yet one that holds enormous promise for advancing visual effects. This paper uses a combination of Machine Learning (convolutional neural networks) and spherical harmonics.

We sat down with Yoshihiro Kanamori, who has a Ph.D. in Computer Science and is Associate Professor, Department of Computer Science, Graduate School of Systems and Information Engineering, University of Tsukuba, Japan.

We spoke to Kanamori about this latest work which remarkably relights video footage in ‘post’ using Machine Learning. Key in machine learning is often having large and robust datasets. In this paper, the authors comment that the problem in making this inference possible is the lack of a training dataset, considering the unlimited variations of human portraits regarding poses, genders, builds, and garments. We asked if they had considered making synthetic data for the approach by making, for example, clothed people using cloth sims and a bi-directional path tracing?

YK: Yes, that’s one option. However, I don’t know whether there are such CG human models that have sufficiently high-quality and wide variety for our purpose. We needed the largest variations possible regarding materials (diffuse or glossy), textures, shapes, etc. Using a bidirectional path tracer or whatever physically-based render is also possible, but in this case, the ground-truth data cannot be completely reproduced with the SH-based formulation due to the ignorance of high-frequency signals and inter-reflection of light. I mean, there must be always inherent errors even if we continue training so long. Our academic Siggraph paper reviewers also expected that using a more physically-based renderer (than SH) for creating training data will further improve the results because the training data should be more similar to real photographs. I cannot conclude which is better because I haven’t tried the training with a physically-based renderer.

What we actually did for the training is to separately create binary masks, albedo maps, light transport maps, and lights beforehand, and generate ground-truth data on-the-fly during the training by calculating their products.

FXG: You mention faces are easier due to training data but also the face is fairly spherical and not subject to the same level of folds as cloth – is that not so?

YK: Yes, it is, except for elderly people whose faces might have deep wrinkles. We also mentioned such a previous method in our paper; Schneider et al, Efficient Global Illumination for Morphable Models, ICCV 2017

Here’s a quote from our paper:

“Schneider et al. [2017] also proposed to account for light occlusion in SH-based face inverse rendering to better handle face wrinkles. They extended a 3DMM [Paysan et al. 2009] so that not only albedo and shape but also per-vertex light transport vectors can be reconstructed via multilinear regression. However, light transport vectors are available only in the face region.”

FXG: This process is running at around 0.43 sec a frame, is that right? On a 1080 Ti Nvidia Card? I assume you could take advantage of the new RTX for both it’s graphics and it’s AI components?

YK: Yes, per frame but on NVIDIA GTX 1080 Ti. I hope that the tensor cores of the RTX cards will accelerate the inference.

FXG: How long have you been working this?

YK: The initial version was done just for a few months; we started almost exactly one year ago. We then submitted our paper to SIGGRAPH 2018. At that time the quality was not so good. In the rebuttal phase of the technical papers submission process, we strongly insisted to SIGGRAPH to accept our paper. What we wrote in the rebuttal is that in this deep-learning era, SIGGRAPH is loosing its presence, and if our paper is rejected, someone else will publish a similar technique in AI or computer-vision conferences, which will accelerate the decline of SIGGRAPH.

Our initial network architecture didn’t have a decoder branch for inferring lights. After the rejection, we adopted a network similar to SfSNet [Sengupta et al. 2018], which has three decoder branches for albedo, normal, and light, respectively. Then, our results suddenly become much better, and fortunately, the reviewers of SIGGRAPH Asia liked our improved results.

FXG: Yes, agreed, we need SIGGRAPH to embrace these types of Machine Learning research, as it has enormous long term significance. But in fairness it seems SIGGRAPH is working hard to do this now. Getting back to your paper, does highly specular materials cause special problems?

YK: Yes. The specular highlights will be baked in the albedo maps. But one might not notice the problem if we relight with textures. With a careful look, one will notice that the specular highlights do not move.

FXG: How large was your research team?

YK: Only two, me and Prof. Yuki Endo (the second author). Prof. Endo is my former student since he was a bachelor student until he obtained his Ph.D under my supervision. He is an expert of deep learning, and now I am his student regarding deep learning!

FXG: Has this advanced since you submitted the paper?

YK: Unfortunately not, because Universities in Japan enforce a huge amount of paperwork and requirements that Professors in foreign countries never have to do, e.g., supervision of exams, paper work etc. But I have a fantastic idea for a new project, which will be much more impressive than this relighting one. I just keep my fingers crossed so that no one else will publish similar work for a few months!

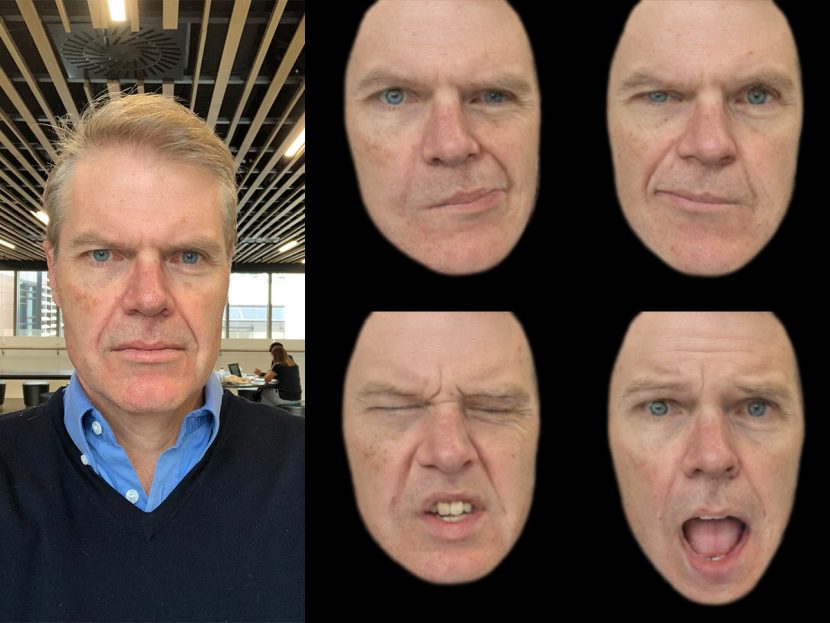

paGAN Real Time Avatars

paGAN Real Time Avatars using Dynamic Textures.

paGAN is Pinscreen’s Generative Adversarial Network or GAN used for making real time faces and Avatars.

The Pinscreen technology actually has two parts, a markerless facial tracker and the paGAN tool which allows effectively for puppeteering of a face. To allow compute time for the real time paGAN, the tracking software runs at an equivalent rate of 1000fps.This frees up processing time for the paGAN to make a plausible face using it’s innovative Machine Learning technique. paGAN stands for Photoreal Avatar Generative Adversarial Network. It is the ‘renderer’ of the Pinscreen facial system.

The Pinscreen team has used our own Mike Seymour for some tests and models, but this is not the same application or data as seen in last year’s MEET MIKE project or UE4.21 MIKE. Li’s team did however have access to the Wikihuman/USC ICT scan of Mike, as a ground truth reference. Pinscreen’s tools can work from normal phone quality input data, and not the rich data that the USC-ICT lab can provide (with the USC Light Stage). In this demo below, the face of MIKE was made from Pinscreen’s own Jpeg conversions of fxguide’s real Mike Seymour. Mike simply took a selfie and Pinscreen did the rest.

We are huge fans of this new technology and fxguide has been following and covering GANs and paGAN for some time. For more on Pinscreen and GANs in general see our fxguide story here.