Exclusive coverage from fxguide.com

At SIGGRAPH last July, Pixar celebrated 25 years of RenderMan (see our story here). Today the company has announced new breakthrough technology, a new commitment to R&D and massive pricing changes including free access to RenderMan for non-commercial use. Ed Catmull, President, Walt Disney and Pixar Animation Studios, along with Dana Batali, VP of RenderMan Products, Chris Ford, RenderMan’s Business Director and the Pixar RenderMan team have introduced sweeping changes to the way RenderMan will be developed and sold, along with the very latest technology that will ship before SIGGRAPH 2014 in Vancouver. This is clearly the start of the next 25 years.

The new product is a combination of RenderMan Pro Server and RenderMan Studio. There will now be one product, used by artists or on the farm, and movable between the two. The new RenderMan has a powerful bi-directional path tracer and serious new technology from Disney Animation, which underlines a new unified approach to rendering from the House of Mouse – the amazing powerhouse that is Disney today.

For those who like version numbers, the new release is built on a v19.0 baseline, and is a consolidation of the components of the previous RenderMan Pro Server (the batch renderer) and RenderMan Studio (the artist’s interface including RenderMan for Maya) into a single RenderMan product that will be continuously updated in both rapid dot and major releases, making traditional versioning more fluid than previously. RenderMan is the obvious name for the unified solution, and the ‘V19’ tag is all but dropped from use.

Background

Most people think of the REYES rendering approach as the core of Pixar’s RenderMan. While this has been historically true, RenderMan has been a hybrid renderer for some time now.

REYES (Renders Everything You Ever Saw) is the brainchild of Rob Cook, Loren Carpenter and Ed Catmull, and it is not actually ‘RenderMan’. It is a part of the RenderMan Compliant Renderer that Pixar has used and sold, but it pre-dates what we might think of as ‘RenderMan’. The original RenderMan Interface 3.0 was launched in May 1988, over 25 years ago, but the REYES renderer is older still: it was released in 1984, and it is distinct from PRMan, which was released in 1989. The original RenderMan specification was not for a product; in fact, it does not even define that you need to render with the REYES scanline approach or today’s bi-directional path tracing. The RenderMan Interface Specification is about what you are rendering, not how you are rendering.

It was at SIGGRAPH Anaheim 1987 that the original paper The Reyes image rendering architecture was delivered by Cook, Carpenter and Catmull. This was at a time when a fully ray traced system was just not viable. REYES provided a way to render complex scenes but it is just a component of what makes RenderMan the powerhouse it is, and has been for two and a half decades. The flexibility of RenderMan is that it allows for change and allows for modularity which is why it has adapted so well to the years of various SIGGRAPH innovations, new ideas and Pixar R&D.

Today Pixar launches the newest in that long line of innovations, a new rendering architecture known as RIS, that supports plug-in integrators and comes complete with a new industry standard path-tracer as well as a cutting-edge bidirectional path tracer.

RIS can take the place of REYES as the engine that solves the integral at the heart of the rendering equation. It does not force out REYES, but for a certain class of imagery it performs better and produces a cleaner render. Pixar is giving out the source code for many of its plugins and, most interestingly, the source for the uni-directional path tracing integrator. Pixar is encouraging TDs and others to develop variations or entirely new integrators.

How keen is Pixar to have others use the software and extend it? In addition to providing the source code, the company is providing full uncompromised download and use of RenderMan for free for non-commercial use, and slashing the node price for commercial use by 75%! (more below). The REYES integrator has been the foundation technology for rendering at Pixar, the studio that redefined what animation was and what it could be.

The RenderMan team at Pixar is a separate group, one that services Pixar Animation and now a host of companies worldwide. Over time, Pixar became part of Disney, as have other great companies such as Lucasfilm, which brought with it one of the world’s greatest visual effects companies, Industrial Light and Magic. Of course as part of Disney, Pixar Animation and the RenderMan team also came under the same umbrella as Disney Animation, and the perhaps lesser known but world class Disney Research groups that served not only Disney Animation but also the Theme parks and the huge Disney entertainment empire. Today Disney spans properties from Iron Man (Marvel) to ESPN, from the Muppets to the Star Tours Ride or Buzz Lightyear, and from Frozen to the new Star Wars films now starting production.

R&D

So many of the Disney companies require the use of advanced rendering and, unsurprisingly for a company the size of Disney, there are multiple advanced R&D teams exploring the advances in rendering. While Pixar’s RenderMan team may appear the most obvious candidate for this work, Disney Animation was behind the advances in areas such as Ptex and new BRDFs that made Frozen so visually impressive. Disney Research in Zurich has been pioneering work in many areas including facial rendering, in particular the Medusa rig, which ILM used on Avengers and now Maleficent. Of course, ILM themselves do hard core R&D and were behind such key technologies as OpenEXR. But there is also a key Disney Research center located at Carnegie Mellon University – in total there are five primary rendering research centres: Pixar, ILM, Disney Research (Zurich), Disney Animation, and the Carnegie Mellon Lab.

This represents the most significant set of rendering research being done in visual effects and animation. In a time when the film industry faces a brain drain to the tech sector, especially Silicon Valley with its stratospheric salaries and share options, Disney’s combined imaging R&D is not only key, but thankfully growing.

Ed Catmull discusses R&D

Announced today by Ed Catmull as part of the vision for the next 25 years of rendering development, these five key research units will now become a co-ordinated research network. “The setup here,” says Catmull, “is that we can all share with each other, but we are only going to have one conduit for it going to the outside world and that’s through the RenderMan group, because the idea is as we get great ideas we have a base we can put them in and then have them go out into industry, but we’re not so naive as to think one group is going to come up with all the solutions.”

This is not being done to reduce waste, in fact, overlapping work is not expected to be cut, such competition being openly welcomed moving forward. However, unlike before, the fruits of this massive research effort will funnel through the Pixar RenderMan team in an effort to harness the winning ideas and use them as widely as possible.

This new organizational arrangement is not being done to find economies of scale, as is so often the case. Rather, Pixar’s RenderMan development team has recently been expanded significantly, both through inter-divisional transfers within the broader Disney Studio organization and also from the industry. For example, Philippe Leprince, formerly head of lighting and shading at Double Negative, has joined the RenderMan development team based in the heart of Soho London. Leprince brings huge amounts of applied production experience in VFX, which helps balance the animation based agenda that might be expected from the Disney/Pixar Animation division. Similarly, Cliff Ramshaw has recently transferred from ILM into the RenderMan development team. Interns from Disney Research are also working on RenderMan this year. On top of that, says Pixar’s Chris Ford, there will also be “personnel on periodic assignment from other tools development groups inside Pixar. We won’t disclose everything they are working on, but the RenderMan development effort has expanded by at least 25% over the past few months.”

There is no single rendering ‘Research Tsar’ that will run all the centres’ research agendas – such a move “carries with it a risk of too narrow a focus,” explains Ford. Keeping the various R&D groups separate within the broader Disney Studio organization, so they can make their own decisions about what approaches to take, empowers them, according to Ford, to work on common problems from different angles. “Don’t mistake this approach for isolation, however,” he says. “Disney has internal cooperation and technology summits where each group presents its latest work, invites peer review, and collaboration and criticism. So we in effect have a balance of cooperation and independent development that is very healthy.”

While RenderMan needs to remain cutting edge to survive, it is perhaps not putting too fine a point on it to say that Ed Catmull’s personal support of RenderMan, and all it stands for, is the most significant reason why the product enjoys such a strong future. Pixar’s RenderMan sales must barely represent a rounding error for Disney – if that – but the product represents technical excellence, collaboration and a commitment to the broader animation and VFX industries.

RenderMan and RIS

Along with a host of changes, Pixar announced their newest version of RenderMan today, including a new internal option for rendering or rather solving the rendering equation called ‘RIS’. This system of offering a range of ‘integrators’ inside RIS represents a major addition to the options for high quality imagery inside RenderMan.

RIS is a new renderer containing a new exciting rendering architecture. Think of RIS as a rendering framework for plugging in an integrator, some materials and patterns, and associating these with geometry, camera and lights. RIS promises to provide significant opportunities for certain classes of shots that users may have previously considered using rival renderers such as Arnold or V-Ray. Here at fxguide we started by trying to find out what RIS stood for, and surprisingly Pixar was very coy about the name’s history, as Dana Batali outlines: “Heh 🙂 We’ve decided to be cagey about this. Originally it was a placeholder. Now we let each user decide what RIS stands for.” Batali does suggest perhaps Renders Interesting Stuff and a dozen other possibilities (see the end of this story for more).

So what is a Bi-directional Path Tracer with VCM (vs a unidirectional path tracer)?

The problem with ray tracing is that there is always some limit on how many times rays can bounce, and making sure they accurately or sufficiently find lights. Imagine a room with a light bulb hanging in the middle – this is the easy case. The light is likely to be ‘captured’ in the render equation as rays are likely to find it. But what if that light was behind a wall mounted recessed bracket – classic stylish hidden light bulbs you might find in a cool modern apartment. If you walked into such a room you would not see the up lighting unless the lights were switched on. In this case unidirectional path tracing will also find it hard to ‘capture’ the light into the rendering equation.

The solution is to fire rays from the light while at the same time firing rays from the camera. After a certain amount of bounces you sort of just connect the two sets of rays up. In this approach you are much more likely to have walls and non-light sources lit up by the lights, and thus lighting the room. It is often more efficient for random ray paths that start out at the lights to escape from the confines of say, the mounted bracket and into the room, than it is for random ray paths in the room to find their way into the bracket. By letting paths from the light source escape into the room the renderer has more opportunities to find unblocked ways to connect them to the camera paths. (Don’t forget in a standard direct lighting solution of years past, one would shoot a ray from a point to a nearby light which in this case would usually fail because the mounting bracket is in the way – that is the whole point of concealed lighting!).

Everything above may sound like just a special case, but light coming under a door from another room, or through a keyhole (or even some arrangement of Luxo desk lamps) – these are all cases where old methods don’t work well in the brave new world of physically based lighting. Of course, the first unidirectional path tracer example would work completely if you ran the ray bounces ‘forever’ – but then who has time for forever when rendering?

The progressive VCM that Pixar has announced is an addition to bi-directional path tracing. VCM stands for Vertex Connection and Merging, and the algorithm provides some great additions. In particular, it produces wonderful caustics, something RenderMan users will really benefit from.

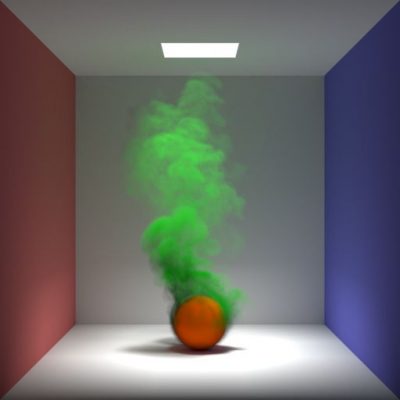

An early test of RIS and PxrVCM’s ability to handle caustic lighting. The 1 light source is above & behind the statues and produces caustic reflections onto the ceiling of the box. The left & right walls show secondary shadows from indirect specular light reflecting off the glossy back wall. Note: model from the Stanford Model Repository.

So what is RIS better at rendering?

What does the new RenderMan RIS render better than REYES? In answering that question, one needs to be clear that either of these will work inside the new RenderMan, so the comparison is really about likely speed and the probable route one might take, rather than an absolute choice in which only one or the other would do.

As with most things, there is a continuum spanning a range of shots, but as a rule of thumb: ‘beauty’, shiny car shots will be ideal for the new RIS, whereas complex hair or furry animated creatures would be more suited to REYES. Now of course, Pixar has rendered say the Cars and Monsters films in RenderMan of old, but under the new system the Pixar Animation team might favour the new RIS if Disney/Pixar suddenly decided this year to make a Wall-E spaceship sequel, or REYES if they instead made a Ratatouille sequel. Ultimately many things influence the choice of renderers – practical pipeline decisions motivated by render farm budgets, required memory sizes etc, but the new approach certainly changes the playing field for the better.

In more concrete terms, RIS is better suited as a ray tracing path-tracing solution to complex shiny projects where the aim is to nail it in the render. REYES however is more flexible and thus would be better for a multi-pass pipeline where the look will be determined in the compositing. A natural extension of this is to assume that a less technically focused team, who do not want to have arbitrary control over every pixel and who are more focused on a PBS (Physically Based Shading and lighting) approach, will gravitate to the new RIS and in so doing have faster lookdev and better feedback.

But it is a balancing act. For example, consider skin shaders and sub-surface scattering (SSS). There are approximation solutions and increasingly complex ray tracing solutions to skin. Dana Batali thinks that, on balance, RIS will prove the winning approach, “but mostly on the grounds of interactive feedback and the concerns of overmodeling (which RIS handles better). That is to say, there’s nothing computationally unique about SSS – it’s just a specialized integration technique for indirect illumination that travels through highly diffuse materials.” Batali adds that more-advanced SSS techniques are exploring “brute-force ray-traced simulations over a simple diffusion model. Generally ray traced shading in RIS is much faster than REYES + RSL so one can afford more rays on the same budget and perhaps push the state of the art in skin to higher heights.”

RenderMan has always worked well within a complex pipeline, and at SIGGRAPH last year Pixar showed an incredibly fast mock-up of a GPU based KATANA (from The Foundry) solution. That GPU prototype was shown as a technology demo, not a product. Today there continues to be ongoing work within Pixar on applying GPUs to specific problem areas such as volume rendering “and we have been pretty open about that,” says Chris Ford. “Any given integrator can be accelerated by the benefits of computational GPU’s and it is no surprise that this is something we are actively evaluating. There is no GPU enhancement in the latest RenderMan however, as establishing the RIS framework is the major focus of this particular release.”

Even without the new GPU work, the new RenderMan is still very much focused on being used in a KATANA pipeline. The Pixar team are exploring optimisations currently, especially with load time, as the new renderer is in beta with KATANA (as it is, of course, with Autodesk’s Maya). While the beta of the new renderer is going very well, and is already very advanced, the release of the product is not immediate. At the moment it is scheduled to happen only “in the SIGGRAPH 2014 timeframe”. On access to the beta, Ford says that “we are not establishing a totally open beta, but we will make access liberally available to commercial studios upon request.”

Already key customers from ILM to MPC have been closely testing and evaluating the new beta version with very positive responses, such as this from MPC:

“This truly brings the future of fully photo-realistic ray-traced rendering to RenderMan. We did tests with the production assets from one of our latest movies and were completely blown away by the speed and interactively we could preview and render these assets. The RIS based integrator is going to change the way we work with more scalable rendering, and faster results.” – David Hirst, Global Head of Lighting at MPC

How fast is it?

We asked Chris Ford to comment on the performance of RIS inside RenderMan. “For RIS compared to REYES,” he says, “we are seeing performance improvements of between 2x to 10x faster than REYES in various situations.”

A subtle but key consideration associated with unbiased rendering, is that the notion of exactly when an image is ‘done’ is much more malleable. It is possible to get something very quickly, but it is still noisy by production standards – but the longer it renders the cleaner it is. This is key especially when using the renderer for interactive feedback work. “The general image resolves quickly,” explains Ford, “and most of the time goes into reducing noise to an acceptable level which can change from frame to frame in that more or less noise is acceptable in different conditions. (ie: fast-moving objects). To that extent, an image is never quite finished. (Or it always is!)”.
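The ‘never quite finished’ behaviour Ford describes can be illustrated with a toy estimator. The sketch below is not RenderMan code: it assumes a single pixel whose true value is the mean of a hypothetical noisy `sample_radiance` function, and it simply shows a running estimate being refined one render increment at a time.

```python
import random


def sample_radiance(rng):
    """Hypothetical noisy light sample for one pixel; its true mean is 0.5."""
    return rng.random()


def progressive_estimate(increments, samples_per_increment, seed=1):
    """Yield (total_samples, running_mean) after each render increment."""
    rng = random.Random(seed)
    total, count = 0.0, 0
    for _ in range(increments):
        for _ in range(samples_per_increment):
            total += sample_radiance(rng)
            count += 1
        # A usable (if noisy) answer exists after every increment.
        yield count, total / count


if __name__ == "__main__":
    for n, estimate in progressive_estimate(increments=5, samples_per_increment=1000):
        print(f"{n:5d} samples: estimate {estimate:.4f}, error {abs(estimate - 0.5):.4f}")
```

Stopping the loop early still leaves a valid picture, just a noisier one, which is why the point at which a frame is ‘done’ becomes a policy decision rather than a property of the algorithm.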

This new approach comes with ample source code. For studios this means a framework for developing a studio-specific ray-tracing platform for their own artists, with all the control and ‘plugin’ architecture to take the code wherever they see fit for their projects, as RenderMan is extensible (something Pixar prides itself on). For artists and TDs, the new RIS shades only on ray hits, so there is no over-shading. You gain incremental rendering that allows you to pick up renders later (see “image checkpointing” below). This, and the very way the program renders, means that RIS blurs the line between online and offline rendering.

With the growth of RAM, combined with RenderMan’s RIS approach to instancing, the whole scene can often be held in memory. This means path tracing, not distributed ray tracing, becomes an incremental rendering approach allowing interactive rendering, with faster feedback on the whole lighting solution.

RIS separates the integrator from the material interfaces, an idea outlined in ‘Physically Based Rendering’ by Matt Pharr and Greg Humphreys, a book that resulted in their Sci-Tech Oscar this year.

In their book in 2010, they pointed out that “it is common practice to combine the function of the surface shader and the lighting integrator into a single module and have the shader return the color of the reflected light at the point,” as RenderMan did in the past, but they went on to show that “by separating these two components and having the Material return a BSDF (Bidirectional Scattering Distribution Function)” the system would be better able to handle a physically based rendering system using a variety of light transport algorithms. This is exactly what Pixar has done with the RIS approach to integrators and by logical extension their material definition. This separation leads to this approach of the integrator now being a ‘plugin’ for RenderMan.

What does that mean for an artist? It means that one can swap in a different integrator and not change one’s lookdev. You could literally swap in a special integrator to help debug a shot: say your normals seemed to be wrong downstream, you could render the same set, with the same material definitions, using a custom vectors or normals integrator. This could be invaluable for highly complex shots. All of this would still happen in the user’s interactive progressive rendering environment.

RIS shading in RenderMan includes the BxDF shading innovations from Disney Animation used in Frozen, and ships with a basic set of BxDFs covering things such as hair, glass and volumes. For example, the PxrDisney BxDF is the Disney BRDF from the Frozen work; it is one of the core set of materials and will ship with its source code.

RIS incremental rendering also allows an overnight render to be capped at a maximum time per frame, guaranteeing that the overnight render will complete, while still letting you continue the render if you like what you see in the morning. (See below)
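To make the idea of swapping integrators concrete, here is a minimal sketch of the separation in plain Python. It is not the RIS plugin API: every class and function name below is hypothetical, and the ‘material’ returns a bare albedo where a real system would return a full BSDF. The point is only that the scene and its materials stay untouched while the integrator changes.

```python
from dataclasses import dataclass


@dataclass
class Hit:
    """What a (pretend) ray hit reports: surface normal plus material response."""
    normal: tuple
    albedo: tuple


class DiffuseMaterial:
    """Hypothetical material: describes the surface, computes no lighting."""

    def __init__(self, albedo):
        self.albedo = albedo

    def hit(self, normal):
        return Hit(normal=normal, albedo=self.albedo)


class DirectLightIntegrator:
    """Hypothetical integrator: one directional light, Lambert cosine term."""

    def __init__(self, light_dir):
        self.light_dir = light_dir

    def shade(self, hit):
        cos_theta = max(0.0, sum(n * l for n, l in zip(hit.normal, self.light_dir)))
        return tuple(a * cos_theta for a in hit.albedo)


class NormalsIntegrator:
    """Hypothetical debug integrator: ignores lighting, shows normals as color."""

    def shade(self, hit):
        return tuple(0.5 * (n + 1.0) for n in hit.normal)


def render(scene, integrator):
    """Shade every (material, normal) pair with whichever integrator is plugged in."""
    return [integrator.shade(material.hit(normal)) for material, normal in scene]


if __name__ == "__main__":
    scene = [(DiffuseMaterial((0.8, 0.2, 0.2)), (0.0, 1.0, 0.0))]
    print(render(scene, DirectLightIntegrator((0.0, 1.0, 0.0))))  # lit result
    print(render(scene, NormalsIntegrator()))                     # debug view
```

Because `render` only relies on the integrator having a `shade` method, the debug pass needs no change to materials or geometry, which is the property the article attributes to RIS.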

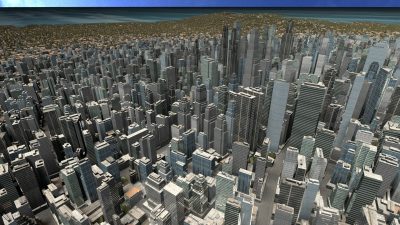

Direct lighting in a physically based lighting system is all about area lights, and increasingly ‘real lighting’, so a scene at night in a city is actually lit by the lights of the city: hundreds and hundreds of real light sources. Geometric lights were introduced in the last version of RenderMan (RMS18), but now all the lighting is optimised for RIS mode. This new area light system allows for incredible complexity in lighting, while being actually simpler from a user’s or artist’s point of view.

Objects in any scene can behave as a physical light source when their shaders are set to emit light, allowing any shape to illuminate a scene. Emissive geometry in this scene is provided by the animated metaballs, creating a lava-like effect.

Q&A with Dana Batali, VP of RenderMan Products

fxg: Path Tracing was introduced a year ago with PRMan’s support of path tracing (RPS 18) which produced “rapid but inaccurate samples to produce a good estimate”. How is the new RIS bi-directional path tracer different?

Batali: In RPS 18 we built a pathtracer atop the existing RSL-based shading framework. There: collaboration with all the shaders’ in-built integrators was required and this made for both a slower solution (due to RSL interpreter overhead) and a more brittle one (due to the unclear contract between the integrator and the shaders). With RIS, we’ve completely “reset” the contract for expressing material properties and we’ve allowed shader developers to code in C++. Now, whether an integrator follows a path-tracing or a distribution ray-tracing philosophy, the materials don’t “care” and the system is more orthogonal. And because the components are written in C++ and execute in a new shader-graph execution engine, the performance is significantly better. Historical note: as far as I know the first characterization of this design was introduced in pbrt where for didactic reasons it was important to separate shading into two broad categories: integrator and material. Most of our community has read this book and so it serves perfectly well as a plugin framework.

fxg: Can you explain what control you have for rendering to a certain level on all frames in a sequence overnight, and how that gets stored? Can I partially render a sequence and then continue (vs just a frame)?

Batali: When an offline render is spooled, we have the option of “image checkpointing” where a checkpoint image is written to disk on a regular interval (measured in render increments). Now a queueing system, for example Tractor, can kill a job according to a collection of policy decisions (eg. each frame gets 10m) and this will ensure that a nightly render schedule can be met. In the past, since there may be high variability of noise across a shot, it was possible for individual frames to hold up the entire job resulting in high variability in daily deliveries. The other aspect of this feature: the renderer can take up where it left off. We store additional information in the checkpoint images that capture the internal state of the random-number generators and accumulated filter weights to allow the render to “pick up where it left off”. So one can imagine more queuing policies like: “in the wee hours of the morning, after all the daily work has completed, resume all the first-checkpoint rounds and take them to the next step…” We’re really looking forward to “greasing the skids” of this important productivity boosting tech, both in Tractor and with final touches on the command-and-control interfaces to prman atop this core capability.

Captured on just Dana’s laptop in real time, progressive rendering is shown here with adaptive sampling and a noise suppression filter. You quickly get a fair but noisy idea of the results.

fxg: Could you discuss the benefits and the flexibility of instancing in the new version with RIS and REYES?
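The resume mechanism Batali describes, saving the random-number generator state alongside the accumulated values, can be sketched in a few lines. This is an illustration only, not the prman checkpoint format: the one-pixel ‘render’, the dictionary layout and all function names are assumptions made for the example.

```python
import pickle
import random


def new_state(seed):
    """Fresh checkpoint state for a hypothetical one-pixel render."""
    return {"rng_state": random.Random(seed).getstate(), "total": 0.0, "weight": 0.0}


def render_increments(state, increments, samples_per_increment=100):
    """Advance the render from a checkpoint and return the updated state."""
    rng = random.Random()
    rng.setstate(state["rng_state"])  # continue the exact same random sequence
    total, weight = state["total"], state["weight"]
    for _ in range(increments * samples_per_increment):
        total += rng.random()  # stand-in for one radiance sample
        weight += 1.0          # stand-in for an accumulated filter weight
    return {"rng_state": rng.getstate(), "total": total, "weight": weight}


def save_checkpoint(state):
    """Serialize the state; a real renderer would embed this in the image file."""
    return pickle.dumps(state)


def load_checkpoint(blob):
    return pickle.loads(blob)


if __name__ == "__main__":
    uninterrupted = render_increments(new_state(7), 10)

    # The queue kills the job after 4 increments; it is resumed later for 6 more.
    blob = save_checkpoint(render_increments(new_state(7), 4))
    resumed = render_increments(load_checkpoint(blob), 6)

    print(resumed["total"] == uninterrupted["total"])
```

Restoring the generator state is what makes the resumed frame identical to one rendered in a single pass; restarting with a fresh seed would still converge, but the two halves would no longer form one consistent sample sequence.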

Batali: A couple of points on this topic: instancing in REYES is a completely different animal from instancing in RIS because the scanline renderer shades on grid-points before visibility resolution. In both cases adaptive tessellation requires that some notion of tessellation rate be conveyed to the renderer. We have a sophisticated instancing implementation that allows for multiple model resolutions to be available for instancing. But to minimize in-core instancing memory, it’s often best to identify a single resolution and this works better in a pure ray-traced environment since we shade after visibility. This is where RIS has a significant advantage for the common instancing cases. Ray tracing of instances is much faster (than the same un-instanced geo) since the memory traffic is greatly reduced. And to your question about different shaders – in the pure ray-traced world, since we don’t “cache” the shaded results, it’s much easier to provide arbitrary shader-binding on each instance. And so we now can easily support this in RIS mode.

fxg: Could you discuss the memory efficiency of the new system compared to REYES?

Batali: The biggest memory efficiency gains come from the pure-and-simple instancing environment (see above). We’re also on an ongoing mission to further optimize primitive memory use – principally for the primitives which arrive in large numbers (polys, points and curves). Another form of memory use in REYES that isn’t present in RIS is in “visible-point memory” (or deep framebuffer memory). This is a mixed bag since it’s the deep-data of REYES that makes for the best deep-comp and area-shadowmap outputs.

fxg: A property of the RIS is that it can involve less custom optimizations – so less experienced TDs/artists can get great results – can you expand on this idea of RIS being better suited to perhaps a less senior pipeline team?

Batali: This theme overlaps with the movement to eliminate multipass rendering solutions. Multipass takes a lot of expertise (but mostly plumbing expertise, not artistic). The other aspect of this cache-tuning – RIS has fewer caches and a lower requirement to tune them. Knowing how to tune caches falls well into that realm of the RenderMan guru.

fxg: Can you discuss the growth of pre-tessellation and how RIS handles over-modeling better than REYES?

Batali: This is a fundamental point comparing ray-tracing to REYES. One of REYES’ strengths is that you shade before you perform visibility tests. This means that you can “share” the same shaded results across many pixels and this is the heart of why motion-blur is so fast in REYES. The cost of the feature is that we may often end up “over shading” and when objects are overmodeled, this effect multiplies. Add to that more expensive shading due to more accurate physical simulation of light transport and the story can become bleak. In the past, facilities had the time to optimize their assets. This means either providing multiple levels of detail or ensuring that models aren’t overmodeled. These days, time is a disappearing luxury and rendering architectures that are more forgiving to over-modeling are desirable.

So the final piece of the puzzle: ray-tracing only shades on the places that rays can “see”. On the negative side, this means that it’s hard to re-use shaded results to speed up motion blur. On the positive side: it handles over-modeled scenes well since the number of shading points is generally independent of the model complexity.

fxg: Could you explain your approach or thoughts on VCM more please (PxrVCM)? It seems like an excellent option or am I missing something? For example, at a research level it seems to do caustics very well?

Batali: VCM is a sort of hybrid in that it combines three techniques (Eyepaths, Lightpaths and Photons). But the idea of combining multiple techniques goes back to the seminal work of Veach/Guibas in their MIS (multiple importance sampling) work that combined two techniques for BPT (bidirectional pathtracing). VCM is a recent application of MIS that has provided the formalization to include “photon merging” into our MIS bag of tricks. This work was simultaneously introduced by Hachisuka, Jensen, Pantaleoni and by Georgiev et al. The latter is the source of the VCM moniker.

On paper BPT and BPT + VCM seem to address many of the shortcomings of unidirectional path tracing. In practice this is true but may not be worthwhile for a measurable percentage of real-world applications.

We’re still working with beta testers to build a sense of whether it’s reasonable to expect folks to change integrators according to the needs of the shot. So far there’s been very little push-back on this other than a question of how best to help artists understand or predict which integrator might be the best fit for the job.

The modularity of our integrator framework allows us to further develop technology in this important research area and see this ability to choose different integrators for the job as a fundamental (though perhaps ultimately hidden-to-artists) part of the RIS value proposition.
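For readers unfamiliar with the multiple importance sampling (MIS) idea Batali refers to, the following toy shows the core trick on a one-dimensional integral rather than on light paths. It is a sketch under stated assumptions: the integrand, the two sampling techniques and all function names are made up for illustration, and it uses the balance heuristic, in which each sample is weighted by its own density divided by the sum of the densities of all techniques.

```python
import math
import random


def f(x):
    """Toy integrand on [0, 1]; its exact integral is 1."""
    return 3.0 * x * x


def pdf_uniform(x):
    """Technique A: sample x uniformly on [0, 1]."""
    return 1.0


def pdf_linear(x):
    """Technique B: density 2x on [0, 1], sampled as sqrt(u)."""
    return 2.0 * x


def balance_weight(pdf_this, pdf_other):
    """Balance heuristic weight for a sample drawn from 'this' technique."""
    return pdf_this / (pdf_this + pdf_other)


def mis_estimate(num_pairs, seed=0):
    """Estimate the integral of f using one sample from each technique per pair."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_pairs):
        xa = rng.random()
        pa, pb = pdf_uniform(xa), pdf_linear(xa)
        total += balance_weight(pa, pb) * f(xa) / pa

        xb = math.sqrt(rng.random())
        if xb > 0.0:  # guard against a zero density at x == 0
            pa, pb = pdf_uniform(xb), pdf_linear(xb)
            total += balance_weight(pb, pa) * f(xb) / pb
    return total / num_pairs


if __name__ == "__main__":
    print(f"MIS estimate: {mis_estimate(20000):.4f} (exact value is 1)")
```

In a renderer the ‘techniques’ are different ways of constructing a light path (from the eye, from the light, or via photon merging in VCM), but the weighting principle is the same: a technique contributes most where its sampling density is high relative to the others.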

This simple scene shows the ability of an emissive surface with an interesting topology to be an area light. The emissive section is self-shadowing & not flat.

New pricing and availability

RenderMan will now be a quarter of the price and free to non-commercial users – free and completely un-watermarked.

It is a bold strategy – Pixar wants to focus on the high-end animation and effects companies, but build both a user base and open source contributions. Disney and Pixar are separately very committed to the open source movement. Pixar has always been an active member of the broader graphics community and has been a leading contributor at conferences such as SIGGRAPH and DigiPro. In fact, Pixar’s first “open source” contribution was the RenderMan Interface Specification itself decades ago. “We are fully engaged in getting great value from initiatives such as Alembic and PTex, and contributing where we think we have a good solution for formats like OpenSubdiv,” says Chris Ford. “The provision of source code for the new uni-directional path tracer Integrator in RIS is another example. Other initiatives are still in development and we expect that we will do more open source projects in the future.”

Products and Price: a Q&A with Chris Ford, RenderMan’s Business Director

fxg: Can you explain the new pricing and products please?

Ford: It is very simple. There is now one RenderMan product that can be purchased either individually at $495 a license, or for larger quantities through Peak Render Capacity (PRC) packages that can be configured to provide enough rendering not only for everyday requirements but optional additional burst render capacity to meet production crunches.

Another major benefit is that, now that the former RenderMan Studio and RenderMan Pro Server have been combined into one RenderMan product, all RenderMan users have access to both in any ratio through a flexible licensing system. The reason we made this change is that all trends point to ever cheaper computing access on both physical and virtual platforms, with studios and individual artists requiring more and more rendering for a given budget. This has become especially acute with the advent of physically based ray tracing. Therefore substantially reducing the cost of the individual render license makes it easier to cost-effectively expand capacity and generate higher quality pixels. We are doing this specifically to encourage accessibility and remove barriers to growth, all as part of a long term strategy that is channeling technologies from the largest rendering development and research base in the film industry into commercial production.

fxg: For any existing clients what is the upgrade path to the new version (especially if they are a smaller user)?

Ford: When the latest RenderMan is released shortly, all users who are on active maintenance will be automatically upgraded to the new version, as will all recent purchasers. For RenderMan users with older versions, either on or off maintenance, an upgrade path is available now.

As the previous RenderMan Pro Server and RenderMan Studio are being combined into the new RenderMan, you simply add up the number of each to get the total number of new RenderMan licenses that will be received. Of course there will be questions about specific situations, so an FAQ is available on the RenderMan website and we are happy to help with individual situations directly.

fxg: You’ve discussed the batch nodes and user nodes being interchangeable – is that on a day by day basis at the user’s control – could I change it during the day to suit work patterns?

Ford: Yes absolutely. The internal renderer in the previous RenderMan Studio is being replaced with the standard (RenderMan Pro Server) batch renderer that serves both the artist interface (or the RenderMan for Maya plugin) and batch rendering requirements. Rendering licenses can be freely used to service both lighting, shading setup and test renders, and also final batch renders on the farm.

As long as the total number of RenderMan licenses is not exceeded, any combination of artist interface or batch renderer is permissible, making this a very flexible licensing system that adapts to the varying rhythms of production.
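The pooling rule Ford describes can be sketched in a few lines of Python. This is only an illustrative model, not Pixar's license server: the `LicensePool` class, its method names and the seat counts in the usage lines are all hypothetical. It assumes the upgrade arithmetic from the previous answer (old Pro Server licenses plus old Studio licenses give the new total) and a single constraint, that artist and batch use together never exceed that total.

```python
from dataclasses import dataclass


@dataclass
class LicensePool:
    """Hypothetical model of a pool of interchangeable render licenses."""

    total: int
    artist_in_use: int = 0
    batch_in_use: int = 0

    @classmethod
    def from_legacy(cls, pro_server: int, studio: int) -> "LicensePool":
        # Upgrade arithmetic from the interview: add the two old counts.
        return cls(total=pro_server + studio)

    @property
    def free(self) -> int:
        return self.total - self.artist_in_use - self.batch_in_use

    def _field(self, kind: str) -> str:
        if kind not in ("artist", "batch"):
            raise ValueError(f"unknown license use: {kind!r}")
        return f"{kind}_in_use"

    def checkout(self, kind: str, count: int = 1) -> bool:
        """Claim `count` licenses; refuse if the pool would be exceeded."""
        field = self._field(kind)
        if count < 0:
            raise ValueError("count must be non-negative")
        if count > self.free:
            return False
        setattr(self, field, getattr(self, field) + count)
        return True

    def release(self, kind: str, count: int = 1) -> None:
        """Return `count` licenses of the given kind to the pool."""
        field = self._field(kind)
        in_use = getattr(self, field)
        if not 0 <= count <= in_use:
            raise ValueError("cannot release more licenses than are in use")
        setattr(self, field, in_use - count)


# Illustrative numbers only: 20 old Pro Server + 5 old Studio licenses.
pool = LicensePool.from_legacy(pro_server=20, studio=5)
pool.checkout("artist", 5)   # daytime: five artists doing lighting and test renders
pool.checkout("batch", 20)   # the rest of the pool renders on the farm
pool.release("artist", 5)    # evening: the artist seats are handed back
pool.checkout("batch", 5)    # the same licenses now serve batch renders
```

In this model there is no per-type quota at all; the only check is against the combined total, which is what makes the artist and batch split adjustable during the day.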

fxg: Will paid RenderMan licenses be any different from user non-commercial downloads?

Ford: No. There is only one RenderMan and the free non-commercial RenderMan is exactly the same as the commercial version. There are no watermarks, no time limits, and no reduced functionality. The only limitation is that, upon acceptance of the EULA at initial installation, the software is to be used only for non-commercial purposes. We want to keep it very simple and, just as importantly, keep RenderMan highly accessible.

fxg: Will there be access to any forums and documentation – and will that be separate from the forums or documentation that a paid user enjoys?

Ford: RenderMan’s product documentation is fully accessible from the Pixar website. We also made a decision that users of the free non-commercial RenderMan will be supported through community forums that our developers and support engineers monitor; however, we will not support non-commercial licenses directly. This is actually one reason why we are making RenderMan freely available for non-commercial usage, as we want to focus our direct support more precisely on our commercial RenderMan users, large and small, while still making RenderMan openly accessible to the entire rendering community. Given the widespread availability of the Non-Commercial RenderMan we also expect third party resources to emerge to service the non-commercial user base, and we encourage them.

fxg: You have said you are providing the source code for the Integrators. What is the idea behind this, and behind RenderMan as a development platform moving forward?

Ford: Modularity is a key part of the RIS proposition and we are supplying source code for the PixrPathtracer (uni-directional) Integrator as a template for RenderMan users to develop their own Integrators within the RIS framework. This is a demonstration of intent as part of an ongoing process of establishing common standards and practices, though it is not yet universal. The source code for the VCM integrator will not be supplied in the new release for example.
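To make the idea of a swappable Integrator concrete, here is a toy sketch of the general plug-in pattern in Python. It is not the RIS API, which this article does not show: the `Integrator` base class, the `REGISTRY` dictionary, the `render` function and both example integrators are hypothetical, and the "scene" is reduced to integrating x² over [0, 1) so the example stays runnable. The point is only that the calling code stays fixed while the sampling strategy is chosen by name, with one simple implementation serving as a template for others.

```python
import random
from abc import ABC, abstractmethod
from typing import Callable, Dict, Type


class Integrator(ABC):
    """Hypothetical plug-in interface: estimate the integral of f over [0, 1)."""

    @abstractmethod
    def estimate(self, f: Callable[[float], float], samples: int,
                 rng: random.Random) -> float:
        ...


class UniformIntegrator(Integrator):
    """Plain Monte Carlo: average f at independent uniform random points."""

    def estimate(self, f, samples, rng):
        return sum(f(rng.random()) for _ in range(samples)) / samples


class StratifiedIntegrator(Integrator):
    """Stratified Monte Carlo: one jittered sample per equal-width stratum."""

    def estimate(self, f, samples, rng):
        return sum(f((i + rng.random()) / samples)
                   for i in range(samples)) / samples


# Integrators are looked up by name, so new ones can be added without
# touching render(). The names here are illustrative.
REGISTRY: Dict[str, Type[Integrator]] = {
    "uniform": UniformIntegrator,
    "stratified": StratifiedIntegrator,
}


def render(integrator_name: str, samples: int = 256, seed: int = 1) -> float:
    """Stand-in for a render: integrate x**2 over [0, 1) (exact value 1/3)."""
    integrator = REGISTRY[integrator_name]()
    rng = random.Random(seed)
    return integrator.estimate(lambda x: x * x, samples, rng)
```

A user-written integrator would subclass `Integrator`, add itself to `REGISTRY`, and be selected with `render("my_integrator")`; choosing an integrator per shot then amounts to changing one string.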

Michael Seymour and Dylan Sisson show the new RIS interactive re-rendering in action.

What does RIS stand for?

Here are some of the other RIS naming “choices”, per Dana Batali above:

- Rendernator Integrator Supercomputinato

- Rather Intense Stuff

- Renders Insane Scenes

- Realistic Illumination System

- Really Intense Scenarios

- Replete Imagery Synthesize

- Realistic Image Savant

- Remedies Integration Syndromes

- Rather Intriguingly Social

- Relies Inherently on Sophistication

- Really Important Sounding

- Rendering In Speckles

- Resolving Iterative Samples

- Really Is Superior

- Redivivus Iter Sollers

- Render In Style

- Repurposable Integrator System

- Ray-casting Integrator Service

- Rectius Illumi Subtilis

- Ray Integration Scheme


Thanks for the amazing in-depth article, love the depth of these features.

We have an additional interview with Chris Ford over on our site if anyone is looking for a little more about RIS.

http://bigmancgi.com/renderman-ris-pixar-chris-ford-interview/
