Regular readers of fxguide know that we’re big fans of tracking how image research ends up in products that are useful for artists. From research at universities to conferences such as SIGGRAPH and CVMP, you can gain insight into what to expect for the future. In addition, knowing how a tool works can actually help you use it more effectively.

We’re fans of Adobe’s focus on image (and audio) research, as you can observe a clear path from this basic research to tools in a shipping product. Over the years, we’ve brought our readers early tech demos such as the Mercury Playback engine in Premiere and the 3D raytracer in After Effects — or the tech behind the Warp Stabilizer in AE. So we were especially looking forward to the Sneaks presentation at AdobeMAX.

The Sneak Peek session is a big favorite of AdobeMAX attendees. It consists of a series of brief presentations that provide a look at internal tech Adobe is working on and highlight possible new features coming to its software. The tech demos shown at the event might never see the light of day, but some do ship: the new camera shake reduction coming in Photoshop CC was shown at a previous AdobeMAX.

The presentations were hosted by actors Rainn Wilson (Dwight from The Office) and Mary Lynn Rajskub (Chloe from 24), as well as Adobe’s Ben Forta. I enjoyed Wilson’s opening talk at the event, where he provided a bit of humor as well as interesting insight into his SoulPancake website. However, I found the stars’ inclusion in the actual Sneaks section to be (let me put this nicely) an unnecessary distraction. I’m surprised that Adobe felt that the real stars of the event, the Adobe developers, researchers, and employees on stage showing off their efforts, wouldn’t be enough to carry the night. That being said, whether it was images, code, or audio, the audience was definitely behind what they saw up on stage. And presenters like Sarah Kong and others did a great job of holding their own for the night.

So let’s dive into what was shown, as well as provide a bit more background and speculate about some of the tech on display. We’re admittedly making a bit of a leap with our guesses as to the research behind some of these showings, and we’re certainly not saying the tech shown only uses techniques from the papers. But it’s likely that it uses some of them. So if anyone from Adobe feels inclined, please feel free to comment as to whether we’re right or wrong.

Sylvain Paris: Playing with Lighting in Photoshop and After Effects

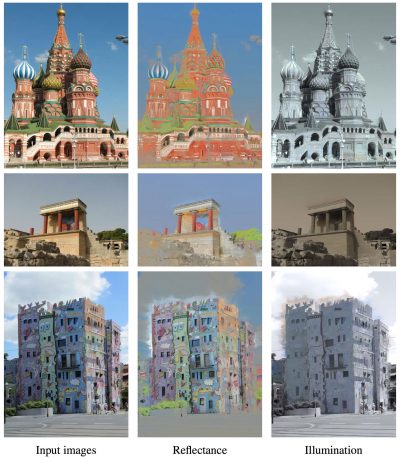

We’ve met Paris in the past, having enjoyed his presentations at CVMP and SIGGRAPH Asia. His name comes up often when discussing Adobe research, and in Singapore last year he presented information from the Coherent Intrinsic Images from Photo Collections research paper, upon which the relighting demo is based. The paper is the result of efforts from the team of Pierre-Yves Laffont, Adrien Bousseau, Fredo Durand, George Drettakis, and Paris.

To achieve the results you see in the clip from the Sneaks session, they have a routine which automatically examines the similarities between images in a photo collection, and then decomposes each photo into separate reflectance and illumination layers.

The resulting reflectance layers are “coherent” across all views, which effectively means they are quite similar from photo to photo, while the illumination layer captures the shading and shadow variation that is individual to each picture. They can then use one photo’s illumination layer to relight other photos of the same scene. Talk about a killer “match grade” function.
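To make the layer idea concrete, here is a minimal Python sketch of the multiplicative model that intrinsic image methods generally build on: each pixel is a reflectance value times an illumination value. It assumes the hard part (estimating a coherent reflectance layer) is already done, and that the two photos are pixel-aligned, which the real system does not require. The function names and pixel values are ours, made up for illustration, and are not taken from the paper.

```python
# Toy intrinsic-image model: pixel = reflectance * illumination.
# All names and values here are illustrative assumptions.

def decompose(image, reflectance):
    """Recover the illumination layer, given an image and a known reflectance layer."""
    return [[pix / refl for pix, refl in zip(img_row, refl_row)]
            for img_row, refl_row in zip(image, reflectance)]


def relight(reflectance, illumination):
    """Recombine a reflectance layer with an illumination layer."""
    return [[refl * illum for refl, illum in zip(refl_row, illum_row)]
            for refl_row, illum_row in zip(reflectance, illumination)]


# Shared ("coherent") reflectance of a tiny 2x2 grayscale scene.
reflectance = [[0.8, 0.8],
               [0.2, 0.2]]

# The same scene photographed at dusk, with the right side in shadow.
photo_dusk = [[0.4, 0.08],
              [0.1, 0.02]]

# Pull out the dusk lighting, then apply it to the shared reflectance.
illum_dusk = decompose(photo_dusk, reflectance)
relit = relight(reflectance, illum_dusk)
```

In this aligned toy case the relit result simply reproduces the dusk photo. The interesting work in the research is getting a consistent reflectance layer across many differently lit, differently framed photos in the first place.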

The twist shown at AdobeMAX was the idea of analyzing the color grade from one scene and applying it to another scene, as shown with motion video in After Effects.

Download Coherent Intrinsic Images from Photo Collections research paper PDF

Download supplemental images and information

Original research video

Sarah Kong: Perspective Warp in Photoshop

Sarah Kong, engineering manager for Photoshop, showed off a new perspective warp they’ve been working on. The idea is that by drawing rectangles on planar regions in an image, you can then adjust the perspective warp in isolated areas. As Kong showed in the Sneak, the current perspective tools are limited in their functionality.

Our guess as to the research behind the sneak peek

This Sneak looks to be based at least in part upon research done by Robert Carroll (University of California, Berkeley), Aseem Agarwala (Adobe Systems, Inc.), and Maneesh Agrawala (University of California, Berkeley).

The approach in the paper is to compute a 2D warp guided by constraints based on projective geometry. The results are not necessarily physically correct, but plausible and believable:

Our system optimizes the warp such that straight lines remain straight, planar regions transform according to a homography, and the entire mapping is as shape-preserving as possible. While the result of this warp is not necessarily an accurate perspective projection of the scene, it is often visually plausible. We demonstrate how this approach can be used to produce a variety of effects, such as changing the perspective composition of a scene, exploring artistic perspectives not realizable with a camera, and matching perspectives of objects from different images so that they appear consistent for compositing.
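For readers who haven’t run into the term, a homography is a 3x3 matrix that maps points on one plane to another, and one of its properties is exactly what the abstract calls out: straight lines stay straight. The short Python sketch below applies a made-up homography to three points on a line and checks that they are still collinear afterwards. The matrix values and function names are our own assumptions for illustration; this is not the paper’s optimization, just the per-plane building block it constrains.

```python
def apply_homography(H, point):
    """Map a 2D point through a 3x3 homography H (nested lists, row-major)."""
    x, y = point
    xh = H[0][0] * x + H[0][1] * y + H[0][2]
    yh = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    # Dividing by w is what produces the perspective foreshortening.
    return (xh / w, yh / w)


def collinear(p, q, r, tol=1e-6):
    """True if three points lie on one straight line (near-zero cross product)."""
    cross = (q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])
    return abs(cross) < tol


# Hypothetical homography; the non-zero bottom row adds perspective.
H = [[1.0, 0.2, 5.0],
     [0.0, 1.1, 3.0],
     [0.001, 0.002, 1.0]]

# Three points along one straight edge of a planar region.
edge = [(0.0, 0.0), (50.0, 25.0), (100.0, 50.0)]
warped = [apply_homography(H, p) for p in edge]

print(collinear(*warped))
```

Note that the warped points are no longer evenly spaced along the line, which is the perspective effect at work, but they do remain on a single line.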

The UI in Photoshop doesn’t seem to take its cues from the UI part of the paper. But this seems to be a sensible approach for the masses. From what we can see in the tech preview, the controls are straightforward, easy to use, and achieve useful results.

Download the Image Warps for Artistic Perspective Manipulation research paper PDF

Nick Bryan: Audio Layers

Our Chicago office switched to using Audition last year for our audio post and cleanup, so it was great to see the audio side of things getting a bit of love.

Bryan’s demonstration takes a single audio recording which contains two main audio sources, and extracts these two sources into individual layers which can be adjusted separately. The UI seems to use some of the same noise reduction selection cues from Audition (drawing on the audio spectrum) in order to help define the various sources.
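We don’t know what is under the hood here, but a common way to think about this kind of separation is as a mask over the spectrogram: each time/frequency cell gets a weight between 0 and 1 saying how much of its energy belongs to the first source, and the remainder goes to the second. The Python sketch below is a toy version of that idea under our own assumptions. The tiny spectrogram, the mask values, and the function name are all hypothetical, and a real tool would estimate the mask from the user’s strokes rather than take it as given.

```python
def split_layers(spectrogram, mask):
    """Split a magnitude spectrogram into two layers using a soft mask.

    spectrogram and mask are lists of rows (frequency bins) by columns
    (time frames). Mask values are in 0..1: 1.0 sends a cell entirely to
    layer A, 0.0 sends it entirely to layer B.
    """
    layer_a = [[mag * w for mag, w in zip(row, mask_row)]
               for row, mask_row in zip(spectrogram, mask)]
    layer_b = [[mag * (1.0 - w) for mag, w in zip(row, mask_row)]
               for row, mask_row in zip(spectrogram, mask)]
    return layer_a, layer_b


# Made-up 2-bin by 3-frame magnitude spectrogram of a mixed recording.
mixture = [[0.9, 0.8, 0.1],
           [0.2, 0.1, 0.7]]

# Hypothetical mask, as if the user had painted the top band as "source A"
# and left the last frame ambiguous.
mask = [[1.0, 1.0, 0.5],
        [0.0, 0.0, 0.5]]

layer_a, layer_b = split_layers(mixture, mask)
```

Because the two weights always add up to one, the layers sum back to the original mixture, which is what lets you adjust them separately and then recombine them.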

Just watch the video.

As even Wilson noted, if this tech works as well as it appears — and Adobe makes it approachable for the average user — it could be a significant new tool in the post production toolbox.

Eric Natzke: iPad/Touch Painting

The session kicked off with Eric Natzke showing off some tablet tech utilizing the pressure-sensitive Mighty pen, which was revealed at Monday’s keynote. One of the issues with painting apps on iOS is the fact that the iPad doesn’t support a pressure-sensitive pen. Interaction with the device is based around the premise that it is one’s finger operating the tablet.

Mighty’s pen tip seems to be about the same size as the tip on the Wacom Bamboo stylus, so the Mighty doesn’t have higher resolution drawing potential. This isn’t really a pen problem, but more of an iOS limitation. To trigger a touch event, an object in contact with the screen needs to be at least 6mm in diameter, which is sensible if you are considering fingertips as driving the UI. So don’t hold out hope for more granular drawing on an iPad.

So how does Mighty get around the fact that the iPad doesn’t do pressure sensitivity like a Wacom tablet? It’s a bit of cool hardware tech. Inside the larger tip of the Mighty tech demo pen is a smaller brass tip that actually registers the force with which one draws with the pen. Because the pen is a Bluetooth device, it sends information about the pen’s behavior (including data from the brass tip) to the app, and the app can then translate this pressure info into a brush behavior. So when you press harder with the pen, the iPad surface doesn’t know, but the Adobe hardware/software combo does. It’s a good example of a managed hardware/software ecosystem.
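As a rough illustration of that last step, here is how an app might turn a pressure reading into a brush size. This is a sketch under our own assumptions: we don’t know what data the Mighty actually sends, so the normalized 0 to 1 pressure value, the radius range, and the response curve are all hypothetical.

```python
def brush_radius(pressure, min_radius=1.0, max_radius=12.0, gamma=1.5):
    """Map a normalized pen pressure (0..1) to a brush radius in pixels.

    gamma > 1 makes light touches stay thin and reserves the widest
    strokes for firm pressure. All defaults are illustrative guesses.
    """
    p = min(max(pressure, 0.0), 1.0)  # clamp out-of-range sensor readings
    return min_radius + (max_radius - min_radius) * p ** gamma


# Hypothetical samples: screen position from the iPad's touch event,
# pressure from the pen's Bluetooth data (the last one is out of range).
samples = [(10, 10, 0.0), (12, 11, 0.25), (14, 12, 1.0), (16, 13, 1.7)]

# Each point of the stroke gets its own radius based on pen pressure.
stroke = [(x, y, brush_radius(p)) for x, y, p in samples]
```

The design point is that position and pressure arrive through two different channels, and it is the app’s job to merge them into a single stroke.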

The demo during the Sneaks event focused on an app which attempts to bring a more natural media painting process to the iPad. Think Painter on iOS. Natzke demonstrated a variety of brushes, shapes, and pressure-sensitive implementations of brushes. He showed onion skinning with images, textured paper, and paints which acted naturally and seemed to take the paper texture into account. If they can pull this off, it will be a welcome addition to the iPad artistic app category. I could see myself taking this on hikes into the mountains and deserts…

Julieanne Kost: Shake Reduction on iOS

One of the new features of Photoshop CC that was shown off this week is Camera Shake Reduction (see video, below). Julieanne Kost took this in a different direction at the Sneaks, showing the shake reduction algorithm working on images on the iPad. As you might imagine, this is a very processor-intensive process and one not really suited to the A6 processor in the iPad (or less powerful ones in the iPhone).

So, enter the cloud. In the demo, when Kost browsed photos and then selected one to use, it would be automatically submitted for processing in the cloud (I’m not clear as to whether the original image resided on Creative Cloud already or on the local iPad). She could then move on to browsing more images and submit others to the queue.

As the cloud finished processing an image, a new result image would be visible on the iPad. In her demo, six images were eventually returned for each original image submitted. These six images were actually six different versions of the process, with different settings or algorithms applied to the result. She could then pick which one to keep and discard the others. All this without taxing the processor (or battery life) on her iPad.

That’s cool (especially considering all the low light photos of beer I take). But what’s truly interesting about this process is the idea that Adobe can actually use this information from users. By having the end user effectively grade the results, they can use this massive amount of data to improve their algorithms over time.

Crowdsourcing to gain better image processing tools. Sweet.

Our guess as to the research behind the sneak peek (and the Photoshop CC feature)

We’re pegging the 2008 research paper High-quality Motion Deblurring from a Single Image by Qi Shan, Leo Jiaya Jia, and Aseem Agarwala. We’re guessing this one based both upon the content and the fact that Agarwala is a principal scientist at Adobe.

The abstract provides some details as to why their process is interesting, including the fact that their results don’t require additional image input:

As a result of these steps, we are able to produce high quality deblurred results in low computation time. We are even able to produce results of comparable quality to techniques that require additional input images beyond a single blurry photograph, and to methods that require additional hardware.
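Some background on why this is hard: a shaky photo is usually modeled as the sharp image convolved with a blur kernel (the path the camera traced during the exposure), plus noise. With only the blurry photo in hand, both the sharp image and the kernel are unknown. The Python sketch below shows just the forward direction of that model in one dimension, with a made-up edge and kernel; it is not the deblurring method from the paper, only the blur that such a method has to undo.

```python
def convolve_1d(signal, kernel):
    """Same-length 1D convolution; samples past the ends repeat the edge value."""
    half = len(kernel) // 2
    last = len(signal) - 1
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for k, weight in enumerate(kernel):
            # Convolution flips the kernel: tap k reads sample i + half - k.
            j = min(max(i + half - k, 0), last)
            acc += weight * signal[j]
        out.append(acc)
    return out


# A crisp bright bar on a dark background (one scanline of a "sharp" image).
sharp = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0, 0.0]

# Hypothetical shake kernel: the camera smeared each point over 3 pixels.
kernel = [0.25, 0.5, 0.25]

blurred = convolve_1d(sharp, kernel)
print(blurred)
```

The hard edges of the bar turn into ramps. A deblurring algorithm starts from something like `blurred` and has to estimate both `kernel` and `sharp`, which is why results of this quality from a single image are notable.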

Download High-quality Motion Deblurring from a Single Image research paper PDF

Camera Shake Reduction in Photoshop CC

Holger Winnemoeller: Painting for non-artists

I have to admit, I always cringe a little bit when people show demos of tech that lets people create painterly images simply through cloning and with no artistic talent. But that’s a bit unfair, and I can see how your average user might find such tech fun and useful.

Adobe research scientist Holger Winnemoeller (his projects include Oilpaint Effect in Pixel Bender and Cartoon Effect from CS4) showed off some very effective tech for making painterly imagery. I’ll reference Painter again here, but this approach seems to be much more automated and useful for the non-artist.

In the software he showed, the cloning process seemed to be intelligent about the content of the original image. It seemed to do a better job of recognizing, say, the texture of the clouds vs. the sky. The process involved starting with a large brush and simply scribbling in the image, focusing on the various objects, then sizing down the brush and repeating the process to add more detail. The results were quite nice in the end.

Winnemoeller’s work bio and personal research pages have a lot of information on synthesizing photographic images into stylized images. His publication called “NPR in the Wild” (get the free preprint here or purchase here) is a nice overview of many of the techniques used in our industry and others, including architecture, gaming, cartoon shading, and image synthesis in movies such as What Dreams May Come.

Nico Becherer: Image-based searches

Nico Becherer’s presentation began with a practical problem: how to successfully find clothes that matched a particular style. Of course, you can go to various clothing sites, do searches, and browse through images until you find what you want. But he proposed a better way in his tech demo, showing an image-based search running in an iOS emulator. In the image strip below, you can see a breakdown of the process he demonstrated:

The far left frame shows the user search area on the left and the search results on the right. In his example, he was looking for a women’s shirt. He started with a men’s shirt, which returned the results on the right. When he clicked on one of the women’s shirts, the search was refined and displayed similar images. Utilizing painting tools, he started painting on the image and the search dynamically updated. Finally, even drawing a crude face seemed to return several appropriate results, including the (presumably set up) t-shirts with Rainn Wilson on them.

This isn’t necessarily new technology, as there are a variety of image-based searching techniques out there (one example would be imgSeek and even Adobe Elements). But taking a leap, you could see that a combination of some of the painting and image tools from Adobe’s products and the tablet platform could make for an interesting app. In addition, an image-based search would be a great addition to Lightroom.
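To give a feel for how a paint-to-search loop could work, here is a deliberately simple Python sketch that ranks catalog images by how closely their color distribution matches the query. We have no idea what Becherer’s demo actually uses, and real systems rely on far richer features than this. The color-histogram approach, the function names, and the tiny catalog are all our own stand-ins for illustration.

```python
def color_histogram(pixels, bins=4):
    """Normalized color histogram of (r, g, b) pixels with channels in 0..255.

    Assumes at least one pixel.
    """
    hist = [0.0] * (bins ** 3)
    step = 256 // bins
    for r, g, b in pixels:
        idx = (r // step) * bins * bins + (g // step) * bins + (b // step)
        hist[idx] += 1.0
    return [count / len(pixels) for count in hist]


def similarity(h1, h2):
    """Histogram intersection: 1.0 for identical distributions, 0.0 for disjoint."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def search(query_pixels, catalog):
    """Return catalog names ordered from most to least similar to the query."""
    query_hist = color_histogram(query_pixels)
    scored = [(similarity(query_hist, color_histogram(pixels)), name)
              for name, pixels in catalog.items()]
    return [name for score, name in sorted(scored, reverse=True)]


# Hypothetical catalog: each "image" is just a handful of pixels.
catalog = {
    "red_shirt": [(200, 10, 10)] * 4,
    "blue_shirt": [(10, 10, 200)] * 4,
    "pink_shirt": [(200, 10, 10)] * 2 + [(250, 200, 200)] * 2,
}

# The user paints a reddish swatch; the results re-rank to match.
painted_query = [(210, 20, 20)] * 4
results = search(painted_query, catalog)
print(results)
```

Every time the user adds a brush stroke, the app would rebuild the query and call `search` again, which is the dynamic updating seen in the demo.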

Other Presentations: the web and CSS

The web-focused presentations deserve some props, too. And I’m personally looking forward to using some of these tools to make working on fxguide.com and fxphd.com a bit easier.

Noam Almosnino showed a tech preview of Muse, Adobe’s very nice HTML creation app, which took advantage of new CSS3 effects such as animation, blurs, and more. The thing about Muse is that it is a very artist-friendly app in that you can visually create very nice looking sites without having to know how to code CSS and HTML.

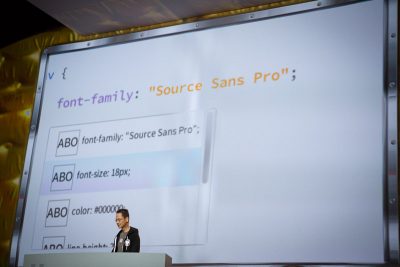

Joel Brandt showed off Brackets/Edge Code (text editors for the web) and a very cool feature which allows the designer to select an item that is rendered in a web page and then immediately focus on the CSS code which determines what it looks like. May not sound sexy, but with my web hat on…believe me, it is.

NJ showed very cool integration between Brackets and Photoshop, showing how you can take a Photoshop comp and extract CSS data from the source file. Right now, that process is a manual one, and what he demonstrated could be a real time saver.

And Todd Burke showed off a whole new library of various animated effects for tablet apps/publications created using InDesign. He showed effects such as sparkles, snow, rain, fire, and others.

Great article 🙂

Do you have a video link for the web and CSS sneak peek ?

Nicolas.

Not yet Nicolas — Adobe hasn’t posted anything else yet.

Did they really need Rainn Wilson to be around?