One of the really impressive technologies at SIGGRAPH was Atom View by Nurulize. Several companies have tried to bring the real world, with interactivity, into VR. We have previously covered 8i, one such company, and others are exploring light fields to solve the problem. Approaching it from a different but very high-end perspective is Atom View.

Scott Metzger is Nurulize’s co-founder. Nurulize is a virtual reality software developer founded in 2013 by software development and VFX industry veterans Philip Lunn and Metzger.

You know something is hot at SIGGRAPH when it generates buzz amongst attendees. We were looking forward to meeting up with Scott and the guys at SIGGRAPH, but before we even got to their booth, almost everyone we knew was asking whether we had seen their Atom View solution.

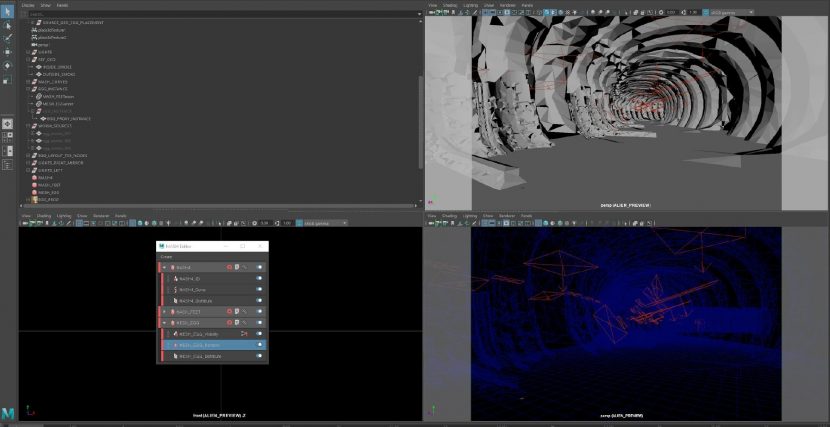

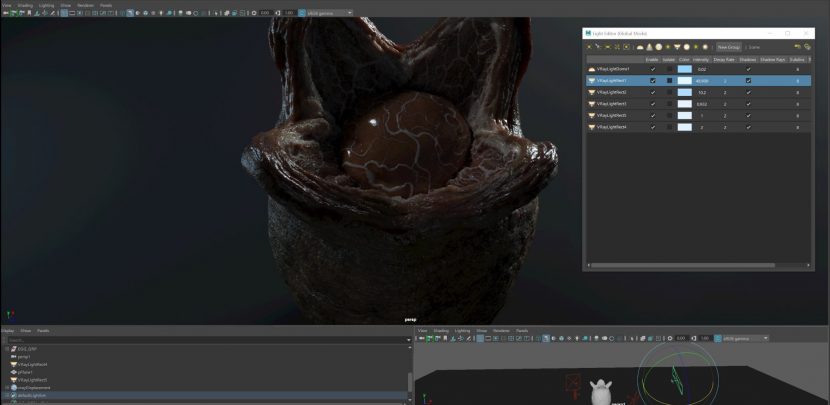

At SIGGRAPH we experienced a super high resolution 3D model in VR with Atom View. It was modeled in ZBrush and then volumetrically rendered in V-Ray for Maya, so it could be displayed in real time using Atom View. The video above is impressive, but in VR the model is stunning and insanely detailed, yet fully interactively rendered so you can explore it.

Atom View grew out of a series of explorations into scanning and high resolution environment work going back a few years. Today, it is a product that allows extremely high-resolution real-world scenes to be experienced with all the fidelity that virtual texturing and high resolution point clouds can provide.

Most real-time VR experiences fall into one of two camps:

- Game-engine-generated material, such as Robo Recall, or

- 360 video, such as Clouds Over Sidra, which shows the Zaatari refugee camp in Jordan.

The former is 3D generated CGI material; the latter is filmed live action, but it does not allow full head movement and it lacks interactivity, since it is a filmed experience. Atom View addresses this by providing seamless photographed imagery in a 3D space that allows detailed exploration and interaction. The quality of the VR experience is what defines Atom View. Rendering or displaying high resolution material is hard in VR given the screen size and data rates, and the need for apparently zero lag when refreshing the view as the user moves or turns their gaze. A photoreal-looking CGI solution normally needs vast amounts of data and many UV sets.

Nurulize solves this problem with Atom View, an agnostic point cloud engine for volumetric point data. The tool allows laser scans of environments with sub-mm accuracy to be shown as a point cloud, but one so dense that it doesn’t ‘look’ like a cloud. Other point cloud visualisations have a very pixelated appearance; Atom View’s does not. The software uses the GPU to interpolate between the points in a clever way, giving the imagery the illusion of a surface, like geometry, without needing an actual model to be built and then projection texture mapped. There is no explicit geometry meshing or texture projection, because both can soften the look of the final image, either by only approximating the geometry or by ‘stretching’ the texture so it looks unrealistic. The system stores RGB and depth information for each point and then, at run time, produces the appearance of a solid object. The net result is stunning in VR, or even just on a monitor.
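Nurulize has not published how Atom View’s GPU interpolation works, so what follows is only a minimal CPU sketch of the general idea, assuming a simple pinhole camera. Each point (position plus RGB) is projected into a z-buffered image, and empty pixels are then filled from their nearest-depth neighbour so that sparse samples read as a continuous surface. The names `splat` and `fill_holes` are hypothetical, and a single 3x3 dilation pass stands in for whatever filtering the real engine uses.

```python
import math
from typing import List, Optional, Sequence, Tuple

# A point: camera-space position (x, y, z) plus colour (r, g, b).
Point = Tuple[float, float, float, float, float, float]
# A pixel holds the nearest (depth, rgb) that landed on it, or None if empty.
Pixel = Optional[Tuple[float, Tuple[float, float, float]]]


def splat(points: Sequence[Point], width: int, height: int, focal: float) -> List[List[Pixel]]:
    """Project each point through a pinhole camera and keep the nearest one per pixel."""
    cx, cy = width / 2, height / 2
    buf: List[List[Pixel]] = [[None] * width for _ in range(height)]
    for x, y, z, r, g, b in points:
        if z <= 0:
            continue  # behind the camera
        u = math.floor(focal * x / z + cx)
        v = math.floor(focal * y / z + cy)
        if 0 <= u < width and 0 <= v < height:
            current = buf[v][u]
            if current is None or z < current[0]:  # z-buffer test: nearer point wins
                buf[v][u] = (z, (r, g, b))
    return buf


def fill_holes(buf: List[List[Pixel]]) -> List[List[Pixel]]:
    """Fill each empty pixel from its nearest-depth 3x3 neighbour (one dilation pass)."""
    h, w = len(buf), len(buf[0])
    out = [row[:] for row in buf]
    for v in range(h):
        for u in range(w):
            if buf[v][u] is not None:
                continue
            # Read neighbours from the original buffer so fills do not cascade.
            neighbours = [
                buf[j][i]
                for j in range(max(0, v - 1), min(h, v + 2))
                for i in range(max(0, u - 1), min(w, u + 2))
                if buf[j][i] is not None
            ]
            if neighbours:
                out[v][u] = min(neighbours, key=lambda p: p[0])
    return out
```

A production renderer would do both steps on the GPU and size its splats from point density and viewing distance; the single dilation pass here only closes one-pixel gaps.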

The second problem with point clouds is that they do not readily lend themselves to editing. Atom View provides intuitive editing tools that avoid the overwhelming avalanche of point cloud data that typically occurs in LIDAR and other point cloud pipelines.

With Atom View you can import XYZ data from multiple sources. Color can be adjusted in 32-bit or 16-bit float, and the editing tools and export cache format allow the point data to be streamed. “You can bring in lidar scans, CG content and more. It has a robust filling algorithm that gives the illusion of solid surfaces like geometry with a lot less data,” explains Metzger.
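To make the 32-bit versus 16-bit float choice concrete, here is a sketch of a hypothetical per-point record (not Atom View’s actual cache layout) in which XYZ is always stored as 32-bit float and the color channels are stored either as 32-bit or as half-precision floats, using Python’s `struct` format codes `f` and `e`. The functions `pack_point` and `unpack_point` are illustrative names only.

```python
import struct
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _layout(half_color: bool) -> str:
    # Hypothetical layout: little-endian, no padding, XYZ as float32,
    # colour as float32 ("f") or IEEE half-precision ("e").
    return "<3f" + ("3e" if half_color else "3f")


def pack_point(xyz: Vec3, rgb: Vec3, half_color: bool = False) -> bytes:
    """Serialise one point to bytes."""
    return struct.pack(_layout(half_color), *xyz, *rgb)


def unpack_point(data: bytes, half_color: bool = False) -> Tuple[Tuple[float, ...], Tuple[float, ...]]:
    """Read one point back as (xyz, rgb)."""
    values = struct.unpack(_layout(half_color), data)
    return values[:3], values[3:]
```

Half floats cut the color payload in half (18 instead of 24 bytes per point in this layout) at the cost of precision, and they top out at 65504, so very bright HDR values would need the 32-bit path.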

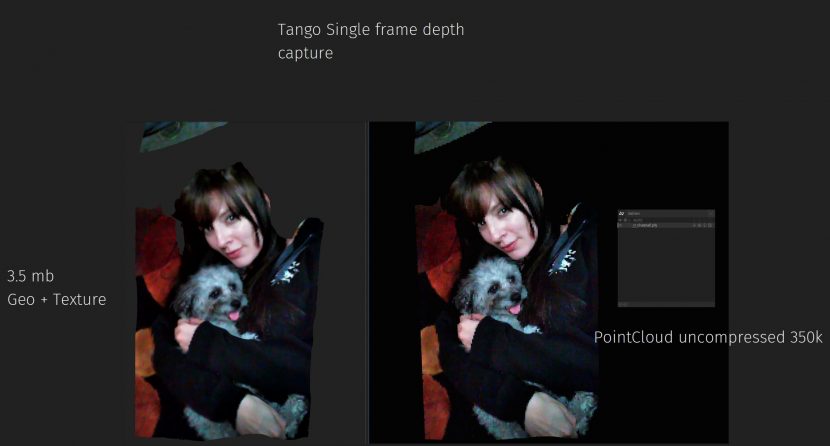

Current input formats include vrmesh (V-Ray point cloud), PTC (RenderMan point cloud), ABC (Alembic points), FLS (Faro scanner), E57 (scanning format), PTX (scanning format), EXR (deep images) and MVX (volumetric video format). At SIGGRAPH, Metzger showed Google Tango RGBD captured data that was natively 3.5 MB (geometry and texture); in the Atom View point cloud format it is just 350 KB. For a CG example, they showed a pulsing facehugger pod in the style of Ridley Scott’s 1979 Alien, rendered out of CGI as a point cloud, which allowed one to dangerously lean over and see inside the pod. (Thankfully it did not jump scare and leap at one’s face in VR, or I swear you would not be reading this article.) But if rendering out CGI as point clouds is one option, the perhaps more natural option is taking deep rendered scenes and converting them to the Atom View format, so you can experience the scene in VR with head movement interactivity.
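Converting a deep render into points is, in principle, a matter of turning every deep sample into one point. The sketch below assumes the deep pixels have already been read into a dictionary keyed by pixel coordinate, that each sample carries premultiplied color, alpha and a camera-space depth, and that the render camera is a pinhole with a known focal length in pixels. The function name `deep_to_points` and the data layout are hypothetical, and reading an actual deep EXR file is not shown.

```python
from typing import Dict, List, Tuple

# One deep sample: premultiplied colour (r, g, b), alpha a, camera-space depth z.
DeepSample = Tuple[float, float, float, float, float]
# Output point: x, y, z, r, g, b (straight, un-premultiplied colour).
Point = Tuple[float, float, float, float, float, float]


def deep_to_points(deep_pixels: Dict[Tuple[int, int], List[DeepSample]],
                   width: int, height: int, focal: float,
                   min_alpha: float = 0.01) -> List[Point]:
    """Turn every sufficiently opaque deep sample into one camera-space point."""
    cx, cy = width / 2, height / 2
    points: List[Point] = []
    for (u, v), samples in deep_pixels.items():
        for r, g, b, a, z in samples:
            if a < min_alpha or z <= 0:
                continue  # skip near-transparent or invalid samples
            # Unproject the pixel centre to camera space at the sample's depth.
            x = (u + 0.5 - cx) * z / focal
            y = (v + 0.5 - cy) * z / focal
            # Un-premultiply so each point stores straight colour.
            points.append((x, y, z, r / a, g / a, b / a))
    return points
```

Because each deep pixel can hold several samples, semi-transparent layers (smoke or hair, say) become several points along the same ray rather than a single flattened color.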

Atom View also works as a plugin for UE4. With this plugin, UE4 takes the Atom View data and allows it to coexist with polygons in the same scene. “We have spliced our rendering code with UE4’s renderer” he explains.
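Nurulize’s integration splices into UE4’s renderer and is not public. As a generic illustration only, letting point data coexist with polygons comes down to both layers sharing one depth test, sketched here as a per-pixel depth composite of a polygon render and a point render. The flat buffer layout and `depth_composite` are hypothetical.

```python
import math
from typing import List, Tuple

# One pixel: (depth, (r, g, b)); depth is math.inf where nothing was drawn.
Pixel = Tuple[float, Tuple[float, float, float]]


def depth_composite(polygons: List[Pixel], points: List[Pixel]) -> List[Pixel]:
    """Per pixel, keep whichever layer is nearer the camera (ties go to polygons)."""
    if len(polygons) != len(points):
        raise ValueError("buffers must be the same size")
    return [p if p[0] <= q[0] else q for p, q in zip(polygons, points)]


EMPTY: Pixel = (math.inf, (0.0, 0.0, 0.0))  # a pixel neither layer covered
```

A renderer-level integration would more likely write points into the engine’s own depth buffer rather than compositing afterwards, but the occlusion rule is the same.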

NuDesign

Once you have accurate spaces in VR, a world of opportunities opens up. NuDesign is Nurulize’s collaborative VR production tool. It is built with Atom View under the hood, which allows it to rapidly import and view high quality, robust volumetric data from a vast range of sources. NuDesign is a multi-user collaboration tool aimed at professionals: users can connect and collaborate in VR while importing Atom View data. Metzger explains, “NuDesign uses our UE4 plugin of Atom View and allows measuring, drawing and the interactivity of active collaboration”. Critically, thanks to this clever data handling, Atom View data can be streamed across a network inside a facility, which is key for collaboration.

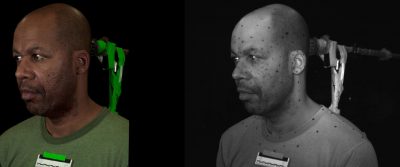

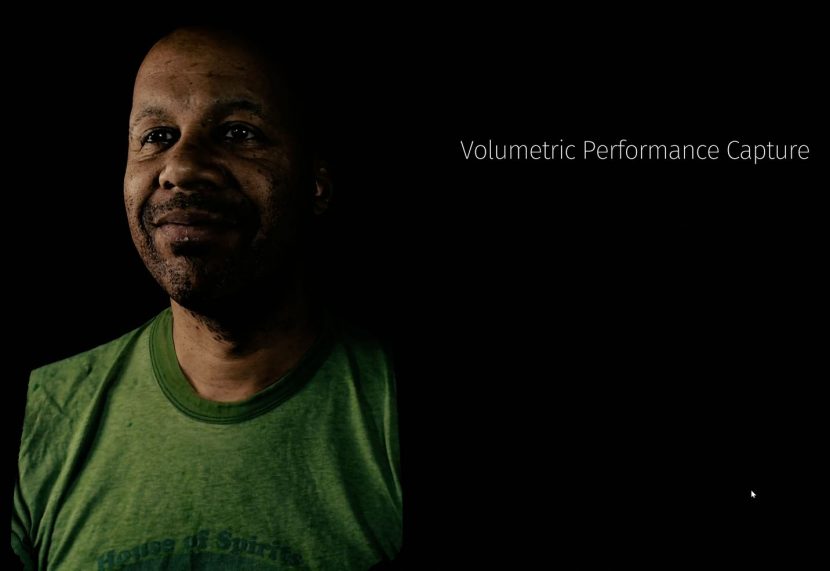

Digital Humans Test: Malik

One of the demos at SIGGRAPH that caught our attention was a brilliant human performance experienced in VR via Atom View. Nurulize shot it purely as a test, with one of their programmers, Malik Williams (ex-Method Studios), as the subject. They mounted two IR machine vision cameras side by side with two Nikon D810s shooting a log format at 1920×1080 at 60 fps.

Metzger did this because he wanted to shoot their subject, Malik, with dramatic, quality lighting. “People react to good photography, but the trouble with a lot of Structure from Motion (SfM) algorithms is that they require very even and flat lighting”, explains Metzger. The test solved this by using one set of cameras to record the lit performance and the other set as witness cameras for reconstruction. This was possible because they shot Malik in duplicate fashion: both Nikons shot at 60 fps into Atomos Shogun recorders, with a set of IR cameras right beside them. Malik was lit with LED lights plus a separate set of IR lights. “I literally came up with a magic mixture that would let me mark up the performance only visible in IR and invisible to the lower color spectrum” says Metzger. This approach allowed complete freedom of lighting, which he explained is key for production applications.

After the performance was complete, they did a photogrammetry solve in both color and IR. “The solve is resurfaced, every marker is modeled and a character is rigged with performance capture from all 4 cameras” he explains. The footage from the two color Nikon D810 cameras was then projected in V-Ray for Maya, using camera-based mattes and blending between the projections. Wherever none of the cameras had color data, the render filled in the information from a CG version. “Since we render out a point cloud from V-Ray, all those crazy data requirements are dropped into a single point cloud that was, uncompressed, about 12 megs per frame of 32 float color data” he explains. All that is needed is an XYZ position and an RGB value; there is no need for surface normals, UVs or textures.
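Metzger’s figure can be sanity-checked with some back-of-the-envelope arithmetic. Assuming “12 megs” means 12 MiB and that each point is just XYZ plus RGB as 32-bit floats (6 floats, 24 bytes), one frame holds roughly half a million points, and playing every captured frame back at 60 fps would need about 720 MiB/s uncompressed. The last line shows what storing a 3-float normal per point, which this pipeline avoids, would cost. The helper names are illustrative only.

```python
FLOAT32_BYTES = 4
MIB = 1024 * 1024


def points_in_frame(frame_size: int, floats_per_point: int) -> int:
    """How many float32 points fit in one uncompressed frame."""
    return frame_size // (floats_per_point * FLOAT32_BYTES)


def frame_bytes(num_points: int, floats_per_point: int) -> int:
    """Uncompressed size of one frame of float32 points."""
    return num_points * floats_per_point * FLOAT32_BYTES


# XYZ + RGB = 6 floats per point; "12 megs" assumed to mean 12 MiB.
n = points_in_frame(12 * MIB, 6)      # 524,288 points per frame
rate = frame_bytes(n, 6) * 60         # 720 MiB per second at 60 fps
with_normals = frame_bytes(n, 9)      # 18 MiB per frame, 50% larger
```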

The problem was made more complex by the need to line up shutter angles and sync all the footage, which was solved through endless testing and adjustment. To complete the model, Malik’s teeth were scanned and added. The team also built a full conventional CG double to use wherever the volumetric performance capture had missing data, since a small fill patch of approximate CG Malik would be vastly less noticeable than a hole in the data or face.
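The projection blending and CG fallback described above can be sketched per point: each camera contributes a color and a weight (zero, or no color, where its matte excludes the point), the weighted colors are averaged, and if no camera saw the point the color comes from the CG double instead. The weights and the function `blend_projections` are hypothetical; the source does not say how the V-Ray projections were weighted.

```python
from typing import Optional, Sequence, Tuple

RGB = Tuple[float, float, float]


def blend_projections(samples: Sequence[Tuple[Optional[RGB], float]], cg_fallback: RGB) -> RGB:
    """Weighted average of camera colours for one point, or the CG colour if none apply.

    samples: one (colour, weight) pair per camera; colour is None when that
    camera's matte excludes the point.
    """
    total = 0.0
    acc = [0.0, 0.0, 0.0]
    for colour, weight in samples:
        if colour is None or weight <= 0:
            continue  # this camera contributes nothing here
        total += weight
        for i in range(3):
            acc[i] += weight * colour[i]
    if total == 0.0:
        return cg_fallback  # no camera data: fill from the CG double
    return (acc[0] / total, acc[1] / total, acc[2] / total)
```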

“If I showed you pics of the setup you would totally laugh. I’m not looking to do this for service. I just wanted to show what our software can allow in terms of freedom of workflow and agnostic data workflows to any device or software” he explained.

Very interesting technique, but I think Euclideon introduced this YEARS ago. In 2011 they posted this video: https://youtu.be/00gAbgBu8R4

But Euclideon has not made it available in any usable form. They chickened out of the game industry (which they never truly entered anyway; they only made their own stereoscopic projection VR arcade with government money) and went straight into geospatial, which is a much better fit for such technology. Why they haven’t done a Unity or an Unreal plugin is due to their sheer greed and fear of others immediately understanding the shortcomings of their technology. The same goes for the Atomontage engine.

Yes, I was just thinking about Euclideon. And of course the limitation with any point cloud model is that the lighting, shadows and highlights are baked in. Though I see Euclideon has some raytraced flat surfaces thrown in.

Why would the lighting/shadows be baked in? Euclideon also uses point clouds from normal 3D polygon models, which I THINK can be lit afterwards.

Once you put data on your points for normals and all the other attributes that materials have, your file complexity balloons. I see that at the end of the Euclideon demo there is talk of shadows, but it appears to me to be more of an ambient occlusion thing… but perhaps I’m wrong. I’m not sure how infinitely small points can cast shadows, but all I really know well is polygons, so… =:-P