Link to our fxpodcast episode discussing Computational Photography with Stu Maschwitz

The iPhone’s video capabilities are remarkable. Through a combination of multiple lenses, computational photography, machine learning and new processing chips, the iPhone 13 Pro Max lets filmmakers shoot HDR footage and record in ProRes. This is immediately apparent on the iPhone’s screen, which in the iPhone 13 Pro is now even brighter, providing 1,000 nits of brightness (1,200 nits peak for HDR). As a point of reference, the latest M1 iMac features a 24-inch 4.5K Retina display with 500 nits of brightness. Frankly, it is insane that an iPhone 13 Pro Max has twice the brightness of a top-of-the-line iMac. This is extremely handy when shooting outside, as you can actually see the screen.

Much has been written about the new ProMotion, which adaptively scales the iPhone’s display refresh rate. However, in truth, the two main benefits of the new approach to an industry professional are that it squeezes a lot more use out of your battery and, importantly, that it will not apply high frame rates to movies or clips that were not shot that way. If The Hobbit is available in its native 48fps it will be displayed as such, but if a movie was shot at 24fps, it will play back at 24fps, without the need to dig through menus to disable annoying settings. The new iPhone simply and automatically respects the native format the filmmaker used.

Lens Tech

iPhone 13 Pro has a new lens and new signal processing. This allows for a range of filmmaking opportunities. Firstly, the optical zoom is 3x rather than 2.5x; and secondly, the new wide-angle also allows for macro photography. Here at fxguide, we were darn close to buying a macro 100mm lens and the iPhone came out just in time to save us from that particular B&H purchase.

The macro mode focuses at distances under 15cm, and while the menus still offer the zoom choices of the other lenses, the macro image always comes from the new wide lens.

Optical Stabilising

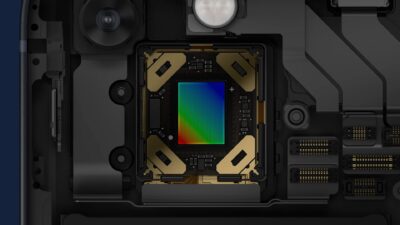

The sensor-shift optical image stabilization (OIS), unique to iPhone, is available on both iPhone 13 models. This impressive stabilizing is of the sensor instead of the lens, so images are smooth and video is steady, even when the user is not. Unlike optical tracking-based stabilizing, the OIS produces a result that is vastly less affected by what the camera is actually filming and more by how the camera itself is moving.

The new wide camera has a larger sensor with 1.9 µm pixels, the largest ever on iPhone, resulting in less noise and allowing faster shutter speeds across lighting conditions. Coupled with the larger ƒ/1.5 aperture, the wide camera on iPhone 13 offers a massive improvement in low-light situations, up to 2.2x when compared to iPhone 12 Pro, and nearly 1.5x when compared to iPhone 12 Pro Max.

Computational Photography

The iPhone is a remarkable camera system because it does so much more than faithfully photograph and reproduce the images. Much of the genius of the phone’s imagery is from the hardcore computing done by the A15 chip.

Cinematic mode

If you have ever played with pin-hole cameras you know that such a mechanical device has a virtually infinite depth of field. For the longest time, this was a reason not to use an iPhone-style camera, since its tiny lens and sensor behave in much the same way. A big sensor, being the opposite of a pin-hole, provides a shallow depth of field. Once filmmakers or photographers have a shallow depth of field, they can make a foreground subject pop. It is possible to pull focus and direct the audience, and a long lens at a wide-open f-stop can produce a breathtakingly beautiful shot that is often called cinematic.

With the new Cinematic mode, perhaps for the first time, the deep depth of field that comes from the tiny size of the iPhone’s lens and sensor works for filmmakers. It is no accident that on a full film shoot there is a dedicated, experienced focus puller. It is hard, really hard, to accurately and seemingly effortlessly adjust the focus by hand to move from one subject to the next. Even with laser distance finders and other tools, it is a manual process that is baked into the shot. If you don’t like the focus pull, or the final focus is a fraction soft: bad luck, go again.

What Apple has done with its Cinematic mode is take advantage of the fact that the small camera has almost everything in focus, and then add the focus pull as a digital rack defocus. This means the timing and nature of any focus pull can be edited and adjusted in post. It is important that Apple applies a digital rack defocus and not a normal blur, as the key to the cinematic shot is the bokeh. An in-camera defocus will cause a background pin highlight to bloom into a disc shape. A blur on the same pin highlight in Photoshop would average out the pin and at best fade it and at worst completely hide it.
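The difference between a defocus and an ordinary blur can be illustrated with a small sketch. This is not Apple's implementation, which is not public. It assumes a hypothetical 15 x 15 greyscale scene containing a single pin highlight whose scene-linear intensity (`PIN = 50.0`, an invented value) is far brighter than display white (1.0). The defocus spreads that light over a uniform disc before clipping for display, while the 'Photoshop-style' blur applies a Gaussian to the already clipped image.

```python
import math

def disc_kernel(radius):
    """Uniform disc: every offset inside the circle gets equal weight."""
    offsets = [(dx, dy)
               for dy in range(-radius, radius + 1)
               for dx in range(-radius, radius + 1)
               if dx * dx + dy * dy <= radius * radius]
    weight = 1.0 / len(offsets)
    return {offset: weight for offset in offsets}

def gaussian_kernel(radius, sigma):
    """Normalised Gaussian: weight falls off smoothly from the centre."""
    raw = {(dx, dy): math.exp(-(dx * dx + dy * dy) / (2 * sigma * sigma))
           for dy in range(-radius, radius + 1)
           for dx in range(-radius, radius + 1)}
    total = sum(raw.values())
    return {offset: w / total for offset, w in raw.items()}

def spread(image, kernel):
    """Scatter each pixel's light over the kernel footprint (a convolution)."""
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            value = image[y][x]
            if value == 0.0:
                continue
            for (dx, dy), k in kernel.items():
                yy, xx = y + dy, x + dx
                if 0 <= yy < height and 0 <= xx < width:
                    out[yy][xx] += value * k
    return out

def clip(image):
    """Clamp to display white, as a display-referred image would be."""
    return [[min(v, 1.0) for v in row] for row in image]

SIZE, RADIUS = 15, 3
PIN = 50.0  # assumed scene-linear intensity of the highlight (hypothetical)

scene = [[0.0] * SIZE for _ in range(SIZE)]
scene[SIZE // 2][SIZE // 2] = PIN

# Defocus: spread the real light over a disc, then clip for display.
defocused = clip(spread(scene, disc_kernel(RADIUS)))
# Ordinary blur: the image is already clipped, then softened with a Gaussian.
blurred = spread(clip(scene), gaussian_kernel(RADIUS, sigma=1.5))

disc_pixels = sum(1 for row in defocused for v in row if v >= 1.0)
blur_peak = max(max(row) for row in blurred)
print(f"defocus: {disc_pixels} pixels at full white")
print(f"blur: brightest pixel is {blur_peak:.3f} of full white")
```

In this toy scene the defocus leaves a solid white disc, while the blurred highlight fades to a small fraction of full white, which is the behaviour described above.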

To be able to blur in Cinematic mode the iPhone needs a heap of processing power, which it has due to the new Bionic processor, and it needs to understand the depth of the image. When using the front-facing camera system that unlocks your iPhone using your face, this is aided by the invisible depth sensor, but in normal use of the back-facing cameras, it does this through photogrammetry using multiple lenses. As your iPhone’s lenses are a fixed, very precise distance apart, the camera can calculate a depth map. Apple won’t confirm it, but it is believed the depth map calculated for Cinematic mode is a 320 x 180 black and white map. This sounds low res, but the output is a blurred image, so sharp detail is not the number one priority.
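As a rough illustration of why a fixed lens spacing makes depth recoverable, here is a minimal sketch of the textbook stereo triangulation relation, depth = focal length x baseline / disparity. It is not a description of Apple's algorithm, which has not been disclosed, and the focal length, baseline and disparity values are invented for the example.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Textbook stereo relation: Z = f * B / d.

    focal_length_px: focal length expressed in pixels (assumed value)
    baseline_m:      distance between the two lenses in metres (assumed value)
    disparity_px:    horizontal shift of a feature between the two views
    """
    if disparity_px <= 0:
        # No measurable shift: treat the point as infinitely far away.
        return float("inf")
    return focal_length_px * baseline_m / disparity_px

# Hypothetical numbers: a 1000-pixel focal length and lenses 1cm apart.
FOCAL_PX = 1000.0
BASELINE_M = 0.01

for disparity in (20.0, 10.0, 5.0):
    depth = depth_from_disparity(FOCAL_PX, BASELINE_M, disparity)
    print(f"disparity {disparity:>4} px -> depth {depth:.2f} m")
```

Nearby objects shift more between the two views than distant ones, so halving the disparity doubles the estimated depth. Repeating the calculation per region of the frame gives a coarse depth map of the kind described above.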

In terms of what to keep in focus, the system can automatically track faces and move between them, or you can select a region on the screen to be your primary focus. One brilliant side point is that since the sensor actually sees more than what is recorded (and you can actually click a button to see this), the cinematic focus tracker will pick up a face before it enters your recorded frame, and will track someone fully out of frame before recalibrating. This sounds like a trivial feature but it would be horrible if the focus left a person too soon, or needed their whole face to be in the shot before it registered their presence and pulled focus to them.

The Cinematic mode can struggle if the segmentation machine learning cannot cleanly lock onto the image in the foreground.

The clip shot in Cinematic mode downloaded from the iPhone contains all the data, so this can still be edited offline in, say, Final Cut Pro on your iMac.

Machine Learning

Face detection is used throughout the iPhone but there are even more advanced image tools that use machine learning. When taking a group photo, rather than just picking a good balance of exposure, white balance and detail setting for the shot, the iPhone cleverly segments the image. For each person and sometimes part of a person, the system computes the best skin tones and then effortlessly mixes these together into one stable solution. At this point, we are long past the comparison to a normal camera. For each point in the shot, there are 9 samples taken that are processed by the Image Signal Processor (ISP), the smart segmentation High Dynamic Range (HDR), deep fusion and noise reduction tools.

To further aid in handling special cases, the Pro night portrait mode uses the additional information gathered from the LiDAR sensor to help inform and build a depth map and image segmentation on the new wide-angle lens in low light.

The Smart HDR produces an output image that immediately looks spectacular on the iPhone’s HDR screen. However, many professionals want to effectively shoot a clip that is more ‘RAW’ and then edit it in post, especially if they want to intercut their iPhone footage with that of a traditional Arri, Red or another high-end cinematic camera.

Enter stage left the new option to shoot ProRes…

ProRes

Apple ProRes 422 HQ can be shot on the newest iPhone with iOS 15.1. The files are ideal for post, but they are huge. A minute of 10-bit HDR ProRes is 1.7GB in HD and 6GB in 4K. If you needed a reason to buy a version of the phone with loads of storage, this is it. Prior to ProRes, the largest files on our iPhone were uncompressed RODE audio files, but that is nothing compared to a day’s filming in ProRes. The best way to download the files is to plug in your iPhone with a Lightning cable. AirDrop is possible but only practical on tiny test shots and is not a realistic production option.

Apple ProRes 422 HQ is a higher-data-rate version of Apple ProRes 422 that preserves visual quality at the same high level as Apple ProRes 4444, but for 4:2:2 image sources. When shooting at HD resolution, ProRes 422 HQ offers visually lossless preservation of the highest-quality professional HD video that a single-link HD-SDI signal can carry. With the iPhone, one can record 60fps HD ProRes and download it later.

This codec supports full-width, 4:2:2 video sources at 10-bit pixel depths, while remaining visually lossless through many generations of decoding and reencoding. The target data rate for HD is approximately 220 Mbps at 1920×1080 and 29.97fps. The iPhone shoots 4K ProRes at 24fps at 704 Mbps. You can shoot 4K ProRes at 30fps, at which point the data rate goes up slightly to 760 Mbps, but at this rate, for the first time, the 4K ProRes is of slightly lower quality than the normal 4K ProRes at 24fps. The ‘compromise’ is slight and exceptionally unlikely to be noticed. With the exception of this top 4K 30fps mode, there is no difference or compromise between what the iPhone will produce and full spec ProRes generated by any Apple device.
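The per-minute file sizes quoted earlier follow directly from these data rates. The sketch below does the arithmetic, assuming decimal units (1 GB = 1,000,000,000 bytes) and ignoring audio and container overhead. The function names and the 256GB free-space figure are illustrative only.

```python
def gigabytes_per_minute(megabits_per_second):
    """Convert a video data rate in Mbps to storage used per minute in GB."""
    bits_per_minute = megabits_per_second * 1_000_000 * 60
    return bits_per_minute / 8 / 1_000_000_000

def minutes_of_footage(free_gigabytes, megabits_per_second):
    """Approximate recording time that fits in the given free space."""
    return free_gigabytes / gigabytes_per_minute(megabits_per_second)

# Data rates as quoted in the text above.
RATES_MBPS = {"HD 29.97fps": 220, "4K 24fps": 704, "4K 30fps": 760}

for label, rate in RATES_MBPS.items():
    print(f"{label}: {gigabytes_per_minute(rate):.2f} GB per minute")

# Hypothetical example: 256GB of free space, recording 4K at 24fps.
print(f"{minutes_of_footage(256, 704):.0f} minutes of 4K 24fps")
```

The video stream alone works out to roughly 1.65GB per minute for HD and a little over 5GB per minute for 4K, in the same ballpark as the 1.7GB and 6GB figures above.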

While the current iPhone Camera App only provides ProRes in full ProRes 422 HQ, ProRes can also be recorded in lower ProRes formats in third-party camera apps. For example, in the FiLMiC Pro app, you can select ProRes 422, ProRes 422 LT, and even ProRes 422 Proxy. In ProRes 422 Proxy, you can trade quality for a smaller file size and still shoot 4K (2160p), but you still cannot shoot 4K above 30fps. To achieve 60fps you can only shoot ProRes at HD resolution.
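The frame rate limits described above can be written down as a small lookup, which may be handy when planning a shoot. This is only a sketch of the constraints as stated in this article (4K up to 30fps, HD up to 60fps). The names are hypothetical and it does not query any real camera API.

```python
# Maximum ProRes frame rate per resolution, as described in the text above.
MAX_PRORES_FPS = {"HD": 60, "4K": 30}

def can_record_prores(resolution, fps):
    """Return True if the resolution and frame rate fit the stated limits."""
    limit = MAX_PRORES_FPS.get(resolution)
    return limit is not None and 0 < fps <= limit

for resolution, fps in (("4K", 24), ("4K", 30), ("4K", 60), ("HD", 60)):
    verdict = "ok" if can_record_prores(resolution, fps) else "not available"
    print(f"{resolution} at {fps}fps: {verdict}")
```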

There are a couple of major reasons one might want to shoot ProRes. Firstly, the format provides much greater grading opportunities. The second reason is that while the traditional iPhone video codec looks good most of the time, it can produce artifacts with loads of high-frequency material. If you are shooting detailed fur, water with sparkling highlights or even sometimes dense trees with moving leaves, the compression rates require these details to be recorded with a lack of fidelity that manifests as a semi-blurred region. This is not a problem on any home video but would be unacceptable on a production.

If you need to do complex grading of graduated skies or detailed production work, use ProRes, but it should not be your default format.