Day 3 at SIGGRAPH in LA – we attended a path tracing in movies course and looked into the latest in games, plus checked out the trade show.

Trade Show

The trade show at SIGGRAPH is only open for about three-quarters of the show, and is moderate in size compared to the powerhouse production talks and technical papers, but for those not able to get full conference passes it offers an excellent chance to meet people and to see various products and artist demos.

Our own Mike Seymour hosted a panel on The Foundry booth on Wednesday exploring some of the business issues facing the industry with Barry Zundell from Villusion, Oscar-winning VFX supervisor Alex Henning, and Shannon Gans, head of New Deal Studios.

The talk covered the loss of talented staff to Silicon Valley, the need for a more transparent (and perhaps time-and-materials) business model, and the move into new technology, most centrally how all three companies had established successful and significant VR businesses. The talk was streamed live as part of The Foundry’s SIGGRAPH coverage.

Also on The Foundry booth was a tech presentation featuring the Nokia OZO VR camera.

In addition to big booths such as Intel, Chaos Group (V-Ray) and PIXAR, there are many more specialized companies, as well as the SIGGRAPH Job Fair, which seemed a bit quieter this year than in years past.

There were still a reasonable number of booths, though perhaps fewer than in past years, and perhaps as a result it has seemed quiet whenever we have gone by, which is surprising given how important the job fair has been over the years.

One of the interesting things about the SIGGRAPH exhibit hall is finding new and perhaps undiscovered technology on some of the smaller booths.

One such example is Otoy, which last year had a small tech demo of its lightfield technology on the corner of its booth, down low on a coffee table. The technology proved so interesting that all the major VR suppliers beat a path to Otoy’s door following the show. They are back this year with a quantum leap over last year’s tech, which is again extremely impressive. Because the hardware they are using is pre-release and under NDA, the demo is only being shown in a private room at the back of the hall, and, as we tweeted yesterday, if you are at SIGGRAPH, beg, bribe or grovel to get into that room to experience it.

The tech is so new that it only started working mid-morning on the Wednesday of SIGGRAPH. While fxguide was getting the first demo (after the team who lent Otoy the specialist hardware), the Otoy team were being messaged by major studio executives outside, demanding to be let into the demo room and onto the schedule, as every demo slot was already allocated until the end of the show.

Otoy’s lightfield tech is one of the best pieces of VR tech on show, but it is not alone in having private suites off the main floor. The material being shown by Epic Games is remarkable and one of the best game engine demos yet seen in VR, which is not surprising as its real-time Kite piece won Best of Real-Time Live on Tuesday night.

For those not able to get into private demos and NDA suites, there is a host of outstanding work, on more familiar hardware, in the VR Village next to the Emerging Technologies displays. Ford and other car companies are doing great work and finding commercial uses for VR.

One of the great advantages of being at SIGGRAPH is being able to see specialist setups that simply cannot be downloaded or viewed any other way. One such example is a VR crash test made for insurance company NRMA. The viewer sits in a real car in the VR Village wearing an Oculus Rift DK2 and experiences a car crash up close, first with today’s safety equipment and then with that of 20 years ago. The piece had to be modified and redone for the left-hand-drive American car, explained Stuart White, Head of 3D at Fin Design, which produced it for agency TBWA/Whybin. The piece has already won a number of awards locally and been taken to Cannes and to SXSW, where the SIGGRAPH team discovered it and invited it to SIGGRAPH in LA.

VR is the dominant new technology at SIGGRAPH 2015, with a huge range of booths, demos and technical papers covering everything from tracking facial expressions while wearing a headset to advanced lightfield papers in the session on the last day. While not presenting in that session, 8i were at SIGGRAPH, having just gone public last week. They have some of the most emotionally impactful VR we have ever seen, which uses lightfields, but not in the way most companies do. Watch fxguide next week for more on 8i and details on downloading test VR experiences: truly remarkable footage of real people filmed for VR or mobile virtual playback.

The mood among most people seems to be that the technology has much room to grow: while VR seems big and well funded today, this is the tip of the iceberg, and once AR devices such as Microsoft’s HoloLens and Magic Leap start shipping, the market could well end up 25% VR and 75% AR.

Pixar

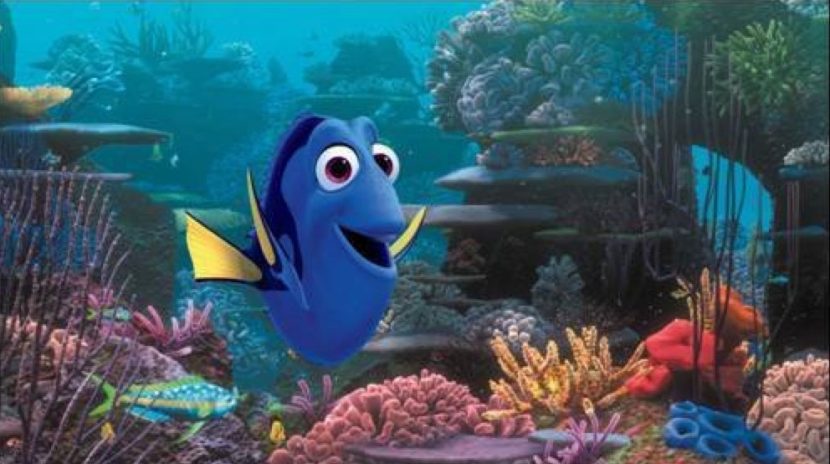

Pixar once again created enormous queues, both for its annual handout of toy teapots and for its User Group, which was refashioned this year as an Art and Science Fair. RenderMan drew rock-star queues, and inside the event users were treated to both the new Pixar animated short Sanjay’s Super Team and one minute of the upcoming feature Finding Dory. As Pixar’s Dylan Sisson joked: “Yeah, we lost another fish!”

The move by Pixar to RIS, RenderMan’s new path tracing architecture, is one of three key technologies on Finding Dory; the other two are running RenderMan inside Katana and using Universal Scene Description (USD).

Pixar Animation Studios has announced it intends to release its USD as an open-source project by summer 2016. USD addresses the ever-growing need in the CG film and game industries for an effective way to describe, assemble, interchange, and modify high-complexity virtual scenes between digital content creation tools employed by studios.

The powerful techniques for composing and non-destructively editing graphics “scene graphs” that form the core of USD have been evolving at Pixar for close to 20 years, dating back to A Bug’s Life. These techniques, such as file-referencing, layered overrides, variation, and inheritance, were completely overhauled into a robust and uniform design for Pixar’s next-generation animation system, Presto. “USD is the marriage of Presto’s ‘composition engine’ to lazy-access cached scene description, with top-to-bottom enhancements for scalability and leverage of today’s multi-core systems effectively,” says Sebastian Grassia, Lead Engineer for the USD project. Although it is still under active development and optimization, USD has been in use for nearly a year in the making of Finding Dory, bringing new levels of performance and stability to Pixar’s production.

The open-source Alembic project brought standardization of cached geometry interchange to the VFX industry; USD hopes to build on Alembic’s success, taking the next step of standardizing the “algebra” by which assets are aggregated and refined in-context. Says Guido Quaroni, VP of Software R&D at Pixar, “One of the key aspects of Pixar’s pipeline is the ability for hundreds of artists to operate simultaneously on the same collections of assets in different contexts, using separate ‘layers’ of data that are composited together at various production stages. USD generalizes these concepts in an attempt to make them available to any DCC application.”
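USD’s real composition engine is far richer than this (references, variants, inheritance and more), but the basic idea of layered, non-destructive overrides that Quaroni describes can be sketched in a few lines of plain Python. The `Layer` type and `compose()` function below are hypothetical and are not the USD API: layers are listed strongest first, and the strongest opinion on each attribute wins without the weaker layer being modified.

```python
from typing import Any, Dict, List

# Hypothetical stand-in for a scene description layer:
# prim path -> {attribute name: value}. This is NOT the pxr/USD API.
Layer = Dict[str, Dict[str, Any]]


def compose(layers: List[Layer]) -> Layer:
    """Flatten layers ordered strongest first; stronger opinions win."""
    result: Layer = {}
    # Apply weakest to strongest so stronger opinions overwrite weaker ones.
    for layer in reversed(layers):
        for prim_path, attrs in layer.items():
            # setdefault creates a fresh dict, so source layers are never mutated.
            result.setdefault(prim_path, {}).update(attrs)
    return result


# A published asset layer, plus a shot-level override layer on top of it.
asset_layer: Layer = {
    "/Fish": {"color": "blue", "finScale": 1.0},
    "/Fish/Eyes": {"radius": 0.2},
}
shot_layer: Layer = {
    "/Fish": {"color": "teal"},  # overrides the color for this shot only
}

shot = compose([shot_layer, asset_layer])
print(shot["/Fish"])                  # {'color': 'teal', 'finScale': 1.0}
print(asset_layer["/Fish"]["color"])  # blue
```

Because each override lives in its own layer, different artists can work on separate layers over the same asset at the same time.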

The USD distribution will include embeddable direct 3D visualization provided by Pixar’s modern GPU renderer, Hydra, as well as plugins for several key VFX DCCs, comprehensive documentation, tutorials, and complete Python bindings.

Pixar’s new short – Riley’s First Date.

Pixar has already been sharing early USD snapshots with a number of industry vendors and studios for evaluation, feedback, and advance incorporation. Damien Fagnou, Global Head of VFX Operations for MPC Film, relates, “USD is an amazing opportunity for both software vendors and VFX companies; this type of common open standard drives the industry to greater innovation by focusing development and leveraging a wide community of artists and developers. We have been working with Pixar to evaluate USD since the beginning of the year and plan to use it as a key part of our future VFX pipeline.”

Jeff Clifford, Head of R&D for Double Negative, states, “Over the past 12 months, Double Negative have converted many large environment assets from our proprietary system over to USD for testing and more recently have been using the USD viewer successfully in production. Going forward we are hoping a dedicated team can help test and develop USD to cater for all our scene description needs, including our cross-studio collaborations.”

Among the vendors helping to evaluate USD are The Foundry and Fabric Software. Andy Lomas, Product Manager of Katana, says, “At The Foundry we believe that USD will have a huge impact, and deserves to become an industry standard similar to OpenEXR and OpenSubdiv. It is an ideal format for creating rich assets that will work naturally with Katana, allowing workflows that were previously only available to large studios using proprietary tools. We consider that it is exactly what is needed to enable a scalable collaborative pipeline, allowing the best in class tools to be used for each part of the production process.”

Kevin Tureski, VP Product at Fabric Software, states, “USD perfectly complements Fabric Engine by handling complex scene data in an application-agnostic fashion. The design of the USD API made it straightforward for us to integrate it directly into Fabric Engine and we already have customers testing the combination.”

PIXAR also released and showed a new short film from the Inside Out team.

Driving the VR

Most of the VR world relies on state-of-the-art graphics cards and GPU processing, so it is no surprise that NVIDIA has a strong presence at SIGGRAPH.

New Quadro GPUs from NVIDIA

NVIDIA announced two new Maxwell-based graphics cards at SIGGRAPH, the Quadro M5000 and Quadro M4000. These expand the Maxwell Quadro line, which began with the M6000 introduced in April this year. Smaller K-series cards are still shipping: the K2200, K1200, K620 and K420.

Here’s a quick look at the specifications of the new cards:

Quadro M5000

- CUDA Cores: 2,048

- GPU Memory: 8GB / 211 GB/s

- Single Precision: 4.3 TFLOPS

- Displays: 4 x 4K

- Power: 150W

Quadro M4000

- CUDA Cores: 1,664

- GPU Memory: 8GB / 192 GB/s

- Single Precision: 2.6 TFLOPS

- Displays: 4 x 4K

- Power: 120W

Convergence of Games and Film

One of the panels that fxphd.com was also involved with was the Convergence of Games and Film, as part of the main SIGGRAPH conference. Mike Seymour moderated the panel.

On the panel were:

- Rob Bredow, Industrial Light & Magic

- Kim Libreri, Epic Games

- Kim Davidson, Side Effects Software Inc.

- Frank Vitz, Crytek

- Rick Stringfellow, Electronic Arts Canada

- Colin Penty, The Coalition

- Bill Polson, Pixar Animation Studios (currently on leave writing a book on Pipelines)

This group ranged from chief creative officers to visual effects supervisors and game designers, and was perfectly placed to discuss the similarities and differences between the games and film industries. The conversation focused on artist issues and technology, rather than the use of film titles for games or vice versa.

Many aspects seem very similar in terms of tools and software, but render times, which are known to run to many hours per frame in film, need to be milliseconds in games. The panel also discussed workflow, staffing and the role of the director in each environment.

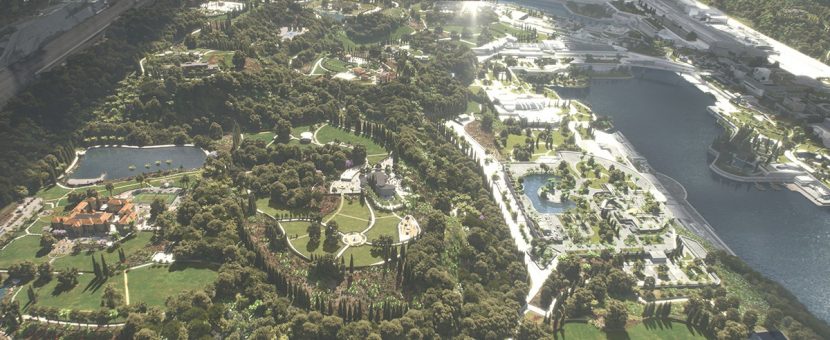

As part of the discussion, Kim Libreri showed the short film Kite rendering fully in real time and then, to prove the point, flew around the massive virtual world in real time, altering the lighting and moving past moving grass, clouds and animals: a remarkable example of a games company using its game engine for something not unlike a Pixar short film.

Later in the same panel, Rob Bredow showed the work of ILMxLAB, a full live demonstration of a film company, Lucasfilm, working in an interactive, game-like environment. In ILM’s case this was a fully live, real-time interactive story that allowed the user to view and move around a Star Wars world, experiencing it from the point of view of one of the main characters or as an observer, remarkably all running on an iPad. This is possible because the graphics are rendered in the cloud, so in theory anywhere you can stream Netflix you could be moving around the 3D Star Wars world in stunning high-resolution graphics. Once the team had this fully working, they ported it to VR, and Rob showed a video of the VR experience.

The panel benefited from a remarkable depth of experience, with most of the panelists having worked in both film and games. The discussion ended by looking at where the technology is going and how sampled digital people could play an increasing role in games, with interesting observations that big data or social elements may need to fill a void that is often assumed will be filled by A.I.

Path tracing in movies course

One of the great advantages of SIGGRAPH is that in addition to the short technical papers and talks there are also much longer courses. These are typically half-day sessions that are deliberately more educational than research focused. This year, for example, there was an excellent course on Path Tracing in Movies.

While path tracing is now huge, it has not actually been used in that many films yet. But the industry is clearly moving rapidly to path tracing, and an expert panel of researchers presented a strong line-up of papers and discussion explaining it.

Dr Alexander Keller, Director of Research from NVIDIA, gave the first talk which was an excellent summary of all the terms and approaches the rest of the speakers would cover.

There are many ray tracing approaches and variants beyond standard path tracing and bi-directional path tracing: for example, next event estimation, which explicitly samples the light sources and is good for small lights that random rays would often miss, or light tracing, which starts from the light source and then connects to the camera.
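A small sketch of why next event estimation helps with small lights, under a deliberately simple assumed setup: a surface point lit by a small light directly overhead, represented as a cone of directions with constant radiance. Both estimators below are unbiased for the same irradiance, but the hemisphere sampler only gets a nonzero sample when a random ray happens to hit the light, while next event estimation samples directions on the light directly. The names and numbers are our own, not from the course.

```python
import math
import random

# Hypothetical scene: a small light directly above a surface point, modeled as
# a cone of directions with half-angle ALPHA and constant radiance L_E.
L_E = 10.0
ALPHA = math.radians(2.0)
COS_ALPHA = math.cos(ALPHA)
# Exact irradiance for this setup: L_E times the integral of cos(theta) over the cone.
EXACT = L_E * math.pi * math.sin(ALPHA) ** 2


def hemisphere_estimate(n: int, rng: random.Random) -> float:
    """Sample the hemisphere uniformly and count only rays that hit the light."""
    total = 0.0
    for _ in range(n):
        cos_theta = rng.random()  # uniform hemisphere: cos(theta) ~ U[0, 1)
        if cos_theta >= COS_ALPHA:  # this ray happened to hit the light
            total += L_E * cos_theta * 2.0 * math.pi  # divide by pdf 1 / (2*pi)
    return total / n


def nee_estimate(n: int, rng: random.Random) -> float:
    """Next event estimation: sample directions on the light itself."""
    solid_angle = 2.0 * math.pi * (1.0 - COS_ALPHA)
    total = 0.0
    for _ in range(n):
        # Uniform direction inside the cone: cos(theta) ~ U(cos(alpha), 1].
        cos_theta = 1.0 - rng.random() * (1.0 - COS_ALPHA)
        total += L_E * cos_theta * solid_angle  # divide by pdf 1 / solid_angle
    return total / n


rng = random.Random(3)
print(EXACT, hemisphere_estimate(1000, rng), nee_estimate(1000, rng))
```

With 1,000 samples the hemisphere estimator typically finds the light zero times or once, so its answer is either 0 or far too high; every next event sample contributes, so its estimate is close to `EXACT` immediately.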

But the primary issue is getting enough good samples to converge on ground truth quickly enough, or at least to get the noise low enough in the time you have!
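To make “converge” and “noise” concrete, here is a toy 1D integral (a stand-in for one axis of a pixel) estimated three ways with the same 256 samples: plain Monte Carlo, stratified sampling (one jittered sample per stratum), and quasi-Monte Carlo using the base-2 van der Corput low-discrepancy sequence. The integrand, sample count and function names are our assumptions for illustration.

```python
import math
import random

N = 256  # samples per estimate (an arbitrary choice for this demo)
GROUND_TRUTH = 1.0 / 3.0  # exact integral of x**2 over [0, 1]


def f(x: float) -> float:
    """Toy integrand standing in for light arriving across one axis of a pixel."""
    return x * x


def random_estimate(rng: random.Random) -> float:
    # Plain Monte Carlo: N independent uniform samples.
    return sum(f(rng.random()) for _ in range(N)) / N


def stratified_estimate(rng: random.Random) -> float:
    # N equal strata, one jittered ('random') sample inside each.
    return sum(f((i + rng.random()) / N) for i in range(N)) / N


def van_der_corput(i: int) -> float:
    """Base-2 radical inverse: mirror the binary digits of i about the point."""
    result, scale = 0.0, 0.5
    while i > 0:
        result += (i & 1) * scale
        i >>= 1
        scale *= 0.5
    return result


def qmc_estimate() -> float:
    # Quasi-Monte Carlo: deterministic low-discrepancy points, no randomness.
    return sum(f(van_der_corput(i)) for i in range(N)) / N


def rms_error(estimator, trials: int = 100, seed: int = 1) -> float:
    """Root-mean-square error of a randomized estimator over repeated 'renders'."""
    rng = random.Random(seed)
    squared = [(estimator(rng) - GROUND_TRUTH) ** 2 for _ in range(trials)]
    return math.sqrt(sum(squared) / len(squared))


random_rms = rms_error(random_estimate)
stratified_rms = rms_error(stratified_estimate)
qmc_error = abs(qmc_estimate() - GROUND_TRUTH)
print(f"random {random_rms:.5f}  stratified {stratified_rms:.5f}  qmc {qmc_error:.5f}")
```

On a smooth toy integrand like this, the more evenly spread samples beat plain Monte Carlo by a wide margin; real scenes, with hard edges and many sampled dimensions, typically narrow that gap, so treat these numbers as illustrative only.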

Of course, path tracing touches on the broad areas of direct and global illumination, as well as how your sampling is done or ‘directed’, which leads to Multiple Importance Sampling (MIS). That in turn leads to discussion of object surfaces and the BRDFs used to compute the radiance reflected at any point in the scene.

Additionally, objects can have vast textures to be traversed, subsurface scattering, anisotropic properties or complex refraction. In short, a modern renderer needs to deal with complex light paths, complex sampling approaches and detailed surfaces.
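Multiple Importance Sampling combines several sampling strategies so that each is weighted most heavily where it does well. A minimal sketch using the balance heuristic on a toy 1D integral, taking one sample from each of two strategies: a uniform one and one whose pdf rises linearly (standing in for, say, BSDF sampling and light sampling). The integrand and strategies are assumptions for illustration only.

```python
import math
import random


def f(x: float) -> float:
    """Toy integrand standing in for light arriving at a point; integral is 1/3."""
    return x * x


# Two sampling strategies over [0, 1] (assumed for illustration).
def pdf_uniform(x: float) -> float:
    return 1.0


def pdf_linear(x: float) -> float:
    return 2.0 * x


def sample_linear(u: float) -> float:
    return math.sqrt(u)  # inverts the CDF x**2 of pdf_linear


def mis_estimate(rng: random.Random) -> float:
    """One sample from each strategy, combined with the balance heuristic."""
    xa = rng.random()
    xb = sample_linear(rng.random())
    total = 0.0
    for x in (xa, xb):
        # Balance heuristic weight w_i = p_i / (p_a + p_b); multiplied by
        # f / p_i this leaves f / (p_a + p_b) for either strategy.
        total += f(x) / (pdf_uniform(x) + pdf_linear(x))
    return total


rng = random.Random(7)
n = 100_000
estimate = sum(mis_estimate(rng) for _ in range(n)) / n
print(estimate)  # close to 1/3
```

The weights for any point sum to one across the two strategies, which is what keeps the combined estimator unbiased.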

The subject of rendering is huge and so this course focused on the primary strategies for the rays themselves and then four of the key renderers, RenderMan, Arnold, Manuka and Hyperion. Two of these are commercial packages and two are in-house renderers at Weta Digital and Disney Animation respectively.

Today there is a move to a push-button paradigm: systems are less about programming very complex shaders and/or tricks and more about physically plausible lighting, with fewer controls but often more realistic results.

The day started with many of the key terms explained both technically and conversationally by Keller.

Summary of terms:

Converges: in the simplest terms, the longer the ray-traced render runs, the closer the image gets to the final result. Convergence guarantees that the render does not introduce persistent artifacts, as earlier technologies did. Convergence is a vital property of a good path-tracing renderer.

Monte Carlo: a term referring to the random nature of how rays are cast. It allows one to compute a scene by “random sampling and estimation”, and it has been the predominant method. Its samples are independent, unpredictable and uniform.

Deterministic: a system driven by randomness is a “stochastic system” and, in this regard, is classified as non-deterministic. By contrast, a non-random system is deterministic. Deterministic sampling is easy to use on parallel architectures and normally faster than Monte Carlo.

Stratified: dividing a pixel into a set of subregions, with one ‘random’ ray inside each subregion.

Quasi-Monte Carlo: as in a stratified approach, the ‘random’ rays are distributed in a more uniform way, here using deterministic low-discrepancy sequences. This can give roughly a 30% speed improvement.

Unbiased: with Monte Carlo, unbiased means there is zero difference between the expected value of what one computes and the ground truth. Why then would you want a biased approach? A biased version might guarantee a solution, perhaps with some particular artifact, within a given time frame. It might be an approximation, but one whose error is unimportant compared with the saving in render time. ‘Unbiased’ is used a lot in marketing, but it does not strictly mean the image will converge.

Biased: biased renders have an ‘error’, but if it is one you can control, so that it vanishes as the number of samples grows, they are consistent. A consistent biased render will converge.

Physically Based Rendering (PBR): using real or measured materials, so that everything behaves as it would on a real set.
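The difference between unbiased, biased and consistent can be shown with a shrinking-kernel density estimate, in the spirit of progressive photon mapping (the example is ours, not from the course). We estimate the density of samples drawn from the pdf 3x² at x0 = 0.5, where the true value is 0.75, by counting samples within a radius r. For r ≤ 0.5 the expected value works out to 3·x0² + r², so the bias is exactly r²: a fixed radius stays biased however many samples are taken, while a radius that shrinks as N^(-1/4) is biased at every finite N yet consistent.

```python
import random

X0 = 0.5
TRUE_DENSITY = 3.0 * X0 ** 2  # pdf 3x^2 evaluated at X0


def density_estimate(n: int, radius: float, rng: random.Random) -> float:
    """Box-kernel density estimate at X0 from n samples of pdf 3x^2 on [0, 1]."""
    hits = 0
    for _ in range(n):
        x = rng.random() ** (1.0 / 3.0)  # inverse CDF: the CDF is x^3
        if abs(x - X0) < radius:
            hits += 1
    return hits / (2.0 * radius * n)


rng = random.Random(5)
for n in (1_000, 10_000, 100_000):
    fixed = density_estimate(n, 0.2, rng)             # biased and inconsistent
    shrinking = density_estimate(n, n ** -0.25, rng)  # biased but consistent
    print(n, fixed - TRUE_DENSITY, shrinking - TRUE_DENSITY)
```

The fixed-radius error settles near 0.04 (that is, 0.2²) no matter how large n gets, while the shrinking-radius error keeps falling toward zero.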

Arnold

Marcos Fajardo, the founder of Solid Angle

Interestingly, while bi-directional path tracing is popular, it is not how Arnold works. Arnold uses standard recursive Monte Carlo path tracing for its light transport simulation. It is a physically based Monte Carlo ray tracer, some 150K lines of highly optimized C++ code. Although it runs on the CPU, it is fast, able to handle very complex scenes, and keeps the number of controls low so that realism can be obtained without complex shader coding or special rendering tricks. The quality of Arnold’s light simulation has made it one of the most influential renderers in high-end image generation.

Fajardo boiled the world of rendering down to three things: “The single most important thing in producing a great image…is shadows,” he explained, and the only artifact you have to worry about “is noise.” To achieve this, the number one focus “is memory”.
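Arnold’s code is not public, but the shape of “recursive Monte Carlo path tracing” can be sketched with a white furnace test, a common renderer sanity check. In our assumed setup every surface in a closed scene emits radiance Le and reflects diffusely with albedo ρ, so the exact answer is Le / (1 − ρ). Each bounce recurses, and Russian roulette ends paths at random without biasing the result. This is an illustration of the technique, not Arnold’s implementation.

```python
import random

MAX_DEPTH = 200  # safety cap well under Python's recursion limit


def radiance(emitted: float, albedo: float, rng: random.Random, depth: int = 0) -> float:
    """One recursive path-traced radiance sample inside a closed 'furnace'."""
    # Russian roulette: continue the path with probability equal to the albedo.
    if depth >= MAX_DEPTH or rng.random() >= albedo:
        return emitted
    # With cosine-weighted sampling a diffuse bounce has weight = albedo;
    # dividing by the survival probability (also albedo) gives exactly 1,
    # and in the furnace every bounce sees the same scene, so just recurse.
    return emitted + radiance(emitted, albedo, rng, depth + 1)


def pixel_estimate(samples: int, emitted: float, albedo: float, seed: int = 0) -> float:
    """Average many independent path samples, as a renderer does per pixel."""
    rng = random.Random(seed)
    return sum(radiance(emitted, albedo, rng) for _ in range(samples)) / samples


print(pixel_estimate(100_000, 1.0, 0.5), 1.0 / (1.0 - 0.5))
```

If a renderer’s estimate drifts away from Le / (1 − ρ) in a furnace like this, energy is being lost or gained somewhere in its light transport.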

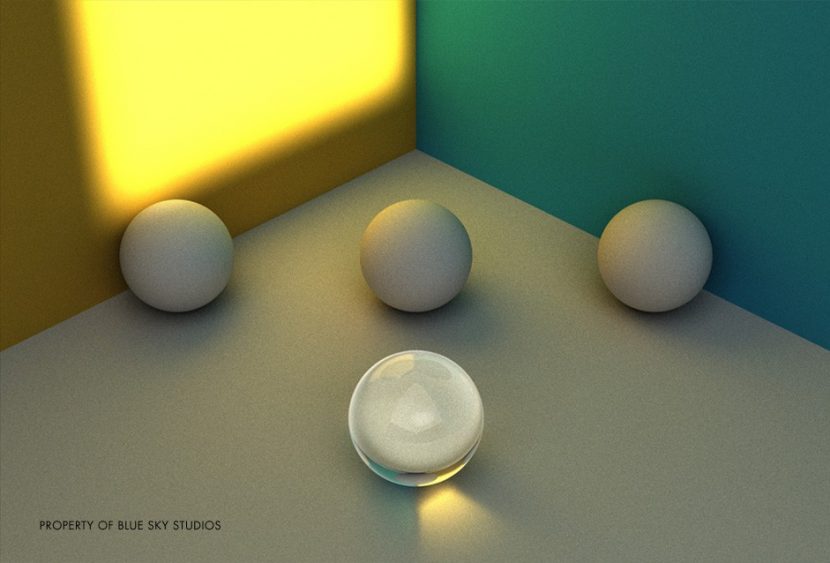

He proceeded to give a live demo and a rundown of his personal journey, including a pivotal visit to Blue Sky Studios in late 1987, where he saw a simple model lit by a sky light and properly ray traced. “It was the most beautiful image I had ever seen.” Blue Sky would later win the Oscar for Best Animated Short Film with Bunny, rendered in the same way.

Arnold was of course not used by Blue Sky, but seeing their groundbreaking work inspired Fajardo to work on Arnold and co-develop it while he was at Sony Imageworks. Today Arnold is sold by Solid Angle and is able to handle incredible geometric complexity, a point Fajardo demonstrated by showing the massive ring asset from Elysium (2013).

Looking ahead, Fajardo would like to see faster volume rendering, and even a bi-directional path tracing solution. While he agrees bi-directional tracing can be excellent, he does not want to add it if it slows down production rendering or makes rendering more complex for the user. His team is now exploring how, or with what algorithm, Solid Angle can embrace bi-directional path tracing while remaining as simple as Arnold is now and no slower.

RenderMan

Per Christensen from Pixar discussed RenderMan’s path to path tracing, and the new RIS architecture, which relies on Bxdfs (surface properties) and path tracing integrators (both standard and bi-directional).

Some 300 movies have been made with RenderMan since the first version was released in 1989. Until recently RenderMan was primarily a REYES renderer, and Pixar has only recently offered path tracing. Ray tracing has been part of RenderMan since 2002, for producing ray-traced shadows or ambient occlusion, and it was used extensively in the Pixar film Cars.

Pixar decided to add path tracing because it is a single-pass process that does not require baking, it multi-threads better than REYES, and it supports progressive rendering, providing faster feedback and the ability to do checkpoint rendering (more below).
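Progressive rendering and checkpointing fit together naturally: if the renderer keeps running sums rather than only the averaged image, it can display the current average at any time and later resume from saved state. A minimal per-pixel sketch; the `ProgressivePixel` class and its JSON checkpoint format are hypothetical and are not RenderMan’s.

```python
import json
import random


class ProgressivePixel:
    """Running average of radiance samples that can be checkpointed and resumed."""

    def __init__(self, total: float = 0.0, count: int = 0) -> None:
        self.total = total
        self.count = count

    def add_sample(self, value: float) -> None:
        self.total += value
        self.count += 1

    def value(self) -> float:
        # The current, progressively refining result (0.0 before any samples).
        return self.total / self.count if self.count else 0.0

    def checkpoint(self) -> str:
        # Save the accumulation state, not just the average, so that
        # rendering can resume and keep converging.
        return json.dumps({"total": self.total, "count": self.count})

    @classmethod
    def resume(cls, blob: str) -> "ProgressivePixel":
        state = json.loads(blob)
        return cls(state["total"], state["count"])


rng = random.Random(0)
samples = [rng.random() for _ in range(200)]

continuous = ProgressivePixel()
for s in samples:
    continuous.add_sample(s)

first_half = ProgressivePixel()
for s in samples[:100]:
    first_half.add_sample(s)
saved = first_half.checkpoint()           # e.g. written to disk at a deadline
resumed = ProgressivePixel.resume(saved)  # later, continue where it stopped
for s in samples[100:]:
    resumed.add_sample(s)

print(continuous.value(), resumed.value())  # identical results
```

Storing the sum and count (rather than the average) is what makes resuming exact: the resumed render performs the same additions in the same order as an uninterrupted one.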

The new path tracing system is provided by RIS, inside RenderMan. The old RSL shaders have been replaced by C++ Bxdfs, and RenderMan provides many to try. For example, the Marschner hair model, which came from Pixar’s feature film division, can now be used by any RenderMan user. Similarly, Brent Burley recently produced a simple but accurate approximation of subsurface scattering, which has replaced a large number of rival systems inside Pixar. It was this SSS that MPC used for its work on the latest Terminator film.
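Part of the appeal of Burley’s approximation is that it is a simple closed-form curve that is normalized, so it integrates to the surface’s albedo A. The sketch below uses the normalized diffusion profile as we understand it from the published work, R(r) = A·s·(e^(−s·r) + e^(−s·r/3)) / (8π·r), where s is a shape parameter controlling the scattering distance; treat the exact form as our assumption rather than RenderMan’s implementation. The numerical check integrates R over the surface plane.

```python
import math


def burley_profile(r: float, albedo: float, s: float) -> float:
    """Normalized diffusion profile R(r); the exact form is an assumption (see text)."""
    return albedo * s * (math.exp(-s * r) + math.exp(-s * r / 3.0)) / (8.0 * math.pi * r)


def total_reflectance(albedo: float, s: float, r_max: float = 60.0, steps: int = 20_000) -> float:
    """Integrate R(r) * 2*pi*r over radius (i.e. over the surface plane), midpoint rule."""
    dr = r_max / steps
    total = 0.0
    for i in range(steps):
        r = (i + 0.5) * dr  # midpoints avoid evaluating at r = 0
        total += burley_profile(r, albedo, s) * 2.0 * math.pi * r * dr
    return total


for s in (0.5, 1.0, 4.0):
    print(s, total_reflectance(0.8, s))  # each close to the albedo 0.8
```

Changing s only changes how far light spreads under the surface, not how much energy comes back out, which is the property that makes such a profile easy for artists to control.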

Finding Dory primarily uses unidirectional path tracing, with the bi-directional/VCM integrator used mainly to generate caustic ‘slides’ that are then projected from a light in the main render. The Good Dinosaur is Pixar’s last movie rendered with the older hybrid approach that uses REYES and ray tracing.

Per Christensen went on to discuss more complex integrators, including two recently presented at SIGGRAPH: VCM (bi-directional path tracing + photon mapping) and UPBP (unified points, beams and paths).

Regarding noise reduction, David Adler at Disney and his team developed an intelligent denoiser for Big Hero 6, and Andrew Kensler at Pixar productized it, removing it from the Disney-specific pipeline, developing it further, and now providing it as part of the RenderMan toolbox. It works from the diffuse color, specular color, albedo, depth, normal, motion vectors (if given a clip) and variance. So, unlike earlier image processing algorithms, the denoiser does not just process the final frame; it manages to denoise the image without softening textures or producing classic denoising artifacts.
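The production denoiser is far more sophisticated than this, but the core reason auxiliary buffers help can be shown with a 1D cross (joint) bilateral filter: neighbors are averaged only where a feature buffer, here albedo, says they belong to the same surface, so texture edges survive while noise is smoothed. The filter, its parameters and the data are our assumptions; it is not the Disney/Pixar algorithm.

```python
import math
from typing import List


def joint_bilateral_1d(noisy: List[float], albedo: List[float], radius: int = 3,
                       sigma_space: float = 2.0, sigma_albedo: float = 0.05) -> List[float]:
    """Denoise a scanline, averaging only neighbors whose albedo feature matches."""
    out = []
    for i in range(len(noisy)):
        weight_sum = 0.0
        value_sum = 0.0
        for j in range(max(0, i - radius), min(len(noisy), i + radius + 1)):
            w_space = math.exp(-((i - j) ** 2) / (2.0 * sigma_space ** 2))
            # Feature weight: near zero across a texture (albedo) edge.
            w_feat = math.exp(-((albedo[i] - albedo[j]) ** 2) / (2.0 * sigma_albedo ** 2))
            w = w_space * w_feat
            weight_sum += w
            value_sum += w * noisy[j]
        out.append(value_sum / weight_sum)
    return out


clean = [0.2] * 10 + [0.8] * 10   # a hard texture edge
albedo = clean[:]                  # the albedo buffer sees the same edge
noisy = [c + 0.05 * (-1) ** i for i, c in enumerate(clean)]  # toy noise
denoised = joint_bilateral_1d(noisy, albedo)
print([round(v, 3) for v in denoised])
```

Setting `sigma_albedo` very large turns this into an ordinary blur, which smears the edge; that is the classic softening artifact the feature buffers are there to prevent.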

Manuka

Johannes Hanika, who has been working part time at Weta Digital, discussed Manuka’s path tracing sampling algorithms. Weta’s major feature film pipelines require very high visual complexity, from digital doubles and detailed creatures right through to massive fight sequences and incredibly complex dragons!

Manuka is a physically based renderer that grew from a research project into a full production renderer (see our fxguide story from its launch).

Even with highly complex scenes, with vast geometry, the system is built to work well with hundreds of points per ray and not thousands.

Hanika ran through a series of technical examples of how various light transport algorithms perform, from standard Monte Carlo to Metropolis and Energy Redistribution Path Tracing (ERPT). ERPT is not practical at this point, and its parameters are hard to control. Manuka uses bi-directional path tracing with careful manifold next event estimation and MIS, and is implemented with physically based shading and energy-conserving lighting. The manifold next event estimation captures good caustics, which are vital for the detailed character work Weta does. Manuka is also a spectral renderer. Spectral rendering really becomes important in MIS and in volumetrics: for MIS it is possible to pick exactly which part of the spectrum to focus on for importance sampling. Spectral rendering also plays a larger role in the design of Weta’s shaders (which is being covered later in the week here at SIGGRAPH 2015).

Hyperion

Gregory Nichols and Christian Eisenacher from Walt Disney Animation Studios finished up the course by discussing their new Hyperion renderer as used on Big Hero 6. Hyperion is a path tracer that fxguide covered at its introduction, when we outlined the two primary aspects of the new Disney renderer.

Firstly, the renderer sorts large, potentially out-of-core ray batches, effectively grouping similar rays together; secondly, and most importantly, it does not shade ray hits until the rays have been sorted and grouped. This allows a cache-free approach to global illumination, irradiance, radiosity and/or physically based lighting on large models within the memory constraints of a practical renderer.
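A toy sketch of the two ideas, assuming hypothetical data structures: rays are binned by coarse direction before tracing, and shading is deferred until hits can be grouped, here by a material key, so each group’s data is touched once rather than in random order. Hyperion’s real sorting keys and out-of-core batching are far more involved; this only illustrates why sorting before shading improves coherence.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Hit:
    ray_id: int
    material: str  # hypothetical grouping key for this sketch


def direction_bin(d: Vec3) -> int:
    """Coarse direction key: the octant given by the signs of x, y and z."""
    x, y, z = d
    return (x >= 0) * 4 + (y >= 0) * 2 + (z >= 0)


def sort_rays(rays: List[Tuple[int, Vec3]]) -> List[Tuple[int, Vec3]]:
    """Group (ray_id, direction) pairs so similar directions are traced together."""
    return sorted(rays, key=lambda ray: direction_bin(ray[1]))


def shade_deferred(hits: List[Hit], shaders: Dict[str, Callable[[Hit], float]]):
    """Defer shading until all hits are in, then shade one material batch at a time."""
    batches: Dict[str, List[Hit]] = defaultdict(list)
    for hit in hits:
        batches[hit.material].append(hit)
    results: Dict[int, float] = {}
    shading_order: List[str] = []
    for material, batch in batches.items():
        shade = shaders[material]  # e.g. this material's textures loaded once
        for hit in batch:
            results[hit.ray_id] = shade(hit)
            shading_order.append(material)
    return results, shading_order


def material_switches(order: List[str]) -> int:
    """How often shading jumps between materials (a proxy for cache misses)."""
    return sum(1 for a, b in zip(order, order[1:]) if a != b)


rays = [(0, (1.0, 0.0, 0.0)), (1, (-1.0, 0.0, 0.0)), (2, (1.0, 0.0, 0.0))]
print([ray_id for ray_id, _ in sort_rays(rays)])  # [1, 0, 2]

hits = [Hit(i, "skin" if i % 2 else "cloth") for i in range(100)]
shaders = {"skin": lambda h: 0.8, "cloth": lambda h: 0.3}
immediate_order = [h.material for h in hits]  # shading in arrival order
results, deferred_order = shade_deferred(hits, shaders)
print(material_switches(immediate_order), material_switches(deferred_order))  # 99 1
```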

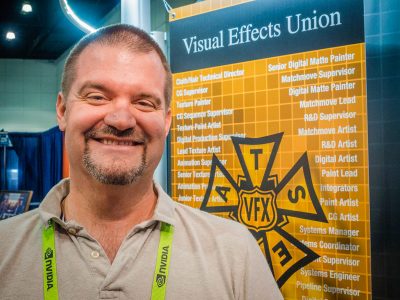

BOF: Union for Visual Effects – A discussion & Info Session

Steve Kaplan from The Animation Guild (Local 839) led the panel along with The Art Directors Guild (Local 800). Also in attendance were Vanessa Holtgrewe (International Representative, IATSE) and, representing Vancouver (Local 891), Dusty Kelly.

The crowd was smaller than at the last Los Angeles panel (2012), which, to be fair, included VFX Soldier and Scott Ross and was much closer to the groundswell of emotion around Life of Pi and the Oscar protests. There also seemed to be more students in attendance, concerned about what they are hearing about the field they are pursuing.

The issues appear unchanged from the 2012 discussion: visual effects is the only major craft on feature films not represented by IATSE, conditions at some facilities include “day rates” and other labor law abuses, pension/retirement needs, health care (US), to name a few.

Kaplan walked through the history of The Animation Guild and how it came about because of conditions inside Disney back when Walt was a formidable force to negotiate with. It came down to “are you willing to walk out”, and they were. Animators at Disney, DreamWorks and many other places today owe their working conditions, pensions and more to that willingness. He stated that if visual effects artists want change, it will likely come down to reaching that point. An audience member asked why that did not happen during the Life of Pi/Oscar protests and the closing days of Rhythm and Hues. Kaplan said that while there was a lot of raw emotion at the time, a lot of people were also losing their jobs, and he has observed that translating raw emotion into action can be difficult. People need to decide that union representation is important enough to them to be willing to walk out. Until we reach that point, change will be difficult.

A student in the audience lamented that at his school there is no focus on the business side, such as what to expect financially when you graduate. fxguide did a story in 2012 on Laguna College of Art and Design (LCAD), where they told us that every student goes through business courses covering topics such as setting up a website and selling to a gallery (for artists working in physical media). The importance they placed on that part of the curriculum seems lacking in some schools. It was mentioned that this is a big part of Florida State’s program.

Overall, the process for seeking union representation remains the same (due to federal labor laws): artists must fill out physical cards and mail them to IATSE. The Animation Guild can be contacted for cards, and it even has an online system to request that a card be mailed out. On Twitter after the session, Kaplan also mentioned he is willing to meet with anyone to discuss issues.

The Academy Color Encoding System (ACES): A Standardized Production Infrastructure for VFX

There were several ACES sessions at SIGGRAPH, and we covered some of the color space issues in our first-day report from DigiPro. This one was moderated by Sebastian Sylwan, CTO and Creative Partner at Felix & Paul Studios and former CTO of Weta Digital, and aimed to cover history, the small vendor perspective, DI, as well as large movie and facility experience.

Ray Feeney, four-time Academy Award winner for Scientific and Engineering Achievement and President and founder of RFX Inc., started with history. ACES has been 10 years in development and was designed to address the loss of underlying standards in the film-to-digital transition. There are 22 companies listed as logo participants, and many more have implemented it. IMDb lists 40+ films today as being finished in ACES. Feeney said that we are now at a tipping point, with studios asking for HDR deliverables to protect for future device support. As visual effects already uses many of the core concepts, such as linear light, and many of the original contributors to the standard were from visual effects, the transition should be easier.

Feeney mentioned that he was part of the original DPX spec design team, and Sylwan joked that if nothing else good comes of ACES, getting rid of DPX as a deliverable will be worth it.

Feeney posed the question: if a VFX facility has a robust in-house color pipeline, why switch to ACES? He related the all too common story of a VFX crew seeing a film in a theater with a crushed, totally different look from the one they had worked on. Sometimes this is due to making a facility’s shots blend in during the DI; sometimes it is a creative decision. ACES should eliminate the first problem and protect the integrity of the work you do.

Marie Fetiveau, R&D engineer and pipeline technical director, Rodeo FX (“Fantastic Four”, “Furious 7”), brought the small vendor perspective. They had two projects going, an older one in Rec. 2020 and a newer one in ACEScg (see our DigiPro article for details). Artists found the two color spaces very similar and comfortable to work with. Plates were ingested as ACES images, then converted to ACEScg with a white balance pass (which saved artists time). Viewing was done through a LUT (in ACEScc). The CDL (Color Decision List from editorial) was only applied at delivery.
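For readers who haven’t met it, the ASC CDL is a deliberately tiny, portable grade: per-channel slope, offset and power, followed by a single saturation control. A sketch of the commonly documented formula, out = clamp(in × slope + offset)^power, then saturation around Rec. 709 luma. The clamp to [0, 1] follows the classic definition and is an assumption here, since some log/HDR pipelines use an unclamped variant.

```python
from typing import Sequence, Tuple

# Rec. 709 luma weights used by the CDL saturation operation.
LUMA_WEIGHTS = (0.2126, 0.7152, 0.0722)


def apply_cdl(rgb: Sequence[float], slope: Sequence[float], offset: Sequence[float],
              power: Sequence[float], saturation: float = 1.0) -> Tuple[float, float, float]:
    """Apply an ASC CDL (slope, offset, power, then saturation) to one RGB value."""
    sop = []
    for value, s, o, p in zip(rgb, slope, offset, power):
        v = min(max(value * s + o, 0.0), 1.0)  # clamp before the power function
        sop.append(v ** p)
    luma = sum(w * c for w, c in zip(LUMA_WEIGHTS, sop))
    r, g, b = (luma + saturation * (c - luma) for c in sop)
    return (r, g, b)


pixel = (0.18, 0.20, 0.25)
print(apply_cdl(pixel, (1.1, 1.0, 0.95), (0.01, 0.0, -0.01), (1.0, 1.0, 1.05), 0.9))
```

Because a CDL is just ten numbers, it travels easily from editorial to VFX to DI, which is why it can be held back and applied only at delivery.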

One issue is that textures are painted in sRGB. Photoshop does not handle float well, so they used 16-bit integer sRGB; this is an area they would like to solve moving forward. Also on the to-do list: OpenColorIO, and naming plates with their color space.
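Painted 16-bit sRGB textures have to be decoded to linear light before they are used alongside ACEScg renders. A minimal sketch of the standard sRGB decoding curve applied to 16-bit integer texel values; converting the result’s primaries into ACEScg would additionally need a 3×3 matrix, which we leave out rather than quote from memory. The function names are our own.

```python
def srgb_to_linear(c: float) -> float:
    """Standard sRGB decoding curve (piecewise: linear toe, then a 2.4 power)."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def texel_16bit_to_linear(code: int) -> float:
    """Convert a 16-bit integer sRGB texel value (0..65535) to linear light."""
    return srgb_to_linear(code / 65535.0)


print(texel_16bit_to_linear(32768))  # mid-scale code value is about 0.21 linear
```

The half-scale code value decoding to roughly a fifth of full linear intensity is why painting in sRGB and rendering in linear must never be mixed without this step.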

Chris Davies, technical director, Skylab (“Chappie”, “Elysium”) represented the DI side of things. The pipeline is ever changing in fact the projects he discussed were about two years old. So future proofing is key. He has used ACES on 10 features, commercials, documentaries and more.

On the Chappie production side they had mixed cameras, with Red Epic as primary shot anamorphic. In post all ingest was exr. using Red to ACES, they were using in house translations on other cameras to match match that. The finish was 4K D-Cinema, 35mm, IMAX, and more and the archive was done as exr files.

Davies showed the non-color-corrected plates cut together in log, then the same sequence in rec709, still ungraded. Next came the sequence with the grade from dailies, which was provided to artists as data so they could turn it on or off. Then came the same sequence with graded plates, where the grade was only applied at the end and was reversible. He called this workflow a “must do these days”, as the grade may change creatively, so the comp may need to be rebalanced to the new color non-destructively. He then showed the comps cut in.
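The idea of a grade that is applied only at the end and can be reversed can be illustrated with a small Python sketch. The talk did not say which grading operator Skylab used; the ASC CDL slope/offset/power model is assumed here only because it is a common editorial and DI interchange format (saturation is omitted). The published CDL formula clamps before the power step, and clamped values cannot be recovered, so this sketch skips the clamp and rejects negative values to keep the round trip exact.

```python
# Sketch of a reversible grade using ASC CDL slope/offset/power (no saturation).
# Assumption: CDL stands in for whatever operator a real DI pipeline uses.
from dataclasses import dataclass
from typing import Tuple

RGB = Tuple[float, float, float]


@dataclass(frozen=True)
class CDL:
    slope: RGB
    offset: RGB
    power: RGB

    def apply(self, rgb: RGB) -> RGB:
        """out = (in * slope + offset) ** power, per channel."""
        out = []
        for c, s, o, p in zip(rgb, self.slope, self.offset, self.power):
            v = c * s + o
            # A clamp here would destroy information, so refuse instead.
            if v < 0:
                raise ValueError("value would be clamped; grade not invertible")
            out.append(v ** p)
        return tuple(out)

    def invert(self, rgb: RGB) -> RGB:
        """Undo the grade in reverse order: power, then offset, then slope.
        Assumes non-negative input and non-zero slopes and powers."""
        return tuple(
            (c ** (1.0 / p) - o) / s
            for c, s, o, p in zip(rgb, self.slope, self.offset, self.power)
        )


# Usage: the comp works on the ungraded plate; the grade is a separate,
# removable step that can be swapped when the creative grade changes.
grade = CDL(slope=(1.1, 1.0, 0.9), offset=(0.01, 0.0, -0.01), power=(1.0, 1.05, 0.95))
plate = (0.18, 0.18, 0.18)
graded = grade.apply(plate)
restored = grade.invert(graded)
```

Keeping the grade as data rather than baking it into the plates is what lets a changed grade be re-applied to the same comp without rework.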

Alex Fry, compositing and color pipeline supervisor (“The Lego Movie”, “The Great Gatsby”), talked about ACES on The Lego Movie, work he drove at Animal Logic. He showed a very informative Lego test image: one side of the screen was sRGB, the other was ACES with an sRGB Output Device Transform (ODT). This showed that exposure changes do not work properly in sRGB without ACES, nor do heavy grades: subtle color differences were quickly destroyed and higher exposures behaved incorrectly.
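Fry's point about exposure can be illustrated with a short Python sketch. One stop of exposure is a doubling of scene-linear light, so it should be applied to linear values; applying the same multiply to display-encoded sRGB values overshoots. The sketch uses the standard sRGB transfer functions (IEC 61966-2-1) rather than the ACES output transforms, and clips to the display range for simplicity; it illustrates the principle and is not a reproduction of Animal Logic's test.

```python
# Sketch: why exposure belongs in linear light.
# Uses the standard sRGB transfer functions, not the ACES RRT/ODT.


def srgb_encode(lin):
    """Linear [0, 1] -> sRGB-encoded [0, 1]."""
    if lin <= 0.0031308:
        return 12.92 * lin
    return 1.055 * lin ** (1 / 2.4) - 0.055


def srgb_decode(enc):
    """sRGB-encoded [0, 1] -> linear [0, 1]."""
    if enc <= 0.04045:
        return enc / 12.92
    return ((enc + 0.055) / 1.055) ** 2.4


def exposure_linear(enc, stops):
    """Decode to linear, scale by 2**stops, clip to display range, re-encode."""
    return srgb_encode(min(srgb_decode(enc) * 2 ** stops, 1.0))


def exposure_on_encoded(enc, stops):
    """The naive approach: scale the encoded value directly."""
    return min(enc * 2 ** stops, 1.0)


# Usage: push an 18% grey up one stop both ways and compare in linear light.
mid_grey = srgb_encode(0.18)
correct = srgb_decode(exposure_linear(mid_grey, 1))
naive = srgb_decode(exposure_on_encoded(mid_grey, 1))
print(correct, naive)
```

For an 18% grey, the linear route lands exactly one stop up (0.36 linear), while doubling the encoded value lands more than two stops up. In an ACES pipeline, values above 1.0 would also be kept rather than clipped until the output transform.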

Their reasons for choosing ACES: they knew the show would have bright colors and hot highlight pings, and ACES provided an ultra-wide gamut and HDR, was a standard, and was RGB. The Lego Movie was done in P3 ACES because ACEScg was not yet finalized. They gave Technicolor ACES EXR files for the DI.

Michael Whipple, executive director, post production technologies, Sony Pictures Entertainment, was up next. He got involved with ACES very early because of Sony Electronics' development of the F65 in 2011: Sony wanted to use ACES for this new high-end camera. This led to “a whole lot of LUTs and education”. ACES 1.0 built in a lot of what they had previously been doing themselves: it is a SMPTE standard, broadly supported by manufacturers, and includes Look Modification Transforms. He also found that it saves money, with fewer compositing issues showing up in the DI. He has finished more than ten features in ACES and is already using older ACES archives to create new HDR content. He also stressed the importance of the archive, saying it comes up a lot, as studios are requiring higher-quality archives for future display technology.

President Obama

First thing on the last day of the show, the Smithsonian and USC ICT presented the work done on location at the White House to capture a 3D portrait of President Obama, the highest-resolution digital model of any head of state, and then to produce a statue for the Smithsonian's permanent collection.

The Lightstage is too large to transport and, besides, it is bolted to the concrete floor at ICT. As it happened, the USC ICT team had been working on a portable version of the Lightstage called the FlashMob. The Presidential rig (a modified Light Stage X) was a hybrid of the two systems, with 50 lights (the FlashMob has 24) and 8 Canon 1Ds, plus 2 GoPros (for behind-the-scenes footage, not reconstruction).

Two years ago, Adam Metallo and Vincent Rossi created a vision document titled “Next Generation 3D Portraiture: A Digital Life Mask of President Obama.” Eighteen months later, in the State Dining Room of the White House, the Smithsonian team showed the first 3D portrait of a United States President.

At the talk it was explained that the inspirations for the project were the Smithsonian’s Lincoln life masks. Created in 1860 and 1865, they capture President Lincoln one year before he took office, and two months before his assassination. The marked changes in the President’s facial features speak volumes about the toll of the Civil War.

For the 1865 life mask, created in the White House, scholars believe that President Lincoln’s face and beard were greased before applying a thin coat of plaster paste. The President breathed through straws in his nostrils while the plaster dried.

During a facial scan, the cameras captured three sets of photographs, each set taken in under a second under controlled structured lighting conditions; with each of the 8 cameras taking 10 photographs per set, this gave a total of 3 sets of 80 photographs.

Once the face scanning was done, it was combined with a handheld scan of the President’s skull and body. While it is estimated that the plaster took 15 minutes to dry on President Lincoln’s face, it took the Smithsonian team only 7 minutes from the time President Obama entered the room to finish the data capture.

The Autodesk team put together the final 3D model by fitting the different scans into one unified 3D space, and normalized the color information from each scan to prevent visible color seams in the final model. A digital sculptor added a plinth to complete the bust.

Within 72 hours, the process resulted in a master file of 15 million triangles, which yielded print-ready files for an Obama bust and a life mask, as well as photorealistic 3D models for digital viewing. There were no special eye scans or photography, so ICT artist Jay Busch engineered the President’s eyes from the diffuse pass for the color video version of the President.

Two days later, the team transferred the files for 3D printing to 3D Systems. For the 1:1 bust, in a process called Selective Laser Sintering (SLS), a laser melted nylon powder into a highly accurate and durable print, which was sandblasted and finished. Given the size of the print, printing took 42 hours, after which the print cooled down for a further 24 hours.

It was decided to leave the bust white and uncolored to match the style of the Lincoln masks. But the Smithsonian commented that the data is there, and in the future, perhaps many years from now when new, as-yet-unimagined 3D printing becomes available, a future generation may make new, fully colored statues.

The conference ended with another round of great production sessions on Ant-Man and Avengers: Age of Ultron… and the Maxon party! SIGGRAPH 2016 will be in Anaheim, once again in California. (Time to go home now – bye).