The Meg, directed by Jon Turteltaub and starring Jason Statham, has had a bigger impact than anyone anticipated. In its first week, The Meg became one of the most successful shark films of all time with its global box office opening.

The film centres on deep-sea rescue diver Jonas Taylor (Jason Statham), a visionary Chinese oceanographer (Winston Chao), and his daughter Suyin (Li Bingbing). In the film, a deep-sea submersible, part of an international undersea observation project, has been attacked by a massive creature previously thought to be extinct. It now lies disabled at the bottom of the deepest trench in the Pacific, with its crew trapped inside. With time running out, Jonas Taylor has to save the crew from this unstoppable threat: a prehistoric 75-foot-long shark known as the Megalodon. Taylor, teamed with Suyin, must confront the Meg, risking both their lives as they come face to face with the greatest and largest predator of all time.

Sony Imageworks

In early 2016, Visual Effects Producer Steve Garrad contacted Sue Rowe at Sony Pictures Imageworks (SPI) about working on the project, specifically the third act of the film. The two knew each other from working together at Cinesite. “He said to me, ‘I’ve got this great movie. Here’s the thing: I want you on the third act, and it’s going to be really challenging’”, recalls Rowe. “What was interesting about the planning was that they planned to turn over to us 300, maybe 400 shots, but the final sequence itself is going to be 200 shots. What they wanted was us to go in and get them material, quick and dirty, that they could use in the edit”.

Garrad suggested this approach because he knew the director, Jon Turteltaub, would work best if he could immediately edit the material that was shot, even with only rough effects in place. As the third act was naturally going to be an effects-rich, strong action sequence, they believed this was key to making the film work. “So with that in mind, I approached it slightly differently than usual. I got the team rendering things in (Maya) Viewport 2.0. Two years ago, it was kind of newish still and a bit buggy, so it took a little bit of convincing. But what was great about this way of working is that I could put fog into the image and we could put spotlights in and yet render immediately. It was perfect for underwater”, Rowe explained. As the SPI team could provide depth cueing and key lighting, they moved very quickly from this pre-viz/post-viz to final visual effects shots. As the production moved into the final stages, SPI had worked on roughly 400 shots and ended up finishing 280 shots for the final film. “I’m pretty proud of how we worked, because we were able to help them pin down the work and then nuance the shots.” By working this way in the early stages, Rowe avoided going through all the departments, such as the Lighting team or Comp, and worked with just Animation and Layout. This allowed SPI to work exceptionally quickly.

One of the first shots the team tackled was the giant Meg breaking the water. At the post-viz stage they just had rough bubbles for the surface froth, but this shot proved so complex it was also the last one they finished.

The size of the Meg posed some real challenges. In real underwater footage, natural water causes light and detail to drop off after about 10 to 15 ft from the camera, “and that’s a big problem when your key ‘actress’ is 75 foot long”, commented Rowe. “You just can’t make all the shots be close-ups, so we did have to cheat, which is, you know, artistic control!” she joked. SPI also found that the Meg only looked really huge when given context or something else in shot for comparison. The Meg, swimming alone on screen, did not read as being 75 feet long. “We learned pretty quickly that we needed to give it some orientation. So I would cheat into shot the water surface behind the shark or add in fine particulate”, Rowe explained. She also decided from the beginning to make the footage look dirty and gritty, in an almost documentary style. For example, “if we were underwater filming, I decided, ‘let’s have the camera always have some drift in it, as if there’s real water resistance and currents’”.
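The visibility problem Rowe describes can be put in rough numbers. The sketch below is only an illustration, not SPI's method: it assumes a simple exponential fog falloff (the Beer-Lambert form commonly used for depth cueing) and tunes it, as an assumption, so that only 5% of an object's contrast survives at 15 ft. The function names and the 5% figure are hypothetical.

```python
import math

def visibility(distance_ft, cutoff_ft=15.0, residual=0.05):
    """Fraction of contrast surviving at a given distance.

    Assumes exponential falloff, with the coefficient chosen so that
    `residual` (an assumed 5%) remains at `cutoff_ft` (15 ft, the upper
    end of the range quoted in the article).
    """
    sigma = -math.log(residual) / cutoff_ft
    return math.exp(-sigma * distance_ft)

def depth_cue(color, fog_color, distance_ft):
    """Blend an RGB colour towards the fog (water) colour with distance."""
    t = visibility(distance_ft)
    return tuple(t * c + (1.0 - t) * f for c, f in zip(color, fog_color))

if __name__ == "__main__":
    # Compare the camera position, the quoted falloff range, and the
    # far end of a 75 ft creature seen nose-on.
    for d in (0, 5, 15, 75):
        print(f"{d:>3} ft: {visibility(d):.6f}")
```

Under these assumptions, the tail of a 75 ft shark swimming towards the camera would be effectively invisible, which is why a physically faithful falloff had to be relaxed for the wider shots.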

For the cat-and-mouse chase in the Glider mini submersibles, the actors were filmed on motion bases with 5 degrees of freedom. “They could move in many different ways. It was quite physical. When Jason (Statham) was throwing the Glider around, it was great, and he really enjoyed that part of filming it,” she recalls. “That gave us a little bit of physicality that was really useful, because when your object is static all you can do is move the camera backwards and forwards. We ended up doing a lot of sweeping moves, which was good”. The team rebuilt the bay from principal photography and always made sure that the action was going in the correct direction based on the real bay and the story points.

One aspect that Rowe found interesting and amusing was the bubbles that come out of the back of the glider. “My first day on set, director Jon Turteltaub said to me, ‘So what about cavitation? This film’s all about cavitation, you know the cavitation is all up to you?’ And I replied, ‘Oh yeah, no, you’re right. It is all about cavitation’, and then I went straight off set and looked up ‘cavitation’ on Wikipedia. Of course, it’s the term that’s used for when the propellers turn at such a speed that they actually boil the water and propel someone forward”. In reality, physically accurate cavitation would be a VFX problem, since real cavitation ejects straight out of the back of the glider “and it is kind of boring”, she explains. Right towards the end of the production, in the last month, “Jon changed his mind on what the cavitation was going to look like and we had to re-simulate everything. We changed the gravity and the water… But he was right, and it looked much better. It now fills the frame with this sort of kinetic energy”.

Lighting

Sue Rowe was on the project for 18 months. The film was shot on the Arri Alexa Mini with Zeiss Master Anamorphic lenses by DOP Tom Stern (Sully). The shoot included 2 months of on-set work. SPI rendered their VFX work in SPI’s version of Arnold to match the plate photography. SPI’s solution, however, was often not to reproduce a perfect match to the underwater photography but just to be sympathetic to it. Rowe spent 3 weeks at sea off the coast of Auckland shooting plates and elements, 3 weeks she did not particularly enjoy (due to seasickness). “On the last day at sea, they were like evacuating the boat and they said, ‘Okay, who wants to go on the next one back to land?’ and I thought, ‘Right, let’s put an end to this thinly veiled love of sailing!’ I enjoy it, but it was my last day. I was keen to get off the boat and get to dry land. But actually, it was a really good shoot”, she laughingly recalls.

SPI did have to cheat light sources, but they aimed to do it fairly subtly. A good example is adding underwater shafts of light, or “God rays”, in the background to give a shot depth. In general terms they purposely kept the frames fairly dark, as Rowe wanted to build up the frame in a multi-planar way, especially with lights in the background. “We did the usual gags really… whenever the shark came close we added a little backlight to it, and a little bit of rim light catching the teeth, lit a bit from underneath. ‘Monster lighting’, I used to call it, ’cause, you know, that’s what the script called for!”

The Meg

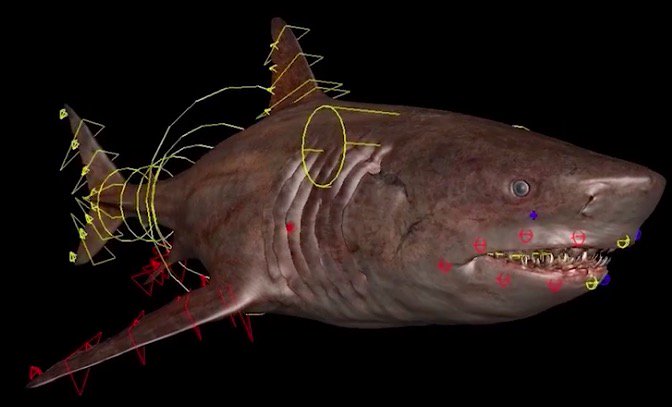

The actual Meg asset was originally made by ScanlineVFX, using Ziva Dynamics software for the creature. Scanline shared the asset with SPI, which re-rigged it for its own pipeline. “Scanline were involved from the very beginning. Scanline got the shark designed and modelled,” Rowe explains. “We shared assets, that was really great. We completely reworked it and put our own muscle system into it. We (also) used Ziva. The muscle system comes from building the creature anatomically correctly, and then you get these great muscle shapes and creature animation. I’ve used it before on Maze Runner. We were able to control how much of a ‘muscle ripple’ the shark had. In the end we exaggerated that for effect”. The shots in the third act are short, normally just two or three seconds. “They don’t really linger very much… so you will see in a couple of our shots where the Meg is almost like a thoroughbred racehorse, with a little ripple down the back. That was probably not correct, but it just made ‘her’ feel right”, Rowe explains.

The team at Sony also exaggerated the shark’s gills. Interestingly, a real shark’s gills vent water. The shark could not move quickly through the water with its mouth open if water could not pass through its body. This means that, in some footage of a real shark, it is possible to see through the open mouth and directly out through the gills. Naturally the SPI team modelled this, but Rowe recalls “looking at a turntable test that we’d done of the shark, and I was like, ‘oh no, we got an intersection problem here!’ But the modellers explained, ‘Oh no, that’s correct, you can see right through a shark’”.

“She had to look like she was driven and purposeful, and that is where we had fun”

They actually gave the Meg a bit more of a lip curl for expression. The team also added a slight nose curl and, controversially (at least internally), Rowe also asked for a controllable eyebrow. “And the team immediately said, ‘Oh, please don’t anthropomorphise the shark’. But I told them, ‘trust me, just trust me’. And so we added a tiny eyebrow control, just to take the perfect circular aspect out of the eye, and it changed everything”. Animation supervisor Craig McPherson and Rowe worked carefully together to subtly animate the head, sometimes turning it only 15 degrees or adjusting the nose slightly off centre to get a scary, deliberate performance. “Those little hints and tiny things that we did, which were all very subtle, just made the difference. That’s where innovation comes in”. Rowe wanted to make sure the Meg did not look like she was just floating in the water but rather travelling powerfully with intent. The team added tail flicks and other things to keep the massive beast looking driven and purposeful. But making the Meg always look large was difficult.

To add the impression of size, the team added schools of fish and anything else they could to allow the audience to read scale. “I mean, oh my God, we tried everything! Fish in the foreground, fish in the background, adding kelp, except we discovered that even the kelp in that area could be 200 feet high, which did not help!” Rowe explained. The trick the SPI team kept coming back to was their multi-plane idea. “I would put things in the foreground. She (the Meg) would be in the mid distance, with smaller versions of the rocks fading off behind her. Scale was a big issue”. For SPI, the number one solution, the one that seemed to help add energy and scale, was adding bubbles to the shot. The team named them streamers. Even when they might be unmotivated, a stream of small bubbles from a fin edge or her nose added a sense of scale, intensity and movement relative to the water. Rowe recalls that she “would get the bubbles coming out of the gills or nose and streaming down the body. It didn’t matter why… and suddenly the energy came and it looked frenetic and it looked like a proper underwater fight. Those small bubbles down the length of the body, past the gills, down past the fins and to the tail, it just showed speed and it showed scale”.
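The scale problem has a simple geometric root, which the following sketch illustrates. It is not taken from the production: it just applies the standard angular-size formula to show that, without reference objects, a 75 ft shark far away fills the same portion of the frame as a 15 ft shark close up. The distances and the 15 ft comparison shark are hypothetical values chosen for the example.

```python
import math

def angular_size_deg(length_ft, distance_ft):
    """Angle (in degrees) subtended by an object seen side-on."""
    return math.degrees(2.0 * math.atan(length_ft / (2.0 * distance_ft)))

# Hypothetical comparison: a 75 ft Meg at 150 ft versus a
# 15 ft shark at 30 ft. Both have the same size-to-distance ratio.
meg = angular_size_deg(75, 150)
ordinary_shark = angular_size_deg(15, 30)

if __name__ == "__main__":
    print(f"Meg:            {meg:.2f} degrees")
    print(f"Ordinary shark: {ordinary_shark:.2f} degrees")
```

Because the two angles are identical, the image alone cannot tell the audience which animal it is looking at. Foreground elements, fish, particulate and bubble streamers of familiar size are what break the tie.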

ScanlineVFX

ScanlineVFX did a lot of the VFX work in the first part of the film. They are known for their brilliant water simulation work and were thus a natural choice for this film. ScanlineVFX also used Ziva to make the shark’s muscles ripple and bulge as it swims, and to make the fat beneath its skin react to the pressure of the water as it splashes at the surface.

The Ziva workflow involves AI (machine learning) and the generation of training data for it. ScanlineVFX relied on Intel Xeon Scalable processors for this work, which drove the Ziva Physics Engine.

Additionally, ScanlineVFX used Intel Xeon processors to render the shots for the film. Stephan Trojansky, president and VFX supervisor at Scanline, commented, “To create ‘The Meg,’ we needed a massive amount of performance in our computer system. Years ago, you would have needed a huge render farm and a large crew for a very small amount of footage. Today, we can use 2,500 Intel Xeon processors with almost 100,000 cores that are used to compute all of the needs of the movie. This enables fast iterations and the ability to present multiple options to the director, which is critical in making the best possible visual effects”.