Vladimir Koylazov, or Vlado to millions of dedicated V-Ray users, has just been awarded the Academy Plaque by the Sci-Tech Committee of the Academy Awards for “the original concept, design and implementation of V-Ray from Chaos Group”.

In 1997 Vlado was awarded another prize, for a high school project entitled “Thing”, which he had been coding for roughly two years. The video below is the original executable, compiled with Borland Pascal and running in DOSBox.

The man who gave him that first award in Bulgaria in 1997 was Peter Mitev, a fellow university student; the two of them went on to found Chaos Group. Vlado started writing V-Ray at the end of 2000 to handle rendering for their first product, the Phoenix effects plugin. When he started writing it, he did not imagine it would become a standalone product, let alone anywhere near as successful or important as V-Ray is today.

“I didn’t start out to write a renderer – we just needed basic ray tracer functionality…but it became an interesting topic so eventually we decided to do a full-blown ray tracer and we turned it into a commercial product,” he explained.

Vlado showed an early version of V-Ray to some people at SIGGRAPH 2001, and “they really liked it, and that is when we decided to make it into a product.” The first official release was in March 2002, when the entire team was just Vlado and Peter Mitev. Today there are about 50 to 60 V-Ray developers in a company of over 200 people.

As we stated in our 2015 interview with Vlado, “Chaos Group’s V-Ray is one of the most important renderers in the world; it has grown from being popular for architecture visualization to a staple tool in VFX, automotive design and increasingly, feature film. The renderer is extensive and focused on production.”

V-Ray is known the world over in VFX, but what may not be as well known is that Vlado credits much of his success to his high school computer teacher!

Back when he was in school in the 1990s, there was no formal training in computing or programming, but Vlado was lucky enough to have a special class once a week, outside school hours, with Ms. Katalina Grigorova.

“We had classes once a week, I think about 3 hours. We learned programming (in Turbo Pascal at that time), algorithms and data structures. We also participated in various informatics competitions. I never got beyond the nationals myself, but some of the other students went on to participate in the International Olympiad in Informatics (IOI). Preparing for these competitions was actually a major part of what we did,” he explains.

Vlado has since contacted his old high school teacher to let her know he turned out all right, and that the company that was his first job out of school has grown into an international software company. She, in turn, has gone on to become a professor at the University of Ruse in Vlado’s home town. “In any case, her ethics and attitude towards her work made quite an impression on me, and I’d like to think that some of it stuck with me.”

V-Ray

V-Ray’s efficient approach to ray tracing and global illumination, along with its support for a wide variety of workflows, was instrumental in the adoption of fully ray-traced rendering for motion pictures.

Unlike other Academy Awards, Scientific and Technical Awards aren’t granted for work developed in the past year. Instead, they take into account a technology’s overall contribution to the process of making movies. This year alone, V-Ray has been seen in films such as Doctor Strange, Captain America: Civil War, and Rogue One.

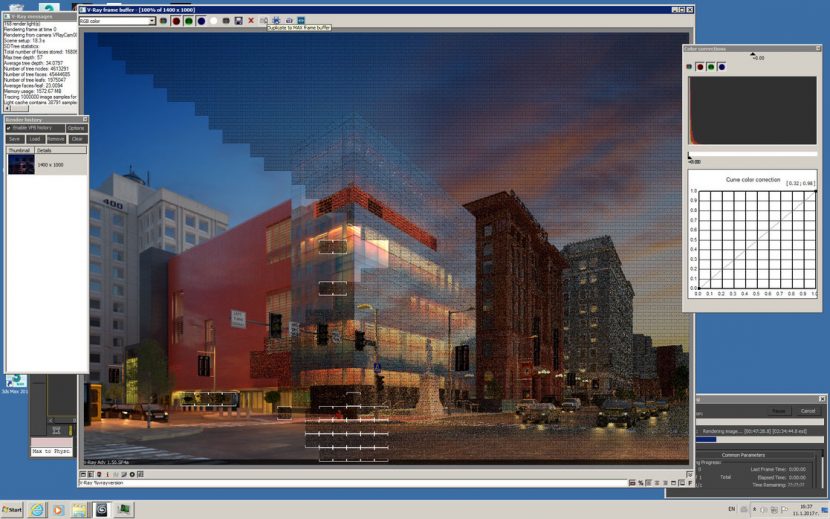

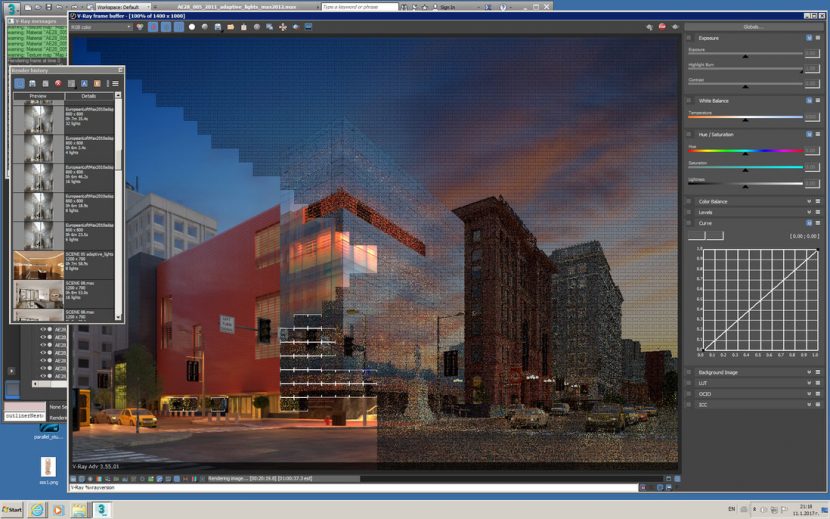

V-Ray v3.4 – v3.5 and beyond

V-Ray is currently shipping as version 3.4, and version 3.5 will roll out over the next month (see sample renders below). fxguide took this opportunity to explore some of the current issues in rendering, and in V-Ray, with Vlado.

The general approach V-Ray takes is to provide realistic, plausible lighting and rendering of scenes, with the flexibility to control any aspect a senior TD might need. One of the central aspects of ray tracing is converging to a noise-free image. Ray tracing is a sampling problem: it belongs to the broad class of Monte Carlo methods, computational algorithms that rely on repeated random sampling to obtain results. Broadly speaking, if you render longer, you get a cleaner image: more samples mean less error, and therefore less noise. How the samples are randomised, and how the various aspects of sampling are controlled, is central to a ray tracer’s speed and quality.
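To make the “render longer, get a cleaner image” relationship concrete, here is a minimal Python sketch (not V-Ray code) that estimates a toy pixel value by averaging random samples and measures the error over many trials. The integrand `u * u` is a hypothetical stand-in for the light arriving through a pixel. Under standard Monte Carlo theory the error falls roughly as 1/√N, so quadrupling the sample count should roughly halve the noise.

```python
import math
import random

def estimate_pixel(num_samples, rng):
    """Average num_samples random evaluations of a toy integrand over [0, 1)."""
    # Hypothetical stand-in for radiance through one pixel; exact mean is 1/3.
    total = 0.0
    for _ in range(num_samples):
        u = rng.random()
        total += u * u
    return total / num_samples

def rms_error(num_samples, trials=2000, seed=1):
    """Root-mean-square error of the estimate against the exact value 1/3."""
    rng = random.Random(seed)
    exact = 1.0 / 3.0
    squared = 0.0
    for _ in range(trials):
        err = estimate_pixel(num_samples, rng) - exact
        squared += err * err
    return math.sqrt(squared / trials)

if __name__ == "__main__":
    # Each step quadruples the sample count.
    for n in (4, 16, 64, 256):
        print(n, round(rms_error(n), 4))
```

The printed errors should shrink by about half at each step. This square-root law is why the last few percent of noise is so expensive to remove by brute force.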

In the last few years there has been a growing trend in the wider rendering community to render only partway to a clean image and then use a clever form of noise reduction to produce a satisfactory result. This noise reduction is very different from an image-processing algorithm applied to an arbitrary input image. The ray tracer knows almost everything about the scene, so it can hand the denoiser a unique set of data, allowing it to be very focused and make well-informed denoising decisions.
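The idea that the renderer can hand the denoiser extra data can be illustrated with a generic cross-bilateral filter. The minimal sketch below is an assumption for illustration, not V-Ray’s denoiser: it smooths a noisy 1D scanline but only averages pixels whose noise-free auxiliary feature (hypothetically an albedo or depth AOV) is similar, so real edges in the scene survive.

```python
import math

def feature_guided_denoise(color, feature, radius=2, sigma_feature=0.1):
    """Smooth a noisy 1D scanline, averaging only across pixels with similar features.

    color:   noisy rendered values, one per pixel
    feature: hypothetical noise-free auxiliary buffer (e.g. albedo or depth)
             supplied by the renderer; large jumps in it mark genuine edges
    """
    n = len(color)
    out = []
    for i in range(n):
        weight_sum = 0.0
        value_sum = 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            # Pixels whose feature differs strongly get a near-zero weight,
            # so averaging never crosses a real edge in the scene.
            d = feature[i] - feature[j]
            w = math.exp(-(d * d) / (2.0 * sigma_feature ** 2))
            weight_sum += w
            value_sum += w * color[j]
        out.append(value_sum / weight_sum)
    return out

if __name__ == "__main__":
    # A dark surface next to a bright one, each with a little render noise.
    color = [0.25, 0.15, 0.22, 0.18, 0.20, 0.85, 0.75, 0.82, 0.78, 0.80]
    feature = [0.0] * 5 + [1.0] * 5
    print([round(v, 3) for v in feature_guided_denoise(color, feature)])
```

A plain image-space blur would smear the bright side into the dark side at the boundary; the feature buffer is what lets the filter keep that edge sharp while still averaging away noise.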

Noise

Vlado points out that denoising has been used for some time, so from his point of view the current trend is not new. “I know V-Ray users who were doing denoising ten years ago... but it seems only lately that it has been taken up for film work, and that goes hand in hand with the shift to ray tracing in general.”

When producing seamless feature film VFX renders, many facilities have looked at denoising because it takes a long time to clean up the last few percent of noise in an image, particularly in a difficult lighting situation. “Especially in VFX, people can’t really tell if the image has been cleaned up by denoising, so it works really well... and I guess that is why it has been so successful.” Chaos added a denoiser in V-Ray 3.4 last year; it can run as a post-process step within the render or as a standalone tool. V-Ray also has a Nuke plug-in that lets artists use the AOVs (Arbitrary Output Variables) to control denoising as part of the composite. This allows people to denoise not only the standard image but also a deep composite render.

Vlado would prefer not to use denoising from a purist point of view, although he fully supports the many real-world applications where it has a major benefit. “It is a bit like GI caching... at some point GI caching was the only way you could get global illumination in a practical timeframe. But it had its own issues. GI caching could cause flickering or loss of detail. With denoising it is something similar, as it is a post-process, and it is biased.” From this purist point of view it is an approximation, as it “messes up the image in some way” to reduce noise, “so if you are a fan of pure brute force ray tracing then denoising is something you would frown on, but it can be a practical solution to getting renders out the door.” If it were up to Vlado, users would not need it at all, and much of the company’s R&D is focused on making images as noise-free as possible, as quickly as possible, so that denoising is not needed. Which raises the question: how easy is it to produce low-noise images in V-Ray, and where is the company focusing its efforts?

Today Vlado believes the industry has largely accepted that ray tracing is the way forward. V-Ray is a ray tracing engine that uses global illumination algorithms including path tracing, photon mapping and directly computed global illumination, but everything pivots on sampling.

Sampling drives the two image qualities that matter most to an artist, noise and anti-aliasing, and the one thing that matters most to a producer: render time.

With the release of 3.4, the default settings of the renderer were changed to reflect the team’s best guess at the most effective general-purpose render settings. Some power users will always believe they can do better than the defaults, while some implementations of V-Ray hide the controls completely, as in the version of V-Ray in Adobe’s new Project Felix. But for most users there are now just a few controls worth tweaking.

For V-Ray’s adaptive image sampling, the Colour Threshold is one of the most critical settings for noise. It is one of the global controls for the whole renderer, and Vlado finds that one rarely needs to adjust the individual light or material subdivs per object. V-Ray uses global DMC, or Deterministic Monte Carlo, sampling. In simple terms, one needs to sample with a good probability distribution. Samples are meant to be random, but random does not simply mean evenly distributed (a common misconception). Monte Carlo sampling is a standard approach to getting a mathematically well-behaved distribution. The difference between standard MC (Monte Carlo) sampling and V-Ray’s DMC is that standard MC uses pseudo-random numbers that differ for every evaluation (so re-rendering an image will always produce slightly different noise), while DMC uses a predefined set of samples, optimized to reduce noise, which allows an image to be re-rendered identically.
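The MC/DMC distinction can be sketched as follows. V-Ray’s actual predefined sample set is not described here, so this Python example swaps in the classic van der Corput low-discrepancy sequence as a stand-in for a fixed, optimized sample set; the `shade` function is a hypothetical toy integrand. The point it illustrates is reproducibility: the pseudo-random version returns a slightly different pixel value on every call, while the deterministic version returns exactly the same value every time.

```python
import random

def van_der_corput(i, base=2):
    """Radical inverse of integer i: a classic low-discrepancy sequence in [0, 1)."""
    result, denom = 0.0, 1.0
    while i > 0:
        denom *= base
        i, digit = divmod(i, base)
        result += digit / denom
    return result

def shade(u):
    """Hypothetical toy integrand standing in for a pixel's radiance (exact mean 1/3)."""
    return u * u

def render_pixel_mc(num_samples):
    """Standard MC: fresh pseudo-random numbers on every evaluation."""
    rng = random.Random()  # seeded from the OS or clock, so each call differs
    return sum(shade(rng.random()) for _ in range(num_samples)) / num_samples

def render_pixel_deterministic(num_samples):
    """Deterministic sampling: the same predefined sample set on every evaluation."""
    samples = [van_der_corput(i) for i in range(num_samples)]
    return sum(shade(u) for u in samples) / num_samples

if __name__ == "__main__":
    print(render_pixel_mc(64), render_pixel_mc(64))  # two slightly different values
    print(render_pixel_deterministic(64), render_pixel_deterministic(64))  # identical
```

Because the deterministic samples are fixed in advance, re-rendering a frame reproduces the same noise pattern exactly, which is what makes identical re-renders possible.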

V-Ray has a single unified framework that determines how many and which exact samples are to be taken for a particular value, depending on the context in which that value is required. Vlado’s driving design aim is that V-Ray should be improved internally to always deliver the best results. Increasingly this means not relying on a user’s detailed and extensive understanding of sub-menus. V-Ray is already smart in how it specifies the sampling of images and this will only continue to improve.

“I think there has been a general shift in the last couple of years to hide away the general complexity from the users, so this means hiding settings that people don’t need to worry about. V-Ray has always been very intelligent in the way that it does its sampling, and we have tried to make it even smarter about that.” In the latest version of the product there is very little need to go and “tinker with the settings,” Vlado comments. “We have tried to make it as automatic as possible, so there are now very few controls that you need to adjust to make great pictures: usually it is just the maximum number of samples per pixel and the noise threshold, and V-Ray will take care of the rest, even for advanced users.”
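Adaptive sampling driven by a noise threshold and a per-pixel sample cap can be sketched like this. The loop below is a generic illustration, not V-Ray’s algorithm: `sample_fn` is a hypothetical callback that returns one radiance sample, and “noise” is assumed here to be the standard error of the pixel mean, tracked with Welford’s online variance update. Flat pixels stop early; noisy pixels keep sampling until they reach the threshold or hit `max_samples`.

```python
import math
import random

def adaptive_sample_pixel(sample_fn, noise_threshold=0.01, min_samples=8,
                          max_samples=256, rng=None):
    """Sample one pixel until its estimated noise drops below a threshold.

    sample_fn:       hypothetical callback taking an RNG and returning one sample
    noise_threshold: target standard error of the pixel mean (an assumed metric)
    max_samples:     hard cap, analogous to a 'max samples per pixel' setting
    Returns (mean, samples_taken).
    """
    rng = rng or random.Random(0)
    n, mean, m2 = 0, 0.0, 0.0
    while n < max_samples:
        x = sample_fn(rng)
        # Welford's online update of the running mean and squared deviations.
        n += 1
        delta = x - mean
        mean += delta / n
        m2 += delta * (x - mean)
        # Need at least two samples for a variance estimate.
        if n >= max(min_samples, 2):
            std_error = math.sqrt(m2 / (n - 1) / n)
            if std_error < noise_threshold:
                break
    return mean, n

if __name__ == "__main__":
    print(adaptive_sample_pixel(lambda r: 0.5))         # flat pixel stops early
    print(adaptive_sample_pixel(lambda r: r.random()))  # noisy pixel hits the cap
```

Lowering `noise_threshold` or raising `max_samples` trades render time for a cleaner result, mirroring the two controls Vlado highlights; the sampler itself decides where the extra samples go.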

Extending the notion of the denoiser being ‘smart’: since V-Ray knows so much about the scene it is rendering, is there more to be done with AI or other proactive techniques, so that V-Ray could make suggestions to the user? “If there is any such smarter algorithm, then it should be built into V-Ray and should probably be totally invisible to the user, and the only thing that will change is how long it takes to render. If we did that, my design approach would be to make it completely transparent to the user, and the images would just render faster for the same quality,” explains Vlado. “If an AI engine works out some better sampling setting… distinguishing between different types of scenes, then it should just apply those without asking the user.”

Vlado does not think the possibilities for smart denoising are exhausted. “I don’t think this type of approach is being utilized to its maximum potential yet, so that is definitely something that we are exploring.” The jury is still out on whether specialised smart denoising with Nuke and V-Ray will become standard. “I think we are just starting to work this all out,” he comments. “We are just now seeing the denoiser used for film and VFX. I think the various film users are just now getting a handle on how much to use the denoiser in their pipelines. I know a lot of them are playing with it and getting good denoised images out, but I think we are still a little way off knowing if there is a consensus on whether people want to use denoising or not.”

Physically Plausible Lighting and Shading Challenges

If you have never had to light a scene to a director’s exact requirements, it may seem that making everything in the computer behave just as it does in the real world is the logical end game of any 3D system.

But as every DOP knows, hours can be spent on set hiding and fixing problems, such as unwanted reflections, caused by real lights: problems a 3D system can solve in an instant by breaking the laws of the real world.

Against this is the notion that real lighting is nearly always better and more believable.

V-Ray’s approach is to acknowledge that odd reflections or shadows will always need to be controlled, but that users should not need to introduce hacks and cheats to get realistic results. Chaos’s aim is that an artist can start with a system that easily delivers realism, without hacks, and that then allows control to deliver the director’s vision. “I think there is a certain amount of ‘fake’ that people will want and this will never go away, but they do not want that to be the general behaviour of the renderer. It should by default be as real as possible.”

Materials are central to realism, and Chaos Group offers specialist services for them, such as its VRScans material scanning and library service, which has real-world materials scanned by specialists and provides accurate V-Ray models of their light response. One material that cannot be sent off for scanning is an actor’s face, or more specifically their skin.

We asked Vlado how far he felt V-Ray had come in producing photoreal human skin, and whether reliably delivering digital humans is a rendering problem. “In terms of rendering, we are already at a stage where we can get a perfectly photoreal human. All the recent advances in skin and hair shading are already enough to get a digital human. The problem from here on is going to be animation. It has always been my view that even with a not very advanced shader, if you get the animation right, it will be believable.”

Caustics

If the Holy Grail of 3D is digital humans, and that is solved for rendering, then what is the greatest remaining challenge for rendering? Is there any area in which Vlado would like to see a new paper come out at, say, EGSR (the Eurographics Symposium on Rendering) this year? “It is a hard question. We are almost there in terms of shading for pretty much everything, starting from skin, hair, eyes and all of that. Everything can be made more efficient, but in terms of quality I think it is there, and worst case there are brute force methods,” he explains. “There is one problem that no one has really solved efficiently yet, which is the problem of caustics. It’s a general problem, and there are some solutions such as bidirectional path tracing and vertex connection and merging (see fxguide’s previous Vlado interview), but if you want to solve this you need to switch the renderer to a different mode than what you normally use.” Today most ray tracing solutions are built on unidirectional path tracing, as in production it has proven to be a reliable and efficient approach. Moving to a bidirectional path tracer is a major and fundamental change. “It is not like you can just switch the caustics, you have to switch the entire approach,” he adds. There are partial solutions, such as Weta’s 2015 paper (Manifold Next Event Estimation, Computer Graphics Forum, July 2015), but there is no good general solution for this yet.

Geometric Complexity

In terms of cutting-edge research (at a conference such as EGSR), Vlado believes there are always exciting advances, “and never a dull moment”, but overall he feels render research is “from now on, just incremental improvements”. One area the V-Ray team is researching itself is the complex issue of level-of-detail rendering. “Scenes are getting more and more complex all the time, and RAM has increased a lot, yet I still feel there should be a more efficient way to handle geometric complexity.” At the moment, systems resort to brute force, trying to cram as much geometry into memory as possible. “We have been doing various types of research in this area, some more successful, some not, but it is still not a ‘solved problem’. I would really like to have some type of solution here, especially for connected meshes, one that could possibly have view-dependent tessellation, but then for trees and grass there is no really good level of detail rendering, and there should be!”
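View-dependent tessellation, which Vlado mentions as a possible direction, generally means choosing how finely to subdivide geometry based on how large it appears on screen. The following Python sketch shows one hypothetical rule for that, assuming a pinhole camera with a vertical field of view: keep halving edges until each covers roughly one pixel. The function names and the one-pixel target are illustrative assumptions, not a description of V-Ray’s research.

```python
import math

def projected_edge_pixels(edge_length, distance, fov_y_radians, image_height):
    """Approximate on-screen length, in pixels, of an edge at a given camera distance."""
    # Height of the view frustum at this distance, in world units.
    frustum_height = 2.0 * distance * math.tan(fov_y_radians / 2.0)
    return edge_length / frustum_height * image_height

def subdivision_level(edge_length, distance, fov_y_radians, image_height,
                      target_pixels=1.0, max_level=12):
    """Hypothetical rule: subdivide until each edge covers about target_pixels."""
    pixels = projected_edge_pixels(edge_length, distance, fov_y_radians, image_height)
    if pixels <= target_pixels:
        return 0  # already smaller than the target on screen; no extra detail needed
    # Each subdivision level halves the edge length, hence the base-2 logarithm.
    level = math.ceil(math.log2(pixels / target_pixels))
    return min(level, max_level)  # cap memory use for objects right at the lens

if __name__ == "__main__":
    for d in (1.0, 100.0, 1000.0):
        print(d, subdivision_level(1.0, d, math.pi / 2, 1080))
```

Near geometry gets many levels of subdivision while distant geometry gets none, which is the memory saving a view-dependent scheme aims for; the harder open problem Vlado describes is doing something comparable for disconnected geometry such as trees and grass.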

Vlado will collect his award at the Scientific and Technical Awards Presentation on Saturday, February 11, 2017 at the Beverly Wilshire in Beverly Hills. Portions of the Scientific and Technical Awards Presentation will be included in the Oscar telecast, to be held on Sunday, February 26, 2017.

While the Academy has singled out Vlado individually, he is quick to point out that V-Ray is a team effort, so in addition to his high school computer science teacher, Ms. Grigorova, he is also incredibly appreciative of his fellow developers and their own teachers and educators.

Front cover art credit: © Toni Bratincevic

EGSR 2017

Date: 19–21 June 2017 · Helsinki, Finland

Core conference topics include:

- Global illumination

- Real-time and offline rendering

- Acquisition, modeling, and manipulation of light transport & appearance

- Realistic, non-photorealistic, 2D, and 3D image processing & synthesis

- Mathematical techniques for image synthesis

- Computational photography/optics/displays

- Image synthesis for augmented or virtual reality

- Software and hardware systems/architectures for rendering

- Audio/sound rendering

The EGSR 2017 organizers are currently soliciting contributions to two primary tracks:

- the “Experimental Ideas & Implementations” (EI & I) track for papers that will be presented at the conference only, and

- the “CGF” track for papers that will also appear in the Computer Graphics Forum journal, in addition to being presented at the conference. Authors will have the choice of submitting their work to be considered for the CGF track, the EI & I track, or both tracks.

The CGF submission track continues the tradition of disseminating the state-of-the-art scientific results in rendering. Accepted papers in this track will be published in an issue of Computer Graphics Forum.

The objective of the “Experimental Ideas & Implementations” conference-papers track is to solicit submissions that introduce exciting new ideas that have not yet been validated to the high academic standard of the CGF track, or that describe interesting implementation issues for known algorithms in industry-scale uses. For EI & I submissions, the requirements on comparisons to related work, citations, and quality of results are relaxed. Instead, the review process gives more weight to criteria such as whether the idea is stimulating and interesting, or whether it would spark discussion and give valuable insights to the rendering community.

For both tracks, “we are looking for work that shapes the future of rendering and related fields. In particular, we encourage submissions in the related topics of appearance modeling, virtual/augmented reality, computational displays, fabrication, and hardware architectures,” commented Matthias Zwicker and Pedro Sander, the EGSR 2017 papers chairs.
