Framestore used it on Gravity, Weta Digital used it exclusively for tracking The Hobbit in stereo, and it has been used on Prometheus, Man Of Steel, The Wolverine, Iron Man 3 and The Hunger Games. It is the ‘go to’ tracker for high-end complex camera work. It is 3DEqualizer from Science.D.Visions.

Based in Germany, Science.D.Visions’ software is used around the world: in Sydney, Animal Logic used 3DEqualizer for tracking The Great Gatsby and to allow us to Walk With Dinosaurs in 3D. In London, MPC relied on it for Skyfall, World War Z, Man Of Steel, The Lone Ranger, The Secret Life of Walter Mitty and 47 Ronin. At Framestore, 3DEqualizer was used on this year’s Oscar winner Gravity for its sync/mocap features. Nearby at Double Negative it was used on Rush, Man of Steel, Thor and Hunger Games: Catching Fire. Cinesite used it for Iron Man 3 and World War Z. And this is only in recent times; since it was first released, 3DEqualizer has been used globally on such Oscar-winning films as Gladiator, The Lord of the Rings trilogy, the Harry Potter franchise, King Kong, Avatar, The Avengers and The Amazing Spider-Man.

Year after year 3DEqualizer has been at the center of complex object and camera tracking pipelines, and Science.D.Visions has developed an incredible reputation for working hand in hand with the world’s top facilities, sometimes by moving their developers and coders in-house with their clients.

For years this high-end package was limited to advanced pipelines, but with new pricing and a new, more open policy it is now being used on an ever-increasing range of productions. We sat down with Rolf Schneider, co-founder of Science.D.Visions, to discuss the issues facing tracking.

fxg: What is the biggest challenge facing camera and object tracking today?

Schneider: There are various problem scenarios. Here are some examples…

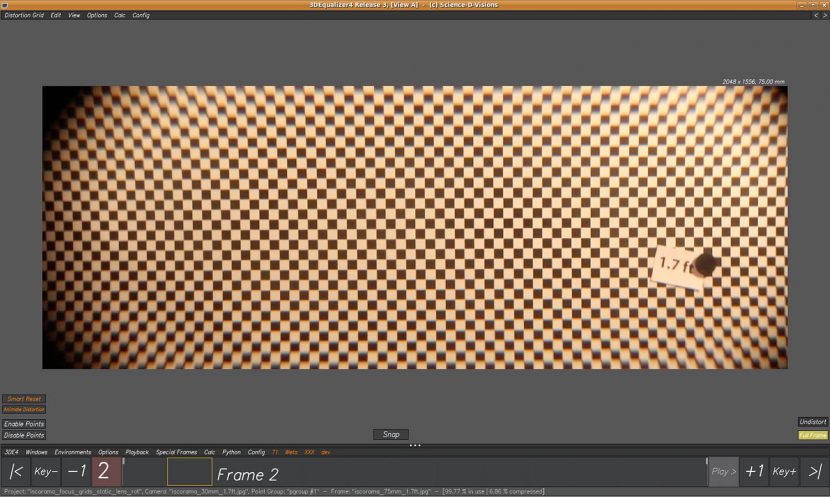

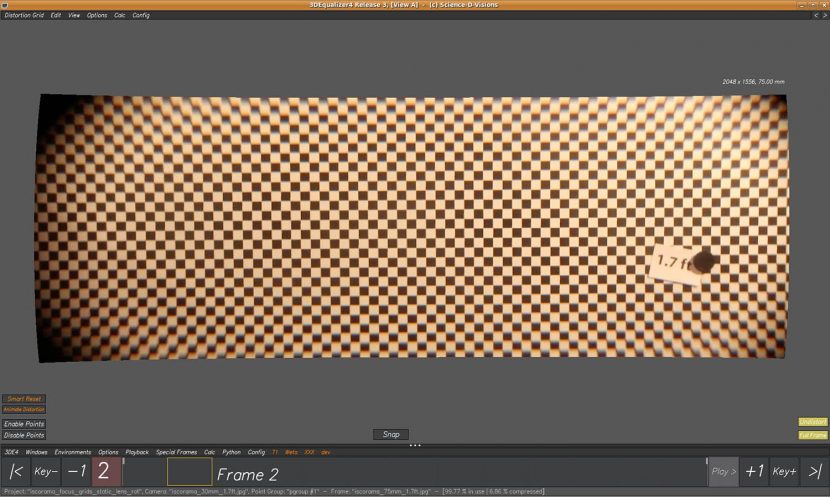

1. Heavily distorted anamorphic shots, containing zooming or focus pull (and thus dynamic distortion), where no lens gridshots are available. In such a case, there are basically 2 different techniques which can lead to success:

A. It is possible to animate distortion manually as pointed out in our tutorial “Anamorphic Distortion and Lens Breathing” – there is a specific script for this task.

B. If proper survey is available, single “key” frames can be picked from the shot. The distortion parameters for each of those frames are then optimized with the help of 3DE’s parameter adjustment window and stored within its dynamic distortion curves (there is a specific script for that, too).

2. Low parallax shots where no survey is available. These types of problem shots usually cause the resulting 3D point clouds to become “distorted” (e.g. compressed or expanded along Z, or even turned inside out), which then causes the resulting camera/object paths to become “distorted” as well. There are various techniques to address these kinds of problems, such as adding more points, “guessing” approximate survey, applying a nodal camera constraint, increasing/reducing the weighting of certain points, etc.

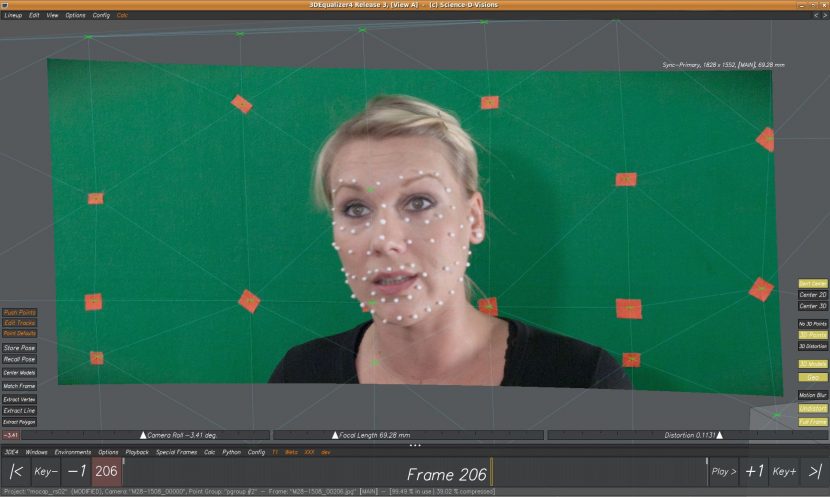

3. Shots with (almost) no visible features to track (such as green screens without any additional markers attached). Again, there are various techniques to tackle those scenarios, such as “enhancing” the green screen (through image controls) in order to track some “noisy shades of green”, or using “edit 2D tracks”/“deform track” scripts to copy already existing tracking curves and “massaging” them into place, etc.

There are various classes of problem shots, and there probably always will be. We think it is necessary to provide the user with enough flexible functionality so that he can react to each situation individually. This can go as far as writing a custom script for specific tasks (e.g. Wolfgang Niedermeier’s “Deform Track” script).

fxg: Can you discuss the complexity and scope of the 3D polygon geometry that can be derived from tracked scenes?

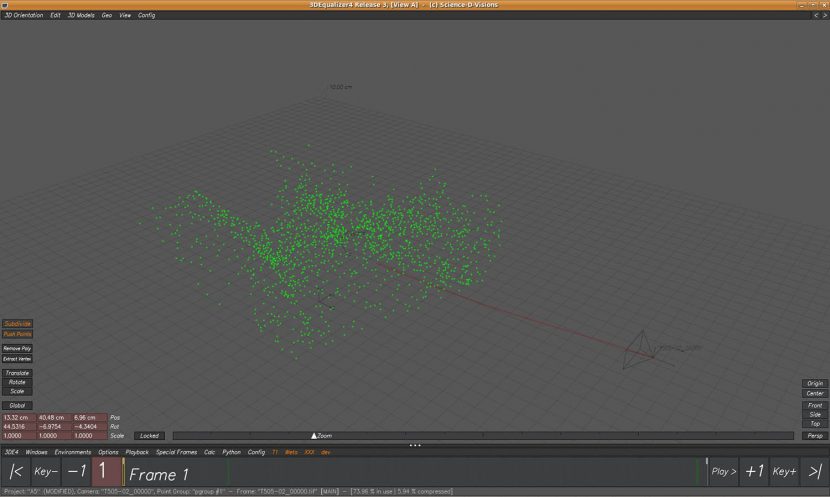

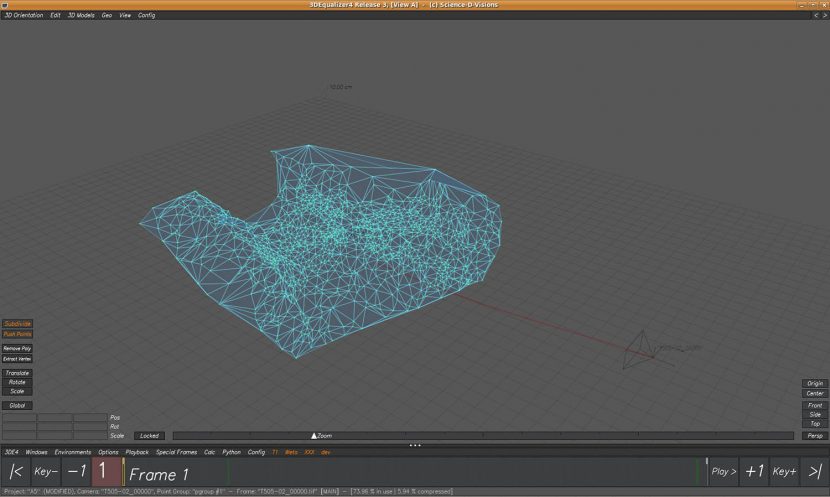

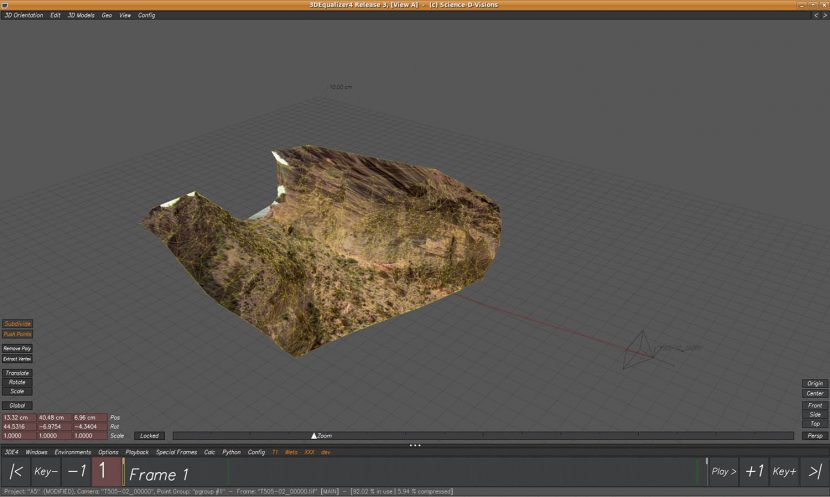

Schneider: Last year we spent a great deal of time developing a separate function for creating 3D polygonal models out of point clouds, which turned out to be quite non-trivial (if one intends to build a flexible “all-purpose” solution)!

This function is able to generate complex shapes out of nearly every possible set of points. Usually, these point clouds are created by 3DE’s auto-tracking engine, but they can also be created otherwise (imported, manually tracked, or “modeled” by hand).

In connection with R3’s new feature to project undistorted footage onto the surface of 3D models in realtime, it is a powerful feature for checking/tweaking a project’s tracking quality on a per-pixel level. In addition, our clients working in the stereo conversion business benefit from this a great deal as well.

fxg: Given you handle a range of source camera footage – how are rolling shutter and other camera artifacts affecting successful tracks today?

Schneider: I’d say that more or less all possible artifacts introduced through lens distortion or through the beam splitter (of stereo rigs) are very well understood today, and 3DE4 can handle them flawlessly.

Rolling shutter however, is a nasty one. The “effect” itself is, mathematically, not very complex, but can seriously damage a frame up to a point where needed image content simply isn’t recorded and thus lost forever!

For 3D tracking software this means that all 2D tracks have to be corrected before the actual 3D calculation process can be started. If the respective camera move has been quite smooth, this correction will usually be successful. However, if there is a noisy (handheld) camera move, the camera will often change its direction of motion during the recording period of a frame. In these situations every possible 2D-tracking-rolling-shutter-correction (interpolation) method will fail!
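As an illustration of the kind of interpolation-based 2D correction described here, the following is a minimal sketch, not 3DE’s actual method. It assumes a sensor that reads rows top to bottom at constant speed, and the function name and the `readout_fraction` parameter (the share of the frame interval taken by one full readout) are hypothetical.

```python
import numpy as np

def correct_rolling_shutter(track_xy, image_height, readout_fraction):
    """Resample a 2D track so every frame refers to one common instant.

    track_xy: (N, 2) pixel positions of one tracked feature, one row per
              consecutive frame (assumption: no gaps in the track).
    readout_fraction: assumed fraction of the frame interval needed to read
              the sensor from the top row to the bottom row (0..1).
    """
    track_xy = np.asarray(track_xy, dtype=float)
    frames = np.arange(len(track_xy), dtype=float)

    # A feature on row y of frame k was really sampled later than the top row.
    sample_t = frames + readout_fraction * track_xy[:, 1] / image_height

    # Reference instant per frame: the middle of the readout.
    target_t = frames + 0.5 * readout_fraction

    # Linear interpolation between neighbouring samples. np.interp does not
    # extrapolate, so the first and last frames may stay uncorrected.
    x = np.interp(target_t, sample_t, track_xy[:, 0])
    y = np.interp(target_t, sample_t, track_xy[:, 1])
    return np.column_stack([x, y])
```

The sketch only works when the motion between two samples is close to linear. A handheld move that reverses direction within a single frame violates that assumption, which is the failure case described above.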

We actually learned this the hard way last year, when we tried to develop a motion capturing tutorial. We used a Canon 5D MKII with the Magic Lantern raw recording hack to shoot an anamorphic, handheld camera move as the main sequence. Although the image quality was excellent in comparison to the MKII’s regular mpeg recording mode, the image was wobbling all over the place. This, in connection with critical synchronized witness camera calculation (mocap), caused too many problems in the end, and we decided to postpone the project until we have access to a rolling shutter free camera.

As a by-product we developed R3’s realtime rolling shutter compensation display, which seems to be quite useful.

We’d recommend every client who has the choice to stay away from rolling shutter suffering cameras if serious visual effects must be done – it will definitely save a lot of money! If there is no way out of a rolling shutter situation, we usually recommend our clients to render CGI content with a rolling shutter “effect” because this will guarantee best possible “matching” results…

fxg: Can you discuss the work you have done in the area of complex lens artifacts – namely breathing and anamorphic distortions as they affect tracks?

Schneider: The development of new features is very often the direct result of comprehensive discussions with our users. For the case of R3’s new anamorphic lens distortion model we got very useful input from Michael Karp who inspired us to introduce “squeeze-x” & “-y” parameters. These parameters actually make it relatively easy to model those “classic” lens breathing “effects”. However, the real breakthrough in R3’s new anamorphic distortion model clearly is located in its new “lens rotation” parameter. I had some very useful discussions about this with Wolfgang Niedermeier when I was working at Weta Digital for a few weeks back in fall 2012.

By the end of 2010 we introduced a few new distortion models, including a specific 18-parameter anamorphic one. At this point we thought a higher degree distortion model (more parameters) would finally solve the problem – but it didn’t. Some of the grids we collected over the years worked better, others did not! We even learned that some proprietary distortion pipelines exist with five times the number of parameters, which (obviously) don’t work either…

It turned out that in some lenses the elements responsible for “squeezing” the image 2:1 can be slightly rotated – either because it’s a separate lens element (anamorphic projector) and thus difficult to mount precisely, or due to poor design in general. By introducing the above mentioned “lens rotation” parameter, which simulates rotated anamorphic squeeze elements, we were suddenly able to solve all of our grids without major problems – even with a lower degree distortion model (fewer regular parameters)!
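To see why a rotated squeeze element cannot be absorbed by axis-aligned parameters alone, here is a small geometric sketch. It is an assumed, simplified linear model (rotate, squeeze, rotate back) and not the actual R3 distortion model; all names are hypothetical.

```python
import numpy as np

def rotated_squeeze_matrix(squeeze_x, squeeze_y, rotation_deg):
    """2x2 matrix for a squeeze applied along axes rotated by rotation_deg."""
    phi = np.radians(rotation_deg)
    c, s = np.cos(phi), np.sin(phi)
    rot = np.array([[c, -s], [s, c]])
    # Rotate into the lens element's frame, squeeze, rotate back.
    return rot @ np.diag([squeeze_x, squeeze_y]) @ rot.T

def apply_squeeze(points_xy, squeeze_x, squeeze_y, rotation_deg):
    """Apply the rotated squeeze to (N, 2) points centred on the optical axis."""
    m = rotated_squeeze_matrix(squeeze_x, squeeze_y, rotation_deg)
    return np.asarray(points_xy, dtype=float) @ m.T
```

With zero rotation the matrix is purely diagonal. Any non-zero rotation adds symmetric off-diagonal terms of size `(squeeze_x - squeeze_y) * sin(phi) * cos(phi)`, a shear that no pair of independent x and y squeeze values can reproduce.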

For correcting lens breathing artifacts properly it is necessary to shoot a series of grids – each one taken with the exact same distance between camera and grid, but a different focus setting. These grids are then used to build a LUT for dynamic distortion driven by focus distance. The subtle pixel aspect changes which cause the “lens breathing effect” are “baked” into the above mentioned “squeeze” parameters. For actual focus pull shots this LUT is then used to optimize a specific focus curve using R3’s new dynamic (focus) curve calculation routine. As a quick-and-dirty alternative it is also possible to animate both “squeeze” and a few “regular” distortion parameters manually with the help of a script – in case no grid shots are available.
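The idea of a focus-driven LUT can be sketched as a table of per-grid parameter solves that is interpolated by focus distance. This is only an illustration under assumptions: the parameter names (`squeeze_x`, `k2`) and the linear interpolation are hypothetical and do not describe how 3DE stores or evaluates its curves.

```python
import numpy as np

def build_distortion_lut(grid_solves):
    """Build a lookup table from per-grid solves.

    grid_solves: list of (focus_distance, {param_name: value}) pairs, one per
                 grid shot. All entries are assumed to share the same keys.
    """
    ordered = sorted(grid_solves, key=lambda item: item[0])
    focus = np.array([f for f, _ in ordered], dtype=float)
    names = sorted(ordered[0][1])
    table = {n: np.array([p[n] for _, p in ordered], dtype=float) for n in names}
    return focus, table

def lookup_distortion(lut, focus_distance):
    """Linearly interpolate every parameter at the given focus distance.

    Outside the measured range the nearest grid's values are held.
    """
    focus, table = lut
    return {n: float(np.interp(focus_distance, focus, v)) for n, v in table.items()}
```

For a focus pull, evaluating `lookup_distortion` once per frame along the focus curve would yield animated distortion values, with the breathing carried by the squeeze entries.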

fxg: Can you discuss tracking complex shots with high distortion such as fisheye or wide GoPro footage?

Schneider: Fisheye shots surprisingly are relatively easy to solve since this type of distortion is quite simply structured. However, what even many industry professionals don’t know is the fact that distortion of a fisheye lens is directly related to focal length (“equisolid angle” projection)! That means, the real “fisheye distortion” kick comes when reducing focal length significantly – that’s more or less the whole magic behind it…
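The link between focal length and fisheye “strength” can be checked numerically with the standard textbook mappings: equisolid angle, r = 2f·sin(θ/2), against rectilinear, r = f·tan(θ). The sketch below assumes an ideal equisolid lens; the function names and the choice of the frame edge as the comparison point are illustrative only.

```python
import math

def equisolid_radius(focal_mm, theta_rad):
    """Image radius of a ray at angle theta for an ideal equisolid fisheye."""
    return 2.0 * focal_mm * math.sin(theta_rad / 2.0)

def rectilinear_radius(focal_mm, theta_rad):
    """Image radius of the same ray for an ideal distortion-free lens."""
    return focal_mm * math.tan(theta_rad)

def edge_compression(focal_mm, half_sensor_mm):
    """Ratio of fisheye to rectilinear radius for the ray hitting the frame edge.

    1.0 means no visible fisheye effect; smaller values mean stronger
    compression toward the centre.
    """
    s = half_sensor_mm / (2.0 * focal_mm)
    if not 0.0 < s < math.sin(math.pi / 4.0):
        raise ValueError("edge ray must be less than 90 degrees off axis")
    theta = 2.0 * math.asin(s)
    return equisolid_radius(focal_mm, theta) / rectilinear_radius(focal_mm, theta)
```

For a fixed sensor size, calling `edge_compression` with a shorter focal length returns a smaller ratio: the mapping itself is unchanged, but the frame edge sees a much larger angle, which is where the fisheye look comes from.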

fxg: How much is LIDAR playing a role in accurate camera tracking?

Schneider: Well, having access to precise survey (regardless of whether it is LIDAR, total station data or reference frames) is always a good idea for doing serious VFX. It simply means that calculation can be done faster, fewer points can be used for tracking, low parallax shots pose fewer problems, etc. Working with dense LIDAR point clouds or converted polygonal models usually allows the user to line up survey data faster to the respective background plates because much more detail is shown. In addition, more detail means that more areas of the frame are covered with precise survey, which means more (survey) features can be assigned and 2D tracked. So, properly prepared LIDAR scan data provides more flexibility to the user!

fxg: How much has stereo cinematography affected camera tracking? Are there any good opportunities in witness camera / stills additional input … tips in this area?

Schneider: Stereoscopic cinematography created a whole new ecosystem of problems for 3D tracking that had to be solved! Here are just a few:

1. Scale. In monoscopic projects scale doesn’t matter, which means that the resulting point cloud of an object track can either be 10 or 1000 units away from the camera – the difference being invisible to the viewer and thus can be faked. In stereo, the scale of object tracks must be optimized through software very precisely, which often conflicts with survey data and/or interocular distance specifications – resulting in higher deviation!
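The scale ambiguity can be demonstrated with a bare pinhole projection. This sketch assumes an unrotated camera looking down +Z and ignores distortion; the function name is hypothetical.

```python
import numpy as np

def project(points_xyz, camera_position, focal_length):
    """Pinhole projection for an unrotated camera looking down the +Z axis."""
    rel = np.asarray(points_xyz, dtype=float) - np.asarray(camera_position, dtype=float)
    # Perspective divide: image position depends only on the ratios x/z and y/z.
    return focal_length * rel[:, :2] / rel[:, 2:3]
```

Scaling both the point cloud and the camera position by the same factor leaves every projected 2D position unchanged, so a single eye cannot tell 10 units from 1000. Once a second camera sits at a fixed interocular offset that is not scaled along with the scene, its projections do change, which is why stereo pins the scale down.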

2. Beam splitters. Usually stereo rigs are built in a way that one eye looks into, the other through a beam splitter (mirror) from behind. This introduces (extra) distortion – differently for each eye. On top of that comes the need for variable convergence and interocular distance, which means the position/distance between cameras and beam splitter may change (sometimes throughout the shot), which means distortion may change as well!

So, distortion is critical for stereo tracking and must be carefully addressed. In the past, some production companies thought it would be a good idea to take gridshots of their stereo rigs – without beam splitter attached. Well, as pointed out above it’s not. In fact, those kinds of gridshots were more or less useless!

In order to address these specific distortion artifacts we experimented with various distortion models and in the end came up with one which is now able to mimic decentering of lens elements (unsymmetrical distortion towards the corners of the frame), as well as slight cylindrical distortion (introduced with R3).

3. Dynamic interocular distance. As pointed out above, stereo rigs need variable convergence and interocular distance. Dynamic convergence is computed automatically by 3DE’s specific stereo constraint more or less as a by-product since each eye’s rotational channels have to be calculated/optimized separately anyway.

However, calculating dynamic interocular distance (distance between left and right eye) can be tricky because it turned out that its relation to deviation is often quite weak. Some stereo rigs record (more or less) precise metadata which can be imported, but scale and offset often aren’t compatible with on-set survey.

To be able to calculate dynamic interocular distance curves from scratch we had to develop a new dynamic curve calculation infrastructure for R3. This infrastructure is unfortunately very computationally intensive because it optimizes deviation for a shot as a whole (and not on a frame-by-frame basis like traditional methods). The resulting dynamic parameter curves generally are of much better quality, though!

4. Quality issues. As pointed out above, when working monoscopic there is some room to fake certain things. For instance it doesn’t matter much if a 3D camera is 1 or 2 pixels off in comparison to the background – as long as this offset stays the same throughout the entire shot, of course. For stereoscopic tracking there are other rules. If the left eye of a stereo track is off by 1 or 2 pixels (which would be ok for a mono project) then it often happens that the right eye is off by the same amount as well – but in a different screen direction! That means, when toggling quickly between left and right eye, CG would jump back and forth very badly and in the worst case appear at the wrong distance from the camera with respect to the background.
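A rough feel for how opposite pixel offsets in the two eyes shift apparent depth comes from the textbook relation Z = f·b/d for an idealised parallel stereo pair. This is only a sketch under that assumption (real rigs are usually converged), with disparity taken as left minus right screen position and all names hypothetical.

```python
def depth_from_disparity(focal_px, interocular, disparity_px):
    """Depth of a point seen by an idealised parallel stereo pair."""
    return focal_px * interocular / disparity_px

def depth_error_from_offsets(focal_px, interocular, true_depth,
                             left_err_px, right_err_px):
    """Depth shift caused by horizontal placement errors in each eye.

    Disparity is assumed to be x_left - x_right, so errors in the same screen
    direction cancel while errors in opposite directions add up.
    """
    true_disparity = focal_px * interocular / true_depth
    observed = true_disparity + (left_err_px - right_err_px)
    return depth_from_disparity(focal_px, interocular, observed) - true_depth
```

With equal errors in the same direction the result is zero, matching the mono case where a constant offset goes unnoticed. With one pixel of error in opposite directions the disparity changes by two pixels, and the element lands at a visibly different depth.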

In order to overcome these problems, it is necessary to work overly precisely. First, the 2D tracking must be of very high quality and each tracking point must be compatible between left and right eye (exactly the same feature must be tracked). 3DE provides some very useful scripts for these tasks. Secondly, all additional project parameters must be set up really precisely. Even if assuming that external measurements like survey and stereo rig metadata are correct and in the right scale to each other, a lot of parameters have to be optimized. Due to the fact that beam splitters introduce some extra amount of different distortion (see above), it is necessary to set up a separate lens object for each eye – thus immediately doubling the number of lens parameters (focal length, distortion). In addition, there are some stereo constraint specific parameters, such as interocular distance and vertical/depth shift!

This means that users often come into a situation where they have to optimize 5-10 parameters simultaneously for critical shots to work. For this purpose 3DE4’s parameter adjustment window provides a comprehensive set of tools, but still this process can take a long time depending on a shot’s number of frames and tracking points!

Stereoscopic 3D tracking is much more labor intensive in comparison to regular monoscopic work. It’s hard to say how much exactly, but I guess at least a factor of 2 must be taken into account!

fxg: Can you outline the three versions of 3DEqualizer that are available today?

Schneider: The current three editions of 3DE4 are completely based on each other. That means – for instance when comparing R1 to R3 about 90% of the GUI looks and feels the same. Under the hood, the situation is a bit different – I’d say that we have replaced about 30-40% of its calculation and tracking routines during the last 2 years.

R2 lies somewhere between the above numbers…

In terms of new features the good news is that the basic user interface structure of 3DE4 hasn’t changed at all. In fact, we recently released a new beginner tutorial which was designed to work for all three editions – surprisingly there are only a very low number of locations where we had to pop up a message, saying that this or that feature wasn’t available in R1 and/or R2. I personally think that changing the user interface of a product – just for the sake of changing *something* – is not in the interest of the user!

If someone is familiar with the Foundation Editions of 3DE4, then bringing him/her up to speed with the Enterprise Edition is just a matter of watching a few of our tutorial videos, and can be done more or less in a few hours (if at all)…

fxg: How has the user base grown? Is it still primarily feature film high end work or has the user base widened? Should the new pricing be seen as reflecting a desire to widen your user base?

Schneider: Our new pricing structure which we started to introduce about a year ago has been quite successfully adopted by our clients, although we still “only” concentrate on the high end with the development of new features for 3DEqualizer (and always will). With the recent price drop of R1 to 499 Euro and R2 to 1999 Euro we continue that path.

The site licenses we were able to install at some major clients really accelerated things for us, because hundreds of freelancers are forced to work with 3DE every day just at that handful of companies alone.

These freelancers carry their knowledge to other, sometimes much smaller, companies, an effect which we have often experienced in recent years. Our updated pricing structure now easily allows new clients to grow with their installation of 3DE, simply because R1, R2 and R3 are very similar and compatible with each other.

3DE4’s alleged “low end” foundation edition (R1) in truth is a very powerful 3D tracking software which has been best-of-class for all of those major clients until 2011 – in our eyes a great deal for students who want to enter the VFX industry or small shops who only need 3D tracking a few times per year. Once their business is growing R1 can be easily upgraded to R2/R3 and grow with them…

Amazing software, i started using it last week after seeing the AD on this site and wow.. i’ve no idea how it hasnt been in my life. thank you!

3DE is an awesome bit of kit, BUT no amount of technology is going to help when onset VFX sups don’t bother putting in markers, shoot in low light with DOF and no lens info…. I await the day where camera tracking is done real time in camera by default.