For many, the advent of volumetric capture poses an interesting technical challenge, but it also offers a wide range of creative opportunities. Today there are trade-offs between the technical and the creative, but the area is evolving rapidly. fxguide sat down with the team that recently pioneered volumetric capture for the Coldplay + BTS My Universe promo to discuss not just the practical lessons they learned but where they see the technology heading.

Ingenuity Studios coordinated the visual effects work of five VFX studios (itself, AMGI, BUF, Rodeo FX, and Territory) from inception to delivery of the wildly creative My Universe music video for Coldplay + BTS. The music video was directed by Dave Meyers and produced by Nathan Sherrer of Freenjoy. An entire team worked to plan, design, and deliver cutting-edge visuals and motion graphics to drive the music video’s future-facing narrative and celebrate the love of music in a galaxy far, far away…

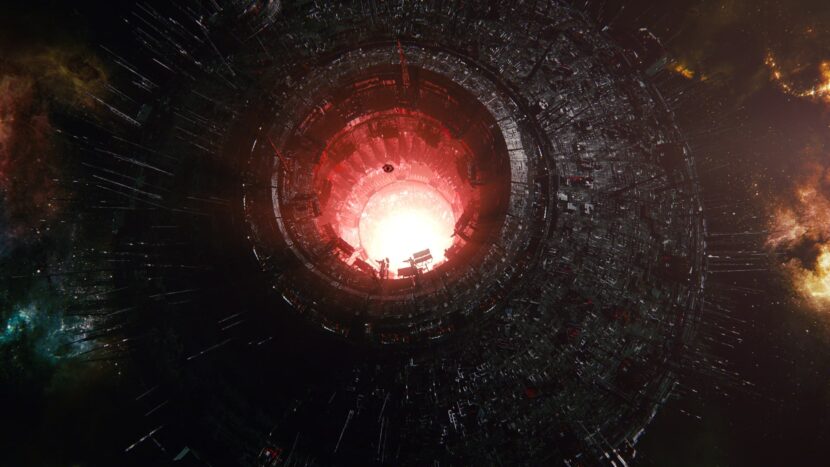

In My Universe, the groups are on separate planets. They seek out an illegal way to communicate and jam together with the help of a renowned renegade of the stars (DJ LAfrique), who transmits a joint frequency between the worlds. Work on the project spanned approximately two months. Ingenuity’s workload was heavy on CGI environments as well as on coordinating multiple studios, and Ingenuity completed 210 VFX shots for the video.

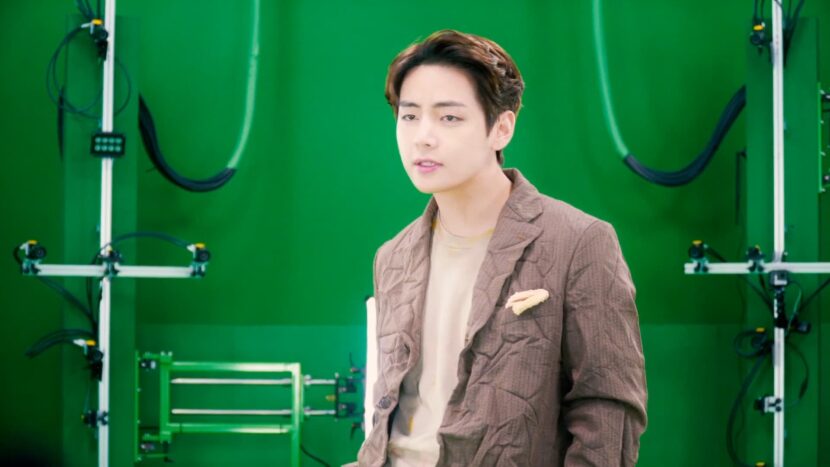

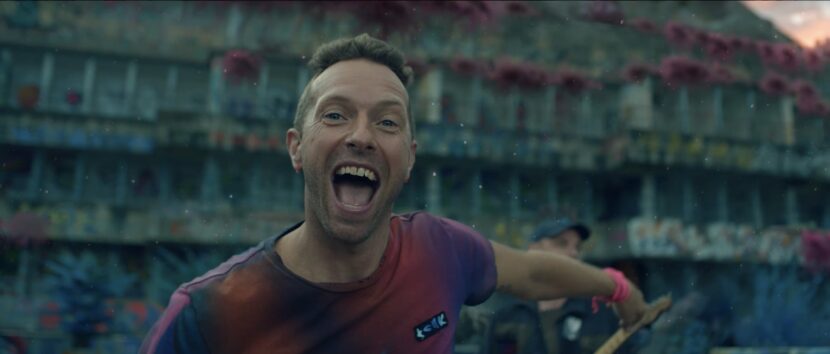

VFX supervision for the whole project was provided by David Lebensfeld and Grant Miller, both partners at Ingenuity Studios. The material was shot in Seoul, Spain, and London. “BTS was shot on green screen in Korea. Coldplay was shot on location in Spain on two separate locations, and the alien band members were all actors, also shot on location,” comments Grant. “Then we obviously heavily augmented and modified the backgrounds for each of those.” BTS, whose members are Jin (진), Suga (슈가), J-Hope (제이홉), RM, Jimin, V (뷔), and Jungkook (정국), is the best-selling artist in South Korean history. My Universe was written by both bands and is sung in both English and Korean. While Coldplay did manage to travel to Korea during songwriting, the various bands and actors were filmed separately for the clip.

VOLUMETRIC CASE STUDY

The volumetric capture was based on a mobile 360-degree rig with 108 cameras, which captured a point cloud of data that was valid from any angle. AMGI handled most of the volumetric capture data from Dimension Studios’ Polymotion Stage. Coldplay’s guitar and bass were captured in-camera, while the drums were tracked with MoCap, then modelled and recreated in post-production. In the Polymotion Stage (which is partnered with Mark Roberts Motion Control), 96 cameras are positioned around the capture volume facing inwards, and 10 above pointing down. With multi-directional microphones, the stage can also capture spatial audio or reference tracks to be sent to sound design.

Matchmoving elements appropriately

The volumetric capture allowed the bands, which were shot at different times, with different cameras, and in different locations, to be matchmoved into a single location, which ultimately became the Supersolis planet, explains David. “For example, Coldplay got volumetrically matchmoved into that location, so when you’re moving around the band or the Coldplay characters as holograms, they move appropriately.” For this to work, each band member had to be captured separately, as the volume was only large enough for one musician at a time.

Flexibility

The team found that while handling the volumetric capture data required a lot of work, the approach did provide great flexibility. “We even found after the fact on our first pass, what we thought was an obvious placement of holograms, the director said, no, no, no, they need to be turned 180 degrees opposite where they need to be placed this way, or let’s just shoot some stuff and we’ll figure out where it goes later. And so it did offer the flexibility. I think it was maybe more work than anyone really appreciates, but it did give us infinite flexibility to rotate the characters.”
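The following Python sketch gives a rough illustration of why re-placing a hologram is cheap once the data exists. It applies a rigid transform, a rotation about the vertical axis plus a translation, to one frame of captured points. It assumes Y is up and that each frame is a plain list of (x, y, z) points in the stage’s coordinate system. `place_in_set` is a hypothetical helper, not part of AMGI’s or Dimension Studios’ actual pipeline.

```python
import math

Point = tuple[float, float, float]


def place_in_set(points: list[Point], yaw_degrees: float, offset: Point) -> list[Point]:
    """Rotate captured points about the vertical (Y) axis, then translate them
    into the shared set's coordinate system."""
    theta = math.radians(yaw_degrees)
    c, s = math.cos(theta), math.sin(theta)
    ox, oy, oz = offset
    placed = []
    for x, y, z in points:
        # Right-handed rotation about Y: x' = x*cos + z*sin, z' = -x*sin + z*cos
        rx = c * x + s * z
        rz = -s * x + c * z
        placed.append((rx + ox, y + oy, rz + oz))
    return placed


# A point half a metre in front of a performer (facing +Z), turned 180 degrees
# and moved 10 m along X in the set.
print(place_in_set([(0.0, 1.7, 0.5)], 180.0, (10.0, 0.0, 0.0)))  # prints [(10.0, 1.7, -0.5)]
```

The same transform applies to every frame of a performance. Turning a performer 180 degrees is therefore a change of parameters rather than a reshoot. In this sketch, the real cost lies in handling the data, not in the placement math.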

Size of the Rig

The 108-camera rig was only large enough for one member at a time to be filmed. This is not a limitation of the core technology but a consequence of the team’s desire to see the band members’ faces: the wider the volume, the less the team could push in on those faces. This was particularly an issue when capturing BTS. The band enjoyed the experience. Even so, as a very tight and well-established group, the members of BTS are used to physically connecting with each other as part of their stagecraft rather than performing alone.

The limitations of the cameras are what dictate the size of the volume. “In this case I was happy to have the size that we did because it meant the fidelity of the capture was much higher,” says Grant. “It was a little constraining for the artists to perform probably, but I don’t get the sense that it was a problem for them.” The team at Ingenuity Studios had used a much bigger space on a project for Canon: “that was a much larger space, and the fidelity of the capture really isn’t as good on a larger area.”
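Simple arithmetic makes Grant’s point about fidelity concrete. The Python sketch below assumes each camera is framed so that its full sensor width spans the capture volume. It ignores lens distortion, camera overlap, and reconstruction quality. The 4096-pixel sensor and 0.15 m face width are illustrative values, not the Polymotion Stage’s specifications.

```python
def pixels_across(subject_width_m: float, volume_width_m: float, sensor_width_px: int) -> float:
    """Approximate horizontal pixels covering a subject, assuming a camera framed
    so its full sensor width spans the capture volume (no distortion or overlap)."""
    if volume_width_m <= 0:
        raise ValueError("volume width must be positive")
    pixels_per_metre = sensor_width_px / volume_width_m
    return subject_width_m * pixels_per_metre


# Hypothetical values: a 0.15 m wide face and a 4096-pixel-wide sensor
for width in (2.0, 6.0):
    print(f"{width} m volume: {pixels_across(0.15, width, 4096):.0f} px across the face")
```

With those hypothetical values, a 2 m volume puts about 307 pixels across a face and a 6 m volume about 102. Tripling the width of the volume cuts the linear detail on each face to a third. That is why a tighter volume trades performer freedom for fidelity.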

Virtualizing an Additional Close-Up Camera

AMGI’s Colin Brady was quick to point out that a hybrid solution might work well moving forward. Such a system would keep the wide coverage that is standard with the 108-camera rig and add a set of longer-lens cameras that could be used to virtualize a closer camera position for facial performances. This would effectively capture the band members in two separate but overlapping volumes, one for the close-up and one for the wide shot.

Performing to the Camera

An interesting issue for a performer, as well as for a director, is being able to perform to the camera. Artists as professional and experienced as Coldplay’s Chris Martin are more than comfortable performing to a camera. Furthermore, a director will often seek very specific performance blocking in relation to the framing, yet without a single primary camera this is impossible. Thus, the members of BTS and Coldplay had to perform much as they would in a concert, but with no obvious front of stage or single camera reference point. Neither style of performing poses a problem for either band. Still, it is unusual for them to perform as they would at a concert while being captured individually and in a much tighter space.

Don’t shoot it in Post

Regarding the cinematography, volumetric capture is like motion capture, in that both can be recorded without blocking or framing for any specific camera position. “James Cameron has spoken about that issue of virtual cinematography in Avatar,” comments Colin Brady, CCO/CTO of Animation at AMGI. “If you are filming actors on a wide shot you want the performance to be broader than if you are filming it for a close-up. You want the actors to be really subtle in a close-up and you have to prepare for that. So I think in this case the issues are similar.”

“Where it is successful and where it can be really useful is for viewing an actual performance from 80 different angles,” explains Grant. He believes that if a project called for recording an actual Coldplay concert and presenting that concert in a video game or watching it in a pair of VR goggles, “then filming it in a volumetric fashion you will be able to capture the performance better than in a MoCap suit and then retargeting it to a 3D character,” he says.

What one cannot do in post is alter a person’s head direction or offset the movement of an arm. The captured performance has visual authenticity, but it cannot be reanimated the way a rigged 3D digital human avatar can.

The Visual Language of Holograms

For the audience to appreciate the narrative of the bands ‘beaming in’, the creative decision was made to degrade the volumetric captures so they conform to the universal language of holographic cinema. Since the days of Leia and R2-D2, holograms have been visually indicated with transparency, a bluish tint, and visual imperfections.

It is slightly ironic that rather than trying to make the captured volumes look as real as possible, the team needed to degrade the data to ‘sell’ the narrative. As luck would have it, early testing delivered exactly that, by accident. “From the beginning, the director always wanted us to add some effects and make them kind of glitchy and imperfect,” explains Colin. “Our very first pass actually had natural glitches. There was kind of an offset of the textures versus the actual geometry. And they were kind of glitching every six frames or 16 frames in some cases in strange ways. But the thing is, it kind of looked cool! And looking at it, the natural glitches almost looked like we were putting them in.”

Motion Blur and Camera Blur

Given the nature of the process, the system does not produce motion blur. In a shot with a moving camera, the virtual cinematography allows for camera motion blur. However, an individual’s own movement, such as J-Hope moving his arm, produces no motion blur on the hand. In some respects, the team believes this is a feature and not a bug. “We see it as part of the look of the shot being a hologram, and here we are not trying to sell it as a real presence of a person that was shot in-camera,” comments David.

Data management

The team found the data format very interesting to work with. “The raw format is a really cool format. It is an MP4 format. It’s basically like a movie file, that is incredible,” Colin comments. Microsoft developed the format, which encodes a ‘3D data movie’ into an MP4-type file, “and it’s pretty mysterious how it works. However, as we were rendering with the Unreal Engine, it didn’t quite play well with the format.” To solve the problem, the team had to turn the 3D data into Alembic files, or 3D caches of data. Unfortunately, those didn’t always sync up with the textures. “So just in order to get a consistent playback, it was about 10 times harder than we thought it would be,” Colin joked. “But for the record, the actual MP4 format is a beautiful format. It’s just not predictable and repeatable for rendering processes, but it’s a wonderful live format.”

One curious aspect of the format was how it handled the edges of the characters. “One advantage of using the volumetric is occasionally we were able to give a little bit of a glow at the edges of the holograms,” explains Colin. For example, when Chris Martin’s arm crossed in front of his body, the image displayed something similar to a Fresnel effect, “which you wouldn’t have gotten in live action. It’s a totally nerdy thing that I’m proud of when you do see it,” Colin adds.

The system captures a point cloud, which is then reconstructed via polygonization on a per-frame basis, without any temporal cohesion between frames. Dimension Studios gave the team the choice of having the data exported as-is, with the full captured data for every frame. “However, they recommend having a keyframe every six frames, a keyframe to aid with consistent geometry,” Colin outlines. In this respect, the data format works like MPEG. The team could also have chosen to have the data handed over with a keyframe every 12 frames. In real terms, using keyframes meant the data size was smaller, as the textures associated with the Alembic cache would only fully update every six frames. “If you could see the textures that were associated with the Alembic cache, it was a crazy movie file to watch where every six frames you would see a texture, it would then have a little bit of a shimmer, and then it would change every six frames to another completely wildly different UV layout.”
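The keyframe scheme can be sketched as a simple mapping from each frame to the keyframe whose mesh topology and UV layout it reuses. This Python sketch follows Colin’s description in assuming that the layout only changes on keyframes and that the frames in between reuse it. `keyframe_schedule` and `layout_count` are hypothetical names. The sketch does not describe the actual encoding used by Microsoft or Dimension Studios.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FrameRef:
    frame: int      # frame number within the clip
    keyframe: int   # keyframe whose mesh topology/UV layout this frame reuses
    is_key: bool    # True if a new topology/UV layout starts on this frame


def keyframe_schedule(num_frames: int, interval: int) -> list[FrameRef]:
    """Map each frame to its governing keyframe for a fixed keyframe interval.
    interval=1 models 'full captured data per frame' (every frame is a key)."""
    if interval < 1:
        raise ValueError("interval must be at least 1")
    return [FrameRef(f, f - f % interval, f % interval == 0) for f in range(num_frames)]


def layout_count(num_frames: int, interval: int) -> int:
    """Number of distinct UV layouts (one per keyframe) in the clip."""
    return sum(ref.is_key for ref in keyframe_schedule(num_frames, interval))
```

For a hypothetical 96-frame clip, `layout_count(96, 1)` is 96 (full data per frame), `layout_count(96, 6)` is 16, and `layout_count(96, 12)` is 8. Frame 13 at an interval of six resolves to keyframe 12. Each keyframe boundary in this model is where the ‘wildly different UV layout’ Colin describes would appear.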

Unreal Engine

On this project, the team used UE4, with the data imported via a plugin. At the time of the production, the import worked extremely well for review. Unfortunately, when the same data was run through UE4’s Sequencer, playback became unstable and the “frame rates somehow did not match the actual frame that we thought we were rendering,” Colin recalls. “It was a little bit like rolling the dice, and even if it was just a couple of frames off, you just can’t build that kind of unpredictability into a show like this, so we moved to the path of Alembic caches.”

Unfortunately, that was not the end of the issue. Colin points out that when they were doing this project earlier this year, Unreal was not “really great at bringing in large Alembic files.” The issue was the import process, which could take a considerable amount of time in UE4. “We’ve done some other experiments recently and we brought in about a nine-gigabyte Alembic cache into Unreal. And, even with our fastest machine, you might as well walk away for 20 or 30 minutes. The good news is you only have to do it once.” As My Universe had 65 volumetric shots, the team decided to build them into one giant scene file. The company used Perforce for shot management. With COVID and remote working, this meant the team had to transfer 90-gigabyte files between artists working outside the office.

The team produced My Universe in UE4.26. They recently tested the same volumetric capture data with UE4.27 and, unfortunately, found the same issues with Sequencer in the new version. Ingenuity Studios are huge fans of Unreal and use it a lot, yet they are keen to see it developed further for highly complex productions such as this. One aspect in particular that the team would like Epic to develop is procedural control for versioning with universal timecode. Grant explains that, in his opinion, this is the biggest problem they have with Unreal. “It’s not procedural in nature. If we were in Houdini, you’d have that master scene and you could have transforms for every shot, and thus you could do shot-specific overrides for where the camera is, with constant timecode for the whole shoot.” He loves Unreal, yet he dreams of being able to say to a client, ‘here are three options’. “And I can save these three options and come back to them later!”
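The workflow Grant describes combines one master scene, per-shot overrides, a single timeline, and saved client options. The Python below is a hypothetical illustration of that pattern as a small data structure, not Houdini’s or Unreal’s API. `MasterScene`, `Transform`, and the shot and option names are all invented for the example.

```python
from dataclasses import dataclass, field

Vec3 = tuple[float, float, float]


@dataclass(frozen=True)
class Transform:
    """Placement of one element (e.g. a hologram or camera) in the master scene."""
    translate: Vec3 = (0.0, 0.0, 0.0)
    yaw_degrees: float = 0.0


@dataclass
class MasterScene:
    """One shared scene with default placements and per-shot overrides."""
    placements: dict[str, Transform]
    # First frame of each shot on the single master timeline
    shot_starts: dict[str, int] = field(default_factory=dict)
    # shot name -> element name -> overriding transform
    shot_overrides: dict[str, dict[str, Transform]] = field(default_factory=dict)
    # option name -> snapshot of shot_overrides
    saved_options: dict[str, dict[str, dict[str, Transform]]] = field(default_factory=dict)

    def override(self, shot: str, element: str, xform: Transform) -> None:
        self.shot_overrides.setdefault(shot, {})[element] = xform

    def resolve(self, shot: str, element: str) -> Transform:
        # A shot-specific override wins; otherwise use the master placement
        return self.shot_overrides.get(shot, {}).get(element, self.placements[element])

    def master_frame(self, shot: str, local_frame: int) -> int:
        # Every shot's frames are expressed on the one shared master timeline
        return self.shot_starts[shot] + local_frame

    def save_option(self, name: str) -> None:
        # Copy the nested dicts so later overrides do not alter the snapshot
        self.saved_options[name] = {s: dict(o) for s, o in self.shot_overrides.items()}

    def load_option(self, name: str) -> None:
        self.shot_overrides = {s: dict(o) for s, o in self.saved_options[name].items()}
```

In this sketch, turning one hologram 180 degrees in a single shot leaves every other shot on the master placement. Calling `save_option("option_a")` and later `load_option("option_a")` restores exactly that set of overrides, which is the ‘here are three options’ workflow in miniature.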

Colour grading and integrating

As the data from the capture is a solid 3D object, it can be relit to some degree. However, the process is not designed to be fully relightable, since any added lighting effectively sits on top of the lighting already captured in the volume. Unlike a Light Stage capture, it has no separate polarized diffuse and specular passes.

What is possible is casting very accurate shadows since, unlike a flat video card or layer, the musicians were solid forms. Another very useful consequence is that any smoke or mist simulation will flow naturally around the characters. Nothing could be ‘attached’ to the captured performers, since their geometry was not temporally stable. Even so, an actor could be made to look as if they were moving through a stack of ping pong balls, or indeed water, if correctly simulated.

My Universe:

AMGI also contributed character animation shots.

Additionally, for the film clip, the following companies contributed major shots.

BUF:

VFX Producer – Philippe Lépine

VFX Producer – Camille Gibrat

BUF crafted the full CG intro and outro sequences as well as the spaceship’s interior shots, splitting the work between its Paris and Montreal teams.

RODEO FX:

VFX Supervisor – Erik Gagnon

VFX Producer – Emilie Debiasi

Rodeo FX’s VFX team was involved from the shoot through to delivery. In addition to creating the effects, Erik Gagnon, VFX supervisor at Rodeo FX, worked closely with director Dave Meyers on set in Barcelona. Rodeo FX created the entire environment of the planet ‘Floris’, on which Coldplay performs, with a team of over 30 artists.

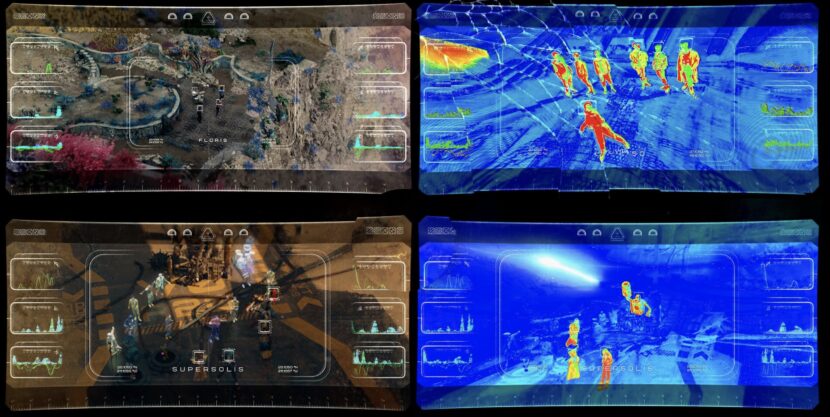

TERRITORY:

Creative Director – Marti Romances

Executive Producer – Lyniel Dao

Producer – Ari Ali

Territory Studio was tasked with designing the UI and communication systems for My Universe, building uniquely refined UI, holograms, and graphical elements featured throughout the promo. The multitask brief required design and animation of menus and HUD systems for the spaceship interior, the drone POVs of different galactic planets, VFX transitions teleporting band members from one planet to another, as well as titling and text elements.