Trojan Horse was a Unicorn is wrapping up here in Portugal. As we’ve already commented, it’s an event like no other – almost completely artist-centric and one where every attendee feels like they’ve been part of something very special. Trojan Horse is limited to only a few hundred attendees, who have direct access to all the speakers, often for review of their portfolios. It almost feels like you walk away with a few hundred new friends at the end of the event, too.

We’ve seen some of the world’s best graphic artists, illustrators, designers, CG artists, make-up effects artists and visual effects supervisors at Trojan this week, and now we wrap up our coverage with a look at Kevin Mack’s psychedelic VR experience, an insight into perception from David Prescott and a showcase of the event’s opening and closing title sequences by the talented team at Juice.

What Dreams May Come to VR?

Visual effects supervisor Kevin Mack won an Oscar for What Dreams May Come, a groundbreaking effects film that showcased optical flow, L-systems and a painterly aesthetic. He worked for many years at Digital Domain and at Imageworks, also contributing to such films as Fight Club, Ghost Rider and Speed Racer. During his time on those films, Mack remained dedicated to fine art and for several years produced digitally created surreal sculptures, scenes and abstract images. Now he’s placed his 3D creations into what can only be described as a psychedelic VR experience, called Shape Space, in which the painterly shapes glide slowly past the viewer to an equally psychedelic soundtrack. fxguide donned the VR goggles for a trip into this world at Trojan Horse was a Unicorn in Portugal.

Note: We certainly realize it can be hard to appreciate VR experiences in articles such as these without seeing them in action – but you can check out a short video demo of the work at Mack’s website here to get an idea of the look and feel of Shape Space.

fxg: Your VR experience seems to make the viewer feel something, even though it’s a very abstract experience – how did you do this?

Mack: People do report some pretty extreme emotions, even crying. A lot of the stuff I’ve seen in VR can lack a certain substance to it. I think the issue is that most people are considering VR an extension of existing media. It’s an extension of games, or adding 3D to a movie, an added feature to our traditional forms of narrative content. But virtual reality is a fundamentally different medium. It’s a completely different part of our brains that responds to a virtual experience. With movies, we respond to them from a third person objective perspective – we are watching it as the voyeur. But in VR we are having the experience. It’s a first person subjective experience.

Still, everyone’s talking about the future of storytelling as VR, but I don’t think it’s a storytelling medium in the way that we think of traditional narrative. It won’t work in VR. I think narrative and storytelling will evolve into something else, in ways that do work in VR.

What I’m doing is avoiding all of that! By not having any narrative, not having any game or interactivity. But I’m going for a psycho-active experience. Because there’s nothing for you to get a hold of, there’s nothing representational there – it’s photo-real, but your brain cannot recognize anything verbal or known or understood. So it activates a very different part of your brain. It seems to trigger an experience of presence and mindfulness in that it engages your brain in a feedback loop. Your brain tries to figure out what you’re seeing. So you get some pareidolia, where you see some shapes and things in there and perceive those in a certain way.

fxg: You were producing those 3D painterly images a while ago, weren’t you – how are they made?

Mack: It’s procedural, done in Houdini. It’s not fractals, it’s a combination of rule-based systems, implicit surfaces. I basically build natural systems. Instead of modeling a natural system after the way crystals grow or after the way plants grow or clouds form, I use the same overall system in that it’s a system – but it’s not any system we’ve seen before. It’s my own system. And I incorporate into that system elements that are hand-made. I make digital paintings that are made by hand. Those serve as sources to the system. The system builds a whole world out of those elements. They’ve been animated all along. The animation is integral to their creation. That’s part of the function that creates them over time.

fxg: What was your learning curve to creating these images now for VR?

Mack: Well, to compose for 360 degrees is a very different challenge. I’ve been a huge fan of 3D since I was a kid – I used to draw 3D comic books with red and blue pencils. So I was trying to make an experience that would take advantage of the capabilities of virtual reality and exploit its strengths and not worry about trying to tell its story or make a game or solve a puzzle.

One thing that threw me a little was the whole motion sickness issue. I don’t get motion sickness so it’s hard for me to judge. You can spin me around all day – I love it! But I realized in one of my early tests that I was moving the camera too much. They say you shouldn’t move it at all, but I found that by moving it very slowly and not in a perfectly straight path, that it creates this experience where you can’t quite work out what you’re looking at.

One of the big issues with mobile VR is that it doesn’t have translational tracking – it’s just rotational. So when you move your head it’s not perfectly nodal, and you’re going to get that discrepancy and lack of parallax. But it doesn’t seem to matter for what I’m doing because your brain can’t work out that it’s not happening because what you’re seeing is so complex.

fxg: The experience was already very immersive, but there were times when I wanted to put my hand out and manipulate the objects and stir the paint up a bit. Tell me that you’re going to try and do that…

Mack: Ha, it’s definitely something I’m interested in pursuing. It’s not really possible right now. I am investigating realtime rendering with Unreal Engine, but this has way too much complexity for a realtime engine. It’s interesting to me – everyone’s response is that they want to reach out and touch the things. So many people say they want to manipulate them and interact with them. But I’ve learned from the whole process that the fact that you can’t do that is part of the power of the experience. You are disembodied and it causes a change, a shift in your brain. As soon as you can interact with the world, you’re engaging a different part of the brain.

fxg: How did you manage to render these complex images?

Mack: I struggled with the rendering. With the sheer horsepower required, I had to beg, borrow and steal to get these rendered. I could have rendered almost a whole movie for the 7 1/2 minutes of VR. The rendering was done by Intel in the end for me. They had some powerful development machines and it was 45 days and nights of solid rendering. There are breaks in it because things happen, machines crash et cetera. So it took several months to render seven minutes in stereo and in 4K.

fxg: Could you do preview renders beforehand at all?

Mack: No, I had to guess everything and that’s the first pass that you saw. It’s interesting, because I know the workflow from visual effects where you render AOVs and you have a pass for everything and can composite things together. But I’ve always resisted that process – I much prefer to fix things in the render than in comp. Compositing isn’t my favorite thing, I’d rather not have to do it. Sometimes I do some post processing, but the Shape Space VR has none – that’s one pass. One element. No layers.

fxg: What was involved in working out how to render it out?

Mack: It’s an equirectangular lat-long. I render in Houdini, in Mantra. There is no equirectangular renderer in Houdini. And I wanted to do it in stereo and that was something that wasn’t really out there yet. So people told me I couldn’t really do that. I saw it as a geometric problem that’s been going on since Cartesian coordinates were invented – there’s going to be a discontinuity somewhere if you have a stereo pair and have panoramas. When you look down, you’re going to hit a line where the image is going to invert and it’s going to be backwards – the front will go back and the background will come forward.

I thought about that and I solved it geometrically. I know other people have done this – it wasn’t like I was the first person to solve it. But there was nothing out there, I couldn’t find any information about how to do it. So I figured out how to deal with the discontinuity, but not solve it because it’s intractable. But I was able to reduce the discontinuity to a singularity at the folds.

My son, Jon, is a Houdini guru and he wrote a lens shader for me. He’s now adding features to the shader. He actually did it in an hour and said it was pretty trivial, but even he didn’t believe me that it would work. We named it the MackDome shader and it can render domes as well as spheres.
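For readers who want to see the geometry Mack is describing, here is a minimal Python sketch of one common way to set up stereo rays for an equirectangular lat-long image. It is not the MackDome shader, whose internals were not described in the interview. The function name, the `ipd` default, the axis convention and the cosine falloff of eye separation toward the poles are all assumptions made for illustration. The falloff is one way to shrink the stereo discontinuity down to a single point at the top and bottom of the sphere.

```python
import math


def latlong_stereo_ray(u, v, eye, ipd=0.064, pole_falloff=True):
    """Return (origin, direction) for one pixel of a stereo lat-long panorama.

    u, v are normalized image coordinates in [0, 1]: u spans longitude,
    v runs from the top pole (v=0) to the bottom pole (v=1).
    eye is -1 for the left eye and +1 for the right eye.
    Convention (an assumption): y is up, lon=0 looks down +z, right is +x.
    """
    lon = (u - 0.5) * 2.0 * math.pi   # -pi .. pi
    lat = (0.5 - v) * math.pi         # +pi/2 (up) .. -pi/2 (down)

    # Unit view direction for this pixel.
    direction = (
        math.cos(lat) * math.sin(lon),
        math.sin(lat),
        math.cos(lat) * math.cos(lon),
    )

    # Shrink the eye separation toward the poles so both eyes converge
    # to a single point when looking straight up or down.
    scale = math.cos(lat) if pole_falloff else 1.0
    half = 0.5 * ipd * scale * eye

    # Offset the ray origin horizontally, perpendicular to the heading.
    origin = (half * math.cos(lon), 0.0, -half * math.sin(lon))
    return origin, direction
```

Calling `latlong_stereo_ray(0.5, 0.5, +1)` gives a right-eye ray that starts half the eye separation to the right of center and points straight ahead. With `pole_falloff=False` the two eyes keep their full separation all the way to the poles, which is where the front-to-back inversion Mack mentions would show up.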

A lesson on perception

David Prescott is also a former Digital Domain visual effects supervisor with credits there on films like Titanic, What Dreams May Come, The Day After Tomorrow and Aeon Flux. He also worked at DreamWorks Animation on Rise of the Guardians. Although Prescott did present on some of his innovative solutions used on those films, his real message was about perception – and how artists can look at things from different perspectives and bring incredible benefits in the process.

Prescott grew up in South Africa, had an early interest in photographing miniatures and quickly developed an interest in art and photography. After scoring a job working in commercials, he says he had a ‘lucky break’ while delivering a Toyota commercial. It was a spot that required smoke, which at the time was typically done in CG with spheres. But when these spheres intersected they often had weird looking lines, so Prescott thought why not do them with circles (which kind of looked like spheres). The result was successful and Prescott reflected that it was his almost complete lack of understanding about CG techniques or vertices or normals that helped – what he had actually done was cheat the point normals, but in his own mind he had just thought about the problem differently.

It just so happened around the same time that the team at Digital Domain needed a smoke solution for Apollo 13, and Prescott shared his technique, ultimately scoring a job at the studio. It was at DD that Prescott says he got ‘paid to look’. He would continue to innovate, too, devising an L-system approach to the tree DD contributed to What Dreams May Come and even recommending significant changes to the shooting of miniatures via motion control (by previs’ing all the possible movements in Houdini) that would shave weeks off the process.
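For anyone unfamiliar with L-systems, the idea is simple string rewriting: start with a seed and repeatedly replace each symbol according to a rule, then interpret the result as drawing or branching instructions. The Python sketch below shows only that core rewriting step. It is not Digital Domain’s setup; the function name and the `TREE_RULES` branching rule are hypothetical examples.

```python
def expand_lsystem(axiom, rules, generations):
    """Rewrite every symbol in parallel, once per generation.

    Symbols without a rule are copied through unchanged.
    """
    current = axiom
    for _ in range(generations):
        current = "".join(rules.get(symbol, symbol) for symbol in current)
    return current


# Hypothetical branching rule: F = grow a segment, [ and ] = start and end
# a side branch, + and - = turn one way or the other.
TREE_RULES = {"F": "F[+F]F[-F]"}

branchy = expand_lsystem("F", TREE_RULES, 2)
```

Each generation here turns every `F` into four, so the structure becomes bushier very quickly, which is why a short rule can describe a very complex tree.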

That moco innovation turned out to be something Prescott did without being asked to do it, and without him asking the studio to do it either (it’s better to ask for forgiveness than for permission, he says). On top of that, it was actually an admittedly self-serving gesture, since all he really wanted to do was spend more time on the moco stages shooting with the likes of VFX supe Erik Nash.

In terms of perception, Prescott shared his experience working at DD on the first X-Men movie. For a scene in which the mutants fight near and on the Statue of Liberty at night, the VFX team delivered a digital statue. Having shot photographic reference of the real statue at night, they noticed it was bathed in blue light (and so appeared blue, of course, even though it is green in color during the daytime). They made it blue and showed the client, who said it didn’t look real. Prescott realized that most people THINK the Statue of Liberty is green, and that was why in the night shots they thought it looked incorrect. With some fast color correction the team was able to make the structure green instead of blue and final the sequence.

On Rise of the Guardians, Prescott had several challenges. One was to make a sand effect look ‘evil’, a critical story point. Initially it was thought the golden sand that had been created already could just be turned to black, but it still didn’t look evil enough. When one of the DreamWorks artists was playing the scenes through it happened to be shown in reverse. Immediately the team realized that that sand looked ‘evil’. The reason, suggests Prescott, was that as an effects sim, the motion of the normal sand was still somewhat predictable, but backwards it was not.

So he asked his effects team to ‘run the sim backwards but then make it go forward’ (a line Prescott says made him feel like he had become just like a client with an impossible, obscure request!). But the DreamWorks artists nailed it by running the sim, re-ordering it from back to front and then placing it along a spline to give it the unusual, sought-after motion. Again, it was all about coming at the problem from a different perspective.
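The ‘backwards but forward’ idea can be illustrated with a toy Python sketch: play cached simulation frames in reverse order, then carry the result along a path so the overall travel still reads as forward. This is only an illustration of the concept, not the DreamWorks pipeline. The function names and the data layout are hypothetical, and a straight-segment polyline stands in for the spline to keep the example short.

```python
def sample_path(points, t):
    """Position along a polyline of 3D control points, with t in [0, 1]."""
    t = min(max(t, 0.0), 1.0)
    scaled = t * (len(points) - 1)
    i = min(int(scaled), len(points) - 2)
    f = scaled - i
    a, b = points[i], points[i + 1]
    return tuple(a[k] + (b[k] - a[k]) * f for k in range(3))


def reverse_along_path(frames, path):
    """Reverse the frame order, then offset each frame along the path.

    frames: list of frames, each a list of (x, y, z) particle positions
            stored relative to the emitter.
    path:   list of at least two (x, y, z) control points.
    """
    reversed_frames = frames[::-1]
    last = max(len(reversed_frames) - 1, 1)
    result = []
    for n, frame in enumerate(reversed_frames):
        ox, oy, oz = sample_path(path, n / last)
        result.append([(x + ox, y + oy, z + oz) for (x, y, z) in frame])
    return result
```

The local particle motion runs backwards, which is what makes it feel unpredictable, while the path supplies the forward travel the shot needs.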

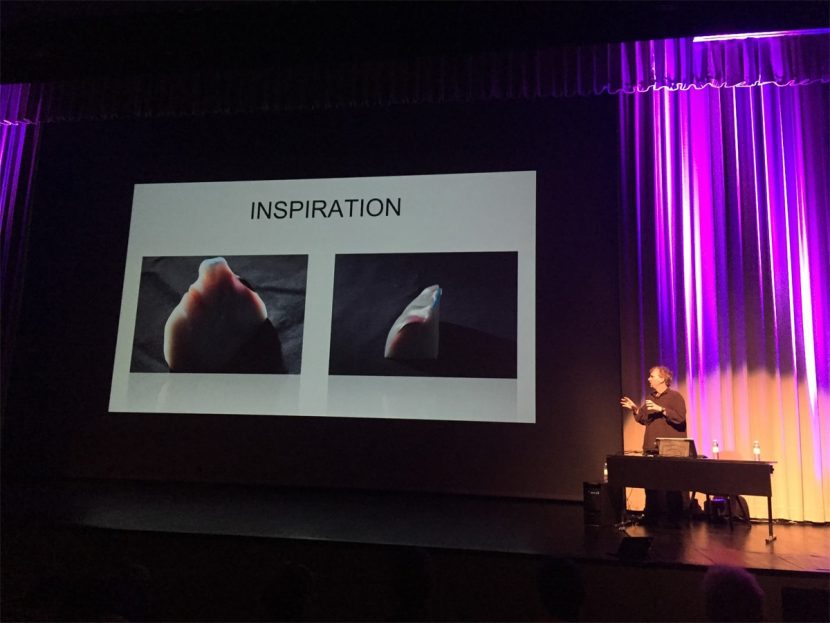

Similarly, Prescott looked for a different approach in realizing the look and feel of character skin in Rise of the Guardians. He said skin at the time on animated characters was starting to look the same and he wanted to do something that wasn’t just about writing a new CG skin shader. Instead, he and production designer Alex McDowell took the unusual step of consulting Legacy Effects to see how they would tackle the issue of skin appearance and sub-surface scattering.

Legacy built a 1/4 face in silicone (it was only 1/4 as Prescott wanted to avoid the film studio commenting on the actual face design too much) out of several silicone variants. The result showed different translucencies and a blue iridescence on top – the skin actually looked different from different angles. When Prescott asked Legacy how it was achieved, the answer turned out to be more technical than he first thought – with clearly measurable widths of silicone below the surface determining the level of translucency.

And that’s exactly how DreamWorks would implement the actual skin shader – by having no detail on the surface but allowing rays to hit beneath it at clear predetermined depths. “That’s what Legacy had done,” says Prescott. “So we replicated it.”

Juice freshly squeezes Trojan Horse’s unique titles

If you haven’t heard of Polish post house Juice, you should check out some of their great work. The studio, which also has an office in Hamburg and soon Japan, helped bring to life this fun teaser for The Martian with Neil deGrasse Tyson. At Trojan Horse, Juice showcased opening and closing titles made specifically for the event.

The titles were designed to evoke Trojan Horse’s sense of passion, and the idea of how the event can re-invigorate and renew artist passions. They also reveal the concept of the ‘tribe’ at Trojan Horse, that is, the collective of attendees. After a week here in Troia, fxguide has clearly seen how the tribe mentality works – and the fact is it’s an incredible bringing together of conversation, ideas and absolute devotion to art.

Beginning with concepts in Photoshop based off the voice-over (which is Trojan Horse ambassador Scott Ross), Juice created an animatic for the titles, and then launched into modeling and animation production that ultimately spanned the globe. The characters featured in the titles were in fact all sculpted by different modelers, with face shapes modeled individually and blend shapes used for animation. The fluid simulations were achieved in RealFlow. Rendering was handled in Chaos Group’s V-Ray. You can find out more about Juice and their range of unique projects at the studio’s website.

Trojan Horse might be over for another year, but here at the event a dedicated video team captured many of the sessions and filmed specialized shows that will be available over coming months on THU TV.