Over the past year, Pixomondo (PXO) has opened three virtual production facilities, with shows like Star Trek: Discovery, Star Trek: Strange New Worlds, and Avatar: The Last Airbender employing these stages and crew for their projects.

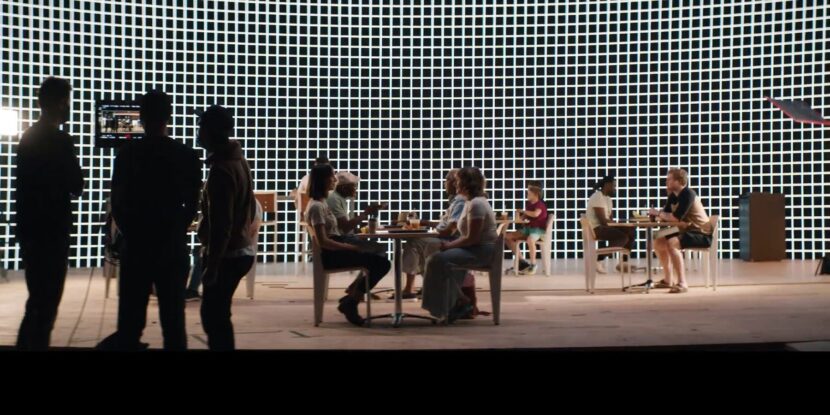

While Virtual Production (VP) is a clear option for otherworldly, fantastical settings, PXO wanted to show how well it could work for TV commercials (TVCs). The result was a spec spot and proof of concept for McDonald’s, shot entirely in VP on one of PXO’s Toronto stages. The spot showcases how this technology can be deployed in one of the most traditional TVC environments: a fast-food restaurant interior.

For this piece, the story itself illustrates the power of shooting on the LED Volume. The team was able not only to simulate three entirely different seasons (winter, spring, and summer) but also to hold sunrise lighting for the entire day. Controlling the quality of light while still feeling as though the production is shooting on location is a primary focus of what can be achieved within an LED Volume studio. The team estimates that the McDonald’s shoot took a single 12-hour day with a third of the normal crew and a third of the usual lighting equipment. Executing this script conventionally on location would normally have meant a two-day shoot, not counting travel time if the location were remote.

The McDonald’s project was a collaboration between Alter Ego, PXO Virtual Production, William F. White International Inc., Feels Like Home Films, and co-directors Matthew Manhire and David Whiteson. Mahmoud Rahnama is Head of Studio and VFX Supervisor at Pixomondo, and the DOP was Eric Whipp. We spoke to the team about the project and the benefits they found in working this way.

FXG: Can you discuss asset creation? For example, was the store fully 3D or an actual restaurant photographed and mapped onto simple geo to allow for the parallax?

Director David Whiteson: To be as accurate to a real-world environment as possible, I sat with a traditional architect to lay out the space in CAD. The benefit of using an architect is that they understand the form, structure, and materials needed to build a real space. Once we had a space we were happy with, the CAD model was handed over to the CG department, where they lit and textured it and brought an immense amount of realism to the environment. Absolutely no real photography was used. The entire space was built in 3D, maximizing the potential for parallax when the camera moves.

FXG: What tools or programs were used to create the digital assets?

VFX Supervisor Mahmoud Rahnama: I would say 90% of this entire environment was built in Unreal Engine. The elements common to both the practical world and the virtual world were scanned, re-topologized, and built in Maya before going to Unreal. The building itself came from the architect as an FBX file, which we brought into Unreal and used as the basis for everything. We utilized a lot of assets from the Unreal Marketplace, Sketchfab, and Quixel Megascans. We did all the look development inside Unreal.

FXG: What is the size and nature of the LED panels on the stage?

Mahmoud: This spot was shot on PXO/WFW’s (Pixomondo and William F. White International) smaller volume, which has close to 800 wall panels and about 200 ceiling panels. The volume is 62’ in diameter and the wall height is 20’. The ceiling has five different sections that can move vertically and tilt by remote control. The wall panels are ROE’s latest Black Pearl II v2, with a 2.84 mm pixel pitch, which is the best panel on the market for in-camera VFX these days.

FXG: I assume the top hanging LEDs are different from the sides?

Mahmoud: That is correct. Our ceiling panels are ROE CB5s, which are three times brighter than the wall panels. They are meant for lighting and reflections, but they still hold up in camera if they are not in focus.

FXG: What was the camera tracking system you used?

Mahmoud: On this particular stage we use OptiTrack cameras. We have about 50 cameras with different ranges tracking inside and outside the volume. This stage supports multi-camera tracking. We can also track props and dynamic lights; for example, a practical light within the volume can illuminate the virtual world.

FXG: Did the DOP use any virtual bounce cards (via the LED walls) to aid with closeups?

DOP Eric Whipp: We definitely were using virtual bounce (white cards on the virtual wall) in our initial tests. For the actual shoot day, once we had our lights in place, we found that we didn’t require any extra bounce, but we had it standing by if needed. The CG set on the virtual wall creates great overall lighting on the characters. From there, I added a hard light to simulate some sun coming in the windows, and some softer fill to shape the faces where required.

FXG: What is the latency on this stage, and is this stage primarily using nDisplay?

Mahmoud: This really depends on many different factors, but I would say 3-5 frames, and yes, it was using nDisplay in UE 4.27.
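To put a latency quoted in frames into wall-clock terms, the conversion is simply frames × 1000 / fps milliseconds. The sketch below assumes a few common frame rates, since the interview does not state the frame rate used on the shoot; at 24 fps, 3 frames is 125 ms and 5 frames is roughly 208 ms. It is a rough conversion only and says nothing about where in the tracking and rendering pipeline that delay arises.

```python
def latency_ms(frames: int, fps: float) -> float:
    """Convert a latency expressed in frames to milliseconds at a given frame rate."""
    return frames * 1000.0 / fps


# Assumed frame rates for illustration; the article does not give the shoot frame rate.
for fps in (23.976, 24, 30):
    low, high = latency_ms(3, fps), latency_ms(5, fps)
    print(f"{fps:>6} fps: {low:.0f}-{high:.0f} ms for 3-5 frames")
```

Higher frame rates shrink the same frame count into less time, which is one reason a latency figure quoted in frames is only meaningful alongside the frame rate.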

FXG: Can you discuss the camera package?

Mahmoud: This spot was shot at 4K but down-rezzed for the web. Our partners at William F. White International provided us with an ALEXA Mini LF, Cooke anamorphic lenses, and a Chapman Super PeeWee III Plus dolly.

FXG: How long did the set build (the 3D store) take, i.e., how much pre-production was needed before the shoot day?

David: Laying out the space with the architect took two weeks, and the layout was then passed off to the CG department. After that point, the 3D environment build took about six weeks. One week prior to the shoot, we went into the studio for what is called a “blend day.” This is the process where we load the environment onto the LED wall and see how seamlessly it joins the real-world set. We laid down a very small section of our real floor up against the wall and had to make slight lighting and color adjustments to blend the real floor into the 3D floor. This is also an opportunity for the directors and DOP to really start shaping the overall lighting and setting the tonality. In a traditional location environment, this would be similar to a pre-light day.

Mahmoud: As this was the first time the directors and DP were seeing the environment on the wall, some slight adjustments to the restaurant were needed. We had planned on shooting on a Sunday and our blend day was the previous Monday, so that gave us ample time to tweak the environment. On the morning of the shoot, the art department laid the entire floor on the stage and set up the prop tables, chairs, and a garbage can or two. Overall, the propping was very minimal: I think six tables in total. It’s really quite difficult to see which is real and which is fake.

We did all the previz and techviz in Unreal, and because of that we knew exactly what type of shots could be achieved on the stage and exactly how to rearrange the set. For example, for the last reveal shot, we knew exactly how long a track we needed and how fast the camera should move back. Doing our homework in advance and seeing everything in Unreal before showing up to set made things very easy and enjoyable for everyone.

FXG: It appears in the BTS set photos that the store was not mapped with an interior ceiling? In some shots, there seems to be a digital sky above what would be the interior of the restaurant. Is this an example of the amazing flexibility Virtual Production can give a DOP?

David: We had constructed a ceiling in the original 3D build and we did use it in some scenarios, but the advantage to using the LED Volume is that you can turn off any section of the environment and replace it with a full white bounce card or in this case some textural sky for additional lighting.

In the final shot of the commercial, we wanted to reveal the entire stage in a wide shot. In both reality and our 3D build, there would have been a front wall to the restaurant that made it impossible to get the camera that far back and that wide. In the world of the LED volume, we literally just turned off the front wall of the building and moved our camera as far back as we liked. That is something you simply can’t do on location.

Eric: The advancement of the LED Volume allows for an extreme amount of natural light and freedom in camera movement. I’m no longer chasing the sun, trying to maintain shadows and light quality. There’s no need to assemble giant soft silks or rig numerous 6K lights to simulate the sun. I had a total of four lights for the entire day, and yet we have this continuous sense of sunrise all day long. It’s incredible! It really does feel like we’re in a McDonald’s restaurant… except we’re not!