New with UE 4.20 is support for Apple’s ARKit face tracking. Using the hardware of the iPhone X, this API allows users to track the movements of their face and use that data in the Unreal Engine. (See below)

We spoke to both Apple and Epic Games about this partnership in AR and how the new ARKit 2.0 and UE 4.20 expand the range of creative AR solutions.

Augmented reality (AR) describes user experiences that add 2D or 3D elements to the live view from a device’s camera in a way that makes those elements appear to inhabit the real world. ARKit combines device motion tracking, camera scene capture, advanced scene processing and complex merging to simplify the task of building an AR experience. You can use these technologies to create many kinds of AR experiences using either the back camera or front camera of an iOS device.

With UE4.20 and the new Apple ARKit 2.0, the potential advances in AR are enormous.

Augmented reality means creating the illusion of virtual content living in the real world. It is superimposing virtual content on top of the camera image and updating it in real time so that its position and orientation match the real world.

Many great apps already exist using ARKit and Epic’s UE4, such as Follow Me Dragon from The Virtual Reality Company, the company of Chris Edwards (The Third Floor) and Robert Stromberg (where Stromberg is Co-Founder & CCO).

Support for ARKit in UE4 first appeared as preview ‘experimental support’ in UE 4.17, and it was formally released in 4.18. But as Apple has updated ARKit, so too has Epic updated UE4. For example, the first version of ARKit really only tracked the floor; with version 1.5 it became possible to handle not only vertical surfaces but also key 2D images. UE4 lagged a little behind ARKit 1.5, but with UE 4.20 it is within a couple of weeks of Apple’s ARKit 2 release rollout.

At the WWDC 2018 keynote, Apple announced ARKit 2, which is available in iOS 12 and includes improved face tracking, 3D object detection, persistent experiences and shared experiences. Multi-user augmented reality for shared experiences adds the ability for numerous people to see different perspectives of the same scene at once, either as a participant or an observer. All devices running iOS 11 will be able to update to iOS 12, and will be able to take advantage of what the Unreal Engine can provide via ARKit.

Great early examples include LEGO AR-Studio from the LEGO Group’s Creative Play Lab. This is a creative tool, developed with Apple’s ARKit, that allows children to play with digital versions of some of the most popular LEGO sets in real-world scenes, using AR that mixes them with physical LEGO models.

Dr. Andreas Möller and Dr.-Ing. Christian Lipski from Apple presented core concepts and best practices for augmented reality on iOS at FMX last year. Apple had two major goals in the AR team:

- First, they wanted to lower the barrier to making AR material

- Second, they wanted to bring AR to a broad range of devices

While ARKit is supported on all iPhones from the iPhone 6s onward, it is with the newest iPhone X combined with ARKit 1.5, and now ARKit 2, that Apple is really changing the whole experience. That being said, when you factor in AR-friendly iPads, Apple now supports AR on hundreds of millions of ARKit-enabled devices, which makes iOS the largest AR platform in the world.

AR apps such as LEGO AR-Studio and IKEA’s AR app all use world tracking.

“World tracking is key to ARKit. Apple uses Visual Inertial Odometry to accurately track the world around it and determine the orientation and position of your device in the real world, in order to render content at the correct location”, explained Andreas Möller, ARKit Engineer. By fusing camera sensor data with CoreMotion data, the two inputs allow the device to sense how it moves within a room without any additional calibration. “With this we can give a very precise location of the device in the location”, he adds. To add content fully and realize all the types of experiences AR has, Apple also has to detect surfaces or planes in the real world that can then be used to place content on.

Andreas Möller has a background in computer science and user experience. He received his Ph.D. from the Technical University of Munich (TUM) for his research on multimodal mobile interaction. He has been working in the field of AR for years and is currently on the ARKit team at Apple.

Apple started with just horizontal plane detection in the first version of ARKit, but as of v1.5 there is also support for detecting vertical planes such as walls. Another added feature was the ability to detect key images so that you can use their location to trigger experiences.

With the information about the surfaces and where the device is, ARKit needs to accurately place 3D objects relative to those planes. If the intersection point is not the correct spatial coordinate when you place your content, the content may initially appear to sit in the space, but when you move, or it moves, it may seem to float above or sink behind the plane, thus breaking the illusion. It seems obvious, but a 2D overlay can look correct, as if it is on an object or surface, yet be clearly wrong when it casts a 3D shadow or moves around in 3D relative to that surface. ARKit is very good at solving this correctly, by mapping and tracking accurately in 3D relative to the real world. Apple’s ARKit provides hit testing via the API, which Möller explains “means intersecting a ray from your current position, and into geometries overlaid on the real world. The intersection point gives you an exact point or co-ordinate to insert your content”.
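To make the idea of hit testing concrete, here is a minimal sketch of the underlying geometry: a ray cast from the device is intersected with a detected plane. This is plain vector math, not the ARKit or UE4 API; the function name `ray_plane_hit` and the example coordinates are assumptions made for illustration.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_plane_hit(origin: Vec3, direction: Vec3,
                  plane_point: Vec3, plane_normal: Vec3) -> Optional[Vec3]:
    """Return the point where a ray meets a plane, or None if it misses."""
    denom = dot(direction, plane_normal)
    if abs(denom) < 1e-9:
        return None  # ray runs parallel to the plane
    to_plane = (plane_point[0] - origin[0],
                plane_point[1] - origin[1],
                plane_point[2] - origin[2])
    t = dot(to_plane, plane_normal) / denom
    if t < 0.0:
        return None  # the plane lies behind the device
    return (origin[0] + t * direction[0],
            origin[1] + t * direction[1],
            origin[2] + t * direction[2])


# Hypothetical setup: device held 1.5 m above a floor plane (y = 0),
# looking forward and down at 45 degrees.
hit = ray_plane_hit((0.0, 1.5, 0.0), (0.0, -1.0, -1.0),
                    (0.0, 0.0, 0.0), (0.0, 1.0, 0.0))
print(hit)
```

The returned point is where content would be anchored. If that point is even slightly off the real surface, the content appears to float or sink as the camera moves, which is the failure described above.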

Finally, Apple estimates the lighting of the real world to help with more realistic rendering. And this has been improved in the latest version.

You can render content with the simple ARKit renderer, with Apple’s Metal rendering, or with the more advanced options provided by real-time engines such as Unreal Engine. ARKit is a building block that sits below the application level in an iOS application and takes care of all the processing that one needs for augmented reality, so you can use it side by side with different rendering approaches.

With ARKit 2.0, AR apps are no longer limited to a single person or device participating in an AR experience. Now multiple users can use their iOS devices to simultaneously view AR experiences or play multiplayer games. This is perhaps the most significant feature, and one that has not really gotten the attention it deserves. A problem with VR is that it can be an isolating experience for many people. Even when other people are in the same VR space, you cannot actually see them or, more importantly, see their true facial expressions. The fact that ARKit 2.0 provides a truly shared experience will open up the range of AR applications enormously.

ARKit 1.5 added support for 2D image detection, letting you trigger an AR experience based on 2D images like posters, artwork, or signs. This allows a vertical poster or logo to be an anchor point and identifier. ARKit 2 extends this support to offer full 2D image tracking, so you can incorporate movable objects like product boxes or magazines into your AR experiences. ARKit 2 also adds the ability to detect known 3D objects like sculptures, toys or furniture.

The world is surfaced from ARKit to UE4 via the API. “60 times a second you get a frame and access to the actual captured image, and depending on how you configure the ARKit that can be at different resolutions, and you can also turn off and on focus. Along with the frame comes the tracking information and any other information about the environment and the position of the device”, explains Lipski.

Christian Lipski is a computer vision and computer graphics engineer on Apple’s ARKit team. After obtaining a PhD in computer graphics from TU Braunschweig (Germany), Christian joined the augmented reality startup Metaio, which was acquired by Apple in 2015. His work focuses on prototyping new algorithms for mobile AR and creating high quality data sets for testing and quality assurance.

Lipski points out that the ARKit API provides UE4 with the focal length or field of view, which changes not only device to device but actually frame to frame.
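Focal length and field of view are two views of the same quantity, which is why an engine needs the value on every frame. The sketch below assumes a simple pinhole camera model with the focal length expressed in pixels; the function name and the sample values are hypothetical and are not taken from ARKit or UE4.

```python
import math


def horizontal_fov_degrees(focal_length_px: float, image_width_px: float) -> float:
    """Horizontal field of view of a pinhole camera, in degrees."""
    return math.degrees(2.0 * math.atan(image_width_px / (2.0 * focal_length_px)))


# Hypothetical per-frame focal lengths for a 1920-pixel-wide image.
# As the focal length drifts from frame to frame, so does the field of view
# that the virtual camera has to match.
for focal in (1500.0, 1510.0, 1525.0):
    print(focal, round(horizontal_fov_degrees(focal, 1920.0), 2))
```

If the renderer kept a fixed field of view while the real camera's value changed, virtual objects would slide against the video background even with perfect tracking.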

Also new in ARKit 2.0 is USDZ. Building on Pixar’s open Universal Scene Description, USDZ is the new file format for displaying and sharing 3D content natively in iOS, with optimizations for storage and sharing. It was developed by Apple along with Pixar. USDZ is a “zero compression, unencrypted zip archive” designed to work with the USD runtime. It doesn’t require unpacking, according to Pixar. Features include:

- Robust schemas for interchange of geometry, shading, and skeletal deformation

- High performance data retrieval, including powerful instancing features

- The ability to package user-selectable content variations, natively

- A sound architecture that is flexible enough to adapt to future needs

- As the name implies, the file format is based on Pixar’s existing Universal Scene Description (USD) spec.
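The “zero compression” property is what lets a runtime read assets straight out of the archive without unpacking it. The following sketch uses Python's standard `zipfile` module to show the idea with an ordinary stored (uncompressed) zip. It is not a USDZ packager: a real USDZ file has further layout requirements that are ignored here, and the file name `scene.usda` is only a placeholder.

```python
import io
import zipfile


def pack_stored(files: dict) -> bytes:
    """Write the given name -> bytes mapping into an uncompressed zip archive."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_STORED) as archive:
        for name, data in files.items():
            archive.writestr(name, data)
    return buffer.getvalue()


payload = b"#usda 1.0\n"  # placeholder scene content
blob = pack_stored({"scene.usda": payload})

# Confirm the entry really is stored without compression.
with zipfile.ZipFile(io.BytesIO(blob)) as archive:
    info = archive.getinfo("scene.usda")
stored = info.compress_type == zipfile.ZIP_STORED

# Because nothing is compressed, the payload appears byte for byte inside the
# archive and can be read in place at a known offset.
offset = blob.find(payload)
print(stored, offset)
```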

UE4

As regular readers would know, the fxguide team has been involved with the research and special projects team at Epic Games for some time. With UE 4.20 you can even get a free digital copy of our own MIKE to play with in UE4. (See our other UE 4.20 story here).

We sat down with Chance Ivey, Partnership Manager at Epic Games and Mike Beach, Senior Programmer at Epic Games (AR/VR) to discuss the new advances with 4.20.

Epic has been committed for some time to expanding the world of games beyond consoles. Not only is the company pushing hard into AR, but also into non-game applications, as is evident from the Epic Unreal Studio release. This application of game engine technology to design and architecture is another perfect candidate for AR technology. Epic has been working very closely with Apple, and UE 4.20 is designed not only to run well today; it is also heavily synced with the beta of iOS 12 and thus ARKit 2.0.

As iOS 12 is not out yet, Ivey points out that Epic is providing the “earliest possible access, but since everything is still in beta, we do expect some of these things to change between now and 4.21”, which may come out closer to the full final release of iOS 12. “UE4’s AR will mature as the platform matures with Apple”, he adds.

For example, the ARKit 2.0 shared experiences all work with UE4 such that “you can have shared experiences in UE4 where essentially you can scan a space and then save that information out and then share it with others, so they can see the same tracked geometry and all the things that you might drop into that world”, explains Ivey. The change from the UE4 point of view is that “you would just have to go through and share and replicate the data. So you would have to add a networking component to your project so that people could connect to it and still access that same data on their devices as well”, he adds. The complexity of doing it is similar to that of a normal multiplayer game. One sets up the networking aspect of the project. Then you share the information, which includes all the different points and tracked geometry. This is then replicated the same way it would be in any multiplayer network game.
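The save, share and replicate flow Ivey describes can be sketched in a few lines. This is only an illustration of the data flow under simple assumptions: the `Anchor` type, the JSON encoding and the function names are all hypothetical, and neither ARKit's world map format nor UE4's replication system is used here.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class Anchor:
    """A placed object, positioned in the shared world space (metres)."""
    name: str
    position: List[float]


def encode_session(anchors: List[Anchor]) -> bytes:
    """Host side: serialise the scanned anchors for sending over the network."""
    return json.dumps([asdict(anchor) for anchor in anchors]).encode("utf-8")


def decode_session(payload: bytes) -> List[Anchor]:
    """Guest side: rebuild the same anchors from the received bytes."""
    return [Anchor(**item) for item in json.loads(payload.decode("utf-8"))]


host_anchors = [Anchor("table", [0.0, 0.7, -1.2]),
                Anchor("dragon", [0.3, 0.7, -1.0])]
payload = encode_session(host_anchors)    # sent by the host
guest_anchors = decode_session(payload)   # rebuilt on every guest device
print(guest_anchors == host_anchors)
```

In a real project the transport and replication would be handled by the engine's networking layer, as in any multiplayer game; the point is simply that every device ends up holding the same spatial data.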

Lighting estimation has also been improved in this version. The previous version did have some light estimation, but it was also affected by the auto white balance of the iPhone. ARKit 2.0 added a form of environment scanning. It is not LIDAR scanning, but it does improve light matching and “it is actually grabbing texture data from the room around you”, Ivey points out. “What is really cool about the new environment scanning is that it actually goes through and grabs actual texture information from the room around you and recreates, say, a cube map for a skylight.” This means items in the room will have better lighting, reflections and shadows. “So it gives it a bit more of a cohesive feel of the virtual space in relation to the real space”.

AR on the iMac Pro

At fxguide we have been developing educational AR tools in UE4 on the newest iMac, the killer iMac Pro. This machine is also being used at Epic Games to develop the Unreal Engine to work with Apple’s ARKit. The iMac Pro is the most powerful computer Apple has designed to date; fully loaded, it is a stunning machine with a 2.3 GHz 18-core Intel Xeon W processor (4.3 GHz Turbo Boost) plus 128 GB of RAM. And it is all behind a breathtaking Retina 5K display.

You don’t need this iMac Pro to develop games and apps – but it sure doesn’t hurt.

Chance Ivey, Partnership Manager at Epic Games, commented, “definitely we’ve seen the best results on the desktop iMacs for development just because of the processing power with the Unreal Engine, it’s just super stellar”. Ivey agrees that the absolute top of the line iMac Pro is not required for ARKit work: “I definitely think that this is probably overkill for many developers, but we’ve got a couple of iMac Pros that are just killer for the great iteration time, especially while we bang around on the engine itself to get the best results”.

“I have an iMac Pro... and the compile times are just remarkable, they are just so nice”

For most developers, the UE4 engine benefits from a good core processor. While ARKit work may seem GPU focused, during development, “for the most part, you want a good machine that’s got a good processor – that’s going to be the biggest thing”. Ivey himself, away from his desk, uses his MacBook Pro. “Especially, while I’m building content for applications as opposed to working specifically on the engine itself. If you’re gonna be doing some lower level engine stuff, the desktop iMac Pros have been stellar for our developers to get stuff up and running”, he explains.

“We just got another one here. I have one, QA has one... and the compile times are just remarkable, they are just so nice”, he adds.

UE4 runs extremely well on a range of Apple products, but newer devices such as the iPad Pro and the iPhone X are particularly impressive with UE4 and ARKit. “From my experience working on my iPad Pro, we’re able to pull a lot of horsepower out of them. Especially the iPhone X”, comments Ivey.

The iMac Pro is a serious development machine, and yet it is not the end of Apple’s renewed Pro approach. For those developers working on Apple MacBook Pros there is not only a new machine (70% faster & with twice the RAM) but also the Blackmagic eGPU we covered last week. It is also confirmed that there is a new Pro machine coming, beyond the iMac Pro, sometime in 2019 (based on comments made at WWDC). Apple has announced that it is working on a completely redesigned Mac Pro for its high end creative customers. Apple has created a Pro Workflow Team that is run by John Ternus and works closely with the engineering team. Ternus said: “We said in the meeting last year that the pro community isn’t one thing. It’s very diverse. There are many different types of pros and obviously they go really deep into the hardware and software and are pushing everything to its limit…we want to provide complete pro solutions not just deliver big hardware which we’re doing and we did it with iMac Pro, but look at everything holistically.” With AR featuring so heavily on Apple’s roadmap, it is hoped that the new machines will cater to AR and UE4 style heavy graphics work.

AR on the iPhone

There are two cameras on an iPhone and two very different AR experiences, especially if you are using the iPhone X. Significantly, until the iPhone X the back camera was always more powerful than the front-facing camera. With the advent of the front-facing TrueDepth camera, for the first time the ‘selfie’ camera is the more technologically advanced. “In addition to world tracking we also do face tracking, using the front camera, which lets you do a lot of cool AR stuff using this ‘selfie’ camera”, commented Apple’s Möller. “And just like with the world tracking, I want to point out you don’t have to specially prepare anything in any special way. No special markers or tracking beacons or lighthouses – you just use your iPhone and it all just works with the external data”, he adds.

In terms of UE4 on the iPhone, the base tracking that has been in place since 4.19 is being improved in 4.20. “You’ll be able to access all the nice bells and whistles that come with the iPhone X (in 4.20)”, Ivey later explained. But the new UE 4.20 goes further with the front-facing camera.

In terms of AR, the back camera is best for the most common AR solutions, which augment the user’s camera feed with other visual content, while the front-facing depth camera can be used to read and animate human faces.

Augmented Reality with the Front Camera on the iPhone X allows AR Face Tracking using real-time information about the pose and expression of the user’s face to then render and overlay virtual content. For example, you might show the user’s face in a camera view and provide realistic virtual masks. You can also omit the camera view and use ARKit facial expression data to animate virtual characters, as seen in the Animoji app for iMessage.

Möller points out that ARKit on the iPhone X not only provides face and expression tracking, it also hands over the color and depth imagery, “but you have to keep in mind that the color image is provided at 60fps while the depth image is updated at 15fps”. The face topology is also tracked at 60 fps and passed on as data. You can get the mesh of the user’s face as triangles, vertices and texture co-ordinates. You can also be provided with blend shapes.
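The mismatch between the 60 fps color stream and the 15 fps depth stream means each depth image has to serve several color frames. One common way to handle this, sketched below as an assumption rather than as ARKit's own behaviour, is to pair every color frame with the most recent depth frame. Timestamps are written as integer ticks of 1/60 s to keep the arithmetic exact; the function name is hypothetical.

```python
from bisect import bisect_right
from typing import List, Optional


def latest_depth_index(depth_ticks: List[int], color_tick: int) -> Optional[int]:
    """Index of the newest depth frame captured at or before color_tick."""
    index = bisect_right(depth_ticks, color_tick) - 1
    return index if index >= 0 else None


color_ticks = list(range(8))        # one color frame every tick (60 fps)
depth_ticks = list(range(0, 8, 4))  # one depth frame every 4 ticks (15 fps)

pairs = [latest_depth_index(depth_ticks, tick) for tick in color_ticks]
print(pairs)  # → [0, 0, 0, 0, 1, 1, 1, 1]
```

Each depth frame is reused for four consecutive color frames, so fast facial motion will be better resolved in the color image and tracked mesh than in the raw depth data.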

The blend shape coefficients are a higher level facial abstraction, common in nearly all face pipelines. They are all tracked independently and in real time. (More on this here soon with our SIGGRAPH preview)

The Apple Animoji characters work with traditional blend shapes. The developer is presented with a high-level facial expression system that recognizes 50+ specific features, with all movement expressed relative to the neutral pose.

BlendShapes

Below are the ARBlendShapeLocation coefficients:

BrowDownLeft, BrowDownRight, BrowInnerUp, BrowOuterUpLeft, BrowOuterUpRight

CheekPuff, CheekSquintLeft, CheekSquintRight

EyeBlinkLeft, EyeBlinkRight, EyeLookDownLeft, EyeLookDownRight, EyeLookInLeft, EyeLookInRight, EyeLookOutLeft, EyeLookOutRight, EyeLookUpLeft, EyeLookUpRight, EyeSquintLeft, EyeSquintRight, EyeWideLeft, EyeWideRight

JawForward, JawLeft, JawOpen, JawRight

MouthClose, MouthDimpleLeft, MouthDimpleRight, MouthFrownLeft, MouthFrownRight, MouthFunnel, MouthLeft, MouthLowerDownLeft, MouthLowerDownRight, MouthPressLeft, MouthPressRight, MouthPucker, MouthRight, MouthRollLower, MouthRollUpper, MouthShrugLower, MouthShrugUpper, MouthSmileLeft, MouthSmileRight, MouthStretchLeft, MouthStretchRight, MouthUpperUpLeft, MouthUpperUpRight

NoseSneerLeft, NoseSneerRight
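The coefficients listed above are typically used as weights in a traditional blend shape rig: each shape stores an offset from the neutral pose, and the posed face is the neutral mesh plus the weighted sum of those offsets. The sketch below shows that arithmetic on a single vertex. It is a generic illustration, not Apple's or Epic's implementation, and the offset values are invented.

```python
from typing import Dict, List


def apply_blend_shapes(neutral: List[float],
                       deltas: Dict[str, List[float]],
                       weights: Dict[str, float]) -> List[float]:
    """Return neutral + sum(weight * delta) for every shape the rig knows."""
    posed = list(neutral)
    for name, weight in weights.items():
        delta = deltas.get(name)
        if delta is None:
            continue  # the character has no shape for this coefficient
        clamped = min(max(weight, 0.0), 1.0)  # 0.0 is neutral, 1.0 is full
        for i, offset in enumerate(delta):
            posed[i] += clamped * offset
    return posed


# One vertex (x, y, z) with two invented shape offsets.
neutral = [0.0, 0.0, 0.0]
deltas = {"JawOpen": [0.0, -2.0, 0.0], "MouthSmileLeft": [1.0, 0.5, 0.0]}
posed = apply_blend_shapes(neutral, deltas,
                           {"JawOpen": 0.5, "MouthSmileLeft": 1.0})
print(posed)  # → [1.0, -0.5, 0.0]
```

Because each coefficient is tracked independently, the same weights can drive any character that exposes matching shapes, whether or not its face resembles the performer's.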

Light on their face

Lighting estimation of the face is an interesting area to consider. Given that the face is not unlike a sphere, and that ARKit passes the topology of the face along with the color, the face can be treated much like a grey ball for lighting estimation. The result is accessed as spherical harmonics coefficients from ARKit.
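Spherical harmonics describe how much light arrives from each direction using a handful of numbers. As a rough sketch, the function below evaluates the standard second-order real spherical harmonics basis (nine terms) for a surface normal and combines it with nine coefficients for one color channel. The coefficient ordering and scaling used here are assumptions for illustration and may not match the data ARKit delivers; the example values are invented.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def sh_basis(normal: Vec3) -> Tuple[float, ...]:
    """Nine second-order real spherical harmonics terms for a unit normal."""
    x, y, z = normal
    return (
        0.282095,
        0.488603 * y,
        0.488603 * z,
        0.488603 * x,
        1.092548 * x * y,
        1.092548 * y * z,
        0.315392 * (3.0 * z * z - 1.0),
        1.092548 * x * z,
        0.546274 * (x * x - y * y),
    )


def shade(coefficients: Sequence[float], normal: Vec3) -> float:
    """Light intensity for one color channel at the given surface normal."""
    total = sum(c * b for c, b in zip(coefficients, sh_basis(normal)))
    return max(0.0, total)  # clamp so a surface never receives negative light


# Invented lighting: ambient light plus extra light arriving along +z.
lighting = [1.0, 0.0, 0.4, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
toward_light = shade(lighting, (0.0, 0.0, 1.0))
away_from_light = shade(lighting, (0.0, 0.0, -1.0))
print(toward_light > away_from_light)
```

Running the same evaluation for the red, green and blue coefficient sets gives a colored light value per normal, which is how a virtual mask or character can pick up the tint of the real room.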

Boy Demo

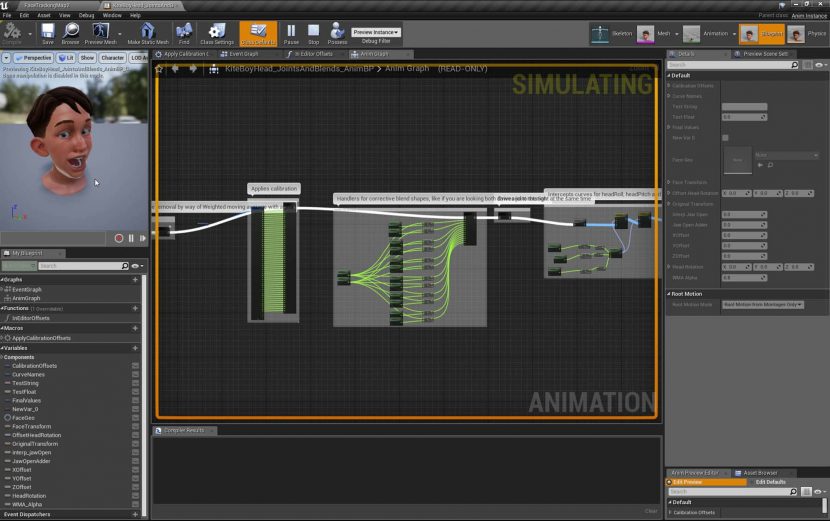

As part of 4.20’s release there is a version of the Boy from Kite being rigged up so that you can drive his face from UE4. “We are working closely with our technical animators on getting everything we can out of the iPhone to feed a rigged up version of the boy from Kite that we are releasing with 4.20” points out Ivey.

The rigged head is available now as a download demo. This is significant, as it will work as a template for other developers. (See our original story on Kite here).

At fxguide we have the rigged MIKE face running in real time in UE4, but the free MIKE that is shipping with UE 4.20 is not a rigged face. This is due to the advanced private IP used for the MIKE SIGGRAPH demo. By contrast, the Boy from Kite is rigged and allows you not only to work with the character but also to apply similar rigging to other characters.

More VR in UE4 as well.

Mike Beach commented on the other VR improvements in UE 4.20, away from ARKit, which include a simple green screen tool to allow people to be in VR. “In this release we have early access to our mixed reality capture framework. The idea is that people can easily hook into their games, allow users in virtual reality to compose a video feed into the VR scene and also capture that on an output display window of what is being played. It is a plugin for people who are creating VR games or VR products”, he explains. This is intended to be a smaller version of the Composure plugin, which was released with 4.17.