The 2012 Scientific and Technical Awards will be presented at the Beverly Wilshire Hotel in Beverly Hills on February 9, 2013. fxguide has already published several pieces on award recipients this year – check out our video interviews about Weta Digital’s Tissue system, our fxpodcasts on tracking software Mocha and lighting software Katana, and our interview about Cooke lenses. In this article we feature three more of the SciTech winners – research into wavelet turbulence, pose space deformation and PDI/DreamWorks’ Light tool.

Wavelet turbulence

Theodore Kim, Nils Thuerey, Markus Gross and Doug James are to be awarded a Technical Achievement Award (Academy Certificate) for the invention, publication and dissemination of Wavelet Turbulence software. This technique allowed for fast, art-directable creation of highly detailed gas simulation, making it easier for the artist to control the appearance of these effects in the final image. We spoke to Dr Ted Kim about the landmark 2008 SIGGRAPH paper that led to this Sci-Tech honor.

The problem the team set out to address was producing fine, high-frequency turbulent detail. When they published the paper, a brute force approach to large scale simulation took “lots of time and lots of memory.” The volume was usually divided into a grid of cubic cells, and if that did not give the detail required, you had to subdivide and try again, repeating until the detail was sufficient, at the risk of running out of both memory and time before getting great results.

The team’s solution was a two-stage process: run a correct simulation for the broad volumetrics, then run a post process (using wavelets) to add detail. This two-stage approach addressed both the memory and computation time issues, and produced very believable results, far more believable than one might expect.

One of the nice features is that the overall motion of the low resolution version is maintained during the second pass. The system up-reses, say, smoke and adds very believable fine smoke detail.

There had been other attempts to solve this problem, such as adaptive refinement, which worked fairly well on fluids but not as well with smoke or fumes. Adaptive refinement tended to fill the whole space with smoke rather than giving the smoke more detail while keeping it moving like the original. Another approach was turbulence modeling using FFT. Work on velocity field turbulence was the jumping-off point for this research.

The final solution worked out where the lower res animation was ‘losing’ its fine scale detail (using a wavelet decomposition), and then, using an advection texture on the low res grid, added that fine detail back in. The algorithm enables large and small scale detail to be edited separately, allowing high-resolution detail to be added as a post-processing step. Instead of solving the Navier-Stokes equations over a highly refined mesh and hitting the memory limits mentioned above, the team used the wavelet decomposition of a low-resolution simulation to determine the location and energy characteristics of missing high-frequency components. They then recreated many of these missing details using a turbulence function, without breaking the movement and art direction of the original lower res structures or smoke. The method guaranteed that the new higher frequencies did not interfere with the existing ‘look’ of the smoke, allowing animators to set up a low resolution simulation quickly and later add details without changing the overall fluid motion.
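As a rough illustration of the idea (not the published algorithm, which works on 3D velocity fields and uses a more careful turbulence model), the Python sketch below operates on a 1D ‘velocity’ array. The function names, the single-level Haar detail coefficient used as an energy estimate, and the sine ‘wiggle’ standing in for a turbulence function are all assumptions made for this example.

```python
import math

def haar_energy(coarse):
    """Squared Haar detail coefficient for each adjacent pair of coarse cells.
    Assumes len(coarse) is even."""
    return [((coarse[i] - coarse[i + 1]) / 2.0) ** 2 for i in range(0, len(coarse), 2)]

def upsample(coarse, factor):
    """Nearest-neighbour upsampling; the large-scale motion is left unchanged."""
    return [v for v in coarse for _ in range(factor)]

def add_detail(coarse, factor, strength=1.0):
    """Upsample a coarse field and add zero-mean detail only where the coarse
    field shows fine-scale energy. A toy stand-in, not the paper's method."""
    fine = upsample(coarse, factor)
    energy = haar_energy(coarse)
    out = []
    for j, v in enumerate(fine):
        pair = (j // factor) // 2              # coarse pair this fine cell falls in
        phase = (j % factor + 0.5) / factor    # position inside the coarse cell
        # One full sine period per coarse cell, so the added detail averages
        # to zero there and the coarse-scale value is preserved.
        wiggle = math.sin(2.0 * math.pi * phase)
        out.append(v + strength * math.sqrt(energy[pair]) * wiggle)
    return out

coarse = [0.0, 0.0, 0.0, 0.0, 1.0, 3.0, 3.0, 3.0]
fine = add_detail(coarse, factor=4)
```

Cells whose coarse neighbourhood is smooth are left untouched, and the added detail averages to zero over each coarse cell, which is the sense in which the low resolution motion is kept in this sketch.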

The solution was picked up by several key software companies including Side Effects Software for Houdini where it appeared under the name “Fluid Upresing”. Jeff Lait, a Senior Mathematician at Side Effects, was heavily involved in the development of the fluid-based tools at Side Effects for Houdini:

“When we first read the Wavelet Turbulence paper, we knew we had to try to implement it in Houdini. Not only is it a clever way to bridge the procedural and simulation worlds, but it also is elegant enough where we could add it to our fluid solver without having to generate any new code.

We soon fell in love with the artistic control it afforded. Because it layers on top of a base simulation, artists can iterate over the high frequency detail of their simulation after locking down the bulk motion.

With increasing RAM, CPU speeds and solver speeds, it is now less necessary to do up-ressing to add detail. The idea of having a second pass to a fluid simulation remains as a useful tool for fluid workflow. What began as a tool for adding detail to a sim is now used as a tool for retiming and re-sourcing simulations as well.

We are quite indebted to the team for developing and publishing this effective approach to fluid simulation.”

As with all Sci-Tech awards, the contribution is not about what happens this year, in say some new 2012/2013 feature film. The awards honor contributions to the science of film making, but often this can span years and when an award is received it is not when the work is perhaps its most impactful. Nick van Zutphen (Nick Nimble), a freelance Houdini effects artist specializing in the simulation and rendering of natural phenomena such as fire, water and viscous liquids and who teaches at fxphd.com, explains, “I think it’s quite a paradox that this technology is now honored with a SciTech award. Volume fluid simulations in Houdini have advanced so much, due to faster solvers on CPU and GPU, that the upress technique that used to be ground breaking isn’t used that much any more.”

Such is the pace of technological advancement in films, but still, many feature films have very successfully used the approach since it was invented. Even before it was presented at SIGGRAPH in 2008, DreamWorks wanted to implement it in its pipeline, and it was used in films such as Monsters vs Aliens (2009, the end sequence mothership explosion). FumeFX also implemented a major version of the approach into its popular plugin.

The train crash sequence in Super 8 is another example – “there are lots of extremely large scale looking pyro effects, those were made to look as large as they appear using the wavelet turbulence software,” explains Kim. (This sequence from Super 8 (Dir. JJ Abrams, 2011) was produced by ILM/Scanline). Ultimately, the Wavelet Turbulence team gave away their source code as open source, so an exact map of its use is hard to obtain.

A large smoke plume synthesized from a simulation that was 7 times smaller.

Interestingly, the original research was started as the team could not get a good stable fluid sim out of the Cell processor in the Sony PlayStation 3, as the target was always to produce high end effects. Wavelets are used in many ways such as compression. In this case, wavelets are not used for the time based property, but for spatial localization. Says Kim: “With, say, Fourier transforms it will tell you if somewhere in your picture ‘is there high frequency detail at all?’ – with wavelets you actually get more than that. You get where in the picture the high frequency detail lies. But there is a little bit of a trade off to get that locally based information. We used that spatial locality to detect where there should be high resolution detail and we only inject the high res detail where we thought it should be in the original fluid simulation.”
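Kim’s contrast between Fourier transforms and wavelets can be seen on a tiny 1D signal. The sketch below is only an illustration: it uses a naive discrete Fourier transform and a single level of Haar detail coefficients, both chosen here for brevity rather than taken from the paper.

```python
import cmath

def dft_magnitudes(signal):
    """Magnitude of each discrete Fourier coefficient (naive O(n^2) DFT)."""
    n = len(signal)
    return [
        abs(sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n)))
        for k in range(n)
    ]

def haar_details(signal):
    """One level of Haar detail coefficients: half-differences of adjacent pairs."""
    return [(signal[i] - signal[i + 1]) / 2.0 for i in range(0, len(signal), 2)]

# A smooth (constant) signal with a short burst of fine detail near the end.
signal = [1.0] * 16
signal[12], signal[13] = 2.0, 0.0

spectrum = dft_magnitudes(signal)
details = haar_details(signal)

# Fourier: high-frequency energy is present, but every coefficient is a sum
# over the whole signal, so it does not say where the burst is.
has_high_freq = any(m > 1e-9 for m in spectrum[4:9])
# Haar: the only nonzero detail coefficient belongs to the pair holding the burst.
burst_pairs = [i for i, d in enumerate(details) if abs(d) > 1e-9]
print(has_high_freq, burst_pairs)  # prints: True [6]
```

Pair 6 covers samples 12 and 13, which is exactly where the burst was placed.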

Fluids and smoke, when modeled in computer graphics with the Navier-Stokes equations, have some key assumptions/properties. The first is that gases and fluids are assumed to be incompressible (you can ignore air compression to model smoke; there is a tiny amount of compression, hence sound, but it can be mathematically ignored). The second key assumption is advection. This is used in the second half of the solution, to add back in detail.

In physics or sims work, advection is a transport mechanism of a substance or a property of it that is conserved in a fluid during the fluid’s bulk or primary motion. The fluid’s motion is described mathematically as a vector field. Advection requires ‘currents’ in the fluid or gas. And it is this concept that is key to the added new high frequency detail looking correct and not looking out of place or random.
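To make the idea of advection concrete, here is a minimal sketch of one common way to implement it on a grid, semi-Lagrangian backtracing, on a periodic 1D domain. The choice of scheme, the unit cell spacing and the function name are assumptions for this example; the article does not say which scheme any particular solver uses.

```python
def advect(field, velocity, dt):
    """Semi-Lagrangian advection on a periodic 1D grid with unit cell spacing."""
    n = len(field)
    result = []
    for i in range(n):
        # Trace backwards along the flow to find where this cell's value came from.
        source = (i - velocity[i] * dt) % n
        left = int(source) % n
        right = (left + 1) % n
        frac = source - int(source)
        # Linear interpolation between the two cells around the source point.
        result.append((1.0 - frac) * field[left] + frac * field[right])
    return result

density = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
velocity = [1.0] * 8                       # uniform current to the right
moved = advect(density, velocity, dt=2.0)  # the puff ends up two cells along
half = advect(density, velocity, dt=0.5)   # a half-cell move is spread over two cells
```

The puff of density is carried along by the velocity field rather than appearing at random, which is the property the added detail relies on.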

Central to this work is the way it evaluates turbulence and how well this translates to the features that are added. One great example of correct looking added detail, and something this approach does well, is ‘forward scattering’. This is when large eddies break up into smaller eddies. In short, there is a very strong mathematical formula for when and how a piece of smoke might split, and how it moves once it does. Thus when the wavelet approach adds tiny eddies, they are not just added randomly: they are predicted and added based on what the program learns from the low res simulation, from its vector field. The fine detail is in effect very accurately guessed and added in a really believable way. It is not perfect but it looks extremely real, and yet is extremely fast to compute.

This is not doing a low res pass followed by a progressively higher res pass, as in rendering. There is only the low res pass. “We detect where this forward scattering would occur,” says Kim, “we analyze this low resolution fluid (velocity field) and say we think high resolution motion is about to appear to emerge in these locations and take that information and then effectively add a special noise texture to those regions. So it does create some high frequency motion, (so it simulates a high resolution velocity field) – we then in effect, add smoke particulate into that velocity field and as it now does have high frequency in the velocity field file – high frequency smoke appears. The whole point of our model was we wanted an artist to sketch something out and then just add some high frequency detail.”

Comparison of a 12800 × 25600 × 12800 simulation to a 50 × 100 × 50 simulation using particle-based densities with an obstacle added.

Fluid sims can be hard to parallelize on GPUs. But this wavelet algorithm works well in parallel, so the approach is very well suited to GPU implementations. It is interesting to think that perhaps this approach may be used in future GPU driven games, bookending nicely with the Cell PlayStation problem that spawned it.

Today, Ted Kim is working on model/character skinning, but ironically the two R&D concepts are starting to mix, as his team “try to do real virtual humans. We are trying to simulate out how human skin and muscle deform as say when you make a fist or flex your arm.” This work again involves Doug James who co-published the original Wavelet research paper.

For more information about the Wavelet Turbulence research, including the original paper, videos and source code, see here.

Pose Space Deformation

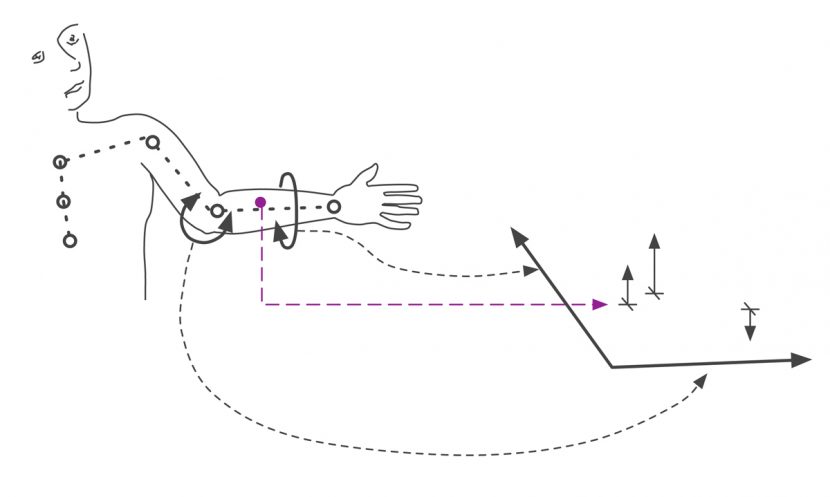

For their foundational research in the creation of computer-generated characters, J.P. Lewis, Matt Cordner and Nickson Fong will receive a Technical Achievement Award (Academy Certificate) for the invention and publication of the Pose Space Deformation (PSD) technique. fxguide got some insights from each SciTech winner on how the idea of PSD was developed and ultimately published as a SIGGRAPH 2000 paper.

Just what is pose space deformation?

We posed that question to Lewis, Cordner and Fong, who each gave their own take on how they would describe PSD for a ‘non-visual effects person.’

Cordner: I told my brother, ‘When you animate a character, sometimes it doesn’t look that great in certain poses. So you just re-sculpt that pose to fix it, and then the animation looks right.’ He said, ‘That’s an invention? Why didn’t they always do it that way?’ I think I oversimplified…

Lewis: We usually think of animation as ‘things moving over time’. There are also surface interpolation techniques (splines, etc.), which are ‘things interpolated over space.’ PSD introduced the idea of thinking of animation instead as ‘things moving when the pose changes’.

Here pose can even mean a collection of abstract dimensions that you invent for the purpose, though that is not the first thing to say to a non-visual effects person. A more grandiose description might be to say that this is a rigging system that ‘learns from examples’ rather than requiring scripting. However, many or even most machine ‘learning’ techniques are closely related to interpolation, so I think calling it interpolation demystifies it.

It can still be confusing however, even for us, since the interpolation is happening in a high dimensional space (e.g. 5 or 11 or … dimensions, depending on the skeleton), and this dimension can be changing over the surface, but the result at each 11 dimensional ‘point’ there is a normal 3-dimensional point or offset. Switching between these ‘spaces’ does require a clear head.

In PSD the creature shape is a function of some ‘pose’, so whenever the creature is in a particular pose, it has the same shape. That assumption is practical and can save labor, but it is also clearly a simplification. To a limited extent, this can be addressed by adding ‘history’ or other abstract dimensions to the pose space. For example, you can add a ‘how much are you carrying’ dimension, so muscle bulges can be dialed in even when the pose is the same. Once you realize that the domain of the interpolation can usefully be things other than time or space, however, the possibilities really open up. James Jacobs at Weta has had some creative experiments in this area regarding learning cloth simulation with PSD.
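The notion of shape as an interpolated function of pose can be sketched in a few lines. The example below assumes a single pose dimension (an elbow angle), a single scalar corrective offset for one vertex, and a Gaussian radial basis function with a width parameter `sigma`; the poses, offsets and function names are hypothetical and this is not the authors’ plugin. A real setup would have a multi-dimensional pose and a 3D offset per vertex.

```python
import math

def gaussian(r, sigma):
    return math.exp(-(r * r) / (2.0 * sigma * sigma))

def solve(a, b):
    """Solve a small dense linear system by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_psd(poses, offsets, sigma):
    """Find RBF weights so the interpolant reproduces every sculpted offset."""
    phi = [[gaussian(p - q, sigma) for q in poses] for p in poses]
    return solve(phi, offsets)

def evaluate_psd(pose, poses, weights, sigma):
    """Corrective offset at an arbitrary pose: weighted sum of basis functions."""
    return sum(w * gaussian(pose - p, sigma) for w, p in zip(weights, poses))

# Hypothetical elbow: corrective offset for one vertex, sculpted at three angles.
poses = [0.0, 45.0, 90.0]       # degrees
offsets = [0.0, 0.4, 1.0]       # sculpted corrections
weights = fit_psd(poses, offsets, sigma=30.0)
at_sculpt = evaluate_psd(45.0, poses, weights, sigma=30.0)    # reproduces 0.4
in_between = evaluate_psd(60.0, poses, weights, sigma=30.0)   # interpolated value
```

Nothing in the evaluation refers to time: the correction depends only on the pose, so the same pose always gives the same shape.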

Fong: A lot of people ask me the same thing. Here, if I may contribute my simple version of PSD: it’s a process or technology to create natural looking muscle and skin in animation. Still I get people staring at me and ask me if I’m speaking English?

The origins of PSD

Lewis, Cordner and Fong each had visual effects production experience prior to their PSD research. Here’s how, in their words again, the technique came to be.

Lewis: The PSD idea resulted from experience (frustration, actually) with an earlier system that I had developed while at ILM. The goals were similar, but in the earlier system the interpolation was a hack. It required the artists to specify too much information, and the motion was sometimes lumpy and unpredictable. A couple artists tried it, but evidently they didn’t like it well enough to continue using it. It didn’t catch on. But I left ILM with a nagging feeling that there was some better way to do this.

At Centropolis (about 5 years later) we had finished Godzilla and were talking about how to do creature skin if we had time to do it over and do it right. In the interim I had also studied Bishop’s Neural Networks for Pattern Recognition book and had learned about Radial Basis Function (RBF) networks and other principled approaches to high-dimensional interpolation.

RBF seemed like both a much simpler and more powerful approach. But this was a risky project and we thought difficult to justify to the company, especially since there wasn’t another creature show at that time. We made the plan to just work on the project over the holiday break, and somehow we stuck to it. It turned out pretty well, a lot better than we would have guessed in fact.

Matt Cordner and Nickson Fong did the hard work – Matt wrote the original PSD plugin for Softimage, and Nickson contributed his modeling skills to create all the examples. We submitted the work to SIGGRAPH 1999 in January and it was…rejected! After some revision and some depression we submitted again the next year and it was accepted, and even became a minor hit in terms of citations.

I think there is a message there – in my opinion the academic reviewing process works correctly ‘on average’, but in any particular case it can be wrong. So if you have something you believe in, keep at it. As well, I have to acknowledge that even the final paper is not written very well, so probably the first iteration deserved to be rejected.

Cordner: The way I remember it, John told us about his idea for PSD, and he was sure that it would be SIGGRAPH-worthy. I have a lot of faith in John, plus I owed him one for screwing up my first FX shot, and as my supervisor, he got the flak for it. Even though I was new to CG and didn’t fully understand animation or why this was so revolutionary, I went ahead and wrote a plugin that implemented PSD in Softimage 3D. I don’t think I even told John I started working on it, I just showed him the prototype one day, like a Christmas present (I think it was around that time). From there Nickson and I worked to improve it and actually get it working on real-life test cases, while John wrote fancy words and equations for the paper.

Fong: As a production artist I have always embraced technology and over the years I strongly believe that it is important for an artist to understand the tools and always be ready to explore and learn new technology. When John showed me the concept of PSD I understood what he was trying to do but it was pretty abstract to me in terms of how technology works in a real production environment.

PSD also reminded me of the early R&D in 1996 back in Dreamworks when I was exploring rigging with hierarchical b-splines (nickname: H-splines) in skinning/ facial animation for the movie Shrek. The research was a bit ahead of the curve and implementation in production was difficult and time consuming! Knowing how difficult it was back then I was really excited and this would be a great opportunity and a second chance to do it right and without all the big companies policies and politics.

Why did we need PSD?

Before PSD became widely used, artists relied on a standard skinning approach (linear blend skinning). The limitations of this approach, according to Lewis, were manifold. “The artists have to specify the results by editing weights, which violates the HCI direct manipulation principle,” he says. “There are characteristic artefacts such as the collapsing elbow. Further, you can show that fixing the weights to look good at one pose causes it to look worse in other poses – there is no single best set of weights.”

“Editing the weights was also a tedious and unreliable process,” adds Lewis. “It also used to have a reputation for being RSI-inducing. Traditionally animators fixed these problems by introducing additional layers of skinning ‘influence objects’, etc. The process of rigging might be cynically described as a process of layering various hacks, each hiding an artefact in the previous layer. All in all, this was an area badly in need of improvements.”
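The collapsing elbow Lewis mentions can be reproduced with a very small 2D model of linear blend skinning. In this sketch, which is a simplification made up for illustration, both bones pivot about a joint at the origin and one skin vertex is weighted equally between them.

```python
import math

def rotate(point, degrees):
    """Rotate a 2D point about the origin (the joint centre)."""
    a = math.radians(degrees)
    x, y = point
    return (x * math.cos(a) - y * math.sin(a), x * math.sin(a) + y * math.cos(a))

def blend_skin(point, bone_angles, weights):
    """Linear blend skinning: weighted average of the point as moved by each bone."""
    moved = [rotate(point, angle) for angle in bone_angles]
    x = sum(w * p[0] for w, p in zip(weights, moved))
    y = sum(w * p[1] for w, p in zip(weights, moved))
    return (x, y)

def radius(point):
    """Distance of the skinned vertex from the joint centre."""
    return math.hypot(point[0], point[1])

vertex = (0.0, 1.0)   # skin vertex one unit away from the joint
radii = {}
for bend in (0, 90, 180):
    # Upper arm stays put (0 degrees); forearm rotates by `bend` degrees.
    skinned = blend_skin(vertex, [0.0, float(bend)], [0.5, 0.5])
    radii[bend] = round(radius(skinned), 3)
print(radii)  # prints: {0: 1.0, 90: 0.707, 180: 0.0}
```

As the bend increases, the averaged vertex is pulled in towards the joint and the volume is lost. Changing the weights only moves the problem to other poses, which is the gap that sculpted, pose-dependent corrections are meant to fill.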

Fong agrees with Lewis, and remembers some of the challenging early days of R&D at Dreamworks. “We were using an early version of Softimage (it was top-of-line at the time),” he says, “and the rigging and modeling tools were primitive for what we were trying to accomplish on Shrek. We wanted to achieve fluid and natural looking facial animation and modeling the facial expressions was a nightmare. Therefore, an early version of a digitizing device called Microscribe with a 3D arm was introduced and I remember we had to draw hundreds of lines on over 20-30 Shrek facial maquettes and digitize thousands of points a day and the process took months!”

“The worst nightmare was yet to come,” continues Fong. “When we had all the models in Softimage we had to figure out a way to skin it on the facial rig. On top of that the models were heavy and even with the fastest SGI workstations we had a hard time loading files and working in real-time was really out of the question. I remember we had to load the files like half an hour before showing it to Jeffrey Katzenberg – if not, the waiting would definitely agitate him or even anyone!”

“I have only mentioned the facial animation process so far and when it comes to Shrek’s body animation like the arm, neck and joints, the whole multiple weighting process with clusters in Softimage was just too much to handle. Furthermore, I remember we had to make the animation controls easy and intuitive for animators with little or no 3D application experience because 3D animators were scarce at the time and most of them would have come from traditional 2D animation productions.”

How was PSD tested and implemented?

As noted above, Cordner began by developing a PSD plugin in Softimage. “Not being a modeler,” he says, “my very first test case was a simple deforming grid and a cylinder. I think this annoyed Nickson enough to provide at least some of his motivation to power through all of the bugs in my code and create all of his great examples.”

“Matt was right,” says Fong. “I was not only annoyed but a little shocked when he showed me his cylinder test and Matt said something like – that could be an arm and the both of us had a big laugh! So, I jumped right into it and started creating the arm models and Matt worked on the plugin. The whole working experience was so cool like we knew just what to do and the three of us were in perfect sync.”

However, while fun, the initial few tests were somewhat of a ‘disaster’, according to Fong. “When I tried to save poses on the models it would ‘blow up’ like the models were hit by a grenade and shattered into hundreds of pieces – of course it would crash Softimage as well. Matt and I spent many sleepless nights running tests, debugging the rig setup, the plugin etc. The thing that puzzled me was when John introduced the ‘sigma’ value to control the interpolations we had more success but if we saved too many poses near a relative joint angle the model became unstable and sometimes ‘blew up’ still. But somehow we managed to create some real examples for our first year SIGGRAPH paper submission and got rejected!”

The process continued with facial animation. “With only linear interpolation in Softimage at the time our PSD facial example worked like a charm!” recalls Fong, who continues to work in production and animation in Singapore. “Though the complexity was in how we defined the dimensions of poses with multiple skeletons on a clock like setup rig. In actual production, the average animators would find it difficult to navigate with the clock setup and this is an interface issue we have yet to resolve to fully take advantage of PSD.”

Where has PSD been used?

Although novel in 2000, PSD has now been implemented in various ways at different studios and in different software. Lewis notes that he’s “heard rumors of a number of PSD implementations over the years, including one company that I think was said to have two different implementations. I’ve also heard of several attempts that failed – not surprising, since although it is fundamentally simple, the dimensions thing can be tricky to think about.”

“Gene Lee and his collaborators at Disney gave several presentations at SIGGRAPH Asia (2009,10) where they showed fundamental exploration and improvements to PSD,” adds Lewis. “They were perhaps the first to include a PSD component in a production facial system, and have extended it for animation retargeting, level of detail, and multi-layered animation.”

Weta Digital, where Lewis is now Research Lead, has implemented PSD for several purposes. “Notably,” he says, “it has been used to ‘learn’ the results of muscle simulation – the Weta Tissue system – in order to speed up computation. It also allows a form of shot-sculpting that starts from the physically correct simulation. I believe James Jacobs and Simon Clutterbuck pioneered much of this work. Also at Weta, I was able to collaborate with David Gould (author of the Complete Maya Programming books) and Jens Schwarz in writing a Weighted-PSD plugin for Maya. I was hoping this collaboration would make me more of a Maya API wizard, but sadly, the Maya API told me clearly that it was still the boss.”

Sony Pictures Imageworks (and Animation) also have a PSD implementation, says Cordner. “I’m told they use it on most of their character work now, including replacing cloth sims for certain cases.”

Interestingly, Cordner, who currently works at Blizzard, says he is often cornered about PSD when visiting other studios. “Usually there is some kind of internal battle over how exactly to implement it. What does that say about PSD? It might mean that it’s just an incomplete solution, or that it’s difficult to implement, or that it is a very versatile tool and has many uses. In any case, I try to sidestep it, giving the excuse, ‘I can’t remember exactly how I did it 14 years ago.’”

Further PSD research

Since 2000 there has continued to be academic research in the PSD space. Lewis cites Sloan, Rose, and Cohen’s Shape by Example paper in 2001 as a work that described an alternate and improved formulation for interpolation, and Kry’s EigenSkin paper in 2002, which showed (among other things) how to compress the training data.

“One of the most important developments for PSD,” says Lewis, “is the Weighted PSD idea published by Kurihara and Miyata in their SCA 2004 paper. Weighted PSD is clearly a better approach; it greatly reduces the amount of training data required. In addition to their paper, Dr. Kurihara’s PhD thesis is a great read. Taehyun Rhee showed in 2006 that W-PSD could be implemented on the GPU.”

“My own research since 2000 has been in other areas (see a list of Lewis’ projects at his website), though I have continued to learn about machine learning and relevant numerics. I could write a better PSD paper today – the original paper should have discussed regularization, and at the time the RBF kernel seemed magic, whereas now I understand where it comes from and how to derive it (at least in simple cases). Anjyo, Pighin, and I had a SIGGRAPH Asia 2011 course where we presented some of this. I have also recently been exploring a way to extend RBF-like computation to much larger problems, using randomized linear algebra ideas.”
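Lewis’s remark about regularization can be illustrated with the smallest possible case: two example poses and a Gaussian kernel. When the two poses are almost identical but carry different sculpts, the interpolation weights become very large, and adding a small ‘ridge’ term to the diagonal tames them. This may be related to the instability Fong describes when many poses were saved near the same joint angle, but the source does not confirm the cause; the numbers and names below are hypothetical.

```python
import math

def gaussian(r, sigma):
    return math.exp(-(r * r) / (2.0 * sigma * sigma))

def weights_two_poses(p0, p1, f0, f1, sigma, ridge=0.0):
    """Solve [[1 + ridge, g], [g, 1 + ridge]] w = [f0, f1] in closed form,
    where g is the kernel value between the two example poses."""
    g = gaussian(p1 - p0, sigma)
    d = 1.0 + ridge
    det = d * d - g * g          # approaches zero as the poses coincide
    return ((d * f0 - g * f1) / det, (d * f1 - g * f0) / det)

# Well separated poses: modest weights.
far = weights_two_poses(0.0, 90.0, 0.0, 1.0, sigma=30.0)
# Poses one degree apart with different sculpts: huge opposing weights.
near = weights_two_poses(44.0, 45.0, 0.0, 1.0, sigma=30.0)
# Same data with a small ridge term (regularization): far smaller weights.
near_ridge = weights_two_poses(44.0, 45.0, 0.0, 1.0, sigma=30.0, ridge=0.01)

largest = [max(abs(w) for w in ws) for ws in (far, near, near_ridge)]
```

With regularization the interpolant no longer passes exactly through both sculpts, which is the usual trade made for stability.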

Light at PDI/DreamWorks

PDI/DreamWorks Animation’s Light system was developed in 1996, before the studio had begun delving into its all-CG feature film Antz. Since then, the tool has remained largely unchanged and has been used on numerous films and projects to provide interactive lighting and final-quality interactive render previews, along with a powerful user-configurable spreadsheet interface. Lawrence Kesteloot, Drew Olbrich and Daniel Wexler will receive a Technical Achievement Award (Academy Certificate) for the development of Light at the SciTech Awards. fxguide spoke to Wexler about the honor.

Spreadsheets

Behind Light are two key components – a deep rendering system (discussed below), and a spreadsheet used to manipulate complex setups with thousands of objects. “We knew as is constantly the case in CG film production, scenes were getting exponentially more complicated every year – the number of lights, the number of characters and props was growing at an astounding rate,” says Wexler.

Wexler suggests that the common method of CG lighting deals with objects one at a time and can be an impractical approach. “The director might say, ‘Hey can you make all the lights in the scene 20% darker?’ which is a very common request. In other tools, you have to go through all the lights in the scene and adjust their intensity, whereas with the spreadsheet you can select just the light sources – each light source is a row in the spreadsheet. Their column is the intensity. You can select them all at one time and say go darker 20% with a single entry. That’s much easier for an artist to understand because everyone’s familiar with a spreadsheet, to a certain extent.”

The spreadsheet let artists select many cells and make bulk changes (for example by typing *2 to double values or by using an interactive slider). Another example, says Wexler, might be “a lighting rig that has lighting sources placed above a set, and to be able to have a single command to point all of those light sources at a single point on the set. It will go through all the light sources and figure out how to aim it at that single place.”
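The two bulk operations described here, scaling a column for every selected row and aiming every light at one point, might look something like the following. This is a sketch of the concept only: the `Light` class, the `bulk_edit` and `aim_at` functions and the `=` expression are invented for the example and are not Light’s actual interface.

```python
import math
from dataclasses import dataclass

@dataclass
class Light:
    """One spreadsheet row; each attribute is a column."""
    name: str
    position: tuple
    intensity: float
    direction: tuple = (0.0, -1.0, 0.0)

def bulk_edit(rows, column, expression):
    """Apply one typed entry, e.g. '*0.8' or '=2.5', to a column of every selected row."""
    op, amount = expression[0], float(expression[1:])
    if op not in "*=":
        raise ValueError("unsupported expression: " + expression)
    for row in rows:
        old = getattr(row, column)
        setattr(row, column, old * amount if op == "*" else amount)

def aim_at(rows, target):
    """Point every selected light at a single location on the set."""
    for row in rows:
        delta = [t - p for t, p in zip(target, row.position)]
        length = math.sqrt(sum(d * d for d in delta))
        if length > 0.0:
            row.direction = tuple(d / length for d in delta)

lights = [
    Light("key", (3.0, 4.0, 0.0), 10.0),
    Light("fill", (0.0, 10.0, 0.0), 5.0),
]
bulk_edit(lights, "intensity", "*0.8")   # "make all the lights 20% darker"
aim_at(lights, (0.0, 0.0, 0.0))          # one command re-aims the whole rig
```

The artist expresses the change once, and the tool repeats it across however many rows are selected.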

Deep rendering

At the time Light was developed, there was no way that film quality imagery could be rendered in the time needed to have an ‘interactive’ lighting system. So the team looked to a deferred rendering system (ironically something Wexler suggests is now being used in real time games with GPUs). The effect was, like the intention of the spreadsheets, to save the lighter’s time.

“We do a pass where we compute the visible objects for a single frame,” says Wexler, “without determining their lighting characteristics, figure out which objects are visible, store that information in a deep frame buffer and then in a second pass we shade only the visible objects in the scene. This deferred rendering tech has the advantage that it’s roughly proportional to the number of pixels that are in the image, in terms of its performance. As opposed to a system that goes through every object in the scene, lights it and renders it – which is more proportional to the number of objects in the scene.”

The deep rendering system is ultimately used for both the fast interactive lighting and the high-quality final rendering. In fact, the final batch render is done with the cached intermediate rasterization data to produce a deep file since it results in each pixel having more data than just color. “For each pixel it stores only the visible geometry in the scene,” explains Wexler. “You end up with an image, more sophisticated than a JPEG file, instead of having a single color for each pixel, it actually has a tree for the visible geo for that pixel. So for each pixel we have a tree-like structure which stores the visible geometry that you can see for that pixel.”
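The two-pass structure Wexler describes can be sketched as follows. This is a heavily simplified model made up for illustration: each pixel keeps only its single nearest fragment (the real deep files store a tree of visible geometry per pixel), and ‘shading’ is just a surface albedo multiplied by the summed light intensities.

```python
def visibility_pass(fragments):
    """Pass 1: keep the nearest fragment per pixel. No lighting is computed."""
    deep = {}
    for frag in fragments:
        pixel = frag["pixel"]
        if pixel not in deep or frag["depth"] < deep[pixel]["depth"]:
            deep[pixel] = frag
    return deep

def shading_pass(deep, light_intensities):
    """Pass 2: shade each stored fragment once.
    Returns the image and the number of shading evaluations performed."""
    total_light = sum(light_intensities)
    image = {pixel: frag["albedo"] * total_light for pixel, frag in deep.items()}
    return image, len(deep)

fragments = [
    {"pixel": (0, 0), "depth": 5.0, "albedo": 0.2, "object": "wall"},
    {"pixel": (0, 0), "depth": 2.0, "albedo": 0.5, "object": "shrub"},
    {"pixel": (0, 0), "depth": 9.0, "albedo": 0.9, "object": "sky"},
    {"pixel": (1, 0), "depth": 5.0, "albedo": 0.2, "object": "wall"},
    {"pixel": (1, 0), "depth": 9.0, "albedo": 0.9, "object": "sky"},
]

deep = visibility_pass(fragments)               # done once for the frame
image, shaded = shading_pass(deep, [1.0, 1.0])  # 2 evaluations for 5 fragments
relit, _ = shading_pass(deep, [0.8, 0.8])       # lighting tweak reuses the deep buffer
```

Because a lighting change only reruns the second pass, the cost of each tweak follows the number of covered pixels rather than the number of objects in the scene.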

Various rasterizers generate these deep files from the scene database in the lighting system. There’s a Z-buffer for fast rasterization, an A-buffer for high quality, and a ray tracer for reflections and refractions. These deep files are then either loaded into the interactive lighting tool or passed to a command-line shading system.

“In a typical renderer,” says Wexler, “you have light shaders and material shaders, plus some less important ones. Typically when someone wants to do texture mapping in something like RenderMan, they would alter the material shader and perform some sort of functional look-up – read from an image or generate it procedurally. The input for those functions is typically a position on a surface and it produces an output of a single color at that point on the surface – you can do that by looking up a two-dimensional parametric value in your image and finding the color in that image at that point or you can write a mathematical function to do that.”

“We essentially broke that chunk out into a separate class of shader that we call ‘texture map shaders’,” he continues. “They take an input which defines a coordinate space and then they produce as a result a color or a non-color value. It’s a mathematical function – it takes a set of floating point arguments and produces a vector of floating point values as a result. Then you can wire those together into complicated trees – for instance you could make a texture map shader that simply multiplies two values together to produce a result. It allows a slightly different modular breakdown which allows us to have fewer shaders overall than you have in a typical renderer. If you only have material shaders like most other systems, you have to make a different material for each combination of different parameters, whereas what we can do is make functional blocks and then re-attach them together in different ways.”
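The idea of small functional blocks wired into trees can be sketched with plain functions. Every name below is hypothetical; the only details taken from Wexler’s description are that a block maps floating point inputs to a vector of floats and that one such block can simply multiply two others.

```python
def constant(*values):
    """A block that ignores its coordinates and returns a fixed vector."""
    return lambda u, v: tuple(values)

def checker(scale):
    """A block that returns a one-component mask: 1.0 on 'on' squares, else 0.0."""
    def shade(u, v):
        on = (int(u * scale) + int(v * scale)) % 2 == 0
        return (1.0,) if on else (0.0,)
    return shade

def multiply(a, b):
    """A block that multiplies the outputs of two other blocks componentwise.
    A one-component result is repeated to match the other input's length."""
    def shade(u, v):
        x, y = a(u, v), b(u, v)
        if len(x) == 1:
            x = x * len(y)
        if len(y) == 1:
            y = y * len(x)
        return tuple(p * q for p, q in zip(x, y))
    return shade

# Wire blocks into a small tree: red tinted by a checker mask.
red = constant(0.8, 0.1, 0.1)
tiles = multiply(red, checker(4))

on_square = tiles(0.1, 0.1)    # inside an 'on' square
off_square = tiles(0.3, 0.1)   # inside an 'off' square
```

A different look reuses the same blocks with different wiring, for example `multiply(constant(0.1, 0.1, 0.8), checker(8))`, rather than requiring a new material for each combination.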

Continued improvements

Over time, Light has been adapted to allow for other systems to interact with it, such as a sub-surface scattering system and global illumination add-ons developed during Shrek, as well as for interactive shading to occur remotely in parallel on a mini-farm. The core underlying Linux based system of files, pipes and programs developed in the early days of PDI also remained constant.

“It’s surprising,” notes Wexler, reflecting on the development of Light now some 16 odd years ago, “we’ve been out of DreamWorks a significant amount of time but we went back and saw our lighting tool in operation in its current form and we were all struck by how similar it seemed from when we designed it. A number of sophisticated technologies were added, but the tool itself was very recognizable.”
