Recent years have seen the arrival of Machine Learning (ML) research in the area of visual effects. From noise reduction to facial pipelines, Deep Learning has proven to be a rich tool for major effects projects. One of the hallmarks of Machine Learning and Deep Learning, as we discuss in the next section, has been the widescale publishing and sharing of code and libraries. Gone are the days of submitting a paper to SIGGRAPH, waiting to have it accepted and published, and then seeing it appear in a product a few years later. Today work is posted online before it is accepted to conferences, with even key advances sometimes being seen first on social media, years before they might appear in a journal or conference proceedings.

The Foundry has responded to this new innovative, dynamic and collaborative world by releasing the ML-Server. This is not a set of plug and play Gizmos for Nuke. The Foundry has instead released a Machine Learning environment that allows serious TDs and engineers to work with these new algorithms and thus get new ideas and tools into the hands of their artists inside the visual effects and animation studios. This new ML-Server allows companies to start experimenting with ML within their existing pipelines and build on the enormous flood of research that is being published.

The newly introduced open-source ML-Server client/server system enables rapid prototyping, experimentation and development of ML models in post-production, within the familiar, rock solid structure of Nuke. Furthermore, key data, code and examples from the Foundry system can now be found on their GitHub repository page: https://github.com/TheFoundryVisionmongers/nuke-ML-server

ML-Server is essentially a really thin client that connects to an IP address. The machine at that address runs the project’s deep learning inference in a Python instance. This means you can take your TensorFlow or Caffe ML libraries, along with whatever models or data you need, and set them up on the Server. Once set up, you are able to send an image to the Server, perform some inference on it and get a result sent back.
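To make the round trip concrete, here is a minimal sketch of a thin client sending an image to a server and getting a result back. It is not the ML-Server protocol: the length-prefixed message framing, the function names and the `fake_inference` placeholder (which simply inverts 8-bit pixel values) are all assumptions made for illustration, and both ends run on the local machine.

```python
import socket
import struct
import threading


def recv_exact(conn, n):
    """Read exactly n bytes from a socket, or raise if the peer closes early."""
    chunks = []
    while n > 0:
        chunk = conn.recv(n)
        if not chunk:
            raise ConnectionError("socket closed before message was complete")
        chunks.append(chunk)
        n -= len(chunk)
    return b"".join(chunks)


def send_msg(conn, payload):
    # Assumed framing: a 4-byte big-endian length, then the payload bytes.
    conn.sendall(struct.pack(">I", len(payload)) + payload)


def recv_msg(conn):
    (length,) = struct.unpack(">I", recv_exact(conn, 4))
    return recv_exact(conn, length)


def fake_inference(pixels):
    # Placeholder for a real model: invert 8-bit pixel values.
    return bytes(255 - p for p in pixels)


def serve_once(listener):
    # Server side: accept one client, run "inference", send the result back.
    conn, _ = listener.accept()
    with conn:
        send_msg(conn, fake_inference(recv_msg(conn)))


def run_round_trip(pixels):
    """Start a local server, send it an image as bytes, return its reply."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.settimeout(5.0)         # do not wait forever for a client
    listener.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    listener.listen(1)
    host, port = listener.getsockname()
    worker = threading.Thread(target=serve_once, args=(listener,))
    worker.start()
    try:
        # Client side: connect to the server's address and exchange messages.
        with socket.create_connection((host, port)) as client:
            send_msg(client, pixels)
            result = recv_msg(client)
    finally:
        worker.join()
        listener.close()
    return result


inverted = run_round_trip(bytes([0, 128, 255]))
```

In a studio setup the server would sit on a separate machine with the GPU and the ML framework installed, and the placeholder would be replaced by a call into the actual model.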

History: Deep Learning Primer – Why is it different?

To understand why the Foundry have taken this open approach one has to look at the recent history of Machine Learning and Deep Learning in particular.

Hugo Larochelle at Google Brain has one of the best accounts of why Deep Learning is different from other areas and therefore why the Foundry’s approach makes so much sense. Larochelle argues that Deep Learning is different from other software and research areas for three reasons, built around how the Deep Learning community has structured itself to facilitate rapid innovation:

- Quickly after the major algorithmic or coding innovations, the DL community adapted to exploit the computation resources of GPUs and graphics cards.

- The community produced a lot of tools for performing Deep Learning research with very high quality open source code libraries. The community published tools and libraries that make getting started feasible and (almost) easy.

- The community became really good at discussing and sharing information about how to do Deep Learning.

It was only about ten years ago that the modern version of a layered neural network took off. Prior to that, working on AI and Neural Networks was a career killer, academic suicide; no one wanted to know. Today AI experts in Deep Learning make rockstar ‘telephone number’ long salaries.

One key aspect to this explosion was that the new approaches were moving rapidly to running on GPUs in about 2010. This meant that researchers anywhere could now have amazing computational power and develop code in a way that previously only a few researchers could do. GPUs love parallel processing and the modern Deep Learning approaches are very well suited to modern graphics cards. This continues today with, for example, Nuke running on a high end graphics card inside a high end PC, which is a perfect vehicle for Machine Learning.

Secondly, the community decided to not develop silos of confidential code. The research companies and the big tech companies decided in around 2013 to post libraries that make getting applications running much easier. High quality, robust, easy to use, open and free code libraries for supporting Deep Learning research, such as Theano and Torch and a few others, appeared and this fed rapid research into deep learning. This means that on Nuke it is possible to take advantage of vast amounts of code and just focus on your industry specific application.

This exploded into commercial applications around 2016, fired by the third structural difference – people no longer waited to publish. Authors skipped journals and conferences with long lead times, and they started posting their work publicly on websites such as arxiv.org. 2016 was also when Google released AlphaGo and the world ‘discovered’ the amazing impact Deep Learning could have.

What is a Deep Neural Network?

Artificial neural networks, including Convolutional Neural Networks (CNNs), are computer programs that enable a machine to learn. They are inspired by our understanding of how human brains work. We will discuss Deep Learning more in a follow up article here at fxguide with Andrew Glassner, but one should not think that Deep Learning is actual intelligence. Glassner says that comparing CNNs and our brains is like comparing a toothpick to a giant deciduous forest. That being said, in Deep Learning, a convolutional neural network is a class of deep neural networks, most commonly applied to analyzing visual imagery.

At the core of an artificial neural network is the artificial neuron. Like real neurons, artificial neurons are connected to one another. CNNs are regularized versions of multilayer perceptrons. Multilayer perceptrons are usually fully connected networks, that is, every neuron in one layer is connected to all the neurons in the next layer. A network is called ‘Deep’ when it has many layers, unlike the original neural networks from decades past.
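As a rough illustration of what "fully connected" means, the sketch below pushes two input values through a tiny network in plain Python. The weights are hand-picked, hypothetical numbers (a real network learns them from data), and the sigmoid activation is just one common choice.

```python
import math


def sigmoid(x):
    # Squash any real number into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases):
    """One fully connected layer: every output neuron sees every input."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(total))
    return outputs


def forward(inputs, layers):
    # Feed the activations of each layer into the next one.
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical weights: 2 inputs -> 3 hidden neurons -> 1 output neuron.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[1.0, -1.0, 0.5]], [0.2]),
]
output = forward([1.0, 0.0], layers)
```

A deep network is the same idea with many more layers and neurons, which is why the work maps so well onto GPUs.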

What can Deep Learning do?

Deep Learning is data driven and so is a different way of solving a problem. It is less prescriptive and more of a learning approach based on data. Luckily, in the modern computer age there is a lot of data, and hence a lot of applications. ML also rarely produces a single correct answer. Instead it produces an inference: something that is plausible and looks correct, but may not match actual reality, which is exactly in line with visual effects. If the shot looks right, it is right, unlike, say, medical applications, which come with vast ethical issues.

There are countless examples but generally Deep Learning has excelled in object classification, object segmentation, identification, image synthesis, computer vision and many other features that are really helpful in visual effects. The single biggest impact that was directly felt in our industry was noise reduction. This is the noise reduction of ray tracing and not the older style of convolution filtering that is traditional image processing. Films such as Big Hero 6 were able to produce results far faster than anyone had thought possible. This led to NVIDIA introducing real time ray tracing with the RTX cards at SIGGRAPH last year.

Today there are great examples of relatively straightforward ML tools, such as “Blue Fairy”, a cloud based chroma-key service that uses AI with the aim of reducing or eliminating the need for artists to create their own alpha.

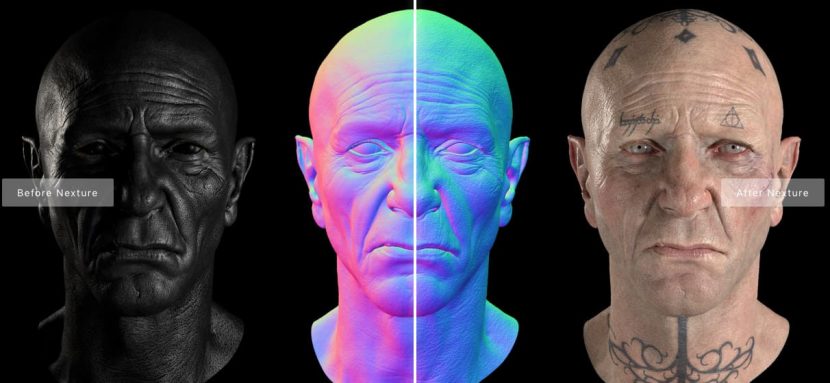

Or skin micro-detail & texture enhancer, “Nexture” by Cronobo in France.

Nexture combines a state-of-the-art artificial neural network with a custom image synthesis algorithm, to transfer details from a reference pattern bank. This dataset was captured on actual human skin by the team at Cronobo. To quickly get up to speed, Nexture features a human-skin texture bank, included with the software and specifically tailored for texture transfer. It contains a diversity of 100 patterns commonly found on human skin at different scales.

We have also covered Roto-Bot in the past.

The real question is, what can you now do with Machine Learning inside Nuke?

ML-Server in NUKE (*Not for everyone)

While the tools and approaches that can now be experimented with using ML-Server will no doubt one day be standard features, for now ML-Server is aimed at companies with dedicated IT or programming teams.

However, visual effects and animation software is traditionally not web or cloud based. Current software development approaches and release cycles compound the problem. They require vendors to be the gate-keepers of what technology makes it into the next version, with long delays between idea and release.

The ML-Server system follows a client/server model. The client plug-in inside NUKE communicates with a server that can run and return the results of an ML inference. This approach solves a number of practical considerations, most notably connecting the heterogeneous computing environments between host software and ML frameworks. For example, only the server is required to have the high-end hardware or specific OS required by the ML framework. And as it is a server, it can be a shared resource among artists or ML practitioners.
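One way to picture a server acting as a shared resource is a registry of models that any client can ask for by name. The sketch below is an assumption-laden illustration, not the ML-Server message format: the JSON fields, the `MODELS` registry and the two toy "models" are all hypothetical.

```python
import json

# Hypothetical registry: model name -> function taking and returning pixels.
MODELS = {
    "invert": lambda pixels: [1.0 - p for p in pixels],
    "identity": lambda pixels: list(pixels),
}


def build_request(model, width, height, pixels):
    """Client side: package an image and the name of the model to run."""
    return json.dumps(
        {"model": model, "width": width, "height": height, "pixels": pixels}
    )


def handle_request(raw):
    """Server side: look up the requested model and run it on the image."""
    request = json.loads(raw)
    model = MODELS.get(request["model"])
    if model is None:
        return json.dumps({"ok": False, "error": "unknown model"})
    if len(request["pixels"]) != request["width"] * request["height"]:
        return json.dumps({"ok": False, "error": "size mismatch"})
    return json.dumps({"ok": True, "pixels": model(request["pixels"])})


# A 2x1 greyscale image sent to the "invert" model.
reply = json.loads(handle_request(build_request("invert", 2, 1, [0.0, 1.0])))
```

The client never imports an ML framework here. It only needs to build a request and read the reply, which is what lets the heavy dependencies live on the server alone.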

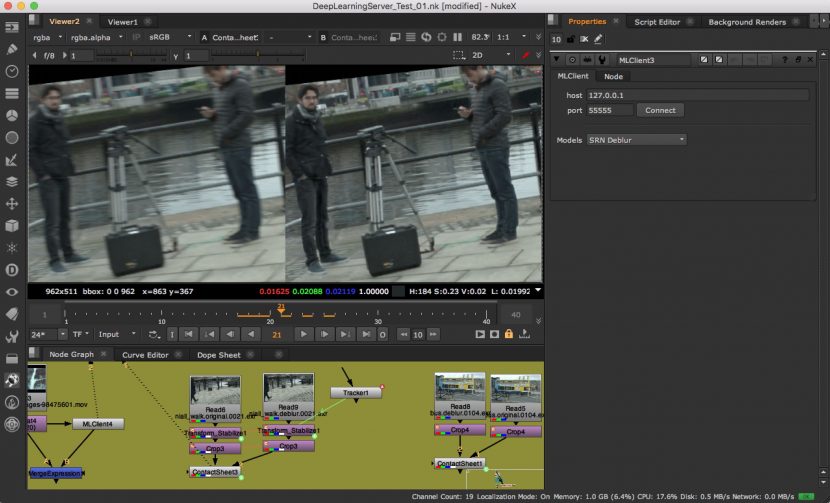

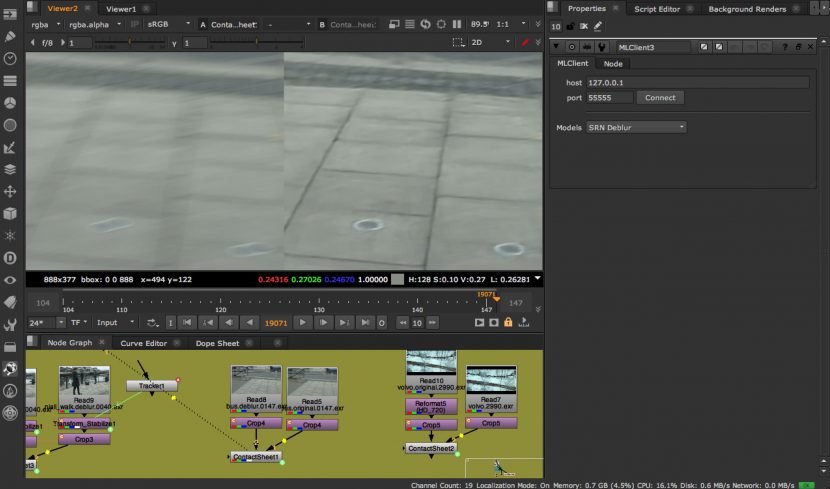

SRN DeBlur in Nuke

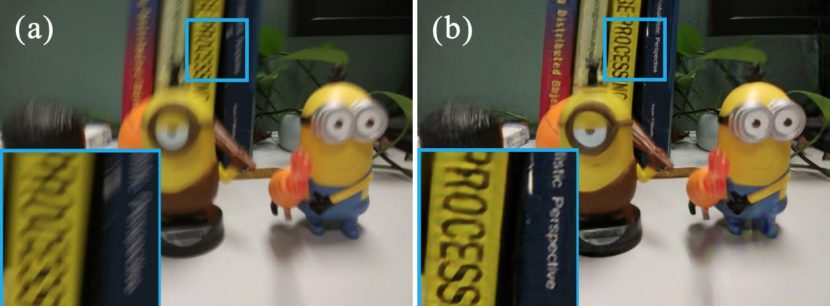

Some of the initial ML applications the Foundry have tested involve using these openly published ML libraries. For example, SRN-Deblur was published as an ML approach to removing motion blur. This was first published as Scale-recurrent Network for Deep Image Deblurring. As with so many ML solutions, it can be easily incorporated into ML-Server, since the work is open source, works with code libraries and is not shielded away behind patents or unworkable SDKs.

The Foundry has taken SRN-Deblur and incorporated it in ML-Server. While the images below are just stills, this tool is remarkable. Fxguide visited the Foundry in the UK and the actual results are incredible.

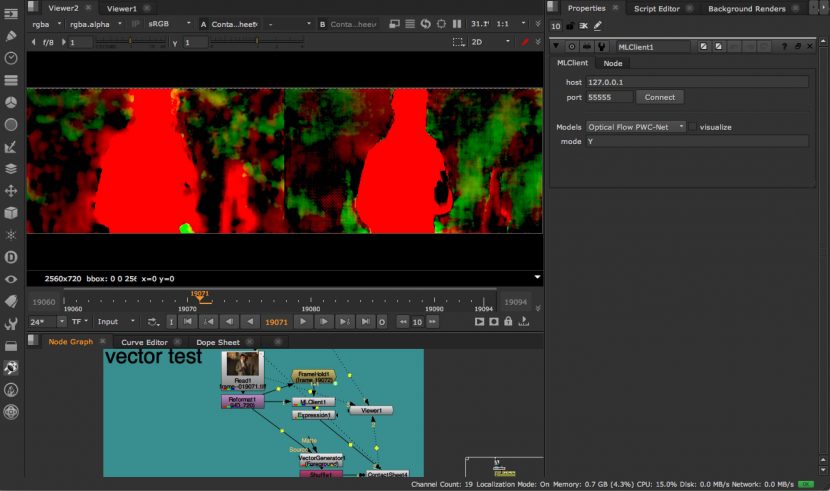

PWC_MotionVectors

Optical flow estimation is a core computer vision problem and has many applications, e.g., action recognition, retiming, and video editing. PWC_MotionVectors is a motion vector ML solution that produces very clean and usable motion vectors. It was made with a compact but effective CNN model for optical flow, called PWC-Net. PWC-Net has been designed according to simple and well-established principles and is an example of an ML solution that might be core to some larger Nuke solution.
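To show why clean motion vectors matter for retiming, here is a minimal sketch that uses per-pixel vectors to approximate an in-between frame. It is a simple backward warp with nearest-neighbour sampling, written for illustration only. It is not how PWC-Net or Nuke's retiming tools work internally, and the data layout (nested lists, one `(dx, dy)` pair per pixel) is an assumption.

```python
def warp_frame(frame, vectors, t):
    """Approximate the frame at fractional time t by backward warping.

    frame:   2D list of pixel values (rows of columns).
    vectors: 2D list of (dx, dy) motion in pixels per frame, one per pixel.
    t:       fraction of a frame to move forward, between 0 and 1.
    """
    height, width = len(frame), len(frame[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            dx, dy = vectors[y][x]
            # Look back along the motion vector and clamp to the image edges.
            src_x = min(max(int(round(x - t * dx)), 0), width - 1)
            src_y = min(max(int(round(y - t * dy)), 0), height - 1)
            row.append(frame[src_y][src_x])
        out.append(row)
    return out


# A single bright pixel moving one pixel to the right per frame.
frame = [[0, 0, 1, 0, 0]]
vectors = [[(1, 0)] * 5]
next_frame = warp_frame(frame, vectors, 1.0)
```

With noisy vectors each pixel would be fetched from the wrong place, which is why the quality of the estimated flow drives the quality of the retime.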

Mask RCNN in Nuke

Mask RCNN is a conceptually simple, flexible, and general framework for object instance segmentation. Their published approach efficiently detects objects in an image while simultaneously generating a high-quality segmentation mask for each instance. The method is shown below in Nuke.
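Because each detected object comes with its own mask, per-instance results can be combined into the kind of matte a compositor would want. The sketch below assumes a hypothetical result layout (a list of dictionaries with `label`, `score` and `mask` keys), which is not the actual Mask RCNN output format, and simply unions the masks of one class.

```python
def class_matte(instances, label, min_score=0.5):
    """Union the masks of all confident instances of one class into a matte."""
    selected = [
        inst["mask"]
        for inst in instances
        if inst["label"] == label and inst["score"] >= min_score
    ]
    if not selected:
        return None  # nothing of that class was detected confidently
    height, width = len(selected[0]), len(selected[0][0])
    # A pixel is in the matte if any selected instance covers it.
    return [
        [max(mask[y][x] for mask in selected) for x in range(width)]
        for y in range(height)
    ]


# Hypothetical detections on a 2x2 image.
instances = [
    {"label": "person", "score": 0.9, "mask": [[1, 0], [0, 0]]},
    {"label": "person", "score": 0.8, "mask": [[0, 0], [0, 1]]},
    {"label": "car", "score": 0.95, "mask": [[0, 1], [0, 0]]},
    {"label": "person", "score": 0.2, "mask": [[0, 0], [1, 0]]},
]
people = class_matte(instances, "person")
```

Keeping the instances separate until this last step is what would let an artist pick or reject individual objects rather than accept one fixed mask.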

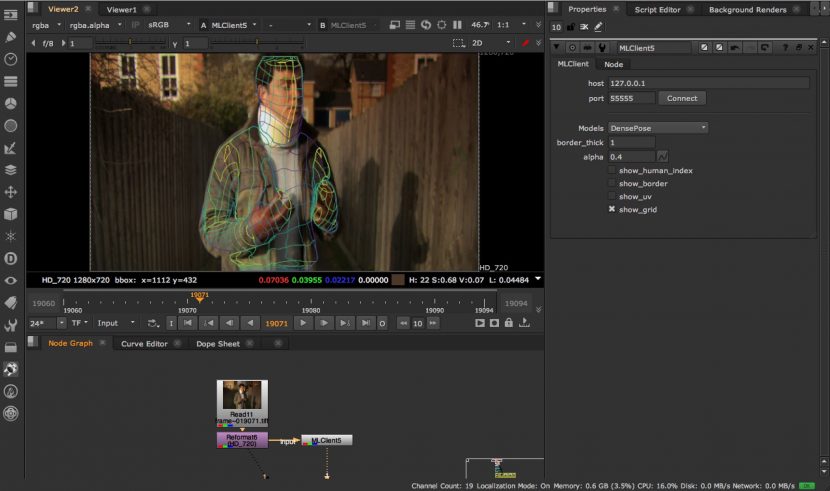

DensePose in Nuke

Given the work in object segmentation, it is a natural step to consider roto. This is the killer app that alone would make a world of difference to visual effects and stereo pipelines. DensePose is a specialist pose estimation tool that is trained on humans and thus is very specific. Dense human pose estimation aims at mapping all human pixels of an RGB image to the 3D surface of the human body.

A possible ML tool is a general purpose, artist-led roto tool. Roto relies on image segmentation and object classification. But the ML-Server approach that the Foundry has been experimenting with allows for artist input. As discussed below, this is critical.

Artist Involvement

The Foundry is quick to not promote ML as an artist replacement, as they point out that post-production is not an automated turn-key process. It often requires hundreds, sometimes thousands, of skilled artists and users to complete a show. In contrast, Machine Learning often aims to solve an entire problem in one go, removing artists from the creative or technical process. For many artists this is a cardinal sin. ML based tools will be widely adopted only if they offer artists the same level of control they are accustomed to. Removing artists from the process also fails to leverage one of the most important things that ML can offer: the ability for an algorithm to learn from its mistakes and correct future errors.

The Foundry’s team of Dan Ring, Johanna Barbier, Guillaume Gales and Ben Kent have approached the problem in two ways. Firstly, they have designed tools that can include sensible user interaction, and secondly they have provided a mechanism for an algorithm to update itself using the knowledge of its talented users.

The team stress that the ML-Server is neither a polished nor a commercial product, but a vehicle for exploration. It is an enabling technology that the Foundry wants to give out to their customers immediately. The great thing is that once ML-Server is set up, a team can create their own model and, within five minutes, see it in Nuke.