The North Sea, by director John Andreas Andersen, is a Norwegian disaster movie set on a North Sea oil drilling platform. After an offshore disaster, a team of researchers, including submarine operator Sofia (Kristine Kujath Thorp), rushes to search for the missing driller and assess the cause of the damage. As rigs are evacuated, the story follows Sofia, whose companion has become trapped in the depths of the sea. Sofia is a young entrepreneur who works with advanced underwater robots. The film is also known as The Burning Sea and will be released in the USA later this month.

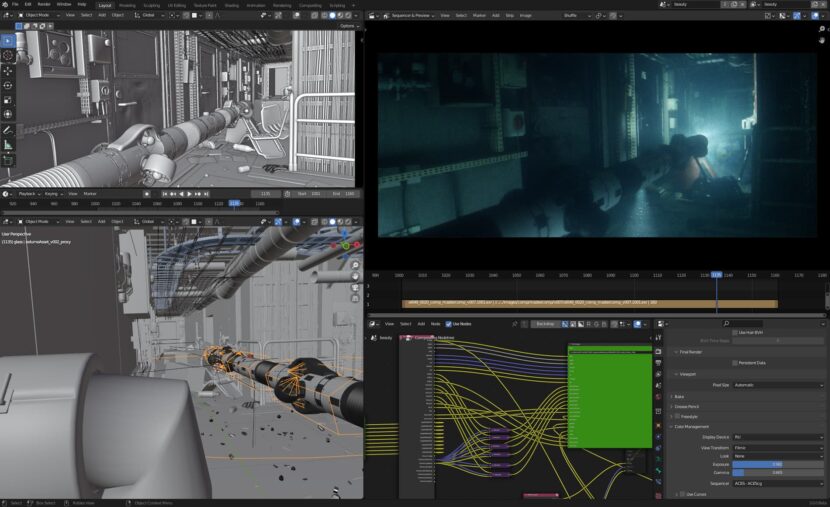

Theodor Flo-Groeneboom headed up a small team at Rebel Unit that created all the CGI underwater scenes, from the sinking lifeboat to the underwater robot searching through the broken platform for survivors. The team decided to shift most of their workflow to open source, specifically Blender, to deliver the 3D for the show. The motivation was not budget but flexibility and productivity. “We definitely value all the other tools that we use,” comments Theodor. “But we wouldn’t use Blender if it wasn’t up to scratch, but it is and it works well in a pipeline.” Over the 8 months since the team shifted its focus to Blender, about 80% of its 3D work was done in Blender, with the remainder requiring Houdini simulation work. “We do use some Houdini because the simulation and physics side of Blender is just not up to the quality of Houdini at this point,” he adds. “But all the modelling, the animation, the lighting, shading, and rendering were all done in Blender.”

The small team finished about 100 shots of full CG VFX for the Norwegian film, “which marked our venture deeper into using more open-source tools in production than previously, after some extensive testing,” explains Theodor. The pipeline is based on Linux, most recently Pop!_OS, which is based on Ubuntu and features the GTK-based COSMIC desktop environment, itself based on GNOME.

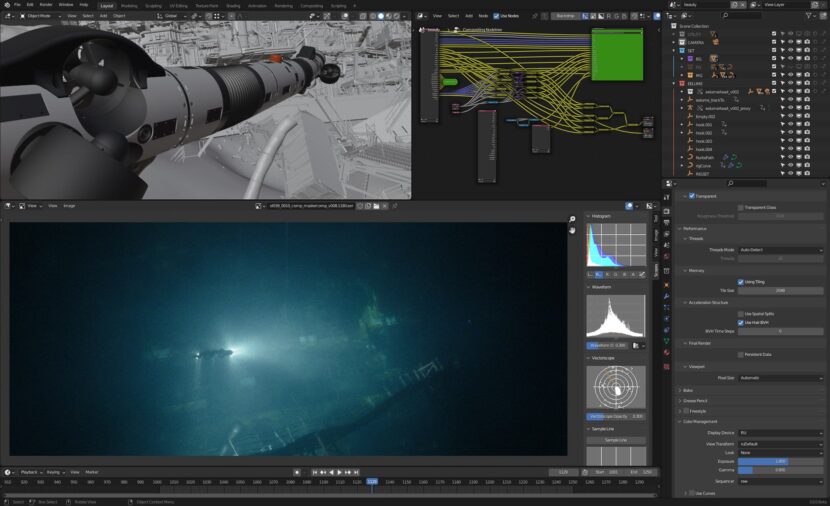

To prove that Blender could handle the scale of work the team knew they needed to do, Rebel Unit did a series of tests, such as the one above. This Blender look dev test was done in a couple of nights, with renders as low as 1 minute a frame at 2K.

As the team was small and flexible, they used the latest versions of Blender, even if these were only beta releases. The attraction of upgrading was the steady stream of new features, such as the newest denoising, point rendering, and Cryptomatte support. Their modest 16-machine HP cluster render farm is all open source, with virtualized render nodes and CGRU software providing Afanasy render farm queue management. “The tech in CGRU is really good. Like it scales incredibly well,” says Theodor. CGRU may not have the prettiest UI, he comments, but he likes that companies such as RISE are using it for managing their renders.

Currently, Theodor is looking at Gaffer from Image Engine, another powerful open-source tool for lighting workflows. Gaffer is a free, open-source, node-based VFX application that enables look developers, lighters, and compositors to easily build, tweak, iterate, and render scenes, with in-application scripting in Python and OSL. While Theodor likes Substance, he “has a sneaking suspicion, looking at the development target of Blender, that the development is heading in that direction.”

The pipeline was to render multiple AOV passes and assemble the final look in Nuke. “We would render indirects and lights as separate passes for all the volumetric lighting and we would get 2K renders without noise rendering in 30 seconds for this film. That’s surprisingly fast,” he explains. Most underwater shots were rendered out to Nuke with about 5 layers of CG, such as volumetrics, key models, and other foreground items, so that the light beams and atmospherics could be balanced artistically in compositing. This was where Blender’s new support for Cryptomatte proved invaluable. Cryptomatte was created at Psyop by Jonah Friedman and Andy Jones. It creates ID mattes for the team, automatically supporting transparency and depth of field, using organizational layering information already available at render time.
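The idea of rendering lights and indirects as separate passes and balancing them in comp can be illustrated with a minimal sketch. It assumes the common additive model, in which the final image is the sum of the light passes, so each pass can be scaled by an artist-chosen gain before summing. The pass names, pixel values, and the `recombine` function are hypothetical illustrations and are not taken from Rebel Unit's Nuke scripts.

```python
from typing import Dict, List, Tuple

Pixel = Tuple[float, float, float]
Image = List[Pixel]  # flattened list of linear RGB pixels


def recombine(passes: Dict[str, Image], gains: Dict[str, float]) -> Image:
    """Sum light passes per pixel, scaling each pass by its gain (default 1.0)."""
    if not passes:
        return []
    sizes = {len(img) for img in passes.values()}
    if len(sizes) != 1:
        raise ValueError("all passes must have the same number of pixels")
    pixel_count = sizes.pop()
    out = [[0.0, 0.0, 0.0] for _ in range(pixel_count)]
    for name, img in passes.items():
        gain = gains.get(name, 1.0)
        for i, (red, green, blue) in enumerate(img):
            out[i][0] += gain * red
            out[i][1] += gain * green
            out[i][2] += gain * blue
    return [(px[0], px[1], px[2]) for px in out]


# Hypothetical two-pixel passes, standing in for full 2K frames.
passes = {
    "key_light": [(0.5, 0.5, 0.5), (0.25, 0.25, 0.25)],
    "indirect": [(0.125, 0.25, 0.5), (0.0, 0.125, 0.25)],
    "volumetric": [(0.0, 0.25, 0.5), (0.0, 0.5, 1.0)],
}

neutral = recombine(passes, {})                    # all gains at 1.0
boosted = recombine(passes, {"volumetric": 2.0})   # brighter light beams only
```

Because the passes stay separate until this step, the volumetric contribution can be pushed or pulled without re-rendering, which is the kind of artistic balancing described above.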

Machine Learning.

In the story, a dead driller is found floating in the collapsed rig. While material was shot for this by the first unit, the creatives decided to replace it with a more believable floating CG corpse. To make the CG person, the team used PIFuHD. This open-source Machine Learning tool produces image-based 3D human shape estimation using deep neural networks. The team used the ML program to extract a mesh model from 2D images of the driller. PIFuHD can output animated meshes, but Theodor joked, “given the floating driller was dead, that wasn’t needed!”

Background and Virtual Production.

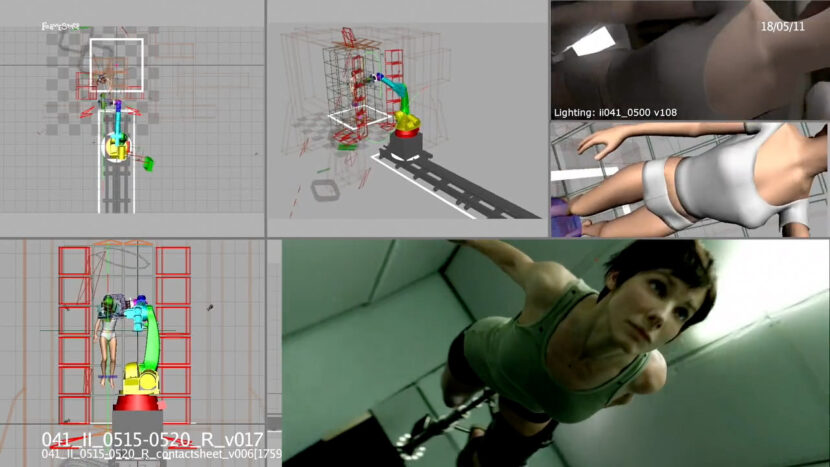

Theodor Flo-Groeneboom’s background is in programming, art, and music. In this capacity, he got involved in 3D for narrative form, and in particular real-time animation. He got involved with the Gravity project when it first started at Framestore, where he was then working in the UK. Theodor was joined by Patrick Lowry, as Environment Technical Director on Gravity. While Theodor’s film credit was sequence lead, both artists worked on set helping adjust and set the lighting in the Gravity cube, which was the precursor to modern LED stage volumes. The pair spent 80 days on set under VFX supervisor Tim Webber, working with the DOP, Emmanuel Lubezki.

This was an era before Unreal Engine (or any game engine) was being used for virtual production. Gravity used TouchDesigner, which Theodor would use again on Gareth Edwards’ Rogue One (2016) with Greig Fraser as DOP. Fxguide reported on this back in 2016: “The advantage of the TouchDesigner user interface was it allowed the lighting to be triggered as needed. For example, the DOP would stand beside someone with a TouchDesigner iPad – who could trigger the lighting changes to the LED when the DOP indicated.” That ‘someone’ from 2016 was Theodor. He used TouchDesigner again on Robert Zemeckis’ Allied (2016). For Allied, VFX Supervisor Kevin Baillie wanted to incorporate a 180-degree wrap-around LED stage displaying principally live-action footage, while still being able to trigger events via TouchDesigner.

After working at several key facilities on a range of major projects, Theodor Flo-Groeneboom co-founded Rebel Unit. Today the company does a range of work, from complex look dev to Machine Learning and complex visual effects for film and TV.