Russian3DScanner

Russian3DScanner (R3DS) is a company developing tools that help create digital characters based on 3D scans. Their Wrap software can automatically fit an existing topology onto a 3D scan of a person. R3DS has now taken this further and recently released a new product called Wrap4D, which is designed for processing 4D sequences of scans.

4D performance capture has garnered a lot of attention over the last couple of years. More and more studios are now looking into it as a source of training data for machine learning and as a way to take the quality of facial animation to a new level.

For a long time, 4D capture has been available as a service provided by just a few vendors. Wrap4D gives an opportunity for a wider range of studios to use this technology. It’s a standalone application that people can install and use in production.

A 4D sequence captured by Infinite-Realities using AEONx Motion Scanning and processed with Wrap4D.

What is Wrapping?

If an artist wants to create a photorealistic digital character based on a real actor, they normally start with a scan. While a 3D scan provides accurate geometry of the face, the scan itself normally consists of millions of triangles and usually contains noise, artifacts, and missing parts. In order to turn a 3D scan into an animated character, one needs to convert it into a low-poly mesh with a user- or animation-friendly topology.

Traditionally this means manually performing a retopology. This option works well for a single character, but if a studio needs to scan 10 characters, they would ideally like to process the various scans in the same topology.

R3DS Wrap provides an automatic solution to this problem. This process is called wrapping. It takes a base mesh with the desired topology and uses an optimization approach to automatically fit the base mesh to the surface of the scan.

How Wrapping Works

Even though wrapping can be completely automatic, an artist can guide the fitting process as and when needed. For any given point on the base mesh, a user can explicitly specify a corresponding target on the surface of the scan.

In practice, artists often need to scan not just one, but dozens of actor expressions. While the wrapping method works well for a neutral expression, processing other expressions requires a different approach. In the case of non-neutral expressions, the method should also take into account the textures. Every vertex on the neutral mesh corresponds to a skin feature or a pore on the actor’s face. To determine the correct position for this vertex on a target expression, one needs to find the exact same skin pore in each scan. Ideally one should repeat this process for each of the thousands of vertices in the mesh, which is virtually impossible to do manually. This process is often called ‘solving correspondence’.

To solve this problem, R3DS implemented its own OpticalFlowWrapping method. It renders the neutral mesh and the target scan from different angles using a set of virtual cameras. For each camera, it finds the optical flow between the images and makes a decision on where to move each pixel. The decisions from all the cameras are then combined into a global solution.
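The article does not describe how the per-camera decisions are merged, so the following is only an illustrative sketch of one simple possibility, not R3DS' method: each virtual camera proposes a 3D displacement for a vertex, and the proposals are averaged with weights that reflect how well the camera sees that part of the surface. The function name `combine_proposals` and the weighting scheme are assumptions made for this example.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

def combine_proposals(proposals: Sequence[Vec3], weights: Sequence[float]) -> Vec3:
    """Weighted average of per-camera displacement proposals for one vertex.

    Hypothetical helper: the weights could encode visibility, e.g. how
    directly the surface faces each virtual camera (0 = not visible).
    """
    if len(proposals) != len(weights):
        raise ValueError("one weight is needed per proposal")
    total = sum(weights)
    if total == 0:
        # No camera sees this vertex, so leave it where it is.
        return (0.0, 0.0, 0.0)
    return tuple(
        sum(w * p[axis] for p, w in zip(proposals, weights)) / total
        for axis in range(3)
    )

# Two cameras disagree; the one with the better view gets more say.
displacement = combine_proposals(
    proposals=[(1.0, 0.0, 0.0), (3.0, 0.0, 0.0)],
    weights=[3.0, 1.0],
)
```

A production solver would more likely combine all vertices in a single optimization with smoothness constraints, but the averaging step shows why disagreeing cameras do not have to break the result.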

After the base mesh is matched to all the expressions with pixel-level accuracy, not only can one blend between the expressions, but also between their textures, producing realistic blood-flow and wrinkle effects. Without this correct matching alignment, the facial skin would seem to smear or drift across the face.
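Because every wrapped expression shares the same vertex count and ordering, blending between two expressions can be as simple as per-vertex linear interpolation. The sketch below illustrates that idea in plain Python; `blend_meshes` and the tiny two-vertex meshes are hypothetical and are not part of Wrap.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]

def blend_meshes(neutral: List[Vertex], expression: List[Vertex], weight: float) -> List[Vertex]:
    """Linearly interpolate vertex positions between two meshes.

    Only meaningful when both meshes share the same topology, so that
    vertex i refers to the same skin point in both of them.
    """
    if len(neutral) != len(expression):
        raise ValueError("meshes must share the same vertex count and ordering")
    return [
        tuple(n + weight * (e - n) for n, e in zip(v_neutral, v_expr))
        for v_neutral, v_expr in zip(neutral, expression)
    ]

# Toy data: two vertices of a "neutral" mesh and of a "smile" mesh.
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
smile = [(0.0, 1.0, 0.0), (1.0, 2.0, 0.0)]
halfway = blend_meshes(neutral, smile, 0.5)
```

The same interpolation can be applied to texture pixels, since meshes with a shared topology also share a UV layout.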

How OpticalFlowWrapping works

The Wrapping and OpticalFlowWrapping tools are currently used by many studios to create digital characters for games and movies. For each scan, they reduce the retopology time from several hours to a few minutes. But Wrap is not limited to only working with 3D scans. For example, it’s often used to fit the desired topology onto a high-res digital sculpt or to quickly apply skin micro detail maps onto a new character.

4D Performance Capture

“After releasing the OpticalFlowWrapping tool, we realised that we could extend this approach to the processing of 4D sequences consisting of thousands of frames. This research later resulted in a new product called Wrap4D”, says Andrey Krovopuskov, CEO of R3DS.

Unlike traditional performance capture methods based on the tracking of markers and contours, 4D capture obtains information about where every millimeter of an actor’s skin moves. One can think of it as high-speed 3D scanning at video-level frame rates. Some methods explicitly compute a 3D scan on every frame, while others get the information about the shape implicitly.

It is worth noting that 4D capture is not a new idea. One of the earliest examples of 4D capture was the Universal Capture System created for The Matrix Reloaded. (UCAP would go on to win a Sci-Tech Oscar in 2015.)

As the quality of digital doubles continues to increase, 4D capture has become widely used in high-end VFX productions. Because of the growing demand for believable digital characters, more studios from the game and cinematic industries are also looking into building 4D capture rigs.

Hardware

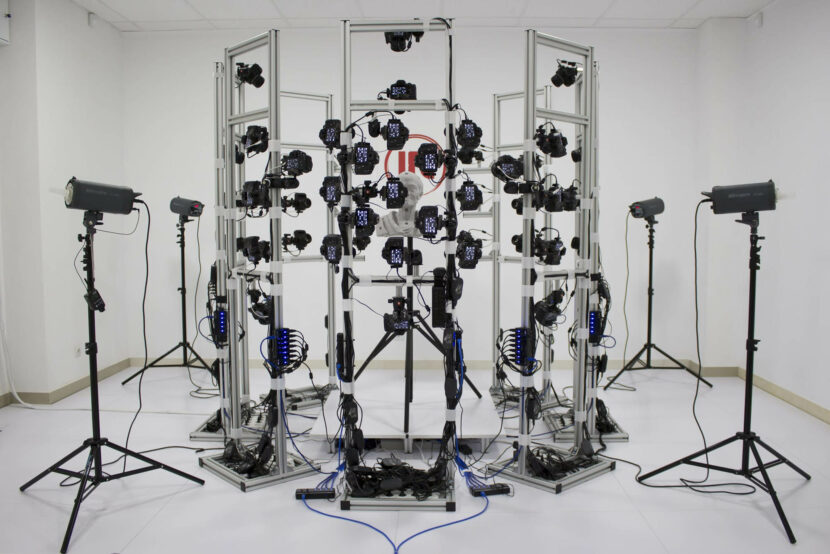

Today the majority of VFX and game studios have access to multi-camera 3D scanning rigs. A traditional photogrammetry rig involves a set of DSLR cameras located around the actor’s head. The cameras are synchronized with a set of flashes or polarised light. The rig simultaneously captures a set of photos of an actor’s face from different angles. The photos are then processed in photogrammetry software to produce a 3D mesh with textures.

4D capture (or videogrammetry) rigs use a similar idea except that they can synchronously capture sequences of images at a high frame rate (usually 30-60 FPS). This little difference leads to big changes in hardware.

The first problem is camera synchronization. Each frame of a sequence should be captured simultaneously by all the cameras. Unfortunately, most DSLR cameras that are used for 3D scanning can’t guarantee such precise synchronization. A common practice is to use machine-vision cameras. These small devices are specifically designed for computer vision tasks and can stream synchronized image sequences, usually in an uncompressed format. Recording high-frame-rate image streams from dozens of cameras requires very fast drives and large storage capacities.

The second problem is lighting. To produce high-quality scans, it is key to minimize motion blur and maximize depth of field. This can be done by using a shorter exposure time and a smaller aperture, but it also means that less light reaches the camera sensor. In practice, exposure times of around 1-2 ms require extremely bright lighting. With constant light sources, the light can be so bright that it becomes very uncomfortable and has a negative effect on the actor’s performance. A common solution to this problem is to use strobe lights. The strobe light sources are synchronized with the camera shutters and only emit light when the shutter is open. For example, if one captures 1 second of animation at 25 FPS with 1 ms exposure, the strobe lighting only needs to emit for 25 ms compared to 1000 ms for the constant lighting. This means that 40 times less light is required. However, strobe lighting comes at a cost. It can only be used efficiently with global shutter camera sensors, and these are more expensive than rolling shutter sensors.
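The strobe arithmetic above can be checked with a few lines of Python. This is just a worked calculation; the function names are made up for the example.

```python
def strobe_on_time_ms(fps: float, exposure_ms: float, duration_s: float = 1.0) -> float:
    """Total time the strobes are lit while capturing `duration_s` seconds."""
    return fps * exposure_ms * duration_s

def reduction_factor(fps: float, exposure_ms: float) -> float:
    """How many times less emission time strobes need than constant light."""
    constant_on_time_ms = 1000.0  # constant light stays on for the full second
    return constant_on_time_ms / strobe_on_time_ms(fps, exposure_ms)

on_time = strobe_on_time_ms(fps=25, exposure_ms=1.0)   # 25 flashes of 1 ms each
factor = reduction_factor(fps=25, exposure_ms=1.0)     # 1000 ms / 25 ms
```

With these inputs `on_time` is 25 ms and `factor` is 40, matching the figures in the text. The saving shrinks as the frame rate or exposure time goes up: at 60 FPS with the same 1 ms exposure, the factor drops to roughly 16.7.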

During R3DS’ work on Wrap4D, “we used our custom 4D capture rig based on 18 machine vision cameras capturing 3 MP images at 60 FPS. Soon after the first tests, we switched to far superior data provided to us by our friends from Infinite-Realities,” commented Krovopuskov.
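To get a feel for why such rigs need fast drives, here is a rough estimate of the raw data rate of the 18-camera test rig mentioned above. The camera count, resolution, and frame rate come from the quote; the 1 byte per pixel figure (8-bit raw sensor data, no compression) is an assumption made for this example, and real cameras may use a different bit depth.

```python
def raw_throughput_bytes_per_s(
    cameras: int,
    megapixels: float,
    fps: float,
    bytes_per_pixel: float = 1.0,  # assumption: 8-bit raw, uncompressed
) -> float:
    """Uncompressed data rate produced by all cameras together."""
    return cameras * megapixels * 1_000_000 * fps * bytes_per_pixel

rate = raw_throughput_bytes_per_s(cameras=18, megapixels=3, fps=60)
gb_per_second = rate / 1e9
gb_per_minute = gb_per_second * 60
```

Under that assumption the rig produces about 3.24 GB every second, or roughly 194 GB per minute of capture, before any additional bit depth or metadata is taken into account.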

Infinite-Realities is a UK-based scanning company that created an outstanding 4D capture rig consisting of 50+ cameras and using the Darkstar lighting system from IDA-Tronic. Their rig generates more than 5 TB of data every minute and provides 3D scans of extremely high quality. Lee Perry-Smith, the co-founder of the company, was excited about R3DS’ early results and provided a sample 4D sequence that is packaged with Wrap4D, so that new users can experiment and dive in immediately.

Wrap4D

Even though 4D capture rigs are more expensive and harder to build, more and more studios are creating such rigs to meet their needs.

“The next question after building a 4D capture rig is how to process the captured data. There are only a few players on the market that provide 4D capture and processing, and they mainly provide this as a service. Today, I see more customers looking into 4D capture as a source of training data for machine-learning algorithms or as a way to get an unlimited number of intermediate blendshapes for rigging. It’s important that they can generate as much data as they need without having to pay for every second of processing. That is why we decided to create Wrap4D”, says Krovopuskov.

As expected, wrapping a 4D sequence is quite different from wrapping a single expression. When working with a single expression one can manually specify point correspondences and clean up the mesh if something goes wrong. With 4D processing, one has thousands of frames and so performing a manual cleanup can become extremely time-consuming. The goal of the team at R3DS was to create a method that provides clean results with no need for manual editing.

“Wrap4D takes a sequence of scans as the input and produces a sequence of meshes with the desired topology as the output,” Krovopuskov adds. “Unlike other approaches that use frame-by-frame tracking, we process every frame independently, which allows us to compute all the frames in parallel. It provides great scalability as we can now process a single sequence on multiple machines.”

A character created by Infinite-Realities using a 4D-captured performance and Wrap4D.

The independent processing of each frame prevents error accumulation from one frame to another. You can quickly preview the result with any frame of the sequence without having to compute all the preceding frames.
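The practical consequence of per-frame independence can be sketched in a few lines of Python. The code below is not Wrap4D's API: `fit_frame` is a hypothetical placeholder that stands in for fitting the base mesh to one scan, and the thread pool stands in for whatever pool of workers or machines a studio actually uses.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Iterable, List

def fit_frame(frame_index: int) -> str:
    """Hypothetical stand-in for fitting the base mesh to a single scan.

    It depends only on its own frame, never on the result of a neighbor.
    """
    return f"mesh_{frame_index:04d}"

def process_sequence(frame_indices: Iterable[int], max_workers: int = 4) -> List[str]:
    """Fit all frames concurrently; results come back in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fit_frame, frame_indices))

# Process a short sequence in parallel...
meshes = process_sequence(range(8))
# ...or preview an arbitrary frame without touching the ones before it.
preview = fit_frame(500)
```

A frame-by-frame tracker could not be structured this way, because frame N would need the result of frame N-1 before it could start. For real CPU-heavy fitting, separate processes or machines would replace the threads used here.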

On the other hand, fitting frames independently is harder than tracking them frame-by-frame because the difference between the current frame and the neutral mesh is much bigger than the difference between neighboring frames.

In order to make this method controllable and robust, R3DS uses 3 additional sources of data to assist the algorithm:

- Marker tracking. Markers help to handle very extreme facial expressions where the optical flow can fail due to blood-flow and wrinkle effects.

- Lip and eyelid detection. You can train a personalized contour detector by providing it with a set of training frames. The detected contours help the algorithm to deal with noisy eyelashes and inner parts of the lips.

- Reference blendshapes. They are a set of meshes that help the algorithm to understand how different parts of the base mesh can be deformed. You can use a personalized set of FACS of the actor or just a small set of generic blendshapes.

“The tracking steps are performed in our new software called R3DS Track. The idea is to bring all the labor-intensive work into 2D space so that you don’t need to clean up meshes in 3D, which requires much more experience and time. The tracked data is then passed to Wrap4D and the rest of the process is completely automatic,” Krovopuskov explains. “We tried to make Wrap4D flexible and open-ended.” The process can work with different input data, whether one captures 360-degree scans or ear-to-ear scans. It can also be easily adjusted for different input data quality, for instance, whether the scans are captured with a static array of dozens of cameras or with just a few cameras on a helmet.

“I was overjoyed after I sent the early beta of Wrap4D to Infinite-Realities. The guys saw the software for the first time and came back to me two days later with a completely processed sequence,” he adds.

An example of a 4D sequence captured by Infinite-Realities and processed with Wrap4D.

The Heretic

Even before the official release of Wrap4D it was used in a number of productions. One of the first projects was the real-time short film The Heretic, created by Unity’s Demo Team in 2019.

In this project, the Unity team decided to use 4D facial capture for the first time. All the sequences were captured by Infinite-Realities and processed by R3DS. The movie contains 8 shots with 4D-captured performances. The R3DS team was in charge of mesh tracking, head stabilization, noise filtering, and refinement of eyelids and lips. The last two shots consisted of more than 1000 frames and were processed by R3DS in just one day.

The resulting mesh was further refined by the Unity team. Shading, grooming, and micro details were then added. A Snappers rig was used to art-direct the facial expressions on top of the 4D performances where needed. Unity released the character asset from the film, so it can be explored in detail and used as a reference by Unity artists.

“We really enjoyed working on this project and it gave us many ideas that were later transformed into tools for Wrap4D”, said Krovopuskov.

Gawain, the main character from The Heretic short

What’s next?

“I hope that Wrap4D will help more studios to bring the facial animations in their games, movies, and cinematics to a level of quality comparable to Hollywood blockbusters. It’s amazing to see how within a couple of days you can produce an extremely complex facial animation without being a rigger or an animator. I’m very curious how far we can go using 4D data to improve head-mounted camera capture methods and facial rigging”, concludes Krovopuskov.

Note: The R3DS team has a virtual booth at SIGGRAPH 2020.